
The convergence hypothesis

April 6, 2020

Are poor countries catching up with rich countries?

Tyler Smith

The economies of today’s wealthiest nations raced ahead of the rest of the world about two centuries ago. That event initiated a new era in economic history, one defined by growth.

More recently, countries like Japan seem to have successfully copied this playbook, and it appears others like China are following suit. But unfortunately those countries are the exception rather than the rule, according to a paper in the Journal of Economic Literature.

Authors Paul Johnson and Chris Papageorgiou found there’s little evidence that national economies are catching up to their richest peers. Most low-income countries haven’t been able to sustain the growth spurts they’ve had, as traditional economic theory predicts they would.

“The consensus that we find in the literature leads us to believe that poor countries, unless something changes, are destined to stay poor,” Johnson told the AEA in an interview.

In fact, slowdowns in the poorest countries have left millions in extreme poverty. Understanding which countries are catching up and how they’re doing so could help explain the elusive origins of economic growth.


The debate over catch-up growth, what economists have dubbed the convergence hypothesis, has a long history. The authors choose to focus on research published over the last 30 years.

In this recent research, capital, technology, and productivity have been at the root of most understandings of economic growth and convergence. That view has traditionally led economists to conclude that however poor a country starts off, it will adopt the best practices of the rich countries and eventually be just as well-off as its forerunners.

That’s the theory. But when the authors looked at the numbers over the last 60 years that’s not what they saw.

Data from the Penn World Tables, which cover 182 countries, revealed an unprecedented level of global growth over the period, but it was spread unevenly across the globe and across income levels.

The researchers separated countries into three income levels: low, middle, and high.

Each decade, high-income countries tended to grow faster than middle-income countries, which in turn tended to grow faster than low-income countries.

Every group experienced periods of relatively slow growth. But low-income countries actually experienced negative average growth rates during the 80s and 90s. The contractions were mostly driven by periods of extreme violence, corruption, and other state dysfunctions.

This unequal growth has led to steadily more and more dispersed national incomes around the world—the opposite of what a strong version of the convergence hypothesis predicts.

But while there wasn’t any absolute convergence, the researchers did find that the literature supported the idea of “convergence clubs.” In other words, countries that started with a similar income level in 1960 still had a similar income level in 2010, the end of the dataset.

The convergence clubs might be a clue for national leaders. According to Johnson, it suggests that policy interventions need to be bold enough to reach the next rung in the income ladder, or they risk slow, start-and-stop growth.

It also might help make sense of why some countries jump out of low-income clubs and eventually join the richest one, while other countries are stuck in poverty or middle-income traps.

Still other types of convergence are possible. Previous work has found that within some industries, such as manufacturing, convergence is happening. Countries may need to organize their workforces around these sectors to jumpstart growth.

As the authors point out, even half a century is short compared to the long run. The limited data span may mean that pessimism isn’t ultimately warranted, but the authors’ work warns against being complacent.

“There have been signs of a little catch-up over the last few years . . . but we don't know if that will continue,” Johnson said.

“What Remains of Cross-Country Convergence?” appears in the March issue of the Journal of Economic Literature.



The convergence hypothesis: History, theory, and evidence

1998, Open economies review

Related Papers

Applied Economics Letters

Gerry Boyle

SSRN Electronic Journal

Shlomo Yitzhaki

Sandra Quijada

Economics Bulletin

This paper takes a closer look at the typical growth convergence regression of Barro (1991), Mankiw, Romer and Weil (1992), Sala-i-Martin (1996), and others. By interpreting the two components of the regression coefficient separately, i.e. the correlation coefficient and the ratio of standard deviations, we distinguish between "time-series" convergence and "cross-section" convergence, and consequently the relationship between β- and σ-convergence. And, using data from the latest Penn World Table database (version 9.1), we investigate the convergence or the lack-of-convergence in samples of countries representing the “World”, OECD and Sub-Saharan Africa. The implications of this study for the neoclassical growth model are also discussed.
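The decomposition this abstract mentions rests on a general identity: in any bivariate least-squares regression, the slope equals the correlation coefficient times the ratio of standard deviations. The sketch below checks that identity numerically. The data are hypothetical toy numbers, and the choice of variables (growth regressed on log initial income) is an assumption for illustration, not the paper's actual specification or results.

```python
import math

def mean(values):
    return sum(values) / len(values)

def ols_slope(x, y):
    """Slope of the least-squares line of y on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def corr_and_sd_ratio(x, y):
    """Return (correlation of x and y, sd(y) / sd(x))."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy), math.sqrt(syy / sxx)

# Hypothetical toy data (NOT from the Penn World Table):
# log of initial income per capita and subsequent average annual growth.
log_income_start = [6.5, 7.0, 7.8, 8.4, 9.1, 9.9]
growth = [0.031, 0.012, 0.035, 0.018, 0.024, 0.020]

slope = ols_slope(log_income_start, growth)
r, sd_ratio = corr_and_sd_ratio(log_income_start, growth)

print(f"slope             = {slope:.6f}")
print(f"corr * sd ratio   = {r * sd_ratio:.6f}")
```

A negative slope in such a regression is what the literature calls β-convergence; the identity shows that its size depends both on how strongly the two variables are correlated and on how dispersed each one is.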

Angel De la Fuente

Jeffrey Sachs

KALSOOM ZULFIQAR

The convergence debate has been an important topic in the economic growth literature. This article investigates convergence at various levels of disaggregation among a sample of almost 60 countries. It tests the absolute and conditional convergence hypotheses for a set of developed and developing countries by applying the pooled least squares methodology. The results suggest absolute convergence for countries having similar characteristics and conditional convergence for countries having heterogeneous structures. The disparity level for each country is also calculated with reference to average steady-state income. The study also examines the role of investment, openness, and population growth in accelerating the convergence process.

Annali d’Italia, scientific journal of Italy, №13, VOLUME 1, Florence, Italy

Karen Grigoryan

This paper analyzes the nature, causes, and consequences of income convergence in developing countries, and their attempts to catch up with developed countries. It also addresses the question of whether convergence is sustainable in the near future. In the context of income convergence, the relevance of productivity and welfare growth as indicators of convergence is discussed. The paper examines the catch-up growth and convergence approaches using the example of Armenia as a developing economy.

Robert Waldmann

Journal of Yaşar University

Burak Camurdan

In this paper, we study GDP per capita convergence in emerging market economies for the period 1950-2008. Because convergence in emerging market economies has received less attention in the literature than convergence in developed countries, this investigation attempts to establish the state of convergence in these economies. For this purpose, GDP per capita convergence in 25 developing countries was tested with the ADF unit root test, the Nahar and Inder (2002) test, and the Kapetanios, Snell, and Shin (KSS) (2003) test, which is based on a non-linear time series technique. While the ADF unit root test allows one to infer convergence when the series used are stationary, the Nahar and Inder (2002) test asserts that convergence may also exist when the series used are not stationary. On the other hand, the Kapetanios, Snell, and Shin (KSS) (2003) test reveals that, if non-linear time series are stationary, the convergence could be in...


1 Convergence Theory

- Published: January 2002


This chapter discusses evidence for convergence theory, the idea that as rich countries got richer, they developed similar economic, political, and social structures and to some extent common values and beliefs. The analysis reveals that high levels of economic development and related changes in the occupational and industrial composition of the labor force result in two areas of convergence: the gradual spread of nonstandard schedules of work and the substantial and rapid growth of contingent labor in both manufacturing and service. Total annual hours of work declined from the late nineteenth century until about 1960, a trend that has continued in all rich democracies ever since.


20.4 Economic Convergence

Learning objectives.

By the end of this section, you will be able to:

- Explain economic convergence

- Analyze various arguments for and against economic convergence

- Evaluate the speed of economic convergence between high-income countries and the rest of the world

Some low-income and middle-income economies around the world have shown a pattern of convergence, in which their economies grow faster than those of high-income countries. GDP increased by an average rate of 2.7% per year in the 1990s and 1.7% per year from 2010 to 2019 in the high-income countries of the world, which include the United States, Canada, the European Union countries, Japan, Australia, and New Zealand.

Table 20.5 lists eight countries that belong to an informal “fast growth club.” These countries averaged GDP growth (after adjusting for inflation) of at least 5% per year in both the time periods from 1990 to 2000 and from 2010 to 2019. Since economic growth in these countries has exceeded the average of the world’s high-income economies, these countries may converge with the high-income countries. The second part of Table 20.5 lists the “slow growth club,” which consists of countries that averaged GDP growth of 2% per year or less (after adjusting for inflation) during the same time periods. The final portion of Table 20.5 shows GDP growth rates for the countries of the world divided by income. (Note that there is no data for 2001–2009 because of the Great Recession, which lasted from 2007 to 2009. Many countries’ GDP shrank during these years.)

Each of the countries in Table 20.5 has its own unique story of investments in human and physical capital, technological gains, market forces, government policies, and even lucky events, but an overall pattern of convergence is clear. The low-income countries have GDP growth that is faster than that of the middle-income countries, which in turn have GDP growth that is faster than that of the high-income countries. Two prominent members of the fast-growth club are China and India, which between them have nearly 40% of the world’s population. Some prominent members of the slow-growth club are high-income countries like France, Germany, Italy, and Japan.

Will this pattern of economic convergence persist into the future? This is a controversial question among economists that we will consider by looking at some of the main arguments on both sides.

Arguments Favoring Convergence

Several arguments suggest that low-income countries might have an advantage in achieving greater worker productivity and economic growth in the future.

A first argument is based on diminishing marginal returns. Even though deepening human and physical capital will tend to increase GDP per capita, the law of diminishing returns suggests that as an economy continues to increase its human and physical capital, the marginal gains to economic growth will diminish. For example, raising the average education level of the population by two years from a tenth-grade level to a high school diploma (while holding all other inputs constant) would produce a certain increase in output. An additional two-year increase, so that the average person had a two-year college degree, would increase output further, but the marginal gain would be smaller. Yet another additional two-year increase in the level of education, so that the average person would have a four-year-college bachelor’s degree, would increase output still further, but the marginal increase would again be smaller. A similar lesson holds for physical capital. If the quantity of physical capital available to the average worker increases from, say, $5,000 to $10,000 (again, while holding all other inputs constant), it will increase the level of output. An additional increase from $10,000 to $15,000 will increase output further, but the marginal increase will be smaller.

Low-income countries like China and India tend to have lower levels of human capital and physical capital, so an investment in capital deepening should have a larger marginal effect in these countries than in high-income countries, where levels of human and physical capital are already relatively high. Diminishing returns implies that low-income economies could converge to the levels that the high-income countries achieve.
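The diminishing-returns argument can be illustrated with a small numerical sketch. The text does not specify a functional form, so the code below assumes a simple concave production function, output = capital ** ALPHA with ALPHA = 1/3; both the form and the exponent are illustrative assumptions. Only the $5,000, $10,000, and $15,000 capital levels come from the example above.

```python
ALPHA = 1 / 3  # assumed exponent; any value between 0 and 1 gives diminishing returns

def output_per_worker(capital_per_worker):
    """Per-worker output under the assumed form y = k ** ALPHA,
    holding technology and all other inputs constant."""
    return capital_per_worker ** ALPHA

# Capital levels taken from the textbook example.
levels = [5_000, 10_000, 15_000]
outputs = [output_per_worker(k) for k in levels]

# Marginal gain from each successive $5,000 of capital per worker.
first_gain = outputs[1] - outputs[0]
second_gain = outputs[2] - outputs[1]

print(f"Gain from $5,000 -> $10,000:  {first_gain:.2f} units of output")
print(f"Gain from $10,000 -> $15,000: {second_gain:.2f} units of output")
```

Both steps add the same $5,000 of capital, yet the second step adds less output than the first. A country starting from a lower capital level sits on the steeper part of the curve, which is the basis of the convergence argument.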

A second argument is that low-income countries may find it easier to improve their technologies than high-income countries. High-income countries must continually invent new technologies, whereas low-income countries can often find ways of applying technology that has already been invented and is well understood. The economist Alexander Gerschenkron (1904–1978) gave this phenomenon a memorable name: “the advantages of backwardness.” Of course, he did not literally mean that it is an advantage to have a lower standard of living. He was pointing out that a country that is behind has some extra potential for catching up.

Finally, optimists argue that many countries have observed the experience of those that have grown more quickly and have learned from it. Moreover, once the people of a country begin to enjoy the benefits of a higher standard of living, they may be more likely to build and support the market-friendly institutions that will help provide this standard of living.


Arguments That Convergence Is Neither Inevitable nor Likely

If the economy's growth depended only on the deepening of human capital and physical capital, then we would expect that economy's growth rate to slow down over the long run because of diminishing marginal returns. However, there is another crucial factor in the aggregate production function: technology.

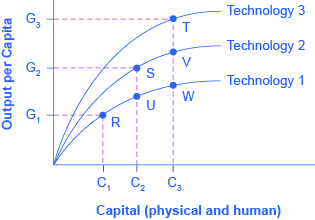

Developing new technology can provide a way for an economy to sidestep the diminishing marginal returns of capital deepening. Figure 20.7 shows how. The figure's horizontal axis measures the amount of capital deepening, which on this figure is an overall measure that includes deepening of both physical and human capital. The amount of human and physical capital per worker increases as you move from left to right, from C1 to C2 to C3. The diagram's vertical axis measures per capita output. Start by considering the lowest line in this diagram, labeled Technology 1. Along this aggregate production function, the level of technology is held constant, so the line shows only the relationship between capital deepening and output. As capital deepens from C1 to C2 to C3 and the economy moves from R to U to W, per capita output does increase, but the way in which the line starts out steeper on the left but then flattens as it moves to the right shows the diminishing marginal returns, as additional marginal amounts of capital deepening increase output by ever-smaller amounts. The shape of the aggregate production line (Technology 1) shows that the ability of capital deepening, by itself, to generate sustained economic growth is limited, since diminishing returns will eventually set in.

Now, bring improvements in technology into the picture. Improved technology means that with a given set of inputs, more output is possible. The production function labeled Technology 1 in the figure is based on one level of technology, but Technology 2 is based on an improved level of technology, so for every level of capital deepening on the horizontal axis, it produces a higher level of output on the vertical axis. In turn, production function Technology 3 represents a still higher level of technology, so that for every level of inputs on the horizontal axis, it produces a higher level of output on the vertical axis than either of the other two aggregate production functions.

Most healthy, growing economies are deepening their human and physical capital and increasing technology at the same time. As a result, the economy can move from a choice like point R on the Technology 1 aggregate production line to a point like S on Technology 2 and a point like T on the still higher aggregate production line (Technology 3). With the combination of technology and capital deepening, the rise in GDP per capita in high-income countries does not need to fade away because of diminishing returns. The gains from technology can offset the diminishing returns involved with capital deepening.
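The two paths through the figure can be compared numerically. This is a minimal sketch under stated assumptions: the production function y = A * k ** ALPHA, the exponent, the capital levels standing in for C1 to C3, and the technology multipliers standing in for Technology 1 to 3 are all hypothetical values chosen for illustration, not figures from the text.

```python
ALPHA = 1 / 3  # assumed exponent; not given in the text

def output_per_worker(capital, technology):
    """Per capita output under the assumed form y = A * k ** ALPHA."""
    return technology * capital ** ALPHA

# Hypothetical capital-per-worker levels standing in for C1, C2, C3.
C1, C2, C3 = 5_000, 10_000, 15_000
# Hypothetical technology levels standing in for Technology 1, 2, 3.
A1, A2, A3 = 1.0, 1.3, 1.6

# Capital deepening alone: R -> U -> W along the Technology 1 line.
R = output_per_worker(C1, A1)
U = output_per_worker(C2, A1)
W = output_per_worker(C3, A1)

# Capital deepening combined with improving technology: R -> S -> T.
S = output_per_worker(C2, A2)
T = output_per_worker(C3, A3)

print(f"Technology fixed:    gains {U - R:.2f}, then {W - U:.2f}")
print(f"Technology improves: gains {S - R:.2f}, then {T - S:.2f}")
```

With technology held fixed, the second gain is smaller than the first. When technology improves alongside capital, the gains need not shrink, which is the sense in which technology can offset diminishing returns. How large the offset is depends entirely on the assumed multipliers.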

Will technological improvements themselves run into diminishing returns over time? That is, will it become continually harder and more costly to discover new technological improvements? Perhaps someday, but, at least over the last two centuries since the beginning of the Industrial Revolution, improvements in technology have not run into diminishing marginal returns. Modern inventions, like the internet or discoveries in genetics or materials science, do not seem to provide smaller gains to output than earlier inventions like the steam engine or the railroad. One reason that technological ideas do not seem to run into diminishing returns is that we often can apply widely the ideas of new technology at a marginal cost that is very low or even zero. An additional machine, or an additional year of education, benefits only the specific worker or group of workers who use it. By contrast, many workers across the economy can use a new technology or invention at very low marginal cost.

The argument that it is easier for a low-income country to copy and adapt existing technology than it is for a high-income country to invent new technology is not necessarily true, either. When it comes to adapting and using new technology, a society’s performance is not necessarily guaranteed, but is the result of whether the country's economic, educational, and public policy institutions are supportive. In theory, perhaps, low-income countries have many opportunities to copy and adapt technology, but if they lack the appropriate supportive economic infrastructure and institutions, the theoretical possibility that backwardness might have certain advantages is of little practical relevance.


The Slowness of Convergence

Although economic convergence between the high-income countries and the rest of the world seems possible and even likely, it will proceed slowly. Consider, for example, a country that starts off with a GDP per capita of $40,000, which would roughly represent a typical high-income country today, and another country that starts out at $4,000, which is roughly the level in low-income but not impoverished countries like Indonesia, Guatemala, or Egypt. Say that the rich country chugs along at a 2% annual growth rate of GDP per capita, while the poorer country grows at the aggressive rate of 7% per year. After 30 years, GDP per capita in the rich country will be $72,450 (that is, $40,000 × (1 + 0.02)^30) while in the poor country it will be $30,450 (that is, $4,000 × (1 + 0.07)^30). Convergence has occurred. The rich country used to be 10 times as wealthy as the poor one, and now it is only about 2.4 times as wealthy. Even after 30 consecutive years of very rapid growth, however, people in the low-income country are still likely to feel quite poor compared to people in the rich country. Moreover, as the poor country catches up, its opportunities for catch-up growth are reduced, and its growth rate may slow down somewhat.
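The compound-growth arithmetic in this example can be reproduced with a short sketch. The starting incomes, growth rates, and 30-year horizon come from the text. The function names are hypothetical, and the catch-up calculation is an added illustration that assumes both growth rates stay constant indefinitely, which the text itself says is unlikely.

```python
import math

def compound(initial, rate, years):
    """GDP per capita after `years` of growth at a constant annual `rate`."""
    return initial * (1 + rate) ** years

def years_to_catch_up(rich, poor, rich_rate, poor_rate):
    """Years until the two incomes are equal, ASSUMING both growth rates
    stay constant (an illustrative simplification, not a forecast)."""
    return math.log(rich / poor) / math.log((1 + poor_rate) / (1 + rich_rate))

# Figures from the textbook example.
rich_30 = compound(40_000, 0.02, 30)
poor_30 = compound(4_000, 0.07, 30)
ratio_30 = rich_30 / poor_30

catch_up_years = years_to_catch_up(40_000, 4_000, 0.02, 0.07)

print(f"Rich country after 30 years: ${rich_30:,.0f}")
print(f"Poor country after 30 years: ${poor_30:,.0f}")
print(f"Income ratio after 30 years: {ratio_30:.2f}")
print(f"Years to full catch-up at constant rates: {catch_up_years:.1f}")
```

Under these constant rates, full catch-up would take roughly 48 years, even with a five-percentage-point growth advantage sustained every single year. If the poorer country's growth slows as it approaches the frontier, the wait is longer.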

The slowness of convergence illustrates again that small differences in annual rates of economic growth become huge differences over time. The high-income countries have been building up their advantage in standard of living over decades—more than a century in some cases. Even in an optimistic scenario, it will take decades for the low-income countries of the world to catch up significantly.

Bring It Home

Calories and economic growth.

We can tell the story of modern economic growth by looking at calorie consumption over time. The dramatic rise in incomes allowed the average person to eat better and consume more calories. How did these incomes increase? The neoclassical growth consensus uses the aggregate production function to suggest that the period of modern economic growth came about because of increases in inputs such as technology and physical and human capital. Also important was the way in which technological progress combined with physical and human capital deepening to create growth and convergence. The issue of distribution of income notwithstanding, it is clear that the average worker can afford more calories in 2020 than in 1875.

Aside from increases in income, there is another reason why the average person can afford more food. Modern agriculture has allowed many countries to produce more food than they need. Despite having more than enough food, however, many governments and multilateral agencies have not solved the food distribution problem. In fact, food shortages, famine, or general food insecurity are caused more often by the failure of government macroeconomic policy, according to the Nobel Prize-winning economist Amartya Sen. Sen has conducted extensive research into issues of inequality, poverty, and the role of government in improving standards of living. Macroeconomic policies that strive toward stable inflation, full employment, education of women, and preservation of property rights are more likely to eliminate starvation and provide for a more even distribution of food.

Because we have more food per capita, global food prices have decreased since 1875. The prices of some foods, however, have decreased more than the prices of others. For example, researchers from the University of Washington have shown that in the United States, calories from zucchini and lettuce are 100 times more expensive than calories from oil, butter, and sugar. Research from countries like India, China, and the United States suggests that as incomes rise, individuals want more calories from fats and protein and fewer from carbohydrates. This has very interesting implications for global food production, obesity, and environmental consequences. Affluent urban India has an obesity problem much like many parts of the United States. The forces of convergence are at work.


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/principles-economics-3e/pages/1-introduction

- Authors: Steven A. Greenlaw, David Shapiro, Daniel MacDonald

- Publisher/website: OpenStax

- Book title: Principles of Economics 3e

- Publication date: Dec 14, 2022

- Location: Houston, Texas

- Book URL: https://openstax.org/books/principles-economics-3e/pages/1-introduction

- Section URL: https://openstax.org/books/principles-economics-3e/pages/20-4-economic-convergence

© Jan 23, 2024 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


convergence hypothesis

Quick reference.

The theory that socialism and capitalism will, over time, become increasingly alike in economic and social terms. Convergence theory was popular in the 1950s and 1960s, when Raymond Aron, John ...

From: convergence hypothesis in Dictionary of the Social Sciences »

Subjects: Social sciences


PRINTED FROM OXFORD REFERENCE (www.oxfordreference.com). (c) Copyright Oxford University Press, 2023. All Rights Reserved. Under the terms of the licence agreement, an individual user may print out a PDF of a single entry from a reference work in OR for personal use (for details see Privacy Policy and Legal Notice ).

date: 14 May 2024


Chapter 20. Economic Growth

20.4 Economic Convergence

Learning Objectives

- Explain economic convergence

- Analyze various arguments for and against economic convergence

- Evaluate the speed of economic convergence between high-income countries and the rest of the world

Some low-income and middle-income economies around the world have shown a pattern of convergence , in which their economies grow faster than those of high-income countries. GDP increased by an average rate of 2.7% per year in the 1990s and 2.3% per year from 2000 to 2008 in the high-income countries of the world, which include the United States, Canada, the countries of the European Union, Japan, Australia, and New Zealand.

Table 5 lists 10 countries of the world that belong to an informal “fast growth club.” These countries averaged GDP growth (after adjusting for inflation) of at least 5% per year in both the time periods from 1990 to 2000 and from 2000 to 2008. Since economic growth in these countries has exceeded the average of the world’s high-income economies, these countries may converge with the high-income countries. The second part of Table 5 lists the “slow growth club,” which consists of countries that averaged GDP growth of 2% per year or less (after adjusting for inflation) during the same time periods. The final portion of Table 5 shows GDP growth rates for the countries of the world divided by income.

Each of the countries in Table 5 has its own unique story of investments in human and physical capital, technological gains, market forces, government policies, and even lucky events, but an overall pattern of convergence is clear. The low-income countries have GDP growth that is faster than that of the middle-income countries, which in turn have GDP growth that is faster than that of the high-income countries. Two prominent members of the fast-growth club are China and India, which between them have nearly 40% of the world’s population. Some prominent members of the slow-growth club are high-income countries like the United States, France, Germany, Italy, and Japan.

Will this pattern of economic convergence persist into the future? This is a controversial question among economists that we will consider by looking at some of the main arguments on both sides.

Arguments Favoring Convergence

Several arguments suggest that low-income countries might have an advantage in achieving greater worker productivity and economic growth in the future.

A first argument is based on diminishing marginal returns. Even though deepening human and physical capital will tend to increase GDP per capita, the law of diminishing returns suggests that as an economy continues to increase its human and physical capital, the marginal gains to economic growth will diminish. For example, raising the average education level of the population by two years from a tenth-grade level to a high school diploma (while holding all other inputs constant) would produce a certain increase in output. An additional two-year increase, so that the average person had a two-year college degree, would increase output further, but the marginal gain would be smaller. Yet another two-year increase in the level of education, so that the average person had a four-year bachelor’s degree, would increase output still further, but the marginal increase would again be smaller. A similar lesson holds for physical capital. If the quantity of physical capital available to the average worker increases from, say, $5,000 to $10,000 (again, while holding all other inputs constant), it will increase the level of output. An additional increase from $10,000 to $15,000 will increase output further, but the marginal increase will be smaller.

Low-income countries like China and India tend to have lower levels of human capital and physical capital, so an investment in capital deepening should have a larger marginal effect in these countries than in high-income countries, where levels of human and physical capital are already relatively high. Diminishing returns implies that low-income economies could converge to the levels achieved by the high-income countries.
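The diminishing-returns argument can be sketched numerically. The example below is only an illustration: the square-root production function and the values of `ALPHA` and `TECH` are assumptions chosen for clarity, not figures from the text. It compares the extra output from the same $5,000 of additional capital per worker at different starting levels of capital.

```python
# Illustrative sketch: ALPHA and TECH are hypothetical values, and the
# functional form (output = TECH * capital ** ALPHA) is an assumption.
ALPHA = 0.5    # exponent below 1 produces diminishing marginal returns
TECH = 100.0   # technology held constant throughout


def output_per_worker(capital: float) -> float:
    """Output per worker for a given amount of capital per worker."""
    return TECH * capital ** ALPHA


def gain_from_investment(capital: float, extra: float = 5_000.0) -> float:
    """Extra output from adding `extra` capital per worker at a given starting level."""
    return output_per_worker(capital + extra) - output_per_worker(capital)


for start in (5_000.0, 10_000.0, 50_000.0):
    print(f"start at ${start:,.0f}: gain from +$5,000 = {gain_from_investment(start):,.0f}")
```

Under these assumptions the gain shrinks as the starting level of capital rises, which is why the same investment should matter more in a capital-poor economy than in a capital-rich one.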

A second argument is that low-income countries may find it easier to improve their technologies than high-income countries. High-income countries must continually invent new technologies, whereas low-income countries can often find ways of applying technology that has already been invented and is well understood. The economist Alexander Gerschenkron (1904–1978) gave this phenomenon a memorable name: “the advantages of backwardness.” Of course, he did not literally mean that it is an advantage to have a lower standard of living. He was pointing out that a country that is behind has some extra potential for catching up.

Finally, optimists argue that many countries have observed the experience of those that have grown more quickly and have learned from it. Moreover, once the people of a country begin to enjoy the benefits of a higher standard of living, they may be more likely to build and support the market-friendly institutions that will help provide this standard of living.


Arguments That Convergence Is Neither Inevitable Nor Likely

If the growth of an economy depended only on the deepening of human capital and physical capital, then the growth rate of that economy would be expected to slow down over the long run because of diminishing marginal returns. However, there is another crucial factor in the aggregate production function: technology.

The development of new technology can provide a way for an economy to sidestep the diminishing marginal returns of capital deepening. Figure 1 shows how. The horizontal axis of the figure measures the amount of capital deepening, which on this figure is an overall measure that includes deepening of both physical and human capital. The amount of human and physical capital per worker increases as you move from left to right, from $C_1$ to $C_2$ to $C_3$. The vertical axis of the diagram measures per capita output. Start by considering the lowest line in this diagram, labeled Technology 1. Along this aggregate production function, the level of technology is being held constant, so the line shows only the relationship between capital deepening and output. As capital deepens from $C_1$ to $C_2$ to $C_3$ and the economy moves from R to U to W, per capita output does increase, but the way in which the line starts out steeper on the left and then flattens as it moves to the right shows the diminishing marginal returns, as additional marginal amounts of capital deepening increase output by ever-smaller amounts. The shape of the aggregate production line (Technology 1) shows that the ability of capital deepening, by itself, to generate sustained economic growth is limited, since diminishing returns will eventually set in.

Now, bring improvements in technology into the picture. Improved technology means that with a given set of inputs, more output is possible. The production function labeled Technology 1 in the figure is based on one level of technology, but Technology 2 is based on an improved level of technology, so for every level of capital deepening on the horizontal axis, it produces a higher level of output on the vertical axis. In turn, production function Technology 3 represents a still higher level of technology, so that for every level of inputs on the horizontal axis, it produces a higher level of output on the vertical axis than either of the other two aggregate production functions.

Most healthy, growing economies are deepening their human and physical capital and increasing technology at the same time. As a result, the economy can move from a choice like point R on the Technology 1 aggregate production line to a point like S on Technology 2 and a point like T on the still higher aggregate production line (Technology 3). With the combination of technology and capital deepening, the rise in GDP per capita in high-income countries does not need to fade away because of diminishing returns. The gains from technology can offset the diminishing returns involved with capital deepening.
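The contrast between moving along one production function and shifting to a higher one can be illustrated with a small sketch. Everything numeric here is an assumption: the technology multipliers in `TECH_LEVELS`, the capital levels `C1` to `C3`, the square-root functional form, and the placement of point S at the second capital level and T at the third.

```python
# Illustrative sketch with hypothetical numbers; not data from the text.
ALPHA = 0.5
TECH_LEVELS = {1: 100.0, 2: 130.0, 3: 160.0}   # assumed multipliers for Technology 1, 2, 3
C1, C2, C3 = 5_000.0, 10_000.0, 15_000.0       # assumed capital per worker


def output_per_capita(capital: float, tech_level: int) -> float:
    """Per capita output on the production function for a given technology level."""
    return TECH_LEVELS[tech_level] * capital ** ALPHA


def step_gains(path: list[float]) -> list[float]:
    """Increase in output between consecutive points on a path."""
    return [later - earlier for earlier, later in zip(path, path[1:])]


# Capital deepening only: R -> U -> W along Technology 1.
along_curve = [output_per_capita(c, 1) for c in (C1, C2, C3)]

# Capital deepening plus better technology: R -> S -> T.
with_tech = [
    output_per_capita(C1, 1),
    output_per_capita(C2, 2),
    output_per_capita(C3, 3),
]

print("gains along Technology 1:", [round(g) for g in step_gains(along_curve)])
print("gains with technology:   ", [round(g) for g in step_gains(with_tech)])
```

With these assumed values, the gains along Technology 1 shrink at each step, while the gains on the path through S and T do not, which mirrors the claim that technology can offset diminishing returns to capital deepening.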

Will technological improvements themselves run into diminishing returns over time? That is, will it become continually harder and more costly to discover new technological improvements? Perhaps someday, but, at least over the last two centuries since the Industrial Revolution, improvements in technology have not run into diminishing marginal returns. Modern inventions, like the Internet or discoveries in genetics or materials science, do not seem to provide smaller gains to output than earlier inventions like the steam engine or the railroad. One reason that technological ideas do not seem to run into diminishing returns is that the ideas of new technology can often be widely applied at a marginal cost that is very low or even zero. A specific additional machine, or an additional year of education, must be used by a specific worker or group of workers. A new technology or invention can be used by many workers across the economy at very low marginal cost.

The argument that it is easier for a low-income country to copy and adapt existing technology than it is for a high-income country to invent new technology is not necessarily true, either. When it comes to adapting and using new technology, a society’s performance is not necessarily guaranteed, but is the result of whether the economic, educational, and public policy institutions of the country are supportive. In theory, perhaps, low-income countries have many opportunities to copy and adapt technology, but if they lack the appropriate supportive economic infrastructure and institutions, the theoretical possibility that backwardness might have certain advantages is of little practical relevance.


The Slowness of Convergence

Although economic convergence between the high-income countries and the rest of the world seems possible and even likely, it will proceed slowly. Consider, for example, a country that starts off with a GDP per capita of $40,000, which would roughly represent a typical high-income country today, and another country that starts out at $4,000, which is roughly the level in low-income but not impoverished countries like Indonesia, Guatemala, or Egypt. Say that the rich country chugs along at a 2% annual growth rate of GDP per capita, while the poorer country grows at the aggressive rate of 7% per year. After 30 years, GDP per capita in the rich country will be $72,450 (that is, $40,000 × (1 + 0.02)^30), while in the poor country it will be $30,450 (that is, $4,000 × (1 + 0.07)^30). Convergence has occurred; the rich country used to be 10 times as wealthy as the poor one, and now it is only about 2.4 times as wealthy. Even after 30 consecutive years of very rapid growth, however, people in the low-income country are still likely to feel quite poor compared to people in the rich country. Moreover, as the poor country catches up, its opportunities for catch-up growth are reduced, and its growth rate may slow down somewhat.
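The arithmetic in this example can be reproduced with a short compound-growth sketch. The function names `project` and `years_to_catch_up` are illustrative, and the catch-up calculation assumes both growth rates stay constant, which the text itself notes is unlikely as the poorer country's growth slows.

```python
import math


def project(start: float, rate: float, years: int) -> float:
    """GDP per capita after compounding `rate` annually for `years` years."""
    return start * (1 + rate) ** years


def years_to_catch_up(rich_start: float, poor_start: float,
                      rich_rate: float, poor_rate: float) -> float:
    """Years until the two levels are equal, assuming constant growth rates."""
    return math.log(rich_start / poor_start) / math.log((1 + poor_rate) / (1 + rich_rate))


rich = project(40_000, 0.02, 30)
poor = project(4_000, 0.07, 30)
ratio = rich / poor

print(f"rich after 30 years: ${rich:,.0f}")
print(f"poor after 30 years: ${poor:,.0f}")
print(f"ratio: {ratio:.2f}")
print(f"years to full catch-up: {years_to_catch_up(40_000, 4_000, 0.02, 0.07):.1f}")
```

Under the constant-rate assumption, full catch-up takes roughly 48 years from the starting point, well beyond the 30-year horizon in the example.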

The slowness of convergence illustrates again that small differences in annual rates of economic growth become huge differences over time. The high-income countries have been building up their advantage in standard of living over decades—more than a century in some cases. Even in an optimistic scenario, it will take decades for the low-income countries of the world to catch up significantly.

Calories and Economic Growth

The story of modern economic growth can be told by looking at calorie consumption over time. The dramatic rise in incomes allowed the average person to eat better and consume more calories. How did these incomes increase? The neoclassical growth consensus uses the aggregate production function to suggest that the period of modern economic growth came about because of increases in inputs such as technology and physical and human capital. Also important was the way in which technological progress combined with physical and human capital deepening to create growth and convergence. The issue of distribution of income notwithstanding, it is clear that the average worker can afford more calories in 2014 than in 1875.

Aside from increases in income, there is another reason why the average person can afford more food. Modern agriculture has allowed many countries to produce more food than they need. Despite having more than enough food, however, many governments and multilateral agencies have not solved the food distribution problem. In fact, food shortages, famine, or general food insecurity are caused more often by the failure of government macroeconomic policy, according to the Nobel Prize-winning economist Amartya Sen. Sen has conducted extensive research into issues of inequality, poverty, and the role of government in improving standards of living. Macroeconomic policies that strive toward stable inflation, full employment, education of women, and preservation of property rights are more likely to eliminate starvation and provide for a more even distribution of food.

Because we have more food per capita, global food prices have decreased since 1875. The prices of some foods, however, have decreased more than the prices of others. For example, researchers from the University of Washington have shown that in the United States, calories from zucchini and lettuce are 100 times more expensive than calories from oil, butter, and sugar. Research from countries like India, China, and the United States suggests that as incomes rise, individuals want more calories from fats and protein and fewer from carbohydrates. This has very interesting implications for global food production, obesity, and environmental consequences. Affluent urban India has an obesity problem much like many parts of the United States. The forces of convergence are at work.

Key Concepts and Summary

When countries with lower levels of GDP per capita catch up to countries with higher levels of GDP per capita, the process is called convergence. Convergence can occur even when both high- and low-income countries increase investment in physical and human capital with the objective of growing GDP. This is because the impact of new investment in physical and human capital on a low-income country may result in huge gains as new skills or equipment are combined with the labor force. In higher-income countries, however, a level of investment equal to that of the low income country is not likely to have as big an impact, because the more developed country most likely has high levels of capital investment. Therefore, the marginal gain from this additional investment tends to be successively less and less. Higher income countries are more likely to have diminishing returns to their investments and must continually invent new technologies; this allows lower-income economies to have a chance for convergent growth. However, many high-income economies have developed economic and political institutions that provide a healthy economic climate for an ongoing stream of technological innovations. Continuous technological innovation can counterbalance diminishing returns to investments in human and physical capital.

Self-Check Questions

- Use an example to explain why, after periods of rapid growth, a low-income country that has not caught up to a high-income country may feel poor.

- Would the following events usually lead to capital deepening? Why or why not?

- A weak economy in which businesses become reluctant to make long-term investments in physical capital.

- A rise in international trade.

- A trend in which many more adults participate in continuing education courses through their employers and at colleges and universities.

- What are the “advantages of backwardness” for economic growth?

- Would you expect capital deepening to result in diminished returns? Why or why not? Would you expect improvements in technology to result in diminished returns? Why or why not?

- Why does productivity growth in high-income economies not slow down as it runs into diminishing returns from additional investments in physical capital and human capital? Does this show one area where the theory of diminishing returns fails to apply? Why or why not?

Review Questions

- For a high-income economy like the United States, what elements of the aggregate production function are most important in bringing about growth in GDP per capita? What about a middle-income country such as Brazil? A low-income country such as Niger?

- List some arguments for and against the likelihood of convergence.

Critical Thinking Questions

- What sorts of policies can governments implement to encourage convergence?

- As technological change makes us more sedentary and food costs increase, obesity is likely. What factors do you think may limit obesity?

Central Intelligence Agency. “The World Factbook: Country Comparison: GDP–Real Growth Rate.” https://www.cia.gov/library/publications/the-world-factbook/rankorder/2003rank.html.

Sen, Amartya. “Hunger in the Contemporary World (Discussion Paper DEDPS/8).” The Suntory Centre: London School of Economics and Political Science . Last modified November 1997. http://sticerd.lse.ac.uk/dps/de/dedps8.pdf.

Answers to Self-Check Questions

- A good way to think about this is how a runner who has fallen behind in a race feels psychologically and physically as he catches up. Playing catch-up can be more taxing than maintaining one’s position at the head of the pack.

- No. Capital deepening refers to an increase in the amount of capital per person in an economy. A decrease in investment by firms will actually cause the opposite of capital deepening (since the population will grow over time).

- There is no direct connection between an increase in international trade and capital deepening. One could imagine particular scenarios where trade could lead to capital deepening (for example, if international capital inflows, which are the counterpart to an increasing trade deficit, lead to an increase in physical capital investment), but in general, no.

- Yes. Capital deepening refers to an increase in either physical capital or human capital per person. Continuing education or any type of lifelong learning adds to human capital and thus creates capital deepening.

- The advantages of backwardness include faster growth rates because of the process of convergence, as well as the ability to adopt new technologies that were developed first in the “leader” countries. While being “backward” is not inherently a good thing, Gerschenkron stressed that there are certain advantages which aid countries trying to “catch up.”

- Capital deepening, by definition, should lead to diminished returns because you’re investing more and more but using the same methods of production, leading to the marginal productivity declining. This is shown on a production function as a movement along the curve. Improvements in technology should not lead to diminished returns because you are finding new and more efficient ways of using the same amount of capital. This can be illustrated as a shift upward of the production function curve.

- Productivity growth from new advances in technology will not slow because the new methods of production will be adopted relatively quickly and easily, at very low marginal cost. Also, countries that are seeing technology growth usually have a vast and powerful set of institutions for training workers and building better machines, which allows the maximum number of people to benefit from the new technology. These factors have the added effect of making additional technological advances even easier for these countries.

Principles of Economics Copyright © 2016 by Rice University is licensed under a Creative Commons Attribution 4.0 International License , except where otherwise noted.

What Is Convergence Theory?

How Industrialization Affects Developing Nations


Convergence theory presumes that as nations move from the early stages of industrialization toward becoming fully industrialized , they begin to resemble other industrialized societies in terms of societal norms and technology.

The characteristics of these nations effectively converge. Ultimately, this could lead to a unified global culture if nothing impeded the process.

Convergence theory has its roots in the functionalist perspective of economics which assumes that societies have certain requirements that must be met if they are to survive and operate effectively.

Convergence theory became popular in the 1960s when it was formulated by the University of California, Berkeley Professor of Economics Clark Kerr.

Some theorists have since expounded upon Kerr's original premise. They say industrialized nations may become more alike in some ways than in others.

Convergence theory is not an across-the-board transformation. Although technologies may be shared , it's not as likely that more fundamental aspects of life such as religion and politics would necessarily converge—though they may.

Convergence vs. Divergence

Convergence theory is also sometimes referred to as the "catch-up effect."

When technology is introduced to nations still in the early stages of industrialization, money from other nations may pour in to develop and take advantage of this opportunity. These nations may become more accessible and susceptible to international markets. This allows them to "catch up" with more advanced nations.

If capital is not invested in these countries, however, and if international markets do not take notice or find that opportunity is viable there, no catch-up can occur. The country is then said to have diverged rather than converged.

Unstable nations are more likely to diverge because political or social-structural factors, such as a lack of educational or job-training resources, prevent them from converging. Convergence theory, therefore, would not apply to them.

Convergence theory also allows that the economies of developing nations will grow more rapidly than those of industrialized countries under these circumstances. Therefore, all should reach an equal footing eventually.

Some examples of convergence theory include Russia and Vietnam, formerly purely communist countries that have eased away from strict communist doctrines as the economies in other countries, such as the United States, have burgeoned.

State-controlled socialism is less the norm in these countries now than is market socialism, which allows for economic fluctuations and, in some cases, private businesses as well. Russia and Vietnam have both experienced economic growth as their socialistic rules and politics have changed and relaxed to some degree.

Former World War II Axis nations including Italy, Germany, and Japan rebuilt their economic bases into economies not dissimilar to those that existed among the Allied Powers of the United States, the Soviet Union, and Great Britain.

More recently, in the mid-20th century, some East Asian countries converged with other more developed nations. Singapore , South Korea, and Taiwan are now all considered to be developed, industrialized nations.

Sociological Critiques

Convergence theory is an economic theory that presupposes that the concept of development is

- a universally good thing

- defined by economic growth.

It frames convergence with supposedly "developed" nations as a goal of so-called "undeveloped" or "developing" nations, and in doing so, fails to account for the numerous negative outcomes that often follow this economically-focused model of development.

Many sociologists, postcolonial scholars, and environmental scientists have observed that this type of development often only further enriches the already wealthy, and/or creates or expands a middle class while exacerbating the poverty and poor quality of life experienced by the majority of the nation in question.

Additionally, it is a form of development that typically relies on the over-use of natural resources, displaces subsistence and small-scale agriculture, and causes widespread pollution and damage to the natural habitat.


The Catch-Up Effect Definition and Theory of Convergence


The catch-up effect is a theory that the per capita incomes of all economies will eventually converge.

This theory is based on the observation that underdeveloped economies tend to grow more rapidly than wealthier economies. As a result, the less wealthy economies literally catch up to the more robust economies.

The catch-up effect is also referred to as the theory of convergence .

Key Takeaways

- The catch-up effect is a theory that the per capita incomes of developing economies catch up to those of more developed economies.

- It is based on the law of diminishing marginal returns , applied to investment at the national level.

- It also involves the empirical observation that growth rates tend to slow as an economy matures.

- Developing nations can enhance their catch-up effect by opening up their economies to free trade.

- They should pursue "social capabilities," or the ability to absorb new technology, attract capital, and participate in global markets.

Understanding the Catch-Up Effect

The catch-up effect, or theory of convergence, is predicated on several key ideas.

1. One is the law of diminishing marginal returns . This is the idea that, as a country invests and profits, the amount gained from the investment will eventually decline as the level of investment rises.

Each time a country invests, it benefits slightly less from that investment. So, returns on capital investments in capital-rich countries are not as large as they would be in developing countries.

2. This is backed up by the empirical observation that more developed economies tend to grow at a slower, though more stable, rate than less developed countries.

According to the World Bank, high-income countries averaged 2.8% gross domestic product (GDP) growth in 2022, versus 3.6% for middle-income countries and 3.4% for low-income countries.

3. Developing and underdeveloped countries may also be able to experience more rapid growth because they can replicate the production methods, technologies, policies, and institutions of developed countries.

This is also known as a second-mover advantage. When developing markets have access to the technological know-how of the advanced nations, they can experience rapid rates of growth.

Limitations to the Catch-Up Effect

Lack of Capital

Although developing countries can see faster economic growth than more economically advanced countries, the limitations posed by a lack of capital can greatly reduce a developing country's ability to catch up.

Historically, some developing countries have been very successful in managing resources and securing capital to efficiently increase economic productivity . However, this has not become the norm on a global scale.

Lack of Social Capabilities

Economist Moses Abramovitz wrote that in order for countries to benefit from the catch-up effect, they need to develop and leverage what he called "social capabilities."

These include the ability to absorb new technology, attract capital, and participate in global markets. This means that if technology is not freely traded, or is prohibitively expensive, then the catch-up effect won't occur.

Lack of Open Trade

The adoption of open trade policies, especially with respect to international trade, also plays a role. According to a longitudinal study by economists Jeffrey Sachs and Andrew Warner, national economic policies of free trade and openness are associated with more rapid growth.

Studying 111 countries from 1970 to 1989, the researchers found that industrialized nations had a growth rate of 2.3% per year per capita. Developing countries with open trade policies had a rate of 4.5%. And developing countries with more protectionist and closed economy policies had a growth rate of only 2%.
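To see what these annual rates imply over the study period, the sketch below compounds each one over the 19 years from 1970 to 1989, starting from an index of 100. The rates are the ones quoted above. The starting index, the assumption of a constant rate in every year, and the function name are illustrative simplifications, not part of the study.

```python
def compound(start: float, annual_rate_pct: float, years: int) -> float:
    """Value of `start` after growing at a constant annual rate for `years` years."""
    return start * (1 + annual_rate_pct / 100) ** years


YEARS = 1989 - 1970  # 19 years of growth, assuming a constant rate throughout

# Per capita growth rates quoted in the text (percent per year).
rates = {
    "industrialized": 2.3,
    "open developing": 4.5,
    "closed developing": 2.0,
}

# Per capita income index in 1989, with 1970 set to 100 for every group.
index = {name: compound(100.0, rate, YEARS) for name, rate in rates.items()}

for name, value in index.items():
    print(f"{name:>18}: {value:6.1f}")
```

Under these simplifications, the open developing economies more than double their per capita income over the period, while the closed developing economies grow slightly less than the industrialized ones and so fall further behind rather than catching up.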

Population Growth

Another major obstacle for the catch-up effect is that per capita income is not just a function of GDP, but also of a country's population growth. Less developed countries tend to have higher population growth than developed economies. The greater the number of people, the less the per capita income.

According to World Bank figures for 2022, more developed countries (OECD members) experienced 0.3% average population growth, while the UN-classified least developed countries had an average 2.3% population growth rate.
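The arithmetic behind this obstacle can be sketched as follows. Per capita income is GDP divided by population, so its growth rate is (1 + GDP growth) / (1 + population growth) - 1. The example pairs the 2022 GDP growth figures quoted earlier in this article (3.4% for low-income countries, 2.8% for high-income countries) with the population growth figures above. Those figures refer to different country groupings (low-income versus least developed, high-income versus OECD), so the pairing is an assumption made purely for illustration, and the function name is hypothetical.

```python
def per_capita_growth(gdp_growth_pct: float, pop_growth_pct: float) -> float:
    """Growth rate of GDP per capita (percent), given GDP and population growth (percent)."""
    ratio = (1 + gdp_growth_pct / 100) / (1 + pop_growth_pct / 100)
    return (ratio - 1) * 100


# Illustrative pairing only: the GDP and population figures come from
# different World Bank / UN country groupings.
poorer = per_capita_growth(gdp_growth_pct=3.4, pop_growth_pct=2.3)
richer = per_capita_growth(gdp_growth_pct=2.8, pop_growth_pct=0.3)

print(f"Poorer group, per capita growth: {poorer:.2f}%")
print(f"Richer group, per capita growth: {richer:.2f}%")
```

With these inputs, faster headline GDP growth in the poorer group translates into slower per capita growth once population growth is taken into account.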

Economic convergence appears to stem primarily from latecomer countries borrowing or imitating the established technologies available in industrialized countries.

Example of the Catch-Up Effect

From 1911 to 1940, Japan was the fastest-growing economy in the world. It colonized and invested heavily in its neighbors Korea and Taiwan, contributing to their economic growth as well. After the Second World War, however, Japan's economy lay in tatters.

The country rebuilt a sustainable environment for economic growth during the 1950s and began importing machinery and technology from the United States. It clocked incredible growth rates in the period from 1960 to the early 1980s.

As Japan's economy powered forward, the U.S. economy, which was a source for much of Japan's infrastructural and industrial underpinnings, hummed along. Then by the late 1970s, when the Japanese economy ranked among the world's top five, its growth rate had slowed down.

The economies of the Asian Tigers, a moniker used to describe the rapidly growing economies of Southeast Asia, have followed a similar trajectory: rapid economic growth during the initial years of their development, followed by a more moderate (and declining) growth rate as they transitioned from developing to developed economies.

How Can Underdeveloped Countries Benefit From the Catch-Up Effect?

Those without technological innovations and the financial resources to develop them can borrow from what has already been innovated and developed successfully to grow their GDP and per capita income.

Has Globalization Made the Catch-Up Effect More Prevalent?

Perhaps. For example, globalization has made advances in technologies and supply chain innovations more readily available to underdeveloped and developing nations, due to the loosening of trade restrictions and economic cooperation between countries.

Why Does the Catch-Up Effect Diminish for Industrialized Countries?

The effect is far less for such countries because, with their greater amounts of capital, freer trade, and beneficial economic policies, they have less room in which to achieve more.

The catch-up effect refers to a theory that holds that the per capita incomes of emerging and developing nations will eventually converge with the per capita incomes of more developed, wealthier countries.

The effect can be hampered by certain circumstances, such as the lack of capital and open trade policies, and the inability to attract investments.

The World Bank. "GDP Growth (annual %)."

Abramovitz, Moses, via JSTOR. "Catching Up, Forging Ahead, and Falling Behind." Journal of Economic History, vol. 46, no. 2, June 1986, pp. 385-406.

Brookings. "Economic Reform and the Process of Global Integration."

The World Bank. "Population Growth (annual %)."

Oxford Academic. "Catching Up or Developing Differently? Techno-Institutional Learning with a Sustainable Planet in Mind."

- Open access

- Published: 10 May 2024

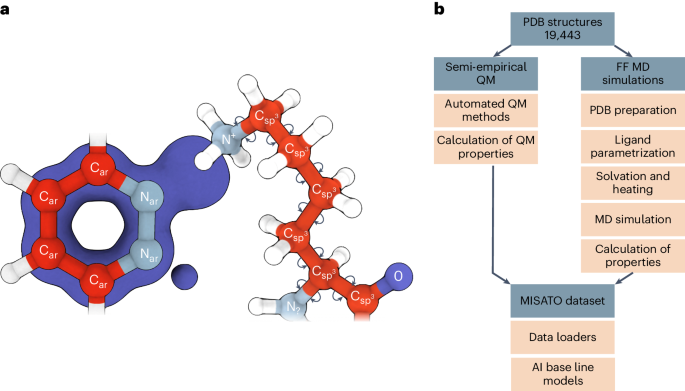

MISATO: machine learning dataset of protein–ligand complexes for structure-based drug discovery

- Till Siebenmorgen ORCID: orcid.org/0009-0008-5160-8100 1 , 2 na1 ,

- Filipe Menezes ORCID: orcid.org/0000-0002-7630-5447 1 , 2 na1 ,

- Sabrina Benassou 3 ,

- Erinc Merdivan 4 ,

- Kieran Didi ORCID: orcid.org/0000-0001-6839-3320 5 ,

- André Santos Dias Mourão 1 , 2 ,

- Radosław Kitel 6 ,

- Pietro Liò 5 ,

- Stefan Kesselheim ORCID: orcid.org/0000-0003-0940-5752 3 ,

- Marie Piraud 4 ,

- Fabian J. Theis ORCID: orcid.org/0000-0002-2419-1943 4 , 7 , 8 ,

- Michael Sattler ORCID: orcid.org/0000-0002-1594-0527 1 , 2 &

- Grzegorz M. Popowicz ORCID: orcid.org/0000-0003-2818-7498 1 , 2

Nature Computational Science (2024)

- Computational biophysics

- Drug discovery

- Machine learning

A preprint version of the article is available at bioRxiv.

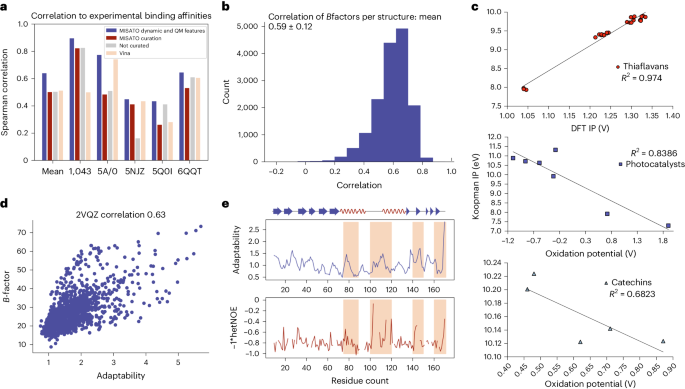

Large language models have greatly enhanced our ability to understand biology and chemistry, yet robust methods for structure-based drug discovery, quantum chemistry and structural biology are still sparse. Precise biomolecule–ligand interaction datasets are urgently needed for large language models. To address this, we present MISATO, a dataset that combines quantum mechanical properties of small molecules and associated molecular dynamics simulations of ~20,000 experimental protein–ligand complexes with extensive validation of experimental data. Starting from the existing experimental structures, semi-empirical quantum mechanics was used to systematically refine these structures. A large collection of molecular dynamics traces of protein–ligand complexes in explicit water is included, accumulating over 170 μs. We give examples of machine learning (ML) baseline models proving an improvement of accuracy by employing our data. An easy entry point for ML experts is provided to enable the next generation of drug discovery artificial intelligence models.

In recent years, artificial intelligence (AI) predictions have revolutionized many fields of science. In structural biology, AlphaFold2 (ref. 1) predicts accurate protein structures from amino-acid sequences alone. Its accuracy nears that of state-of-the-art experimental data. The success of AlphaFold2 was made possible by a rich database of nearly 200,000 protein structures that have been deposited and are available in the Protein Data Bank (PDB) 2. These structures were determined over the past decades using X-ray crystallography, nuclear magnetic resonance (NMR) or cryo-electron microscopy. Despite enormous investments, there are still few new drugs approved yearly, with development costs reaching several billion dollars 3. An ongoing grand challenge is rational, structure-based drug discovery (DD). Compared with protein structure prediction, this task is substantially more difficult.

In the early stages of DD, structure-based methods are popular and efficient approaches. The biomolecule provides the starting point for rational ligand search. Later, it guides optimization to optimally explore the chemical combinatorial space 4 while still ensuring drug-like properties. In silico methods that are in principle able to tackle structure-based DD include semi-empirical quantum mechanical (QM) methods 5 , molecular dynamics (MD) simulations 6 , 7 , docking 8 and coarse-grained simulations 9 , which can also be combined to be more efficient. However, these methods either suffer from generally low precision or are computationally too expensive while still requiring substantial experimental validation. Recent examples show that classical, ball-and-stick atomistic model representations of biomolecular structures might be too inaccurate in certain situations to allow for correct predictions 10 , 11 , 12 , 13 .

The introduction of AI into the process is still at an early stage. AI approaches are, in principle, able to learn the fundamental state variables that describe experimental data 14 . Thus, they are likely to abstract from electronic and force field-based descriptions of the protein–ligand complex. However, so far mostly simple solutions have been proposed that do not incorporate the available protein–ligand data to their full extent, such as scoring protein–ligand Gibbs free energies 15 , 16 , ADME (absorption, distribution, metabolism and excretion) property estimation 17 or prediction of synthetic routes 18 , 19 . Most of these approaches are constructed using one-dimensional SMILES (simplified molecular-input line-entry system) 20 , 21 and only a few attempts have been made to properly tackle three-dimensional (3D) biomolecule–ligand data 22 , 23 , 24 .

Several databases are available that contain raw experimental structures of protein–ligand complexes, usually extracted from the PDB (for example, PDBbind 25 , bindingDB 26 , Binding MOAD 27 , Sperrylite 28 ). Only recently a database of MD-derived traces of protein–ligand structures was reported 29 , 30 . Despite these efforts, so far no AI model has been proposed that convincingly addresses the rational DD challenge in the way that AlphaFold2 answered the protein structure prediction problem 31 , 32 .

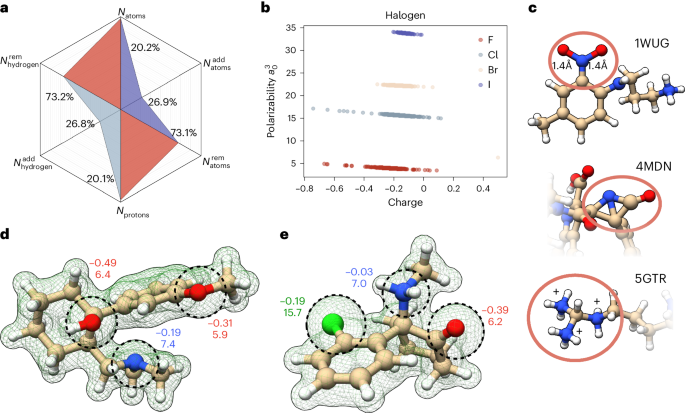

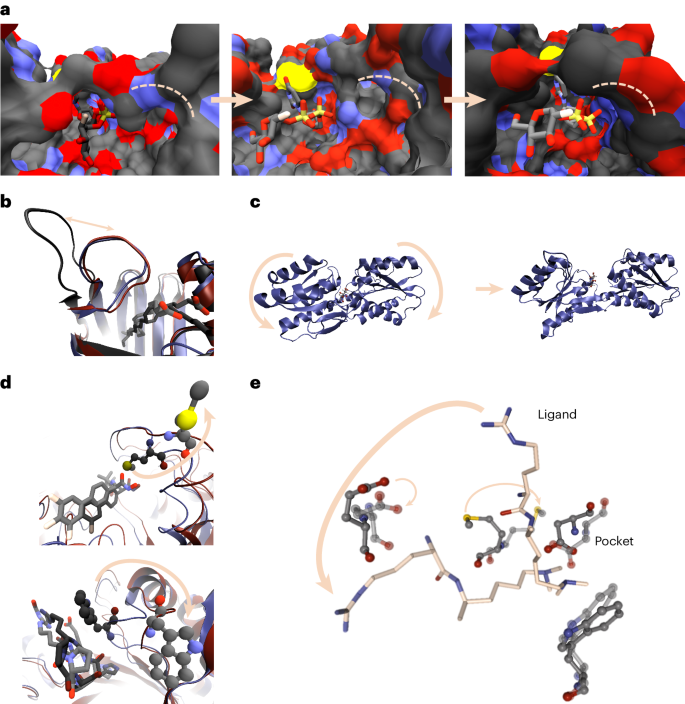

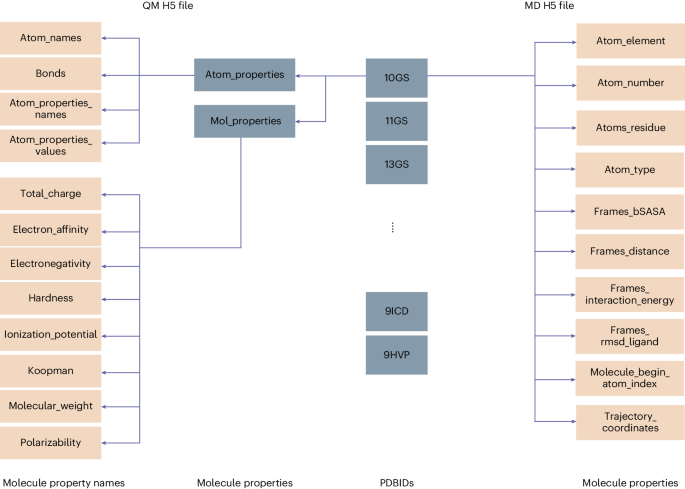

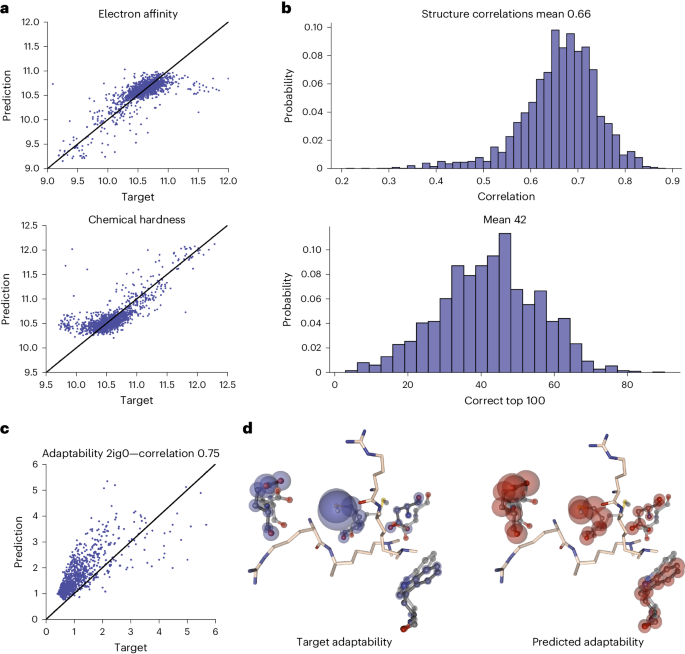

In addition to DD, structure-based AI models are useful for biomolecule structure analysis and quantum chemistry. However, they are severely hindered by several factors: neglect of conformational flexibility (dynamics and induced fit upon binding); entropic considerations; inaccuracies in the deposited structural data (incorrect atom types due to missing hydrogen atoms, incorrect evaluation of functional group flexibility, inconsistent geometry restraints, fitting errors); chemical complexity (for example, non-obvious protonation states); overly simplified atomic properties; and highly complex energy landscapes in the recognition of molecules by their targets. Attempts to train AI models currently require inferring this missing information implicitly. The limited number of publicly available protein–ligand structures (~20,000) and the lack of thermodynamic data cause this inference to fail. This is preventing structure-based models from producing groundbreaking results 31, 32.