EDITORIAL article

Editorial: the impact of music on human development and well-being.

- 1 Department of Culture, Communication and Media, University College London, London, United Kingdom

- 2 Department of Philosophy, Sociology, Education and Applied Psychology, University of Padua, Padua, Italy

- 3 School of Humanities and Communication Arts, Western Sydney University, Penrith, NSW, Australia

- 4 Melbourne Conservatorium of Music, University of Melbourne, Melbourne, VIC, Australia

Editorial on the Research Topic The Impact of Music on Human Development and Well-Being

Music is one of the most universal ways of expression and communication for humankind and is present in the everyday lives of people of all ages and from all cultures around the world (Mehr et al., 2019). Hence, it seems more appropriate to talk about musics (plural) rather than music (singular) (Goble, 2015). Furthermore, research by anthropologists as well as ethnomusicologists suggests that music has been a characteristic of the human condition for millennia (cf. Blacking, 1976; Brown, 1999; Mithen, 2005; Dissanayake, 2012; Higham et al., 2012; Cross, 2016). Nevertheless, whilst the potential for musical behavior is a characteristic of all human beings, its realization is shaped by the environment and the experiences of individuals, often within groups (North and Hargreaves, 2008; Welch and McPherson, 2018). Listening to music, singing, playing (informally, formally), and creating (exploring, composing, improvising), whether individually or collectively, are common activities for the vast majority of people. Music represents an enjoyable activity in and of itself, but its influence goes beyond simple amusement.

These activities not only allow the expression of personal inner states and feelings but can also bring about many positive effects in those who engage in them. There is an increasing body of empirical and experimental studies concerning the wider benefits of musical activity, and research in the sciences associated with music suggests that many dimensions of human life, including physical, social, educational, and psychological (cognitive and emotional), can be affected positively by successful engagement in music (Biasutti and Concina, 2013). Learning in and through music can happen formally (such as part of structured lessons in school), as well as in other-than-formal situations, such as in the home with family and friends, often non-sequentially and not necessarily intentionally, and where participation in music learning is voluntary rather than mandated, such as in a community setting (cf. Green, 2002; Folkestad, 2006; Saether, 2016; Welch and McPherson, 2018).

Such benefits are evidenced across the lifespan, including early childhood (Gerry et al., 2012; Williams et al., 2015; Linnavalli et al., 2018), adolescence (McFerran et al., 2018), and older adulthood (Lindblad and de Boise, 2020). Within these lifespan perspectives, research into music's contribution to health and well-being provides evidence of physical and psychological impacts (MacDonald et al., 2013; Fancourt and Finn, 2019; van den Elzen et al., 2019). Benefits are also reported in terms of young people's educational outcomes (Guhn et al., 2019), and successful musical activity can enhance an individual's sense of social inclusion (Welch et al., 2014) and social cohesion (Elvers et al., 2017).

This special issue provides a collection of 21 new research articles that deepen and develop our understanding of the ways and means by which music can impact positively on human development and well-being. The collection draws on the work of 88 researchers from 17 countries, with each article offering an illustration of how music can relate to other important aspects of human functioning. In addition, the articles collectively illustrate a wide range of contemporary research approaches, providing evidence of how different research aims concerning the wider benefits of music require sensitive and appropriate methodologies.

In terms of childhood and adolescence, for example, Putkinen et al. demonstrate how musical training is likely to foster enhanced sound encoding in 9- to 15-year-olds and thus be related to reading skills. A separate Finnish study by Saarikallio et al. provides evidence of how music listening influences adolescents' perceived sense of agency and emotional well-being, whilst demonstrating that this impact is particularly nuanced by context and individuality. Aspects of mental health are the focus of an Australian study by Stewart et al. of young people with tendencies toward depression. The article explores how, despite existing literature on the positive use of music for mood regulation, music listening can be double-edged and could actually sustain or intensify a negative mood.

A Portuguese study by Martins et al. shifts the center of attention from mental to physical benefits in their study of how learning music can support children's coordination. They provide empirical data on how a sustained, 24-week programme of Orff-based music education, which included the playing of simple tuned percussion instruments, significantly enhanced the manual dexterity and bimanual coordination of the participating 8-year-olds compared with their active control (sports) and passive control peers. A related study by Loui et al. in the USA offers insights into the neurological impact of sustained musical instrument practice. Eight-year-old children who played one or more musical instruments for at least 0.5 h per week had higher scores on verbal ability and intellectual ability, and these scores correlated with greater measurable connections between particular regions of the brain related to both auditory-motor and bi-hemispheric connectivity.

Younger, pre-school children can also benefit from musical activities, with associations being reported between informal musical experiences in the home and specific aspects of language development. A UK-led study by Politimou et al. found that rhythm perception and production were the best predictors of young children's phonological awareness, whilst melody perception was the best predictor of grammar acquisition, a novel association not previously observed in developmental research. In another pre-school study, Barrett et al. explored the beliefs and values held by Australian early childhood and care practitioners concerning the value of music in young children's learning. Despite having limited formal qualifications and experience of personal music learning, practitioners tended overall to have positive attitudes to music, although this was biased toward music as a recreational and fun activity, with limited support for the notion of how music might be used to support wider aspects of children's learning and development.

Engaging in music to support a positive sense of personal agency is an integral feature of several articles in the collection. In addition to the Saarikallio team's research mentioned above, Moors et al. provide a novel example of how engaging in collective beatboxing can be life-enhancing for throat cancer patients in the UK who have undergone laryngectomy, both in terms of supporting their voice rehabilitation and alaryngeal phonation, as well as patients' sense of social inclusion and emotional well-being.

One potential reason for these positive findings is examined in an Australian study by Krause et al., who apply the lens of self-determination theory to examine musical participation and well-being in a large group of 17- to 85-year-olds. Respondents to an online questionnaire signaled the importance of active music making in their lives in meeting three basic psychological needs: a sense of competence, relatedness, and autonomy.

The use of public performance in music therapy is the subject of a US study by Vaudreuil et al. concerning the social transformation and reintegration of US military service members. Two example case studies are reported of service members who received music therapy as part of their treatment for post-traumatic stress disorder, traumatic brain injury, and other psychological health concerns. The participants wrote, learned, and refined songs over multiple music therapy sessions and created song introductions to share with audiences. Subsequent interviews provide positive evidence of the beneficial psychological effects of this programme of audience-focused musical activity.

Relatedly, McFerran et al. in Australia examined the ways in which music and trauma have been reported in selected music therapy literature from the past 10 years. The team's critical interpretive synthesis of 36 related articles led them to identify four different ways in which music has been used beneficially to support those who have experienced trauma. These approaches embrace the use of music for stabilizing (the modulation of physiological processes) and entrainment (the synchronization of music and movement), as well as for expressive and performative purposes—the fostering of emotional and social well-being.

The therapeutic potential of music is also explored in a detailed case study by Fachner et al. Their research focuses on the nature of critical moments in a guided imagery and music session between a music therapist and a client, and evidences how these moments relate to underlying neurological function in the mechanics of music therapy.

At the other end of the age span, and also related to therapy, an Australian study by Brancatisano et al. reports on a new Music, Mind, and Movement programme for people in their eighties with mild to moderate dementia. Participants involved in the programme tended to show an improvement in aspects of cognition, particularly verbal fluency and attention. Similarly, Wilson and MacDonald report on a 10-week group music programme for young Scottish adults with learning difficulties. The research data suggest that participants enjoyed the programme and tended to sustain participation, with benefits evidenced in increased social engagement, interaction and communication.

The role of technology in facilitating access to music and supporting a sense of agency in older people is the focus of a major literature review by Creech, now based in Canada. Although this is a relatively under-researched field, the available evidence suggests that older people, even those with complex needs, are capable of engaging with and using technology in a variety of ways that support their musical perception, learning and participation, and wider quality of life.

Related to the particular needs of the young, children's general behavior can also improve through music, as exemplified by an innovative, school-based, intensive 3-month orchestral programme in Italy with 8- to 10-year-olds. Fasano et al. report that the programme was particularly beneficial in reducing hyperactivity, inattention, and impulsivity, whilst enhancing inhibitory control. These benefits are in line with research findings concerning successful music education for young people with ADHD, whose behavior is characterized by these same disruptive symptoms (hyperactivity, inattention, and impulsivity).

Extra-musical benefits are also reported in a joint Swiss-UK study of college music students (Bachelors and Masters) and amateur musicians. Antonini Philippe et al. suggest that, whilst music making can offer some health-protective effects, there is a need for greater health awareness and promotion among advanced music students. Compared with the amateur musicians, the college music students evaluated their overall quality of life and their general and physical health more negatively, as did females in terms of their psychological health. Somewhat paradoxically, the college students who had taken part in judged performances reported higher psychological health ratings. This may have been because this sub-group were slightly older and more experienced musicians.

Music appears to be a common accompaniment to exercise, whether in the gym, park or street. Nikol et al. in South East Asia explore the potential physical benefits of synchronous exercise to music, especially in hot and humid conditions. Their randomized cross-over study (2019) reports that “time-to-exhaustion” under the synchronous music condition was 2/3 longer compared to the no-music condition for the same participants. In addition, perceived exertion was significantly lower, by an average of 22% during the synchronous condition.

Comparisons between music and sport are often evidenced in the body of existing Frontiers research literature related to performance and group behaviors. Our new collection contains a contribution to this literature in a study by Habe et al. The authors investigated elite musicians and top athletes in Slovenia in terms of their perceptions of flow in performance and satisfaction with life. The questionnaire data analyses suggest that the experience of flow appears to influence satisfaction with life in these high-functioning individuals, albeit with some variations related to discipline, participant sex, and whether considering team or individual performance.

A more formal link between music and movement is the focus of an exploratory case study by Cirelli and Trehub. They investigated a 19-month-old infant's dance-like, motorically complex responses to familiar and unfamiliar songs, presented at different speeds. Movements were faster for the more familiar items at their original tempo. The child had been observed previously as moving to music at the age of 6 months.

Finally, a novel UK-based study by Waddington-Jones et al. evaluated the impact of two professional composers who were tasked, individually, to lead a 4-month programme of group composing in two separate and diverse community settings: one with a choral group and the other in a residential home, both funded as part of a music programme for the Hull City of Culture in 2017. In addition to the two composers, the participants were older adults, with the residential group being joined by schoolchildren from a local primary school to collaborate in a final performance. Qualitative data analyses provide evidence of multi-dimensional psychological benefits arising from the successful, group-focused music-making activities.

In summary, these studies demonstrate that engaging in musical activity can have a positive impact on health and well-being in a variety of ways and in a diverse range of contexts across the lifespan. Musical activities, whether focused on listening, being creative or re-creative, individual or collective, are infused with the potential to be therapeutic, developmental, enriching, and educational, with the caveat that such musical experiences are perceived to be engaging, meaningful, and successful by those who participate.

Collectively, these studies also celebrate the multiplicity of ways in which music can be experienced. Reading across the articles might raise a question as to whether or not any particular type of musical experience is seen to be more beneficial compared with another. The answer, at least in part, is that the empirical evidence suggests that musical engagement comes in myriad forms along a continuum of more or less overt activity, embracing learning, performing, composing and improvising, as well as listening and appreciating. Furthermore, given the multidimensional neurological processing of musical experience, it seems reasonable to hypothesize that it is perhaps the level of emotional engagement in the activity that drives its degree of health and well-being efficacy as much as the activity's overt musical features. And therein are opportunities for further research!

Author Contributions

The editorial was drafted by GW and approved by the topic Co-editors. All authors listed have made a substantial, direct and intellectual contribution to the Edited Collection, and have approved this editorial for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We are very grateful to all the contributing authors and their participants for their positive engagement with this Frontiers Research Topic, and also to the Frontiers staff for their commitment and support in bringing this topic to press.

Biasutti, M., and Concina, E. (2013). “Music education and transfer of learning,” in Music: Social Impacts, Health Benefits and Perspectives , eds P. Simon and T. Szabo (New York, NY: Nova Science Publishers, Inc Series: Fine Arts, Music and Literature), 149–166.

Blacking, J. (1976). How Musical Is Man? London: Faber & Faber.

Brown, S. (1999). “The ‘musilanguage’ model of music evolution,” in The Origins of Music , eds N. L. Wallin, B. Merker, and S. Brown (Cambridge: The MIT Press), 271–301. doi: 10.7551/mitpress/5190.003.0022

Cross, I. (2016). “The nature of music and its evolution,” in Oxford Handbook of Music Psychology , eds S. Hallam, I. Cross, and M. Thaut (New York, NY: Oxford University Press), 3–18. doi: 10.1093/oxfordhb/9780198722946.013.5

Dissanayake, E. (2012). The earliest narratives were musical. Res. Stud. Music Educ. 34, 3–14. doi: 10.1177/1321103X12448148

Elvers, P., Fischinger, T., and Steffens, J. (2017). Music listening as self-enhancement: effects of empowering music on momentary explicit and implicit self-esteem. Psychol. Music 46, 307–325. doi: 10.1177/0305735617707354

Fancourt, D., and Finn, S. (2019). What Is the Evidence on the Role of the Arts in Improving Health and Well-Being? A Scoping Review . Copenhagen: World Health Organisation.

Folkestad, G. (2006). Formal and informal learning situations or practices vs formal and informal ways of learning. Br. J. Music Educ. 23, 135–145. doi: 10.1017/S0265051706006887

Gerry, D., Unrau, A., and Trainor, L. J. (2012). Active music classes in infancy enhance musical, communicative and social development. Dev. Sci. 15, 398–407. doi: 10.1111/j.1467-7687.2012.01142.x

Goble, J. S. (2015). Music or musics?: an important matter at hand. Act. Crit. Theor. Music Educ. 14, 27–42. Available online at: http://act.maydaygroup.org/articles/Goble14_3.pdf

Green, L. (2002). How Popular Musicians Learn. Aldershot: Ashgate Press.

Guhn, M., Emerson, S. D., and Gouzouasis, P. (2019). A population-level analysis of associations between school music participation and academic achievement. J. Educ. Psychol. 112, 308–328. doi: 10.1037/edu0000376

Higham, T., Basell, L., Jacobi, R., Wood, R., Ramsey, C. B., and Conard, N. J. (2012). Testing models for the beginnings of the Aurignacian and the advent of figurative art and music: the radiocarbon chronology of Geißenklösterle. J. Hum. Evol. 62, 664–676. doi: 10.1016/j.jhevol.2012.03.003

Lindblad, K., and de Boise, S. (2020). Musical engagement and subjective wellbeing amongst men in the third age. Nordic J. Music Therapy 29, 20–38. doi: 10.1080/08098131.2019.1646791

Linnavalli, T., Putkinen, V., Lipsanen, J., Huotilainen, M., and Tervaniemi, M. (2018). Music playschool enhances children's linguistic skills. Sci. Rep. 8:8767. doi: 10.1038/s41598-018-27126-5

MacDonald, R., Kreutz, G., and Mitchell, L. (eds.), (2013). Music, Health and Wellbeing. New York, NY: Oxford University Press. doi: 10.1093/acprof:oso/9780199586974.001.0001

McFerran, K. S., Hense, C., Koike, A., and Rickwood, D. (2018). Intentional music use to reduce psychological distress in adolescents accessing primary mental health care. Clin. Child Psychol. Psychiatry 23, 567–581. doi: 10.1177/1359104518767231

Mehr, A., Singh, M., Knox, D., Ketter, D. M., Pickens-Jones, D., Atwood, S., et al. (2019). Universality and diversity in human song. Science 366:eaax0868. doi: 10.1126/science.aax0868

Mithen, S., (ed.). (2005). Creativity in Human Evolution and Prehistory . London: Routledge. doi: 10.4324/9780203978627

North, A. C., and Hargreaves, D. J. (2008). The Social and Applied Psychology of Music . New York, NY: Oxford University Press. doi: 10.1093/acprof:oso/9780198567424.001.0001

Saether, M. (2016). Music in informal and formal learning situations in ECEC. Nordic Early Childhood Educ. Res. J. 13, 1–13. doi: 10.7577/nbf.1656

van den Elzen, N., Daman, V., Duijkers, M., Otte, K., Wijnhoven, E., Timmerman, H., et al. (2019). The power of music: enhancing muscle strength in older people. Healthcare 7:82. doi: 10.3390/healthcare7030082

Welch, G. F., and McPherson, G. E., (eds.). (2018). “Commentary: Music education and the role of music in people's lives,” in Music and Music Education in People's Lives: An Oxford Handbook of Music Education (New York, NY: Oxford University Press), 3–18. doi: 10.1093/oxfordhb/9780199730810.013.0002

Welch, G. F., Himonides, E., Saunders, J., Papageorgi, I., and Sarazin, M. (2014). Singing and social inclusion. Front. Psychol. 5:803. doi: 10.3389/fpsyg.2014.00803

Williams, K. E., Barrett, M. S., Welch, G. F., Abad, V., and Broughton, M. (2015). Associations between early shared music activities in the home and later child outcomes: findings from the longitudinal study of Australian Children. Early Childhood Res. Q. 31, 113–124. doi: 10.1016/j.ecresq.2015.01.004

Keywords: music, wider benefits, lifespan, health, well-being

Citation: Welch GF, Biasutti M, MacRitchie J, McPherson GE and Himonides E (2020) Editorial: The Impact of Music on Human Development and Well-Being. Front. Psychol. 11:1246. doi: 10.3389/fpsyg.2020.01246

Received: 12 January 2020; Accepted: 13 May 2020; Published: 17 June 2020.

Copyright © 2020 Welch, Biasutti, MacRitchie, McPherson and Himonides. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Graham F. Welch, graham.welch@ucl.ac.uk ; Michele Biasutti, michele.biasutti@unipd.it

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

- Published: 28 March 2024

Song lyrics have become simpler and more repetitive over the last five decades

- Emilia Parada-Cabaleiro 2 na1 ,

- Maximilian Mayerl 4 na1 ,

- Stefan Brandl 3 ,

- Marcin Skowron 5 ,

- Markus Schedl 1 , 3 ,

- Elisabeth Lex 6 &

- Eva Zangerle 4

Scientific Reports volume 14, Article number: 5531 (2024)

- Computational science

- Computer science

An Author Correction to this article was published on 22 May 2024

This article has been updated

Music is ubiquitous in our everyday lives, and lyrics play an integral role when we listen to music. The complex relationships between lyrical content, its temporal evolution over the last decades, and genre-specific variations, however, are yet to be fully understood. In this work, we investigate the dynamics of English lyrics of Western popular music over five decades and five genres, using a wide set of lyrics descriptors, including lyrical complexity, structure, emotion, and popularity. We find that pop music lyrics have become simpler and easier to comprehend over time: not only does the lexical complexity of lyrics decrease (for instance, as captured by vocabulary richness or readability of lyrics), but we also observe that the structural complexity has decreased (for instance, lyrics have become more repetitive). In addition, we confirm previous analyses showing that the emotion described by lyrics has become more negative and that lyrics have become more personal over the last five decades. Finally, a comparison of lyrics view counts and listening counts shows that listeners' interest in lyrics differs by genre: for instance, rock fans mostly enjoy lyrics from older songs, whereas country fans are more interested in the lyrics of new songs.
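The abstract refers to vocabulary richness and repetitiveness as markers of lexical and structural complexity. As a rough illustration only, the sketch below computes two simple proxies: the type-token ratio (distinct words divided by total words) and a compression ratio (raw size divided by zlib-compressed size, so repetitive lyrics score higher). These formulas and the function names `type_token_ratio` and `compression_ratio` are assumptions for illustration and are not necessarily the descriptors used in this study.

```python
import re
import zlib


def tokenize(lyrics: str) -> list[str]:
    """Lowercase the lyrics and split them into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", lyrics.lower())


def type_token_ratio(lyrics: str) -> float:
    """Vocabulary richness proxy: distinct words divided by total words (0.0 if empty)."""
    tokens = tokenize(lyrics)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def compression_ratio(lyrics: str) -> float:
    """Repetitiveness proxy: UTF-8 size divided by zlib-compressed size (0.0 if empty).

    Repeated lines compress well, so larger values indicate more repetitive lyrics.
    """
    raw = lyrics.encode("utf-8")
    return len(raw) / len(zlib.compress(raw)) if raw else 0.0
```

On a chorus that repeats the same line several times, the type-token ratio falls and the compression ratio rises relative to a line in which no word repeats. Both measures are sensitive to text length, so comparisons are fairest between lyrics of similar size.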

Introduction.

We are surrounded by music every day; it is pervasive in today’s society 1 and serves many functions. For instance, people listen to music to relieve boredom, fill uncomfortable silences, foster social cohesion and communication, or regulate their emotions 2 , 3 , 4 . When it comes to listeners liking or disliking a track, the most salient components of music, alongside the ability of a song to evoke emotion and the singing voice, are a song’s lyrics 5 . Likewise, the interplay between melody and lyrics is important, as lyrics have been shown to influence the emotional valence of music; in particular, lyrics can enhance the negative emotion in angry and sad music 6 . Music containing lyrics has also been shown to activate different regions in the brain compared to music without lyrics 7 .

Seen from a different angle, lyrics can be considered a form of literary work 8 . Usually written in verse form, lyrics use poetic devices such as rhyme, repetition, metaphors, and imagery 9 , and hence can be considered similar to poems 8 . This is also showcased by Bob Dylan winning the Nobel Prize in Literature in 2016 “for having created new poetic expressions within the great American song tradition” 10 . Just as literature can be considered a portrayal of society, lyrics also provide a reflection of a society’s shifting norms, emotions, and values over time 11 , 12 , 13 , 14 , 15 .

To this end, understanding trends in the lyrical content of music has gained importance in recent years: computational descriptors of lyrics have been leveraged to uncover and describe differences between songs with respect to genre 16 , 17 , or to analyze temporal changes of lyrics descriptors 11 , 17 , 18 . Lyrical differences between genres have been identified by Schedl in terms of repetitiveness (rhythm & blues (R&B) music having the most repetitive lyrics and heavy metal having the least repetitive lyrics) and readability (rap music being hardest to comprehend, punk and blues being easiest) 16 . In a study of 1879 unique songs over three years (2014–2016) across seven major genres (Christian, country, dance, pop, rap, rock, and R&B), Berger and Packard 19 find that songs whose lyrics are topically more differentiated from their genre are more popular in terms of their position in the Billboard digital download rankings. Kim et al. 20 use four sets of features extracted from song lyrics and one set of audio features extracted from the audio signal for the tasks of genre recognition, music recommendation, and music auto-tagging. They find that while the audio features show the largest and most consistent effect sizes, linguistic and psychology inventory features also show consistent contributions in the investigated tasks.
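Readability of the kind compared across genres above is often operationalized with the Flesch Reading Ease formula, 206.835 - 1.015 × (words per sentence) - 84.6 × (syllables per word), where higher scores mean easier text. The sketch below is a minimal version under two stated assumptions that are not taken from the cited work: because lyrics rarely carry reliable sentence punctuation, each non-empty line is treated as one sentence, and syllables are approximated by counting runs of vowels.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables as runs of consecutive vowels (a, e, i, o, u, y), at least one."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_reading_ease(lyrics: str) -> float:
    """Flesch Reading Ease, treating each non-empty lyric line as one sentence.

    Higher scores indicate text that is easier to read; returns 0.0 for empty input.
    """
    lines = [line for line in lyrics.splitlines() if line.strip()]
    words = re.findall(r"[A-Za-z']+", lyrics)
    if not lines or not words:
        return 0.0
    syllables = sum(count_syllables(word) for word in words)
    words_per_sentence = len(words) / len(lines)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word
```

The vowel-run heuristic overcounts silent final e's (it scores "love" as two syllables), so absolute values are rough; comparisons between lyrics processed the same way are more meaningful than any single score.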

Studies investigating the temporal evolution of lyrics predominantly focus on tracing emotional cues over the years. For instance, Dodds et al. 17 identify a downward trend in the average valence of song lyrics from 1961 to 2007. Napier and Shamir 21 investigated the change in sentiment of the lyrics of 6150 Billboard 100 songs from 1951 through 2016. They find that positive sentiments (e.g., joy or confidence) have decreased, while negative sentiments (e.g., anger, disgust, or sadness) have increased. Brand et al. 13 use two datasets containing lyrics of 4913 and 159,015 pop songs, spanning from 1965 to 2015, to investigate the proliferation of negatively valenced emotional lyrical content. They find that the proliferation can partly be attributed to content bias (charts tend to favor negative lyrics), and partly to cultural transmission biases (e.g., success or prestige bias, where best-selling songs or artists are copied). Investigating the lyrics of the 10 most popular songs from the US Hot 100 year-end charts between 1980 and 2007, DeWall et al. 18 find that words related to oneself (e.g., me or mine) and words pointing to antisocial behavior (e.g., hate or kill) increased while words related to social interactions (e.g., talk or mate) and positive emotions (e.g., love or nice) decreased over time.
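Findings such as DeWall et al.'s rest on counting words from particular categories and tracking their share over time. A minimal sketch of that kind of measurement is shown below; it assumes a small, hypothetical word list for first-person singular references (not the dictionary used in the cited study) and songs supplied as (release year, lyrics) pairs, and it averages the per-song share within each release year.

```python
import re
from collections import defaultdict
from statistics import mean

# Hypothetical, illustrative category; not the word list used by DeWall et al.
# Contractions such as "i'm" are deliberately not matched in this simple version.
FIRST_PERSON_SINGULAR = {"i", "me", "my", "mine", "myself"}


def self_reference_rate(lyrics: str) -> float:
    """Share of word tokens that belong to the first-person singular category."""
    tokens = re.findall(r"[a-z']+", lyrics.lower())
    if not tokens:
        return 0.0
    return sum(token in FIRST_PERSON_SINGULAR for token in tokens) / len(tokens)


def yearly_mean_rates(songs: list[tuple[int, str]]) -> dict[int, float]:
    """Average the per-song rate within each release year, ordered by year."""
    by_year: dict[int, list[float]] = defaultdict(list)
    for year, lyrics in songs:
        by_year[year].append(self_reference_rate(lyrics))
    return {year: mean(rates) for year, rates in sorted(by_year.items())}
```

Averaging per song before averaging per year gives every song equal weight regardless of lyric length; pooling all tokens in a year instead would let long songs dominate.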

Alongside changes in emotional cues, Varnum et al. 11 find that the simplicity of lyrics in pop music increased over six decades (1958–2016). Similarly, Choi et al. 9 study the evolution of lyrical complexity. They particularly investigate the concreteness of lyrics (concreteness describes whether a word refers to a concrete or abstract concept) as it has been shown to correlate with readability and find that concreteness increased over the last four decades. Furthermore, there is also a body of research investigating the evolution of lyrical content (i.e., so-called themes). For instance, Christenson et al. 22 analyzed the evolution of themes in the U.S. Billboard top-40 singles from 1960 to 2010. They find that the fraction of lyrics describing relationships in romantic terms did not change. However, the fraction of sex-related aspects of relationships substantially increased.

Studies on the temporal evolution of music have also looked into temporal changes of acoustic descriptors beyond lyrics. Interiano et al. 23 investigate acoustic descriptors of 500,000 songs from 1985 to 2015. They discover a downward trend in “happiness” and “brightness”, as well as a slight upward trend in “sadness”. They also correlate these descriptors with success and find that successful songs exhibit distinct dynamics. In particular, they tend to be “happier”, more “party-like”, and less “relaxed” than others.

Despite previous efforts to understand the functions, purposes, evolution, and predictive qualities of lyrics, there still exists a research gap in terms of uncovering the complete picture of the complex relationships between descriptors of lyrical content, their variations between genres, and their temporal evolution over the last decades. Earlier studies focused on specific descriptors, genres, or timeframes, and most commonly investigated smaller datasets. In this paper, we investigate the (joint) evolution of the complexity of lyrics, their emotion, and the corresponding song’s popularity based on a large dataset of English, Western, popular music spanning five decades, a wide variety of lyrics descriptors, and multiple musical genres. We measure the popularity of tracks and lyrics, where we distinguish between the listening count, i.e., the number of listening events since the start of the platform, and the lyrics view count, i.e., the number of views of lyrics on the Genius platform ( https://genius.com ). Accordingly, we investigate the following research questions: (RQ1) Which trends can we observe concerning pop music lyrics across the last 50 years, drawing on multifaceted lyrics descriptors? We expect that descriptors that correlate more strongly with the release year lead to better-performing regression models. (RQ2) What role does the popularity of songs and lyrics play in this scenario? We expect that lyric views vary across genres, and that these variations can be attributed to changes in lyrics over time.

Our exploratory study differs from existing studies in several respects: (1) we provide the first joint analysis of the evolution of multiple lyrics descriptors and popularity, (2) we investigate a multitude of lyrics descriptors capturing lyrical complexity, structure, and emotion, (3) we provide an in-depth analysis of these descriptors’ evolution, not only over time but also across genres, and (4) we leverage a substantially larger dataset than most existing works.

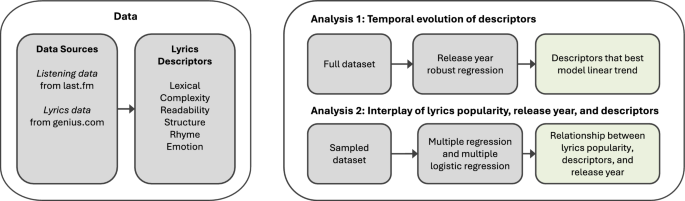

For our analyses, we create a dataset containing 353,320 English song lyrics from the Genius platform ( https://genius.com/ ), spanning five decades (1970–2020) of release years. From these lyrics, we extract a wide variety of descriptors, and we collect popularity data for each song. In particular, we extract lexical, linguistic, structural, rhyme, emotion, and complexity descriptors and focus on five genres: rap, country, pop, R&B, and rock, as these are the most popular genres on the widely used music streaming platform last.fm ( https://www.last.fm/ ) 24 , 25 , 26 , 27 ; we disregard genres for which lyrics are less frequent (e.g., jazz and classical music). Our study comprises two complementary analyses, as shown in Fig. 1 . In the first analysis, we examine the evolution of pop music lyrics and the importance of descriptors in a regression task (i.e., we aim to find the predictors best suited to model a linear trend in a release year regression task). The second set of analyses investigates the relationship between lyrics view count, descriptors, and the songs’ release year using multinomial logistic and multiple linear regression. Assessing lyrics view count, in addition to the commonly analyzed listening (play) count, lets us take another perspective on music popularity. In particular, lyrics view count allows us to explicitly investigate the role played by lyrics in music consumption patterns over time (through the songs’ release year) and to relate these patterns to the characteristics of the lyrics (through the lyrics descriptors). Note that while listening play counts give no information about a listener’s interest in the lyrics, lyrics view count is a clear indicator of the importance of lyrics, which does not necessarily relate to a musical genre’s general popularity.

Figure 1. Overview of data and analyses performed. Based on listening data and a wide variety of descriptors capturing the characteristics of the lyrics content, we perform two analyses. Analysis 1 identifies descriptors that are characteristic of the release year and genre. Analysis 2 investigates the relationship between the identified lyrics descriptors, popularity (listening counts and lyrics view counts), and release year.

Basic dataset and lyrics acquisition

For gathering song lyrics, we rely on the LFM-2b dataset 28 ( http://www.cp.jku.at/datasets/LFM-2b ) of listening events by last.fm users, since it is one of the largest publicly available datasets of music listening data. Last.fm is an online social network and streaming platform for music, where users can opt in to share their listening data. It provides various connectors to other services, including Spotify, Deezer, Pandora, iTunes, and YouTube, through which users can share on last.fm what they are listening to on other platforms ( https://www.last.fm/about/trackmymusic ). While last.fm services are available globally, their user base is unevenly distributed geographically, with a strong bias towards the United States, Russia, Germany, the United Kingdom, Poland, and Brazil. In fact, an analysis of a representative subset of last.fm users found that users from these six countries make up more than half of last.fm’s total user base 29 .

The LFM-2b dataset contains more than two billion listening records and more than fifty million songs by more than five million artists. We enrich the dataset with information about songs’ release year, genre, lyrics, and popularity. To quantify the popularity of tracks and lyrics, we distinguish between the listening count, i.e., the number of listening events in the LFM-2b dataset, and the lyrics view count, i.e., the number of views of the lyrics on the Genius platform ( https://genius.com ). Release year, genre information, and lyrics are obtained from the Genius platform. Each song has one primary genre and an arbitrary number of additional genre tags. Lyrics on the Genius platform can be added by registered members and undergo an editorial process for quality checks. We use the polyglot package ( https://polyglot.readthedocs.io/ ) to automatically infer the language of the lyrics and consider only English lyrics. Following this procedure, we obtain complete information for 582,759 songs.

Lyrics style and emotion descriptors

Following previous research in the field of lyrics analysis 30 , 31 , 32 , we characterize lyrics by stylistic descriptors (covering lexical, complexity, structure, and rhyme characteristics) and emotion descriptors. Lexical descriptors include, for instance, unique token ratio, repeated token ratio, pronoun frequency, line count, and punctuation counts, as well as measures of lexical diversity 33 , 34 , which have been shown to be well-suited markers of textual style 35 . To quantify the diversity of lyrics, we compute the compression ratio 36 , which effectively captures the repetitiveness of lyrics, as well as several diversity measures. For structural descriptors, we use those identified by Malheiro et al. 37 , which include, for instance, the number of times the chorus is repeated, the number of verses and choruses, and the alternation pattern of verse and chorus. To capture the rhymes contained in lyrics, we extract, for instance, the number of subsequent pairs of rhyming lines (i.e., clerihews), alternating rhymes, nested rhymes, and alliterations, and we also rely on general descriptors such as the fraction of rhyming lyrics 30 , as these have been shown to be characteristic of the style of lyrics 30 . For measuring readability, we use standard measures such as the number of difficult words and Flesch’s Reading Ease formula 38 . Furthermore, we extract emotion descriptors from lyrics using the widely used Linguistic Inquiry and Word Count (LIWC) dictionary 39 , which has also been applied in lyrics analyses 18 , 37 , 40 . Table 1 provides a complete list of all descriptors, including a short description and further information on how each descriptor is extracted.
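To make the repetition-oriented descriptors more concrete, the following Python sketch computes three of them for a single song. It is a minimal illustration under stated assumptions, not the extraction code used in this study: the tokenizer and the definition of repeated line ratio (share of non-empty lines that duplicate an earlier line) are hypothetical choices. Compression ratio follows the definition given later in the text, i.e., the size of the zlib-compressed lyrics relative to the original size; under this definition, more repetitive lyrics compress better and therefore yield lower values.

```python
import string
import zlib


def tokenize(lyrics: str) -> list[str]:
    """Hypothetical tokenizer: lowercase, split on whitespace, strip edge punctuation."""
    tokens = (t.strip(string.punctuation) for t in lyrics.lower().split())
    return [t for t in tokens if t]


def compression_ratio(lyrics: str) -> float:
    """Size of the zlib-compressed lyrics divided by the uncompressed size."""
    raw = lyrics.encode("utf-8")
    return len(zlib.compress(raw)) / len(raw)


def repeated_line_ratio(lyrics: str) -> float:
    """Assumed definition: share of non-empty lines that repeat an earlier line."""
    lines = [ln.strip().lower() for ln in lyrics.splitlines() if ln.strip()]
    return (1 - len(set(lines)) / len(lines)) if lines else 0.0


def unique_token_ratio(lyrics: str) -> float:
    """Number of distinct tokens divided by the total number of tokens."""
    tokens = tokenize(lyrics)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


if __name__ == "__main__":
    song = "I love you\nYou love me\nI love you\nI love you"
    print(repeated_line_ratio(song))  # 2 distinct lines out of 4 -> 0.5
```

In practice, each function would be applied to every song in the collection to obtain one value per descriptor and song.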

Statistical analyses

Figure 1 provides an overview of the methodological framework used for the analyses presented. The two analyses aim to (1) investigate the evolution of descriptors over five decades by performing a release year regression task to identify the importance of descriptors, and (2) investigate the interplay of lyrics descriptors, release year, and lyrics view count through regression analyses on a dataset of 12,000 songs, balanced across both genres and release years. The two analyses yield complementary findings: the first uses the entire collected dataset and therefore derives general findings on descriptor importance, whereas the second, performed on a carefully balanced, reduced dataset, provides a more in-depth analysis of the strength of the relationships between the individual lyrics and popularity descriptors and temporal aspects.

Analysis 1: evolution of descriptors and descriptor importance

In this analysis, we investigate which descriptors are most strongly correlated with the release year of a song. We expect that descriptors that correlate more strongly with the release year lead to better-performing regression models. To this end, we train a release year regressor for each of the five genres. We are mostly interested in determining each descriptor’s importance, thereby identifying the descriptors that are most effective at predicting a linear trend across release years.

First, we apply z-score normalization to the descriptors. Subsequently, we remove multicollinear descriptors using the variance inflation factor (VIF): we iteratively remove descriptors with a VIF higher than 5 until all remaining descriptors have a VIF lower than 5 (as also done in Analysis 2). Preliminary analyses showed that the data associated with individual descriptors are heteroscedastic (i.e., the variance is not homogeneous but depends on the release year). To mitigate this issue, we use Huber’s M regressor 41 , a widely used robust linear regressor.
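The normalization and VIF-based filtering described above can be sketched in Python with NumPy as follows. This is an illustrative re-implementation with hypothetical function names, assuming that at each step the descriptor with the largest VIF is dropped first; the text specifies only the threshold of 5, not the removal order.

```python
import numpy as np


def zscore(X: np.ndarray) -> np.ndarray:
    """Column-wise z-score normalization (assumes no constant columns)."""
    return (X - X.mean(axis=0)) / X.std(axis=0)


def vif(X: np.ndarray, j: int) -> float:
    """VIF of column j: 1 / (1 - R^2), regressing X[:, j] on the other columns plus an intercept."""
    y = X[:, j]
    A = np.column_stack([np.ones(len(y)), np.delete(X, j, axis=1)])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    rss = float(np.sum((y - A @ coef) ** 2))
    tss = float(np.sum((y - y.mean()) ** 2))
    r2 = 1 - rss / tss
    return np.inf if r2 >= 1 else 1 / (1 - r2)


def drop_multicollinear(X, names, threshold=5.0):
    """Repeatedly drop the descriptor with the largest VIF until all VIFs are below threshold."""
    X, names = np.asarray(X, dtype=float), list(names)
    while X.shape[1] > 1:
        vifs = [vif(X, j) for j in range(X.shape[1])]
        worst = int(np.argmax(vifs))
        if vifs[worst] < threshold:
            break  # every remaining descriptor passes the VIF criterion
        X = np.delete(X, worst, axis=1)
        del names[worst]
    return X, names
```

Since z-scoring only shifts and rescales columns, it does not change the VIF values; it simply puts the retained descriptors on a common scale for the subsequent regression.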

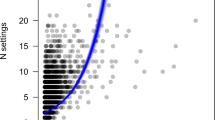

Notably, we perform these analyses on all available songs of the five genres for which all descriptors can be extracted successfully (353,320 songs in total). For each genre, we train the regression model and analyze the model’s performance and the computed regression coefficients to identify the most important descriptors for determining the songs’ release year. The models for this analysis are built in Python using the statsmodels package 42 (via the Robust Linear Models ( RLM ) class; statsmodels version 0.14). The plots in Fig. 2 are generated using the Matplotlib library 43 (version 3.7.0), with gaussian_kde from scipy.stats used for the density computation 44 .
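For readers unfamiliar with robust regression, the sketch below fits a straight line with Huber’s M-estimator via iteratively reweighted least squares (IRLS), the general technique behind robust linear models. It is a simplified NumPy-only stand-in, not the statsmodels code used in this study; statsmodels exposes the estimator as `statsmodels.api.RLM(endog, exog, M=statsmodels.api.robust.norms.HuberT())`. The tuning constant c = 1.345 (the HuberT default) and the MAD-based scale update are assumptions of this sketch, and statsmodels’ exact convergence and scale settings may differ.

```python
import numpy as np


def huber_regression(x, y, c=1.345, max_iter=100, tol=1e-8):
    """Fit y = b0 + b1 * x with Huber's M-estimator via iteratively reweighted least squares."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    X = np.column_stack([np.ones_like(x), x])
    beta = np.linalg.lstsq(X, y, rcond=None)[0]  # ordinary least squares as a starting point
    for _ in range(max_iter):
        resid = y - X @ beta
        # Robust residual scale: median absolute deviation, rescaled to match a normal SD.
        scale = np.median(np.abs(resid - np.median(resid))) / 0.6745
        if scale == 0:
            break
        u = np.abs(resid) / scale
        w = c / np.maximum(u, c)  # Huber weights: 1 for |u| <= c, c / |u| otherwise
        sw = np.sqrt(w)
        new_beta = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)[0]
        converged = np.max(np.abs(new_beta - beta)) < tol
        beta = new_beta
        if converged:
            break
    return beta  # [intercept, slope]
```

Applied to one descriptor (y) against release year (x) within one genre, the returned slope plays the role of the per-year change m reported for each descriptor-genre combination; unlike ordinary least squares, it is barely affected by a small number of extreme values.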

Analysis 2: interplay of lyrics descriptors, lyrics view count, and release year

In this analysis, we first assess whether lyrics view count is related to the underlying musical genre and to what extent such a connection might vary over time. Subsequently, we evaluate the evolution of pop music lyrics over time within each musical genre. We assume that lyrics view count varies across musical genres and that these variations can be related to changes in the lyrics over time. Thus, to deepen our understanding of the relationship between lyrics view count and genre, and of whether the release date plays a role in this relationship, we start by performing a multinomial logistic regression analysis with genre as the dependent variable and the interaction between popularity and release year as predictors, where the number of views of a song’s lyrics is used to capture lyrics popularity. Subsequently, since the lyrics of different musical genres might develop differently over time, we investigate the relationship between release date and lyrics descriptors by fitting a separate linear model (with release year as the dependent variable) for each genre. To find the model that best fits the data for each genre, we apply the backward stepwise method, which is appropriate for our exploratory study. Among stepwise methods, we choose backward elimination over forward selection to minimize the risk of excluding predictors involved in suppressor effects 45 .
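Backward elimination can be sketched as follows. The text does not state which criterion drives the elimination; this Python sketch assumes AIC in the form n log(RSS/n) + 2k that R’s `step()` uses for ordinary linear models, and it uses ordinary least squares rather than the nlme models of the study. Starting from the full model, it repeatedly drops the single predictor whose removal lowers AIC the most and stops when no removal helps.

```python
import numpy as np


def ols_aic(X: np.ndarray, y: np.ndarray) -> float:
    """AIC of an OLS fit with intercept, as n * log(RSS / n) + 2k (k = number of coefficients)."""
    n = len(y)
    A = np.column_stack([np.ones(n), X])  # works for zero-width X (intercept-only model)
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    rss = float(np.sum((y - A @ coef) ** 2))
    return n * np.log(rss / n) + 2 * A.shape[1]


def backward_eliminate(X, y, names):
    """Drop one predictor at a time while doing so lowers the AIC; return the retained names."""
    X, names = np.asarray(X, dtype=float), list(names)
    current = ols_aic(X, y)
    while names:
        trial_aics = [ols_aic(np.delete(X, j, axis=1), y) for j in range(len(names))]
        best = int(np.argmin(trial_aics))
        if trial_aics[best] >= current:
            break  # no single removal improves the criterion
        current = trial_aics[best]
        X = np.delete(X, best, axis=1)
        del names[best]
    return names
```

Because every predictor starts in the model, a predictor that is only informative jointly with another one (a suppressor) gets a chance to stay, which is the motivation given above for preferring backward over forward selection.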

To ensure a fair comparison, the collected dataset is randomly downsampled before the analyses to guarantee a balanced distribution of songs across musical genres and years. Due to the highly skewed distribution of data over time, only the last three decades (1990–2020) could be considered for this analysis. The means and standard deviations of the whole and the downsampled datasets are mostly comparable across musical genres; there is, however, a larger difference between the standard deviations for pop and country. Unstandardized means and standard deviations of lyrics views and play counts for the whole and the downsampled datasets are shown in Table 2 .
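A minimal sketch of such balanced downsampling is given below, assuming each song is a record with hypothetical `genre` and `year` fields and that a fixed number of songs is drawn per genre and release year; the per-year allocation actually used is not stated in the text.

```python
import random
from collections import defaultdict


def balanced_downsample(songs, genres, years, per_cell, seed=42):
    """Randomly draw `per_cell` songs for every (genre, year) combination."""
    rng = random.Random(seed)  # fixed seed so the subsample is reproducible
    cells = defaultdict(list)
    for song in songs:
        cells[(song["genre"], song["year"])].append(song)
    sample = []
    for genre in genres:
        for year in years:
            members = cells[(genre, year)]
            if len(members) < per_cell:
                # A sparse cell cannot be balanced; the skewed distribution of older
                # releases is why the period considered had to be restricted.
                raise ValueError(f"only {len(members)} songs for {genre}, {year}")
            sample.extend(rng.sample(members, per_cell))
    return sample
```

For example, drawing 80 songs per genre for each of 30 release years would yield 2400 songs per genre, matching the count reported in the text; this split is only one possibility.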

A total of 2400 items, i.e., songs, are considered for each musical genre. Because the predictors, i.e., popularity scores and lyrics descriptors, are measured in very different units, they are z-score normalized, and multivariate outliers are identified by computing the Mahalanobis distance 46 and subsequently removed. Highly correlated descriptors are also discarded until all remaining descriptors have a variance inflation factor below 5. The results of the multinomial logistic regression show that lyrics view count differs across decades for the evaluated genres. Therefore, we investigate the relation between lyrics view count and particular lyrics descriptors by also fitting a multiple linear regression model containing the interaction between lyrics view count and the other predictors. However, the model with the interaction is not significantly better than the baseline model (for all musical genres, analysis of variance yields \(p>.01\) ); thus, only the model without interaction is considered when evaluating the multiple linear regression results for each genre. The statistical models of Analysis 2 are built in the statistical software R 47 version 4.1.2 (2021-11-01). Multinomial logistic regression is carried out using the mlogit package 48 (version 1.1-1), the linear models for each genre are fitted with the nlme package 49 (version 3.1-155), and multiple comparisons across genres are performed with the multcomp package 50 (version 1.4-25). The graphic shown in Fig. 3 is generated with the ggplot2 package 51 (version 3.4.3).
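The Mahalanobis-based outlier removal can be illustrated with the NumPy sketch below. The cutoff is an assumption: the text does not state it, and a common convention is a high quantile of the chi-square distribution with as many degrees of freedom as predictors (e.g., about 16.27 for three predictors at the 0.999 level, obtainable via `scipy.stats.chi2.ppf(0.999, 3)`).

```python
import numpy as np


def mahalanobis_sq(X: np.ndarray) -> np.ndarray:
    """Squared Mahalanobis distance of each row of X from the column means."""
    centered = X - X.mean(axis=0)
    cov_inv = np.linalg.pinv(np.cov(X, rowvar=False))  # pseudo-inverse tolerates near-singular covariances
    return np.einsum("ij,jk,ik->i", centered, cov_inv, centered)


def drop_multivariate_outliers(X: np.ndarray, cutoff: float):
    """Keep rows whose squared distance does not exceed `cutoff`; return (kept rows, boolean mask)."""
    keep = mahalanobis_sq(X) <= cutoff
    return X[keep], keep
```

Unlike a per-descriptor z-score rule, this distance accounts for correlations between predictors, so a song can be flagged for an unusual combination of values even if each value on its own is unremarkable.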

Results and discussion

Analysis 1: evolution of lyrics and descriptor importance

In this analysis, we are particularly interested in the most important, and hence most characteristic, features for the task of per-genre release year regression. The top ten descriptors (i.e., the descriptors with the highest regression coefficients) for each of the five genres in our dataset are given in Table 3 . The \(R^2\) values obtained per genre are 0.0835 for pop, 0.0699 for rock, 0.3340 for rap, 0.2510 for R&B, and 0.1267 for country.

We can identify several descriptors that are among the most important for multiple genres. The number of unique rhyme words is among the top ten descriptors across all five genres analyzed. The number of dots and the repeated line ratio are among the top descriptors for pop, rock, rap, and R&B. Five descriptors are among the top descriptors of three genres each (ratio verses to sections, ratio choruses to sections, average token length, Dugast’s U (modelling the number of token types as a function of the token count), and blank line count). When considering a higher abstraction level (i.e., descriptor categories such as lexical, emotion, structure, rhyme, readability, or diversity), we observe that lexical descriptors are the predominant category across all five genres. Furthermore, at least one rhyme descriptor is among the top descriptors for each genre. Four of the five genres also feature at least one structural descriptor; R&B does not. While for pop and rap the top-10 descriptors comprise lexical, structural, diversity, and rhyme descriptors, rock features a readability descriptor. For country, five categories of descriptors are within the top 10. Interestingly, descriptors measuring the lexical diversity of lyrics are among the top descriptors for rap and R&B (Dugast’s U, and the Measure of Textual Lexical Diversity, MTLD, which captures the average length of sequential token strings that fulfil a type-token-ratio threshold), country (compression ratio, i.e., the ratio of the size of the zlib-compressed lyrics to that of the original, uncompressed lyrics), and pop (Dugast’s U). Emotion descriptors occur among the most important descriptors only for country (positive emotion) and R&B (positive emotions and anger). Readability descriptors are among the top descriptors for rock (Dale-Chall readability score, which is computed based on a list of 3000 words that fourth-graders should be familiar with).
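The lexical diversity measures named in this section, together with the Maas score used in Analysis 2, can be written in a few lines of Python. The sketch below uses the textbook formulas for Dugast’s U and Maas’ a², with N tokens and V distinct types, and the standard MTLD procedure with its commonly used threshold of 0.72. Tokenization and any further normalization used in the study are not specified and are left to the caller.

```python
import math


def dugast_u(tokens):
    """Dugast's U = (log N)^2 / (log N - log V); undefined when every token is distinct."""
    n, v = len(tokens), len(set(tokens))
    return math.log(n) ** 2 / (math.log(n) - math.log(v))


def maas_a2(tokens):
    """Maas' a^2 = (log N - log V) / (log N)^2, i.e., the reciprocal of Dugast's U."""
    n, v = len(tokens), len(set(tokens))
    return (math.log(n) - math.log(v)) / math.log(n) ** 2


def _mtld_pass(tokens, threshold):
    """One directional MTLD pass: count segments whose type-token ratio drops to the threshold."""
    factors, types, count = 0.0, set(), 0
    for tok in tokens:
        count += 1
        types.add(tok)
        if len(types) / count <= threshold:
            factors += 1
            types, count = set(), 0
    if count:  # partial factor for the unfinished final segment
        factors += (1 - len(types) / count) / (1 - threshold)
    return len(tokens) / factors if factors else float("inf")


def mtld(tokens, threshold=0.72):
    """MTLD: mean segment length, averaged over a forward and a backward pass."""
    return (_mtld_pass(tokens, threshold) + _mtld_pass(tokens[::-1], threshold)) / 2
```

As a usage example, a maximally repetitive text such as ten repetitions of one word reaches the threshold every second token, so its MTLD is 2.0.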

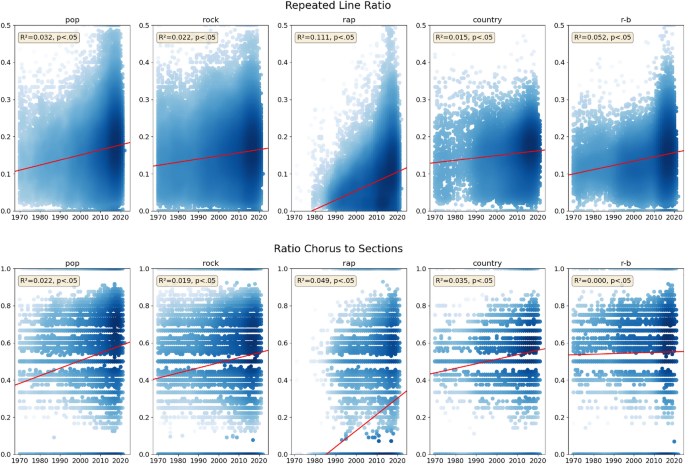

Figure 2. Evolution over time of the descriptors repeated line ratio and ratio of choruses to sections for each genre. The regression lines (in red) show the evolution of descriptor values over time for each descriptor and genre (a Huber’s M robust regression model is trained individually for each descriptor-genre combination). Blue shading denotes the density of data points in a given region. \(R^2\) and p-values are provided in the yellow boxes.

Figure 2 shows the distribution of descriptor values for repeated line ratio and ratio of choruses to sections over time, separately for each of the five genres; a robust regression model is trained for each descriptor-genre combination, and the resulting regression lines are depicted in red. The repeated line ratio increases over time for all five genres, indicating that lyrics are becoming more repetitive. This further substantiates previous findings that lyrics are increasingly becoming simpler 11 and that more repetitive music is perceived as more fluent and may drive market success 52 . The strongest increase can be observed for rap (slope \(m = 0.002516\) ), whereas country shows the weakest ( \(m = 0.000640\) ). The ratio of choruses to sections behaves similarly across genres: its values increase for all five genres. This implies that the structure of lyrics is shifting towards containing more choruses than in the past, in turn contributing to higher repetitiveness of lyrics. The strongest growth in this descriptor is observed for rap ( \(m = 0.008703\) ) and the weakest for R&B ( \(m = 0.000325\) ). The fact that the compression ratio (not shown in the figure) also increases in all genres except R&B further substantiates the trend toward more repetitive lyrics. Another observation is that lyrics seem to become more personal overall: pronoun frequency increases for all genres except country ( \(m = -0.000145\) ). The strongest increase is observed for rap ( \(m = 0.000926\) ), followed by pop ( \(m = 0.000831\) ), while rock ( \(m = 0.000372\) ) and R&B ( \(m = 0.000369\) ) show a moderate increase. Furthermore, our analysis shows that lyrics have become angrier across all genres, with rap showing the most pronounced increase in anger ( \(m = 0.015996\) ). Similarly, the amount of negative emotion conveyed increases across all genres. Again, rap shows the highest increase ( \(m = 0.021701\) ), followed by R&B ( \(m = 0.018663\) ), while country shows the lowest ( \(m = 0.000606\) ). At the same time, we observe a decrease in positive emotions for pop ( \(m = -0.020041\) ), rock ( \(m = -0.012124\) ), country ( \(m = -0.021662\) ), and R&B ( \(m = -0.048552\) ), while rap shows a slight increase ( \(m = 0.000129\) ).

Analysis 2: interplay of lyrics descriptors, view counts, and release year

The second set of analyses first investigates the interplay between lyrics descriptors, release year, and listening as well as lyrics view counts. The multinomial logistic regression fits the data significantly better than the baseline model, i.e., a null model without predictors, indicating an increase in explained variability (likelihood-ratio chi-square of 314.56, \(p<.0001\) ; McFadden pseudo \(R^2\) of 0.01).
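The two fit statistics reported above are directly linked. With \(\ell_{\text{model}}\) and \(\ell_{\text{null}}\) denoting the log-likelihoods of the fitted and the null model, the standard definitions are

\[
\chi^2_{\text{LR}} = 2\,\bigl(\ell_{\text{model}} - \ell_{\text{null}}\bigr), \qquad
R^2_{\text{McF}} = 1 - \frac{\ell_{\text{model}}}{\ell_{\text{null}}} = \frac{\chi^2_{\text{LR}}}{-2\,\ell_{\text{null}}}.
\]

For an intercept-only multinomial model on n songs spread evenly over K genres, \(\ell_{\text{null}} = -n \ln K\). As a rough check, assuming the balanced sample of 12,000 songs and K = 5 (and ignoring the outliers removed beforehand), \(-2\,\ell_{\text{null}} \approx 24{,}000 \ln 5 \approx 38{,}600\), so \(R^2_{\text{McF}} \approx 314.56 / 38{,}600 \approx 0.008\), in line with the reported value of 0.01 after rounding. With this many observations, a highly significant likelihood-ratio statistic can thus coexist with a very small pseudo \(R^2\).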

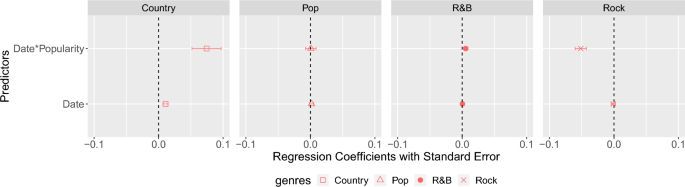

To assess the effect of the predictors, rap (i.e., the genre with the highest average lyrics view count) is used as the reference class of the dependent variable. Our results show that the probability of a song being country or rock rather than rap, given its lyrics view count, varies across decades. As lyrics view count increases, a later release year slightly increases the odds of a song being country rather than rap (odds ratio of 1.07): \(\beta (SE)=0.07(0.02)\) , \(z=3.29\) , \(p=.0009\) . Conversely, as lyrics view count increases, a later release year decreases the odds of a song being rock rather than rap (odds ratio of 0.94): \(\beta (SE)=-0.05(0.01)\) , \(z=-5.89\) , \(p<.0001\) . In other words, compared with rap, the lyrics of older rock songs are generally more popular than those of newer ones, and vice versa for country. This is visualized in Fig. 3 , which shows the estimated effects of the multinomial logistic regression model. The interaction between lyrics view count and release year shows no significant effect for pop and R&B with respect to rap (cf. date * popularity for pop and R&B). For country and rock, in contrast, the estimated regression coefficients are positive and negative, respectively (cf. date * popularity for country and rock). This shows that, compared with rap, lyrics popularity increases over time for country while decreasing for rock. The same analysis is performed with song listening count instead of lyrics view count, i.e., a multinomial logistic regression with genre as the dependent variable and the interaction between listening count and release date as predictors. This analysis shows that the release date does not affect the relationship between listening count and genre, as no significant effects are found.

While track listening counts do not show any effects, lyrics view counts do, suggesting that for some musical genres, fans’ interest in lyrics goes beyond their listening consumption. In other words, while play counts for a given genre might not differ significantly, clear patterns emerge for listeners’ interest in lyrics: rock fans mostly enjoy the lyrics of older songs, whereas country fans are more interested in the lyrics of new songs. However, the small coefficient of determination of the multinomial logistic regression shows that the explanatory power of the model is limited; given the very large sample size, the significant p-values reported might therefore partly result from random noise.
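The odds ratios quoted above follow from exponentiating the coefficients, and the z statistics are Wald statistics (the coefficient divided by its standard error). The small Python sketch below shows these conversions. Because the reported coefficients and standard errors are rounded to two decimals, recomputing z and p from them will not reproduce the reported values exactly.

```python
import math


def odds_ratio(beta: float) -> float:
    """Multiplicative change in the odds per one-unit change of the predictor."""
    return math.exp(beta)


def wald_z(beta: float, se: float) -> float:
    """Wald statistic: coefficient divided by its standard error."""
    return beta / se


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal statistic, 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    # Country vs. rap interaction term as reported (rounded): beta = 0.07
    print(round(odds_ratio(0.07), 2))  # 1.07
```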

Figure 3. Forest plot displaying the estimated multinomial logistic regression coefficients (standardized beta) for the prediction of musical genre. Rap, i.e., the genre with the highest average lyrics view count, is used as the reference class.

Table 4 gives the results of the individual multiple linear regressions performed for each genre. For rap, the predictors explain the largest share of the variance in release year (the dependent variable): \(32\%\) (i.e., \(R^{2}=0.32\) ) of the variance in the release year of rap songs can be explained by the descriptors extracted from the lyrics. This is not surprising, as rap, characterized by the use of semi-spoken rhymes, is a musical style that grew out of practices marked by high-level linguistic competencies, such as competitive verbal games 53 . Indeed, among the evaluated musical genres, rap is the one in which lyrics play the most prominent role. The genre with the second-highest share of variance in release year explained by the lyrics descriptors is R&B ( \(R^{2}=0.20\) ). From a musicological perspective, this might be explained by the relationship between R&B and other musical genres. For instance, R&B was simplified with respect to its lyrics (besides the music) by eliminating adult-related themes and topics 54 . As such, it was a precursor to the development of rock-and-roll, which might explain the higher importance of lyrics in modeling the evolution of R&B compared with rock. Note that although we use R&B as a general musical genre, other subsequently introduced terms related to R&B, such as soul, are also considered under the umbrella of R&B. This is particularly important because we investigate music released in recent decades (1990–2020), considerably later than when the term R&B was originally coined. At the same time, it highlights the importance of historically contextualizing the genres compared, since beyond their intrinsic characteristics (e.g., lyrics having a central role in rap), their heterogeneous nature might also affect the role played by lyrics; in this case, R&B is more heterogeneous than rock (e.g., blues and funk are highly repetitive, while soul has undergone substantial changes and is now lyrically in pop song form). For pop, rock, and country, the share of variance in release year explained by the predictors is lower than for rap and R&B, with \(R^{2}\) values of 0.09 for pop and rock and 0.11 for country. This indicates that, unlike for rap and, to some extent, R&B, lyrics might not be a very meaningful indicator of the development of the other musical genres.

The results show that lyrics view count has a relevant effect in predicting the release years of songs only for R&B and rock. For R&B, there is a positive relationship between release year and lyrics view count: \(\beta = 0.32\) , \(p=.003\) ; cf. lyrics view count for R&B in Table 4 . This indicates that newer R&B songs are more popular than older ones in terms of lyrics views. For rock, in contrast, and as expected from the outcomes of the multinomial logistic regression, a strong negative relationship between release year and lyrics view count is shown: \(\beta = -1.47\) , \(p<.001\) ; cf. lyrics view count for rock in Table 4 . This indicates that the lyrics of older rock songs are more popular than those of recent ones, which can be interpreted from a sociological perspective. Unlike pop, which can be seen as more “commercial” and “ephemeral”, targets a young audience, and whose value is typically measured by record sales, rock has commonly targeted a middle-class audience more interested in tradition and often (ideologically) opposed to commercialism 55 .

Properties of the lyrics related to complexity and readability, i.e., indicators of repetitiveness and of the difficulty of understanding a written text, respectively, seem to exhibit meaningful changes over time for rap and, to a lesser extent, for pop, rock, and R&B. Confirming previous work 11 , the complexity and difficulty of the lyrics seem to decrease over time for some musical genres. Concerning complexity, this is reflected in the positive \(\beta\) for compression ratio (essentially capturing the repetitiveness of lyrics) for rap (cf. \(\beta = 0.70\) in Table 4 ). This indicates that rap lyrics become more repetitive, and therefore simpler, over time. However, the opposite trend is shown for R&B (cf. \(\beta = -0.96\) in Table 4 ), which suggests that simplification over time might depend on the musical genre; indeed, this descriptor is relevant for neither pop, rock, nor country. The decline in lyrics difficulty observed over time for rap is confirmed by the negative \(\beta\) for the Simple Measure of Gobbledygook (SMOG) readability measure (in a sample of 30 sentences, words with three or more syllables are counted and used to compute the final SMOG score). This indicates a decrease in complexity in terms of the lyrics’ readability (cf. \(\beta = -0.57\) in Table 4 ). The increase in readability over time is also confirmed for rock, as shown by the positive slope for the Dale-Chall readability score (cf. \(\beta = 1.18\) in Table 4 ).
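For reference, two of the readability formulas mentioned in this paper (Flesch Reading Ease, named among the descriptors, and SMOG) are sketched below in their standard published form, taking pre-computed counts as input. How words, syllables, and sentences are counted in lyrics, which often lack sentence punctuation, is not specified in the text; the counting step is therefore left out, and the example counts are hypothetical.

```python
import math


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease: higher scores indicate easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def smog_grade(polysyllables: int, sentences: int) -> float:
    """SMOG grade from the number of words with three or more syllables,
    scaled to a 30-sentence sample: higher grades indicate harder text."""
    return 1.0430 * math.sqrt(polysyllables * 30 / sentences) + 3.1291
```

For instance, hypothetical lyrics with 100 words, 5 sentences, and 150 syllables obtain a Flesch Reading Ease of 206.835 - 1.015 · 20 - 84.6 · 1.5 = 59.635.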

As expected, the results also show that lexical descriptors play a more prominent role in rap, i.e., the musical genre for which lyrics are most relevant. Indeed, when the predictors are evaluated block-wise by feature type, lexical descriptors show the highest adjusted \(R^2\) for rap (0.22), followed by R&B (0.13). The block-wise adjusted \(R^2\) values per genre and feature type are as follows. For rap: Complexity (0.04), Readability (0.04), Lexical (0.22), Structure (0.10), Rhyme (0.13), Emotion (0.02); for pop: Readability (0.01), Lexical (0.06), Structure (0.02), Rhyme (0.01), Emotion (0.01); for rock: Readability (0.01), Lexical (0.04), Structure (0.03), Rhyme (0.01); for R&B: Complexity (0.01), Readability (0.01), Lexical (0.13), Structure (0.02), Rhyme (0.02), Emotion (0.04); for country: Readability (0.014), Lexical (0.09), Structure (0.02), Rhyme (0.02), Emotion (0.01). Repeated line ratio is the only descriptor with a meaningful impact for all genres, confirming the results of Analysis 1. Its relationship with release year is positive for all genres (cf. positive \(\beta\) in Table 4 ), which indicates that lyrics become more repetitive over time in all evaluated genres. This trend is confirmed by the negative relationship between release year and the Maas score, a measure of lexical diversity proposed by H.-D. Maas 56 (the score models the type-token ratio, i.e., the ratio of the number of unique terms to the total number of words, on a log scale). This relationship is shown for rap and R&B and indicates that vocabulary richness decreases over time (cf. negative \(\beta\) for Maas in Table 4 ); for country, pop, and rock, the descriptor is not included in the model because it did not contribute significantly. As already mentioned, stepwise backward elimination is used to find the best-fitting model for each musical genre.
The trend toward simplicity over time can also be observed in the structure, which shows a decrease in the number of sections, most prominently for R&B and rock (cf. \(\beta = -0.72\) and \(\beta = -0.79\) , respectively, in Table 4 ), as well as a general increase (except for country) in the ratios of verses to sections and of choruses to sections (cf. positive \(\beta\) for ratio verses to sections and ratio chorus to sections in Table 4 ). Similarly, the results for rhyme-related descriptors further confirm the tendency towards simpler lyrics over time for all musical genres. This is shown particularly by the increase in rhyme percent in rap and R&B (cf. \(\beta = 1.20\) and \(\beta = 0.68\) , respectively) and by a decrease in the number of rhyme words (cf. negative \(\beta\) for all genres), which indicates a decline in the variety of rhymes over time. However, in the block-wise analysis, somewhat higher adjusted \(R^{2}\) values for structure and rhyme are shown only for rap (0.10 and 0.13, respectively), not for the other musical genres.
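The block-wise evaluation can be sketched as fitting one ordinary least squares model per descriptor category and reporting its adjusted \(R^2 = 1 - (1 - R^2)(n - 1)/(n - p - 1)\), with n songs and p descriptors in the block. The Python sketch below is illustrative only: the category-to-column mapping is hypothetical, and the study’s own models were fitted in R.

```python
import numpy as np


def adjusted_r2(X: np.ndarray, y: np.ndarray) -> float:
    """Adjusted R^2 of an OLS fit of y on the columns of X plus an intercept."""
    n, p = X.shape
    A = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    rss = float(np.sum((y - A @ coef) ** 2))
    tss = float(np.sum((y - y.mean()) ** 2))
    # 1 - R^2 equals rss / tss; the (n - 1) / (n - p - 1) factor penalizes larger blocks
    return 1 - (rss / tss) * (n - 1) / (n - p - 1)


def blockwise_adjusted_r2(X, y, blocks):
    """Fit one model per descriptor category; `blocks` maps a category name to column indices."""
    return {name: adjusted_r2(X[:, cols], y) for name, cols in blocks.items()}
```

The penalty matters when comparing categories of different sizes: a block with many weak descriptors does not look better than a small block merely because it has more predictors.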

Concerning emotion descriptors, the musical genre in which these play the most important role is rap, followed by R&B. For R&B, the results show that the content of the lyrics becomes more negative over time, as shown by an increase in concepts related to anger and a decrease in positive emotions (cf. \(\beta = 1.91\) and \(\beta =-0.89\) , respectively, in Table 4 ). For rap, in contrast, there is a general increase over time in the use of emotion-related words, both negative and positive (cf. positive \(\beta\) for all emotion descriptors), which indicates a tendency towards more emotional lyrics. Confirming previous work 13 , pop and country, like R&B, show a tendency toward more negative lyrics over time; for rock, emotion seems to play a negligible role in the evolution of lyrics. As a final note, we emphasize that since both the overall and the block-wise adjusted \(R^{2}\) values are very low, these results should be interpreted cautiously and taken as tendencies rather than strong differences; they could also partly result from non-randomness in the subsampling.

Summary of results for both analyses

Regarding RQ1 (Which trends can we observe concerning pop music lyrics across the last 50 years, drawing on multifaceted lyrics descriptors?), we reach the following conclusion: despite minor contradictory outcomes concerning complexity and readability for rap and R&B, the lexical, structural, and rhyme descriptors show, for all investigated genres and in both analyses, that lyrics are generally becoming simpler over time 11 . This is reflected in a decline in vocabulary richness for specific genres, i.e., rap and R&B, and in a general increase in repetitiveness for all evaluated musical styles. In addition, lyrics seem to become more emotional over time for rap and less positive for R&B, pop, and country. We also observe a trend towards angrier lyrics across all genres except rock. Potential reasons for the trend towards simpler lyrics are discussed by Varnum et al. 11 , who speculate that it might be related to how music is consumed, to technological innovation, or to the fact that music is mostly listened to in the background. As for RQ2 (Which role does the popularity of songs and lyrics play in this scenario?), we conclude that while song listening counts do not show any effects, lyrics view counts do. This suggests that for rap, rock, and country, lyrics play a more pronounced role than for other genres and that listeners’ interest in lyrics goes beyond musical consumption itself.

Limitations

While our analyses resulted in interesting insights, they have certain limitations, which we would like to discuss in the following. Most of these relate to the various challenges pertaining to data acquisition, and the resulting biases in the data we investigated.

The two main data sources for our investigation are last.fm and Genius. Given the nature and history of these platforms, in particular last.fm, the studied LFM-2b dataset is affected by community bias and popularity bias. As for community bias, while last.fm does not release official statistics about its users, research studies conducted on large amounts of publicly available demographic and listening data have shown that last.fm's user base is not representative of the global (or even Western) population of music listeners 28,29. In particular, the last.fm community represents music aficionados who rely on music streaming for everyday music consumption. They are predominantly male and between 20 and 30 years old. The community is also strongly dominated by users from the US, Russia, Germany, and the UK 29, whose music taste does not generalize to the population at large 57. The findings of our analyses, in particular Analysis 2, which investigates user-generated music consumption data, are therefore valid only for this particular subset of music listeners. A further limitation, also directly related to our data sources and particularly to the Genius platform, concerns the genre information used in our analyses. Annotators and editors on the Genius platform may assign one of six high-level genres and an arbitrary number of so-called secondary tags (i.e., subgenres) to each song. While the alignment of genre and subgenre assignments is quality-checked by the community, no genre hierarchy is used to check the validity of these assignments, which can lead to malformed genre assignments.
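To illustrate what a hierarchy-based validity check could look like, the sketch below maps secondary tags to a parent genre and flags tags whose parent contradicts the song's primary genre. The mapping `GENRE_HIERARCHY` is a small, hand-written hypothetical example, not Genius's actual tag vocabulary, and tags it does not cover are simply left unchecked.

```python
# Hypothetical subgenre -> high-level genre mapping, for illustration only.
GENRE_HIERARCHY = {
    "trap": "rap",
    "boom bap": "rap",
    "bluegrass": "country",
    "synth-pop": "pop",
    "punk rock": "rock",
    "neo soul": "r&b",
}


def inconsistent_tags(primary_genre, secondary_tags, hierarchy=GENRE_HIERARCHY):
    """Return secondary tags whose parent genre differs from the primary genre.

    Tags absent from the hierarchy cannot be validated and are skipped.
    """
    primary = primary_genre.lower()
    flagged = []
    for tag in secondary_tags:
        parent = hierarchy.get(tag.lower())
        if parent is not None and parent != primary:
            flagged.append(tag)
    return flagged


print(inconsistent_tags("Rap", ["Trap", "Bluegrass", "Lo-fi"]))  # ['Bluegrass']
```

Such a check only catches contradictions that the hierarchy encodes; tags that legitimately span several genres would require a many-to-many mapping.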

In addition, the last.fm data on listening counts and the Genius data on lyrics view counts are prone to popularity bias. More precisely, for songs released before the emergence of the platforms (2002 for last.fm and 2009 for Genius), these counts underestimate the true frequencies of listening and lyrics viewing. This is partly due to the demographic structure of the platforms' users (see above), but also because a majority of vinyl and cassette (and even some CD) releases have never found their way onto these digital online platforms. This kind of popularity bias in our data might have significantly influenced the trends identified for the 1970s to 2000s. However, only Analysis 2 might have been affected, since popularity estimates are not used in Analysis 1. Even for Analysis 2, popularity values are z-score normalized, which to some extent accounts for this kind of bias. Still, the randomization strategy might have led to a sampling bias in terms of popularity. This might have partly affected the results, possibly introducing a bias for pop and country; the results for these two genres should therefore be interpreted with particular caution.
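The z-score normalization mentioned above rescales each popularity value \(x\) to \(z = (x - \mu)/\sigma\). The text does not specify the reference group for \(\mu\) and \(\sigma\); purely for illustration, the sketch below assumes that values are standardized within groups such as release decades, which is one way normalization can dampen the systematic undercounting of older songs. The function `zscore_by_group` is hypothetical and the view counts are made up.

```python
from collections import defaultdict
from statistics import mean, pstdev


def zscore_by_group(values, groups):
    """Standardize each value against the mean and population std of its group."""
    by_group = defaultdict(list)
    for value, group in zip(values, groups):
        by_group[group].append(value)
    stats = {g: (mean(vs), pstdev(vs)) for g, vs in by_group.items()}

    scores = []
    for value, group in zip(values, groups):
        mu, sigma = stats[group]
        # A group with identical values (sigma == 0) gets z = 0 for every member
        scores.append((value - mu) / sigma if sigma > 0 else 0.0)
    return scores


# Made-up view counts: raw counts for the 2010s dwarf those for the 1980s,
# but within-decade z-scores are on a comparable scale.
views = [10, 20, 30, 1000, 3000]
decades = ["1980s", "1980s", "1980s", "2010s", "2010s"]
print([round(z, 2) for z in zscore_by_group(views, decades)])  # [-1.22, 0.0, 1.22, -1.0, 1.0]
```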

Both limitations, related to demographic bias and popularity bias, could be overcome by resorting to other data sources, notably the often-used Billboard Charts. However, using these data would introduce other distortions, among others a highly US-centric view of the world, a much more limited sample size, and a lower granularity of the popularity figures (only ranks instead of play counts). In addition, Billboard Charts are only indicative of music consumption, not of lyrics viewing, which is a particular focus of this paper.

Another limitation of the work at hand is the restriction to English lyrics. This choice had to be made to ensure a language-coherent sample of songs and, consequently, the comparability of results. While some of the descriptors could have been computed for other languages as well, the different characteristics of languages (e.g., different lexical structures) would make a cross-language comparison of the descriptors meaningless. Also, most of the resources required to compute the readability scores and emotion descriptors are only available for English. We further note that, for assessing the readability of texts, we rely on rather simple metrics. For instance, difficult words are defined as words with three or more syllables. Some of the readability metrics also rely on sentences, which might not always be directly extractable from lyrics. Nevertheless, in future work, we could include more languages and analyze the songs within each language on a limited set of suitable descriptors.
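The readability caveats can be made concrete with a short sketch. It counts syllables with a crude vowel-group heuristic, treats words with three or more syllables as difficult (as described above), and computes the Flesch Reading Ease score, \(206.835 - 1.015\,\frac{\text{words}}{\text{sentences}} - 84.6\,\frac{\text{syllables}}{\text{words}}\). Because lyrics rarely contain sentence punctuation, it assumes that each non-empty line counts as one sentence. The heuristic and the helper names are assumptions; dedicated readability libraries use more careful syllable counting and may define difficult words differently.

```python
import re


def count_syllables(word: str) -> int:
    """Heuristic: count vowel groups, dropping a silent final 'e'."""
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    # "love" -> 1, but keep the syllable in "-le" endings such as "little" -> 2
    if word.endswith("e") and not word.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)


def readability(lyrics: str) -> dict:
    """Difficult-word count and Flesch Reading Ease, treating lines as sentences."""
    lines = [line for line in lyrics.splitlines() if line.strip()]
    words = re.findall(r"[a-z']+", lyrics.lower())
    if not lines or not words:
        return {"difficult_words": 0, "flesch_reading_ease": None}
    syllables = [count_syllables(w) for w in words]
    difficult = sum(1 for s in syllables if s >= 3)
    fre = (206.835
           - 1.015 * (len(words) / len(lines))
           - 84.6 * (sum(syllables) / len(words)))
    return {"difficult_words": difficult, "flesch_reading_ease": fre}


result = readability("beautiful day\nsee you tomorrow")
print(result["difficult_words"], round(result["flesch_reading_ease"], 2))  # 2 52.02
```

Treating every line as a sentence shortens the apparent sentence length considerably, which illustrates why sentence-based metrics are hard to interpret for lyrics.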

Furthermore, we acknowledge the changing record distribution landscape as a further limitation of this work. For instance, the IFPI's Global Music Report 2023 58 provides evidence of the decline of physical sales revenue vs. the steady increase in streaming revenue over the last two decades. This shift has changed not only the number of songs on an album, which was physically restricted on vinyl and CDs, but also the way songs are sequenced 59. On streaming platforms, a song is considered consumed if it is played for at least 30 seconds. Hence, artists aim to start their songs with easily identifiable melodies and lyrics.

Regarding the models employed, we note that they assume that the individual features change linearly over time in each of the analyzed genres. While the change of some of the descriptors has been shown to be linear (for instance, lyrics simplicity (compressibility) 11 or brightness, happiness, or danceability 23), this might not be the case for all of the descriptors we employ in our studies. For instance, concreteness has been shown to decline until the 1990s and then increase 9.
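One way to probe the linearity assumption is to compare a straight-line fit with a quadratic fit of a descriptor against release year, using adjusted \(R^{2}\) so that the extra parameter is penalized. The sketch below does this with `numpy.polyfit`. The helper name is hypothetical, the data in the example are synthetic and merely mimic a decline-then-rise shape such as the one reported for concreteness 9, and this comparison is not part of the analyses reported here.

```python
import numpy as np


def adjusted_r2_poly(years, values, degree):
    """Adjusted R^2 of a polynomial trend of the given degree (hypothetical helper)."""
    x = np.asarray(years, dtype=float)
    y = np.asarray(values, dtype=float)
    x = x - x.mean()                      # center years to keep the fit well conditioned
    coeffs = np.polyfit(x, y, degree)
    fitted = np.polyval(coeffs, x)
    ss_res = float(((y - fitted) ** 2).sum())
    ss_tot = float(((y - y.mean()) ** 2).sum())
    r2 = 1.0 - ss_res / ss_tot
    n = len(y)
    return 1.0 - (1.0 - r2) * (n - 1) / (n - degree - 1)


# Synthetic U-shaped series (not real data): falls until 1990, then rises
years = [1970, 1980, 1990, 2000, 2010, 2020]
values = [(year - 1990) ** 2 for year in years]
linear = adjusted_r2_poly(years, values, degree=1)
quadratic = adjusted_r2_poly(years, values, degree=2)
print(f"linear: {linear:.2f}, quadratic: {quadratic:.2f}")  # linear: 0.15, quadratic: 1.00
```

A linear model still assigns such a series a single positive slope, which would be reported as a steady increase even though the descriptor first declines.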

Our study examines the evolution of song lyrics over five decades and across five genres. From a dataset of 353,320 songs, we extracted lexical, linguistic, structural, rhyme, emotion, and complexity descriptors and conducted two complementary analyses. In essence, we find that lyrics have become simpler over time in multiple respects: vocabulary richness, readability, complexity, and the number of repeated lines. Our results also confirm previous research that found that lyrics have become more negative on the one hand, and more personal on the other. In addition, our experimental outcomes show that listeners' interest in lyrics varies across musical genres and is related to the songs' release year. Notably, rock listeners enjoy lyrics from older songs, while country listeners prefer lyrics from newer songs.
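Compressibility, used by Varnum et al. 11 as a measure of lyrical simplicity, rests on a simple idea: repetitive text compresses well. The sketch below uses Python's standard `zlib` module (DEFLATE, which combines LZ77 with Huffman coding) and reports compressed size divided by raw size, so lower values indicate simpler, more repetitive lyrics. The helper name is hypothetical, and the measure is not necessarily identical to the one used in 11 or to the complexity descriptors of this study.

```python
import zlib


def compression_ratio(lyrics: str) -> float:
    """Compressed size / raw size in bytes; lower means more repetitive text."""
    raw = lyrics.encode("utf-8")
    if not raw:
        return 0.0
    return len(zlib.compress(raw, 9)) / len(raw)   # level 9 = maximum compression


repetitive = "la la la la\n" * 8
varied = "Our study examines the evolution of song lyrics over five decades."
print(compression_ratio(repetitive) < compression_ratio(varied))  # True
```

For very short texts the fixed zlib header and checksum dominate, so the ratio can exceed 1; comparisons are only meaningful between texts of broadly similar length.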

We believe that the role of lyrics has been understudied and that our results can be used to further study and monitor cultural artifacts and shifts in society. For instance, studies on changing sentiment in society and on shifts in the use of emotionally loaded words could be compared with the sentiment expressed in the lyrics consumed by different audiences (by age, gender, country/state/region, educational background, economic status, etc.). From a computational perspective, a deeper understanding of lyrics and their evolution can inform further tasks in music information retrieval and recommender systems. For instance, existing user models could be extended to include users' lyric preferences, allowing them to better capture user preferences and intent and ultimately improving tasks such as personalized music access and recommendation.
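As a hypothetical illustration of extending a user model with lyric preferences, the sketch below summarizes a user's taste as the play-count-weighted mean of per-track lyric descriptor vectors (for instance, standardized repetitiveness and negativity scores) and ranks candidate tracks by cosine similarity to that profile. The function names, the weighting, and the choice of cosine similarity are assumptions, not a method evaluated in this paper.

```python
import numpy as np


def lyric_profile(track_features, play_counts):
    """Play-count-weighted mean of per-track lyric descriptor vectors."""
    features = np.asarray(track_features, dtype=float)   # shape (tracks, descriptors)
    weights = np.asarray(play_counts, dtype=float)
    weights = weights / weights.sum()
    return weights @ features


def rank_by_lyric_similarity(profile, candidate_features):
    """Indices of candidates sorted by descending cosine similarity to the profile."""
    candidates = np.asarray(candidate_features, dtype=float)
    norms = np.linalg.norm(candidates, axis=1) * np.linalg.norm(profile)
    sims = candidates @ profile / np.where(norms == 0, 1.0, norms)  # avoid division by zero
    return np.argsort(-sims)


# Toy descriptors: [repetitiveness, negativity] for two tracks a user has played
profile = lyric_profile([[1.0, 0.0], [0.0, 1.0]], play_counts=[3, 1])
print(profile)                                                       # [0.75 0.25]
print(rank_by_lyric_similarity(profile, [[0, 1], [1, 0], [1, 1]]))  # [1 2 0]
```

In practice such lyric scores would be combined with the audio- and interaction-based signals an existing recommender already uses, rather than replacing them.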

Data availability

The datasets generated and analyzed during the current study are available on Zenodo: https://doi.org/10.5281/zenodo.7740045 . The source code utilized for our analyses is available at https://github.com/MaximilianMayerl/CorrelatesOfSongLyrics .

Change history

22 May 2024.

A Correction to this paper has been published: https://doi.org/10.1038/s41598-024-62519-9

Donald, R., Kreutz, G., Mitchell, L. & MacDonald, R. What is music health and wellbeing and why is it important? In Music, Health, and Wellbeing 3–11 (Oxford University Press, 2012).

Schäfer, T., Sedlmeier, P., Städtler, C. & Huron, D. The psychological functions of music listening. Front. Psychol. 4, 1–34. https://doi.org/10.3389/fpsyg.2013.00511 (2013).

Lonsdale, A. J. & North, A. C. Why do we listen to music? A uses and gratifications analysis. Br. J. Psychol. 102, 108–134. https://doi.org/10.1348/000712610x506831 (2011).

Gross, J. Emotion regulation: Conceptual and empirical foundations. In Handbook of Emotion Regulation 2nd edn (ed. Gross, J.) 1–19 (The Guilford Press, 2007).

Demetriou, A. M., Jansson, A., Kumar, A. & Bittner, R. M. Vocals in music matter: The relevance of vocals in the minds of listeners. In Proceedings of the 19th International Society for Music Information Retrieval Conference, ISMIR 514–520 (ISMIR, 2018).

Ali, S. O. & Peynircioğlu, Z. F. Songs and emotions: Are lyrics and melodies equal partners? Psychol. Music 34, 511–534 (2006).

Brattico, E. et al. A functional MRI study of happy and sad emotions in music with and without lyrics. Front. Psychol. 2, 308 (2011).

Moi, C. M. F. Rock poetry: The literature our students listen to. J. Imagin. Lang. Learn. 2, 56–59 (1994).

Choi, K. & Downie, J. S. A trend analysis on concreteness of popular song lyrics. In 6th International Conference on Digital Libraries for Musicology 43–52 (ACM, 2019). https://doi.org/10.1145/3358664.3358673.

The Nobel Prize in Literature 2016 – Bob Dylan. https://www.nobelprize.org/prizes/literature/2016/dylan/facts/ (2016).

Varnum, M. E. W., Krems, J. A., Morris, C., Wormley, A. & Grossmann, I. Why are song lyrics becoming simpler? A time series analysis of lyrical complexity in six decades of American popular music. PLoS ONE 16, e0244576. https://doi.org/10.1371/journal.pone.0244576 (2021).

Lambert, B. et al. The pace of modern culture. Nat. Hum. Behav. 4, 352–360. https://doi.org/10.1038/s41562-019-0802-4 (2020).

Brand, C. O., Acerbi, A. & Mesoudi, A. Cultural evolution of emotional expression in 50 years of song lyrics. Evolut. Hum. Sci. 1, e11. https://doi.org/10.1017/ehs.2019.11 (2019).

Eastman, J. T. & Pettijohn, T. F. Gone country: An investigation of Billboard country songs of the year across social and economic conditions in the United States. Psychol. Pop. Media Cult. 4, 155–171. https://doi.org/10.1037/ppm0000019 (2015).

Pettijohn, T. F. & Sacco, D. F. The language of lyrics. J. Lang. Soc. Psychol. 28, 297–311. https://doi.org/10.1177/0261927x09335259 (2009).

Schedl, M. Genre differences of song lyrics and artist wikis: An analysis of popularity, length, repetitiveness, and readability. In The World Wide Web Conference, WWW '19 3201–3207 (ACM, 2019). https://doi.org/10.1145/3308558.3313604.

Dodds, P. S. & Danforth, C. M. Measuring the happiness of large-scale written expression: Songs, blogs, and presidents. J. Happiness Stud. 11, 441–456. https://doi.org/10.1007/s10902-009-9150-9 (2009).

DeWall, C. N., Pond, R. S., Campbell, W. K. & Twenge, J. M. Tuning in to psychological change: Linguistic markers of psychological traits and emotions over time in popular U.S. song lyrics. Psychol. Aesthet. Creativity Arts 5, 200–207. https://doi.org/10.1037/a0023195 (2011).

Berger, J. & Packard, G. Are atypical things more popular? Psychol. Sci. 29, 1178–1184. https://doi.org/10.1177/0956797618759465 (2018).

Kim, J., Demetriou, A. M., Manolios, S., Tavella, M. S. & Liem, C. C. S. Butter lyrics over hominy grit: Comparing audio and psychology-based text features in MIR tasks. In Proceedings of the 21st International Society for Music Information Retrieval Conference, ISMIR 861–868 (2020).

Napier, K. & Shamir, L. Quantitative sentiment analysis of lyrics in popular music. J. Pop. Music Stud. 30, 161–176 (2018).

Christenson, P. G., de Haan-Rietdijk, S., Roberts, D. F. & ter Bogt, T. F. What has America been singing about? Trends in themes in the US top-40 songs: 1960–2010. Psychol. Music 47, 194–212 (2019).

Interiano, M. et al. Musical trends and predictability of success in contemporary songs in and out of the top charts. R. Soc. Open Sci. 5, 171274. https://doi.org/10.1098/rsos.171274 (2018).

Schedl, M. The LFM-1b dataset for music retrieval and recommendation. In Proceedings of the 2016 ACM on International Conference on Multimedia Retrieval (ACM, 2016). https://doi.org/10.1145/2911996.2912004.

Vigliensoni, G. & Fujinaga, I. The music listening histories dataset. In Proceedings of the 18th International Society for Music Information Retrieval Conference, ISMIR 96–102 (2017).

Huang, J. et al. Taxonomy-aware multi-hop reasoning networks for sequential recommendation. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, WSDM ’19 573–581 (ACM, 2019). https://doi.org/10.1145/3289600.3290972 .

Huang, J., Zhao, W. X., Dou, H., Wen, J.-R. & Chang, E. Y. Improving sequential recommendation with knowledge-enhanced memory networks. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval (2018).

Schedl, M. et al. LFM-2b: A dataset of enriched music listening events for recommender systems research and fairness analysis. In ACM SIGIR Conference on Human Information Interaction and Retrieval 337–341 (2022).

Schedl, M. Investigating country-specific music preferences and music recommendation algorithms with the LFM-1b dataset. Int. J. Multim. Inf. Retr. 6, 71–84. https://doi.org/10.1007/s13735-017-0118-y (2017).