Multivariate analysis: an overview

Posted on 9th September 2021 by Vighnesh D

Data analysis is one of the most useful tools when one tries to understand the vast amount of information presented to them and synthesise evidence from it. There are usually multiple factors influencing a phenomenon.

Of these, some can be observed, documented and interpreted thoroughly while others cannot. For example, in order to estimate the burden of a disease in society, there may be many factors which can be readily recorded, and many others which are unreliable and therefore require proper scrutiny. Factors like incidence, age distribution, sex distribution and financial loss owing to the disease can be accounted for more easily than contact tracing, prevalence and institutional support for the same. Therefore, it is of paramount importance that data is collected and interpreted thoroughly in order to avoid common pitfalls.

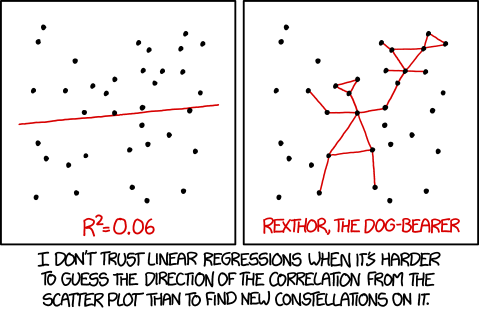

Image from: https://imgs.xkcd.com/comics/useful_geometry_formulas.png under Creative Commons License 2.5 Randall Munroe. xkcd.com.

Why does it sound so important?

Data collection and analysis are emphasised in academia because their findings inform the policy of a governing body; the implications that follow are therefore the direct product of the information that is fed into the system.

Introduction

In this blog, we will discuss types of data analysis in general and multivariate analysis in particular. It aims to introduce the concept to investigators inclined towards this discipline by attempting to reduce the complexity around the subject.

Analysis of data based on the types of variables in consideration is broadly divided into three categories:

- Univariate analysis: The simplest of all data analysis models, univariate analysis considers only one variable in calculation. Thus, although it is quite simple in application, it has limited use in analysing big data. E.g. incidence of a disease.

- Bivariate analysis: As the name suggests, bivariate analysis takes two variables into consideration. It has a slightly expanded area of application but is nevertheless limited when it comes to large sets of data. E.g. incidence of a disease and the season of the year.

- Multivariate analysis: Multivariate analysis takes a whole host of variables into consideration. This makes it a complicated as well as essential tool. The greatest virtue of such a model is that it takes as many factors into consideration as possible. This can substantially reduce bias and give a result closer to reality. For an example, refer to the disease-burden factors discussed at the start of this article.
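The three categories above can be sketched in a few lines of Python. This is only an illustration: the monthly figures and the variable names (`incidence`, `temperature`, `rainfall`) are invented for the example, and the `pearson` helper is a plain textbook correlation coefficient written with the standard library.

```python
from math import sqrt
from statistics import mean

# Hypothetical monthly records (made-up numbers for illustration only).
incidence = [12, 15, 22, 30, 28, 18]
temperature = [10, 14, 19, 25, 24, 16]
rainfall = [80, 70, 55, 30, 35, 60]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Univariate: summarise a single variable.
mean_incidence = mean(incidence)

# Bivariate: relate two variables to each other.
r_incidence_temperature = pearson(incidence, temperature)

# Multivariate: look at every pairwise relationship at once.
variables = {
    "incidence": incidence,
    "temperature": temperature,
    "rainfall": rainfall,
}
correlation_matrix = {
    a: {b: pearson(xs, ys) for b, ys in variables.items()}
    for a, xs in variables.items()
}
```

A correlation matrix is only the simplest multivariate summary; the methods listed later in this article build on the same idea of studying several variables together.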

Multivariate analysis is defined as:

The statistical study of data where multiple measurements are made on each experimental unit and where the relationships among multivariate measurements and their structure are important

Multivariate statistical methods incorporate several techniques depending on the situation and the question in focus. Some of these methods are listed below:

- Regression analysis: Used to determine the relationship between a dependent variable and one or more independent variables.

- Analysis of Variance (ANOVA): Used to compare groups of data by analyzing the differences between their means.

- Interdependent analysis: Used to determine the relationship between a set of variables among themselves.

- Discriminant analysis: Used to classify observations into two or more distinct categories.

- Classification and cluster analysis: Used to find similarity in a group of observations.

- Principal component analysis: Used to interpret data in its simplest form by introducing new uncorrelated variables.

- Factor analysis: Similar to principal component analysis, this too is used to crunch big data into small, interpretable forms.

- Canonical correlation analysis: Perhaps one of the most complex models among all of the above, canonical correlation attempts to interpret data by analysing the relationships between two sets of variables through their cross-covariance matrix.

ANOVA remains one of the most widely used statistical models in academia. Of the several types of ANOVA models, there is one subtype that is frequently used because of the factors involved in the studies. Traditionally, it has found its application in behavioural research, e.g. psychology, psychiatry and allied disciplines. This model is called the Multivariate Analysis of Variance (MANOVA). It is widely described as the multivariate analogue of ANOVA: where ANOVA deals with a single dependent variable, MANOVA handles several at once.

Image from: https://imgs.xkcd.com/comics/t_distribution.png under Creative Commons License 2.5 Randall Munroe. xkcd.com.

Interpretation of results

Interpretation of results is probably the most difficult part in the technique. The relevant results are generally summarized in a table with an associated text. Appropriate information must be highlighted regarding:

- Multivariate test statistics used

- Degrees of freedom

- Appropriate test statistics used

- Calculated p-value (p < x)

Reliability and validity of the test are the most important determining factors in such techniques.

Applications

Multivariate analysis is used in several disciplines. One of its most distinguishing features is that it can be used in parametric as well as non-parametric tests.

Quick question: What are parametric and non-parametric tests?

- Parametric tests: Tests which assume that the data follow a particular distribution described by a fixed set of parameters, e.g. a normal distribution with a given mean and variance.

- Non-parametric tests: Tests which do not make assumptions with respect to the distribution of the data. For this reason, they are also called distribution-free tests.
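One way to see the difference is to compare a statistic that works on the raw values with one that works only on their ranks. The sketch below is an assumption-laden illustration, not a full hypothesis test: it contrasts the Pearson correlation (whose usual significance test assumes normally distributed data) with the rank-based Spearman correlation, using made-up `dose` and `response` values.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def ranks(values):
    """Rank values from 1 to n (assumes no ties, to keep the sketch short)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(xs, ys):
    """Spearman correlation: the Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Hypothetical data with a monotonic but strongly non-linear relationship.
dose = [1, 2, 3, 4, 5, 6]
response = [d ** 3 for d in dose]

value_based_r = pearson(dose, response)   # depends on the actual values
rank_based_r = spearman(dose, response)   # depends only on the ordering
```

Because the rank-based statistic ignores the shape of the distribution, it reports a perfect monotonic relationship here, while the value-based statistic falls short of 1.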

Uses of Multivariate analysis: Multivariate analyses are used principally for four reasons, i.e. to see patterns of data, to make clear comparisons, to discard unwanted information and to study multiple factors at once. Applications of multivariate analysis are found in almost all the disciplines which make up the bulk of policy-making, e.g. economics, healthcare, pharmaceutical industries, applied sciences, sociology, and so on. Multivariate analysis has particularly enjoyed a traditional stronghold in the field of behavioural sciences like psychology, psychiatry and allied fields because of the complex nature of the discipline.

Multivariate analysis is one of the most useful methods to determine relationships and analyse patterns among large sets of data. It is particularly effective in minimizing bias if a structured study design is employed. However, the complexity of the technique makes it a less sought-after model for novice research enthusiasts. Therefore, although the process of designing the study and interpreting the results is a tedious one, the techniques stand out in finding the relationships in complex situations.



I got good information on multivariate data analysis and on the advantages and patterns of using multivariate analysis.

Great summary. I found this very useful for starters

Thank you so much for the discussion on multivariate design in research. However, I want to know more about multiple regression analysis. Hope for more learnings to gain from you.

Thank you for letting the author know this was useful, and I will see if there are any students wanting to blog about multiple regression analysis next!

When you want to know what contributed to an outcome what study is done?

Dear Philip, Thank you for bringing this to our notice. Your input regarding the discussion is highly appreciated. However, since this particular blog was meant to be an overview, I consciously avoided the nuances to prevent complicated explanations at an early stage. I am planning to expand on the matter in subsequent blogs and will keep your suggestion in mind while drafting for the same. Many thanks, Vighnesh.

Sorry, I don’t want to be pedantic, but shouldn’t we differentiate between ‘multivariate’ and ‘multivariable’ regression? https://stats.stackexchange.com/questions/447455/multivariable-vs-multivariate-regression https://www.ajgponline.org/article/S1064-7481(18)30579-7/fulltext



An Introduction to Multivariate Analysis

Data analytics is all about looking at various factors to see how they impact certain situations and outcomes. When dealing with data that contains more than two variables, you’ll use multivariate analysis.

Multivariate analysis isn’t just one specific method—rather, it encompasses a whole range of statistical techniques. These techniques allow you to gain a deeper understanding of your data in relation to specific business or real-world scenarios.

So, if you’re an aspiring data analyst or data scientist, multivariate analysis is an important concept to get to grips with.

In this post, we’ll provide a complete introduction to multivariate analysis. We’ll delve deeper into defining what multivariate analysis actually is, and we’ll introduce some key techniques you can use when analyzing your data. We’ll also give some examples of multivariate analysis in action.

This post covers the following sections:

- What is multivariate analysis?

- Multivariate data analysis techniques (with examples)

- What are the advantages of multivariate analysis?

- Key takeaways and further reading

Ready to demystify multivariate analysis? Let’s do it.

1. What is multivariate analysis?

In data analytics, we look at different variables (or factors) and how they might impact certain situations or outcomes.

For example, in marketing, you might look at how the variable “money spent on advertising” impacts the variable “number of sales.” In the healthcare sector, you might want to explore whether there’s a correlation between “weekly hours of exercise” and “cholesterol level.” This helps us to understand why certain outcomes occur, which in turn allows us to make informed predictions and decisions for the future.

There are three categories of analysis to be aware of:

- Univariate analysis, which looks at just one variable

- Bivariate analysis, which analyzes two variables

- Multivariate analysis, which looks at more than two variables

As you can see, multivariate analysis encompasses all statistical techniques that are used to analyze more than two variables at once. The aim is to find patterns and correlations between several variables simultaneously—allowing for a much deeper, more complex understanding of a given scenario than you’ll get with bivariate analysis.

An example of multivariate analysis

Let’s imagine you’re interested in the relationship between a person’s social media habits and their self-esteem. You could carry out a bivariate analysis, comparing the following two variables:

- How many hours a day a person spends on Instagram

- Their self-esteem score (measured using a self-esteem scale)

You may or may not find a relationship between the two variables; however, you know that, in reality, self-esteem is a complex concept. It’s likely impacted by many different factors—not just how many hours a person spends on Instagram. You might also want to consider factors such as age, employment status, how often a person exercises, and relationship status (for example). In order to deduce the extent to which each of these variables correlates with self-esteem, and with each other, you’d need to run a multivariate analysis.

So we know that multivariate analysis is used when you want to explore more than two variables at once. Now let’s consider some of the different techniques you might use to do this.

2. Multivariate data analysis techniques and examples

There are many different techniques for multivariate analysis, and they can be divided into two categories:

- Dependence techniques

- Interdependence techniques

So what’s the difference? Let’s take a look.

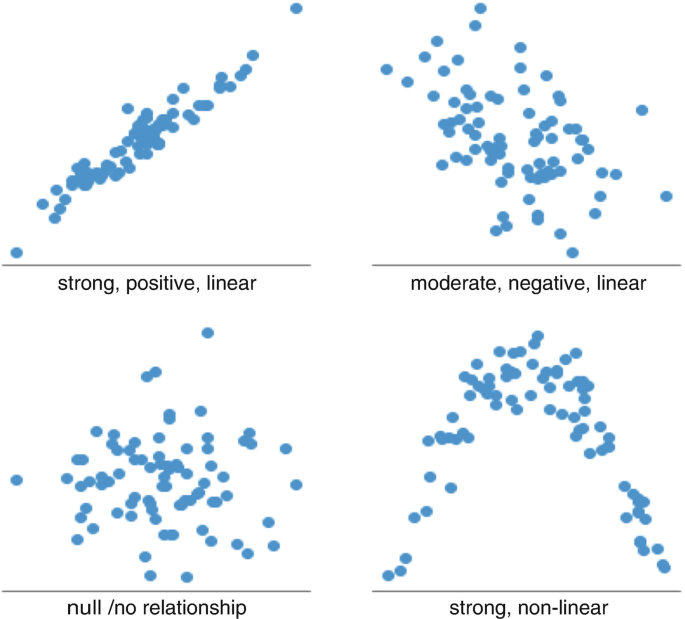

Multivariate analysis techniques: Dependence vs. interdependence

When we use the terms “dependence” and “interdependence,” we’re referring to different types of relationships within the data. To give a brief explanation:

Dependence methods

Dependence methods are used when one or some of the variables are dependent on others. Dependence looks at cause and effect; in other words, can the values of two or more independent variables be used to explain, describe, or predict the value of another, dependent variable? To give a simple example, the dependent variable of “weight” might be predicted by independent variables such as “height” and “age.”

In machine learning, dependence techniques are used to build predictive models. The analyst enters input data into the model, specifying which variables are independent and which ones are dependent—in other words, which variables they want the model to predict, and which variables they want the model to use to make those predictions.

Interdependence methods

Interdependence methods are used to understand the structural makeup and underlying patterns within a dataset. In this case, no variables are dependent on others, so you’re not looking for causal relationships. Rather, interdependence methods seek to give meaning to a set of variables or to group them together in meaningful ways.

So: One is about the effect of certain variables on others, while the other is all about the structure of the dataset.

With that in mind, let’s consider some useful multivariate analysis techniques. We’ll look at:

- Multiple linear regression

- Multiple logistic regression

- Multivariate analysis of variance (MANOVA)

- Factor analysis

- Cluster analysis

Multiple linear regression

Multiple linear regression is a dependence method which looks at the relationship between one dependent variable and two or more independent variables. A multiple regression model will tell you the extent to which each independent variable has a linear relationship with the dependent variable. This is useful as it helps you to understand which factors are likely to influence a certain outcome, allowing you to estimate future outcomes.

Example of multiple regression:

As a data analyst, you could use multiple regression to predict crop growth. In this example, crop growth is your dependent variable and you want to see how different factors affect it. Your independent variables could be rainfall, temperature, amount of sunlight, and amount of fertilizer added to the soil. A multiple regression model would estimate how much crop growth changes with each independent variable while the others are held constant, and how much of the variance in crop growth the variables account for together.
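To make the crop example concrete, here is a minimal sketch of a least-squares fit using only the Python standard library. Everything in it is an assumption for illustration: the plots, the two predictors (rainfall and fertilizer), and the rule used to generate `growth`. In practice you would use a statistics library rather than hand-rolled linear algebra; the helper names `solve_linear_system` and `fit_multiple_regression` are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix so that row operations apply to both sides.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        known = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - known) / m[r][r]
    return x


def fit_multiple_regression(rows, y):
    """Least-squares coefficients [intercept, b1, b2, ...] via the normal equations."""
    design = [[1.0] + list(row) for row in rows]
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * target for d, target in zip(design, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical plots: (rainfall in mm, fertilizer in kg).
plots = [(50, 1), (60, 3), (70, 2), (80, 5), (90, 4), (100, 6)]
# Growth generated from a known rule so the fitted values can be checked.
growth = [2.0 + 0.1 * rain + 1.5 * fert for rain, fert in plots]

intercept, b_rain, b_fert = fit_multiple_regression(plots, growth)
```

Each coefficient describes the expected change in growth for a one-unit change in that predictor with the other held fixed, which is the sense in which the model separates the contributions of the independent variables.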

Source: Public domain via Wikimedia Commons

Multiple logistic regression

Logistic regression analysis is used to calculate (and predict) the probability of a binary event occurring. A binary outcome is one where there are only two possible outcomes; either the event occurs (1) or it doesn’t (0). So, based on a set of independent variables, logistic regression can predict how likely it is that a certain scenario will arise. It is also used for classification.

Example of logistic regression:

Let’s imagine you work as an analyst within the insurance sector and you need to predict how likely it is that each potential customer will make a claim. You might enter a range of independent variables into your model, such as age, whether or not they have a serious health condition, their occupation, and so on. Using these variables, a logistic regression analysis will calculate the probability of the event (making a claim) occurring. Another oft-cited example is the filters used to classify email as “spam” or “not spam.”
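Here is a rough sketch of how a fitted logistic model turns inputs into a probability. The coefficients below are invented purely for illustration; a real model would estimate them from historical claims data, and the function name `predict_claim_probability` is hypothetical.

```python
from math import exp


def predict_claim_probability(age, has_condition, coefficients):
    """Logistic model: probability = 1 / (1 + exp(-linear_predictor))."""
    linear_predictor = (
        coefficients["intercept"]
        + coefficients["age"] * age
        + coefficients["has_condition"] * has_condition
    )
    return 1.0 / (1.0 + exp(-linear_predictor))


# Made-up coefficients; a real analysis would estimate these from data.
coefficients = {"intercept": -4.0, "age": 0.05, "has_condition": 1.2}

p_younger_healthy = predict_claim_probability(30, 0, coefficients)
p_older_condition = predict_claim_probability(60, 1, coefficients)

# Classification: turn the probability into a yes/no label with a cutoff.
will_claim = p_older_condition >= 0.5
```

The 0.5 cutoff is a common default rather than a rule; in a setting like insurance you might choose a different threshold depending on the cost of each kind of error.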

Multivariate analysis of variance (MANOVA)

Multivariate analysis of variance (MANOVA) is used to measure the effect of multiple independent variables on two or more dependent variables. With MANOVA, it’s important to note that the independent variables are categorical, while the dependent variables are metric in nature. A categorical variable is a variable whose values fall into distinct categories; for example, the variable “employment status” could be categorized into certain units, such as “employed full-time,” “employed part-time,” “unemployed,” and so on. A metric variable is measured quantitatively and takes on a numerical value.

In MANOVA analysis, you’re looking at various combinations of the independent variables to compare how they differ in their effects on the dependent variables.

Example of MANOVA:

Let’s imagine you work for an engineering company that is on a mission to build a super-fast, eco-friendly rocket. You could use MANOVA to measure the effect that various design combinations have on both the speed of the rocket and the amount of carbon dioxide it emits. In this scenario, your categorical independent variables could be:

- Engine type, categorized as E1, E2, or E3

- Material used for the rocket exterior, categorized as M1, M2, or M3

- Type of fuel used to power the rocket, categorized as F1, F2, or F3

Your metric dependent variables are speed in kilometers per hour, and carbon dioxide measured in parts per million. Using MANOVA, you’d test different combinations (e.g. E1, M1, and F1 vs. E1, M2, and F1, vs. E1, M3, and F1, and so on) to calculate the effect of all the independent variables. This should help you to find the optimal design solution for your rocket.
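The sketch below shows only the bookkeeping behind such a comparison, not the MANOVA test itself: it enumerates the design combinations and computes one mean vector (mean speed, mean CO2) per tested design. The test runs and their numbers are invented for illustration.

```python
from collections import defaultdict
from itertools import product
from statistics import mean

engines = ["E1", "E2", "E3"]
materials = ["M1", "M2", "M3"]
fuels = ["F1", "F2", "F3"]

# Every design combination that a full comparison would cover.
designs = list(product(engines, materials, fuels))

# Hypothetical test runs: (engine, material, fuel, speed_kmh, co2_ppm).
runs = [
    ("E1", "M1", "F1", 27000, 410),
    ("E1", "M1", "F1", 27400, 420),
    ("E1", "M2", "F1", 28100, 450),
    ("E1", "M2", "F1", 28300, 430),
]

# Group the two dependent variables by design combination.
grouped = defaultdict(list)
for engine, material, fuel, speed, co2 in runs:
    grouped[(engine, material, fuel)].append((speed, co2))

# One mean vector (mean speed, mean CO2) per design. MANOVA asks whether
# these vectors differ by more than chance alone would explain.
mean_vectors = {
    design: (mean(s for s, _ in obs), mean(c for _, c in obs))
    for design, obs in grouped.items()
}
```

A statistics package would take these grouped observations and compute a multivariate test statistic; the point here is simply that both dependent variables are kept together for each design.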

Factor analysis

Factor analysis is an interdependence technique which seeks to reduce the number of variables in a dataset. If you have too many variables, it can be difficult to find patterns in your data. At the same time, models created using datasets with too many variables are susceptible to overfitting. Overfitting is a modeling error that occurs when a model fits too closely and specifically to a certain dataset, making it less generalizable to future datasets, and thus potentially less accurate in the predictions it makes.

Factor analysis works by detecting sets of variables which correlate highly with each other. These variables may then be condensed into a single variable. Data analysts will often carry out factor analysis to prepare the data for subsequent analyses.

Factor analysis example:

Let’s imagine you have a dataset containing data pertaining to a person’s income, education level, and occupation. You might find a high degree of correlation among each of these variables, and thus reduce them to the single factor “socioeconomic status.” You might also have data on how happy they were with customer service, how much they like a certain product, and how likely they are to recommend the product to a friend. Each of these variables could be grouped into the single factor “customer satisfaction” (as long as they are found to correlate strongly with one another). Even though you’ve reduced several variables to just one factor, you lose relatively little information, provided the variables really do correlate strongly; the factor then captures most of what the individual variables had in common. With your “streamlined” dataset, you’re now ready to carry out further analyses.
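As a very rough stand-in for the idea, the sketch below checks that three survey items correlate strongly and then combines them into a single standardised score. Note the assumption: this is a simple composite score, not a real factor analysis (which would estimate loadings for each variable), and the survey answers are made up.

```python
from math import sqrt
from statistics import mean, pstdev


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def z_scores(values):
    """Standardise values to mean 0 and standard deviation 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


# Hypothetical survey answers on a 1-10 scale, one entry per customer.
service = [9, 7, 3, 8, 2, 6]
product_liking = [8, 7, 2, 9, 3, 5]
recommend = [9, 6, 3, 8, 1, 6]
items = [service, product_liking, recommend]

# Step 1: check that the items correlate strongly with one another.
pairwise = [
    pearson(items[i], items[j])
    for i in range(len(items))
    for j in range(i + 1, len(items))
]

# Step 2: standardise each item and average them into one score per customer.
standardised = [z_scores(item) for item in items]
satisfaction = [mean(customer) for customer in zip(*standardised)]
```

If the pairwise correlations were weak, collapsing the items into one score would throw away real differences between them, which is why the correlation check comes first.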

Cluster analysis

Another interdependence technique, cluster analysis is used to group similar items within a dataset into clusters.

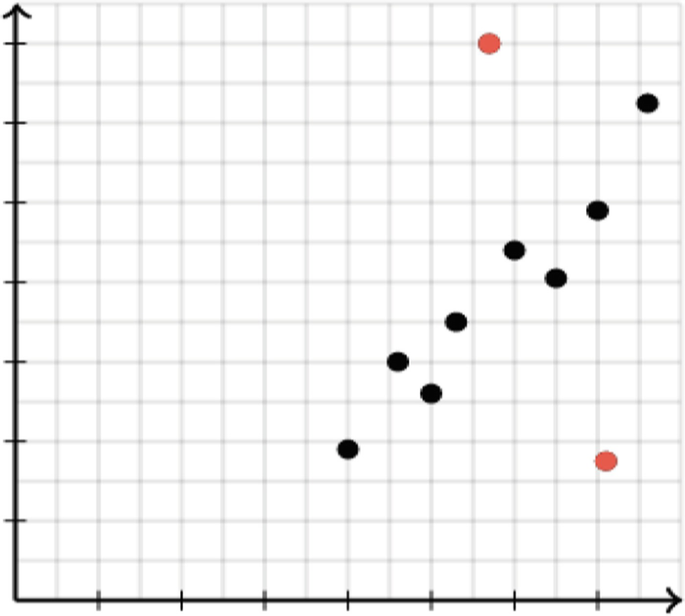

When grouping data into clusters, the aim is for the data points in one cluster to be more similar to each other than they are to data points in other clusters. This is measured in terms of intracluster and intercluster distance. Intracluster distance looks at the distance between data points within one cluster. This should be small. Intercluster distance looks at the distance between data points in different clusters. This should ideally be large. Cluster analysis helps you to understand how data in your sample is distributed, and to find patterns.
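The two distances can be computed directly. In this sketch the clusters are already given (two made-up groups of 2-D points), and "distance" is taken to be the average Euclidean distance between pairs of points, which is one of several reasonable definitions.

```python
from itertools import combinations, product
from math import dist
from statistics import mean

# Two hypothetical clusters of 2-D points.
cluster_a = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5)]
cluster_b = [(8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]


def mean_intracluster_distance(points):
    """Average distance between all pairs of points within one cluster."""
    return mean(dist(p, q) for p, q in combinations(points, 2))


def mean_intercluster_distance(first, second):
    """Average distance between points taken from two different clusters."""
    return mean(dist(p, q) for p, q in product(first, second))


intra_a = mean_intracluster_distance(cluster_a)
intra_b = mean_intracluster_distance(cluster_b)
inter_ab = mean_intercluster_distance(cluster_a, cluster_b)

# A good clustering has small intracluster and large intercluster distances.
well_separated = max(intra_a, intra_b) < inter_ab
```

A clustering algorithm such as k-means searches for the grouping that makes this contrast as strong as possible; here the grouping is fixed and only the quality check is shown.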


Cluster analysis example:

A prime example of cluster analysis is audience segmentation. If you were working in marketing, you might use cluster analysis to define different customer groups which could benefit from more targeted campaigns. As a healthcare analyst, you might use cluster analysis to explore whether certain lifestyle factors or geographical locations are associated with higher or lower cases of certain illnesses. Because it’s an interdependence technique, cluster analysis is often carried out in the early stages of data analysis.

Source: Chire, CC BY-SA 3.0 via Wikimedia Commons

More multivariate analysis techniques

This is just a handful of multivariate analysis techniques used by data analysts and data scientists to understand complex datasets. If you’re keen to explore further, check out discriminant analysis, conjoint analysis, canonical correlation analysis, structural equation modeling, and multidimensional scaling.

3. What are the advantages of multivariate analysis?

The one major advantage of multivariate analysis is the depth of insight it provides. In exploring multiple variables, you’re painting a much more detailed picture of what’s occurring—and, as a result, the insights you uncover are much more applicable to the real world.

Remember our self-esteem example back in section one? We could carry out a bivariate analysis, looking at the relationship between self-esteem and just one other factor; and, if we found a strong correlation between the two variables, we might be inclined to conclude that this particular variable is a strong determinant of self-esteem. However, in reality, we know that self-esteem can’t be attributed to one single factor. It’s a complex concept; in order to create a model that we could really trust to be accurate, we’d need to take many more factors into account. That’s where multivariate analysis really shines; it allows us to analyze many different factors and get closer to the reality of a given situation.

4. Key takeaways and further reading

In this post, we’ve learned that multivariate analysis is used to analyze data containing more than two variables. To recap, here are some key takeaways:

- The aim of multivariate analysis is to find patterns and correlations between several variables simultaneously

- Multivariate analysis is especially useful for analyzing complex datasets, allowing you to gain a deeper understanding of your data and how it relates to real-world scenarios

- There are two types of multivariate analysis techniques: Dependence techniques, which look at cause-and-effect relationships between variables, and interdependence techniques, which explore the structure of a dataset

- Key multivariate analysis techniques include multiple linear regression, multiple logistic regression, MANOVA, factor analysis, and cluster analysis—to name just a few



Multivariate Testing

What is multivariate testing?

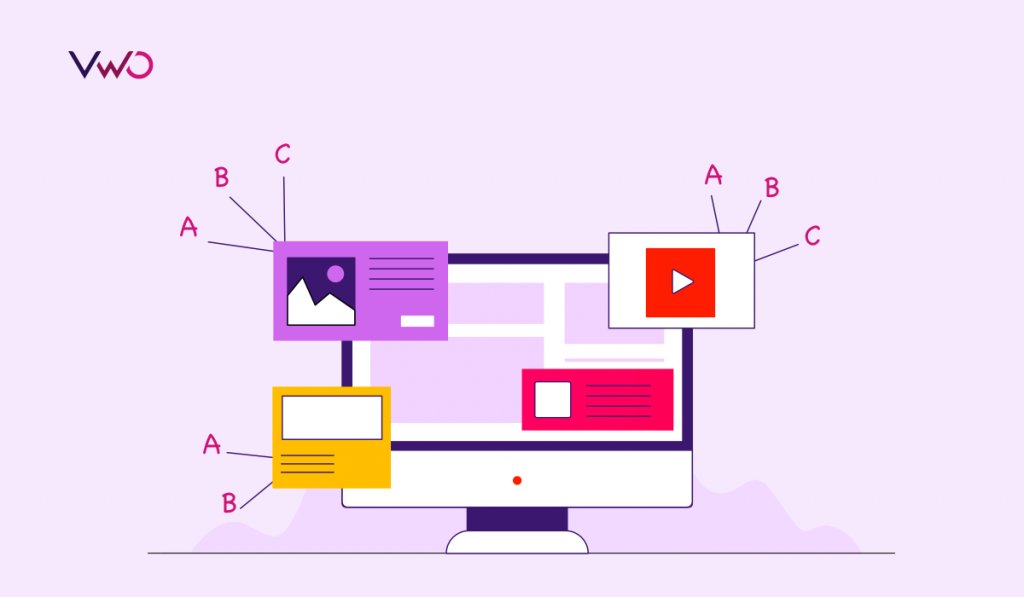

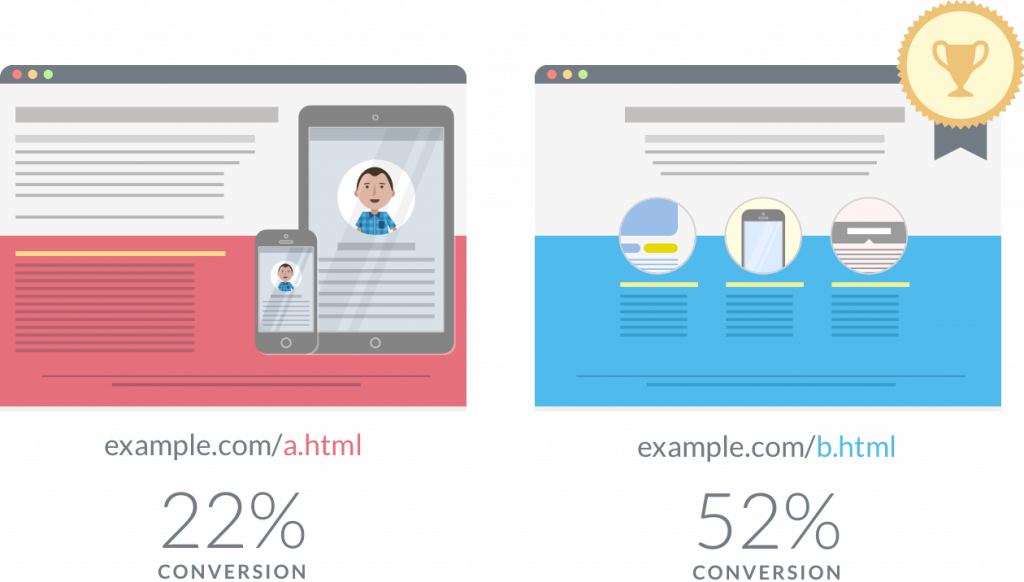

Multivariate testing (MVT) is a form of A/B testing wherein a combination of multiple page elements is modified and tested against the original version (called the control) to determine which permutation has the highest impact on the business metrics you’re tracking. This form of testing is recommended if you want to test the impact of radical changes on a webpage, as opposed to analyzing the impact of one particular element.

MVT is more complex than a traditional A/B test and is best suited for advanced marketing, product, and development professionals. Let’s consider an example to give you a more comprehensive explanation of this testing methodology and see how it aids in conversion rate optimization.

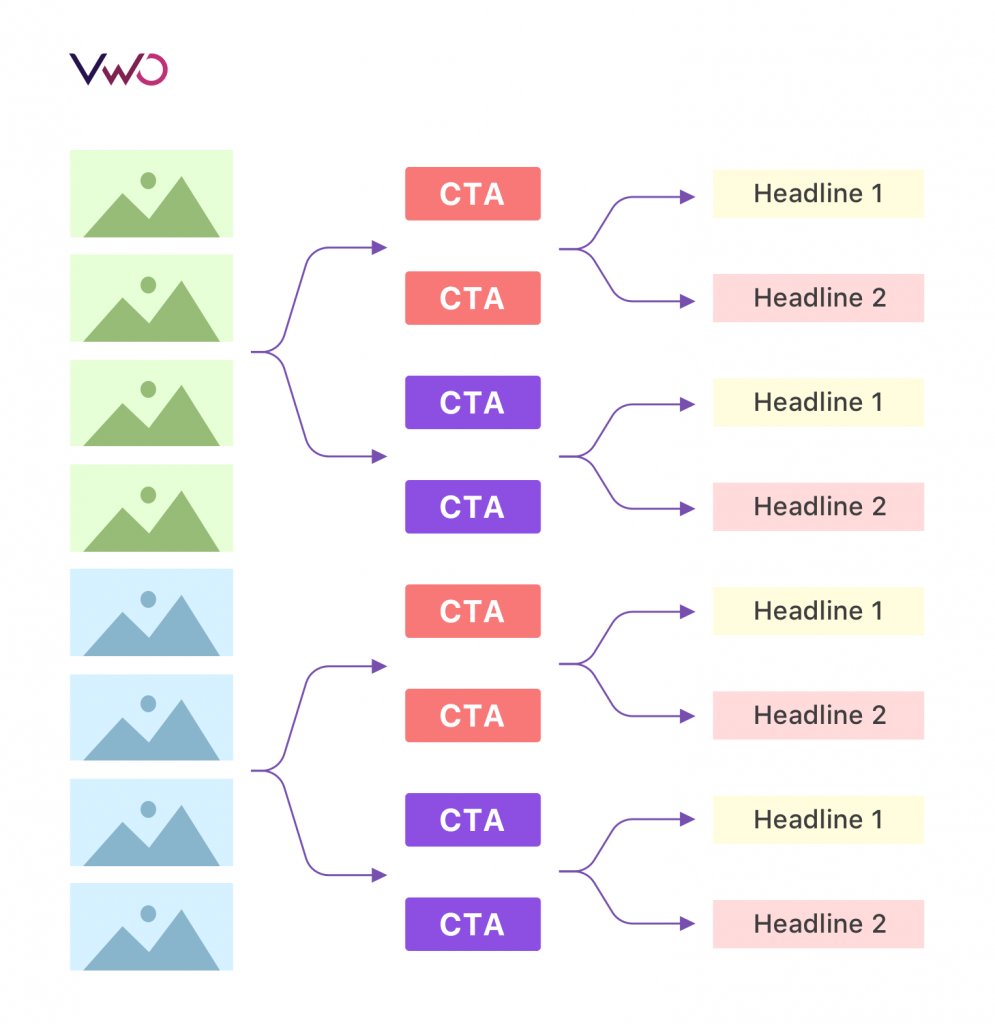

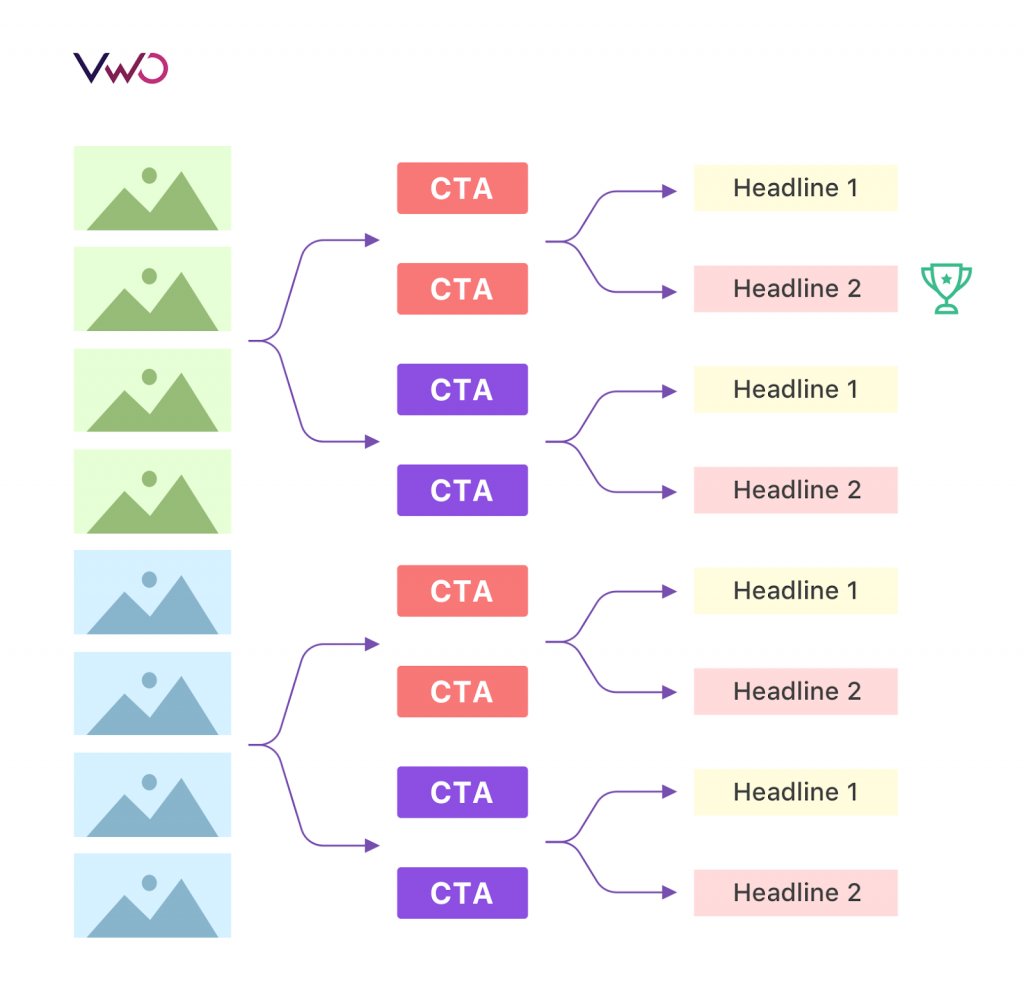

Let’s say you have an online business of homemade chocolates. Your product landing page typically has three important elements to attract visitors and push them down the conversion funnel – product images, call-to-action button color, and product headline. You decide to test 2 versions of all the 3 elements to understand which combination performs the best and increases your conversion rate. This would make your test a Multivariate Test (MVT).

A set of 2 variations for 3 page elements means a total of 8 variation combinations. The formula to calculate the total number of versions in MVT is as follows:

[No. of variations of element A] x [No. of variations of element B] x [No. of variations of element C]… = [Total No. of variations]

Now, variation combinations would be:

2 (Product image) x 2 (CTA button color) x 2 (Product headline) = 8
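The same calculation can be sketched in Python. The element names and option labels below are placeholders invented for the chocolate-shop example; only the 2 x 2 x 2 structure comes from the text.

```python
from itertools import product
from math import prod

# Element names and options are illustrative placeholders.
elements = {
    "product_image": ["image_A", "image_B"],
    "cta_button_color": ["green", "orange"],
    "product_headline": ["headline_A", "headline_B"],
}

# Total = product of the number of variations of each element.
total_combinations = prod(len(options) for options in elements.values())

# Enumerate every combination explicitly, one dict per variation.
combinations = [
    dict(zip(elements, choice)) for choice in product(*elements.values())
]
```

Adding a third option to just one element would raise the total from 8 to 12, which is why the number of combinations (and the traffic needed to test them) grows quickly.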

Each of these combinations will now be concurrently tested to analyze which combination helps get maximum conversions on your product landing page.

Note that a multivariate test eliminates the need to run multiple, sequential A/B tests to find the winning variation. Running concurrent tests with a greater number of variation combinations not only saves you time, money, and effort, but also lets you draw conclusions sooner.

Here’s a real-life example of multivariate testing that illustrates the benefits of this experimentation methodology.

Hyundai.io found that while the traffic on its car model landing pages was significant, not many people were requesting a test drive or downloading car brochures. Using VWO’s qualitative and quantitative tools, they found that each of their landing pages had many different elements, including car headline, car visuals, car specifications, testimonials, and so on, any of which might be causing friction. They decided to run a multivariate test to understand which elements were influencing a visitor’s decision to request a test drive or download a car brochure.

They created variations of the following sections of the car landing page:

- New SEO friendly text vs old text: They hypothesized that by making the text more SEO friendly, they could reap more SEO benefits.

- Extra CTA buttons vs no extra CTA buttons: They hypothesized that by adding extra and more prominent CTA buttons on the page, they’ll be able to nudge visitors in the right direction.

- Large photo of the car versus thumbnails: They hypothesized that it’s better to have larger photographs on the page than thumbnails to create better visitor traction

Hyundai.io tested a total of 8 combinations (3 sections, 2 variations each = 2*2*2) on their website.

Here’s a screenshot of the original page and the winning variation:

The variation with more SEO-friendly text, extra CTA buttons and larger images increased Hyundai.io’s conversion rates for both goals, requesting a test drive and downloading a brochure, by a total of 62%. They also saw a 208% increase in their click-through rate.

MVT is not just restricted to testing the performance of your webpages. You can use it across a range of fields. For instance, you can test your PPC ads, server-side codes, and so on. But, MVT should only be used on sufficiently sized visitor segments. More in-depth analysis means longer completion time. You must also not test too many variables at once as the test will take a longer time to complete and may or may not achieve statistical significance.

Understanding some basic multivariate testing terminologies

Although an integral part of A/B testing, there are a couple of terminologies specific to multivariate testing that anyone getting into this experimentation arena should know.

- Combination: It refers to a number of possible arrangements or unions you can create to test a collection of variable options in multiple locations. The order of selection or permutation does not matter. For instance, if you’re testing three elements on your home page, each with three variable options, then there are a total of 27 possible combinations (3x3x3) that you’ll test. When a visitor becomes a part of your test, they’ll see one combination, also referred to as an experience, when they visit your website.

- Content: Text, image, or any element that becomes a part of an experiment. In multivariate testing, several content options spread across a web page are compared in tandem to analyze which combination shows the best results. Content is also sometimes referred to as a level in MVT.

- Location: A location refers to a page on the website or a specific area where you run optimizations. Locations are essential to website activities and experiences: they are where content is displayed to visitors and where visitor behavior is tracked.

- Control: Ideally, control refers to the original page, element, or content against which you’re planning to run a test. It also represents the “A” in the A/B testing scenario. For instance, if you want to test the performance of your homepage’s banner image, the original or existing banner image will be the “control.” Control is often also referred to as “Champion” by many seasoned optimizers.

- Goal: An event or a combination of events that help measure the success of a test or an experiment. For instance, a content writer’s goal is to increase visitor engagement on their content pieces and even generate content leads.

- Confidence Level: How statistically certain you can be that an observed difference between variations is not due to random chance, usually expressed as a percentage (for example, 95%).

- Conversion Rate: The percentage of unique visitors who enter your conversion funnel and convert into paying customers.

- Element: A discrete page component such as a form, block of text, an image, call-to-action button, etc.

- Experiment: It’s another way of assessing or evaluating the performance of one or more page elements.

- Hypothesis: A tentative assumption made to draft and test a logical or empirical consequence of a particular problem. An example of a hypothesis could be: Based on the previously run experiments and qualitative data gathered through heatmap and scrollmap analysis, I expect that adding banner and text CTAs on the guide page at regular intervals will help generate more content leads and MQLs.

- Non-Conclusive Results: Not deriving any solid conclusion from the experiment(s) you’ve run. Non-conclusive results do not mean the test failed; they only mean that no clear learning could be drawn from it.

- Qualitative Research: A technique of gathering and analyzing non-numerical data from existing and potential customers to understand a concept, opinion, or experience.

- Quantitative Research: Digging through numerical data derived from analytics to find insights around the behavior of your website visitors and draw statistical analysis.

- Visitors: A person or a unique entity visiting your site or landing pages. They’re termed unique because no matter how many times a person visits your site or page, they’re counted only once.

What are the different types of multivariate testing methods?

MVT is in itself an umbrella methodology. There are several different types of multivariate tests that you can choose to run. We’ve defined each of these in detail below.

1. Full factorial testing

This is the most basic and frequently used MVT method. Using this testing methodology, you basically distribute your website traffic equally among all testing combinations. For instance, if you’re planning to test 8 different types of combinations on your homepage, each testing variation will receive one-eighth of all the website traffic.

Since each variation gets the same amount of traffic, the full factorial testing method offers all the necessary data you’d need to determine which testing variation and page elements perform the best. You’ll be able to discover which element had no effect on your targeted business metrics and which ones influenced them the most.

Because this MVT methodology makes no assumptions with respect to statistics or testing mathematics used in the background, our seasoned optimizers highly recommend it for people running or planning to run multivariate tests.
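As a rough illustration of the equal split described above, the sketch below enumerates every combination for three hypothetical page elements with two variations each (8 combinations in total) and assigns each an equal share of traffic. The element names and variations are invented for the example and do not come from any specific tool.

```python
from itertools import product

# Hypothetical page elements and their variations (names are illustrative only).
elements = {
    "headline": ["Original headline", "Benefit-led headline"],
    "banner": ["Static image", "Video"],
    "cta": ["Buy Now", "Explore More"],
}


def full_factorial(elements):
    """Return every combination of element variations as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


combinations = full_factorial(elements)
traffic_share = 1 / len(combinations)  # equal split across all combinations

for i, combo in enumerate(combinations, start=1):
    print(f"Combination {i}: {combo} -> {traffic_share:.1%} of traffic")
```

Adding one more two-variation element would double the number of combinations and halve each combination's share of traffic, which is why full factorial tests grow expensive quickly.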


2. Partial or fractional factorial testing

Partial or fractional factorial MVT methodology exposes only a fraction of all testing variations to the website’s total traffic. The conversion rate of the unexposed testing variations is interpreted from the ones that were included in the test.

Say you want to test 16 variations or combinations of your website’s homepage. In a regular test (or full factorial test), traffic is split equally between all variations. However, in the case of fractional factorial testing, traffic is divided between only 8 variations. The conversion rate of the remaining 8 variations is calculated or statistically deduced based on those actually tested.

This method relies on advanced mathematical techniques and several assumptions to gather insights, which brings a number of disadvantages. Its main advantage is that it requires less traffic, which makes it a good option for websites or pages with low traffic.

However, no matter how advanced the mathematical techniques used to draw statistically significant results from fractional factorial testing, hard data is always better than speculation.
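To make the 16-versus-8 example concrete, here is a minimal sketch of one textbook way to pick a half fraction. It assumes four hypothetical two-level elements and keeps only the runs where the product of the coded levels is +1. This is a standard design-of-experiments construction used purely for illustration; it is not necessarily how any particular testing tool chooses its subset.

```python
from itertools import product

# Four hypothetical two-level elements, coded -1 (control) and +1 (variation).
factors = ["headline", "banner", "cta", "form"]

full = list(product([-1, 1], repeat=len(factors)))  # 2**4 = 16 combinations

# Half fraction: keep runs where the product of all four levels is +1.
# This is an assumed, textbook selection rule, not a vendor's algorithm.
half = [run for run in full if run[0] * run[1] * run[2] * run[3] == 1]

print(len(full), "combinations in the full factorial")
print(len(half), "combinations actually exposed to traffic")
for run in half:
    print(dict(zip(factors, run)))
```

Each element still appears at both levels equally often in the 8 tested runs, but some interaction effects can no longer be told apart from one another. That is the kind of assumption the untested combinations' estimates depend on.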

3. Taguchi testing

This is an old and esoteric MVT method. If you run a Google search, you’ll find that most tools on the market today claim to cut down on your testing time and traffic requirement by using the Taguchi testing technique. It’s more of an “off-line quality control” technique as it helps test and ensure good performance of products or processes in their design stage.

While some optimizers consider this a good MVT methodology, we at VWO believe that this is an old-school practice which is not theoretically sound. It was initially used in the manufacturing industry to reduce the number of combinations required to be tested for QA and other experiments.

Taguchi testing is not applicable or suitable for online testing and hence, not recommended. Use the full factorial or partial factorial MVT approach.

How is multivariate testing different?

A/B testing vs multivariate testing

Ask an experience optimizer and they’ll say the ideal use of A/B testing is to analyze the performance of two or more radically different website elements. Meanwhile, MVT is a perfect technique to test which combination of page element(s) gets maximum conversions.

In testing terms, it’s often recommended to use A/B testing to find what’s called the “global maximum,” and MVT to refine your way towards the “local maximum.”

Let’s take an example to understand the concept of global maximum and local maximum.

Imagine for a second that you’ve never tasted even a single piece of chocolate in your life, and you’re standing in a chocolate shop looking at 25 different types of chocolates, confused about which one to purchase.

There are probably 5 different kinds of caramel chocolates, 10 different varieties of truffles, 6 different variations of lollipops, and 4 different types of exotic fruit chocolates. Are you going to taste all these 25 flavors before deciding which one to buy?

You may try one kind of chocolate from each of the above-mentioned categories, but surely not all. If you find that you like truffles the most over lollipops, caramel chocolates and exotic fruit chocolates, you’ll start tasting more truffle flavors like “coconut truffles,” “Oreo truffles,” “chocolate-fudge truffles,” and so on to decide which among the truffle flavors you like the most.

In statistical terms, we’d say that the category of chocolates you like the most will become the global maximum. This is the type of chocolate that spoke to your taste buds and tasted the best among the lot. When you get down to the specific flavors of truffles, i.e., coconut truffle, Oreo truffle, chocolate fudge, and more, you’ll discover the local maximum – the best version of the variety that you chose.

As an experience optimizer, you must approach testing in a similar manner. Find the webpage that gives you maximum conversions (global maximum), and then test combinations of specific elements on that webpage to understand which one improves your page’s performance and makes the highest-converting page (local maximum). Whether you’re looking for the global maximum or the local maximum will determine which testing methodology you should use.

Here’s a list of pros and cons of using A/B testing and multivariate testing.

| | A/B testing | Multivariate testing |
| --- | --- | --- |
| Pros | 1. A comparatively simple method to design and execute. 2. Helps conclude debates around campaign tactics when there’s one hypothesis in question. 3. Helps generate statistically significant results even with lesser traffic samples. 4. Provides clear and detailed result reports which are easy for even non-technical teams to interpret and implement. | 1. Gives insights regarding the interaction between multiple page elements. 2. Provides a granular picture around which element poses impact on the performance of a page. 3. Enables optimizers to compare many versions of a campaign and conclude which one has the maximum impact. |
| Cons | 1. Limited to testing a single element with a few variations, typically 2 to 3. 2. Not possible to analyze the interaction between various page elements within the same testing campaign. | 1. A comparatively complex experimentation methodology to design and execute. 2. It requires more traffic than an A/B test to show statistically significant results. 3. Too many combinations make result interpretation difficult. 4. Can serve as an overkill when an A/B test could have been sufficient to show results. |

Split URL testing vs multivariate testing

Assuming you’re fairly clear with the definition and concept of MVT, we’ll begin by breaking down the concept of Split URL testing. Rather than testing page elements at a granular level, as in the case of MVT, a split URL test enables you to run a test at the page level. In other words, the variations in a Split URL test are dramatically different and hosted on separate URLs, but they share the same end goal.

Let’s continue on the example of you running an online business of homemade chocolates. Imagine your current homepage has a banner that shows different offers running on your website along with a section displaying your featured products, another section highlighting different chocolate categories, brand story, and related recipes. According to your gut feeling, the page looks attractive and has the potential to convert.

However, after looking at the qualitative results from heatmaps, session recordings, etc., you find that many elements on your homepage are not showing the results they should.

If you decide to run a Split URL test, you can create an entirely new page design with elements placed in a different manner and compare the performance of this variation with the control to analyze which one’s generating more conversions.

Meanwhile, if you decide to run a multivariate test, you can create permutations of different page elements that you want to examine, maybe test different colors of your homepage’s CTA button, banner image, sub headings, and so on, and check which combination generates maximum conversion. There can be ‘n’ number of permutations that you can test with MVT.

One of the primary reasons MVT is better than split URL testing is that the latter demands a lot of the design and development team’s bandwidth and is a lengthy process. MVT, on the other hand, is comparatively less complex to run and demands less bandwidth.

Here’s a comparison table between Split URL testing and MVT:

| | Split URL testing | Multivariate testing |
| --- | --- | --- |
| Pros | 1. Sizeable changes such as completely new page designs are tested to check which gets maximum traction and conversions. 2. Variations are created on separate URLs to maintain distinction and clarity. 3. Helps examine different page flows and complex changes such as a complete redesign of your website’s homepage, product page, etc. 4. With Split URL testing, you test a completely new webpage. | 1. A combination of web page elements are modified and tested to check which permutation gets maximum conversions. 2. Runs on a granular level to understand the performance of each page element. 3. Comparatively test more variations. 4. Requires less changes in terms of design and layout. |
| Cons | 1. A comparatively complex test to design and execute. 2. Requires a lot of design and development team bandwidth. 3. Assess the performance of a website as a whole while ignoring the performance of individual page elements. | 1. Usually requires more traffic to reach statistical significance. 2. Demands more variable combinations to run and show results. 3. The traffic spread across variations is too thin. This sometimes makes the test results unreliable. 4. Since more subtle changes are tested, the impact on conversion rate may not be significant or dramatic. |

Multipage testing vs multivariate testing

As the name suggests, multipage testing is a form of experimentation method wherein changes in particular elements are tested across multiple pages. For instance, you can modify your homemade chocolate eCommerce website’s primary CTA buttons (Add to Cart and Become a Member) on the homepage, replicate the change across the entire site, and run a multipage test to analyze results.

Compared to MVT, optimizers suggest it’s best to use multipage testing when you want to provide a consistent experience to your traffic, when you’re redesigning your website, or you want to improve your conversion funnel. Meanwhile, if you want to map the performance of certain elements on one particular web page, go with A/B testing or MVT.

Here’s a clear distinction between multipage testing and multivariate testing.

| | Multipage testing | Multivariate testing |
| --- | --- | --- |
| Pros | 1. Create one test to map the performance of a particular element, say site-wide navigation, across the entire website. 2. Run funnel tests to examine different voices and tones on web pages. 3. Experiment different design theories and analyze which one’s the best. 4. Helps map site-wide conversion rate. | 2. You can validate even the minutest of choices, such as the color of a CTA button. 3. Gives in-depth insights about how different page elements play together. 4. Determine the contribution of individual page elements. 5. Eliminates the need to run multiple A/B tests. |
| Cons | 1. Requires huge traffic to show statistically significant results. 2. Can take longer than usual to conclude. 3. Gaining results from this form of experimentation method can be tricky. | 1. More permutations or variations means longer time for a test to reach the stage of statistical significance. 2. Unlike multipage testing, you can test changes only on one particular page at a time in MVT. |

How to run a multivariate test?

The process of setting up and running MVT is not very different from a regular A/B test, except for a couple of steps in between. But we’ll start from the beginning so that the whole process is fresh in your mind. Let’s dive in.

1. Identify a problem

The first step to running MVT and improving the performance of your web page is to dig into data and identify all the loopholes causing visitors to drop off. For instance, the link attached to your “download guide” button may be broken or the form on your product page may be asking for more information than necessary. To spot these problem areas, take the following steps.

- Conduct informal research: Take a look at customer support feedback and examine product reviews to understand how people are reacting to your products and services. Speak to your sales, support, and design teams to get honest feedback about your website from a customer’s point of view.

- Use quantitative tools such as Google Analytics to analyze bounce rate, time spent on page, exit percentage, and similar metrics.

- Use qualitative tools such as heatmaps to see where the majority of your website visitors are concentrating their attention, scrollmaps to analyze how far they’re scrolling down the page, and session recordings to see their entire journey.

- Explore the option of usability testing: This tool offers an insight into how people are actually using or navigating through your website. With usability testing you can gather direct feedback about visitor issues and draft necessary solutions.

VWO Insights offers you a full suite of qualitative and quantitative tools such as heatmaps, scroll maps, click maps, session recordings, form analytics, etc. for quick and thorough analysis.

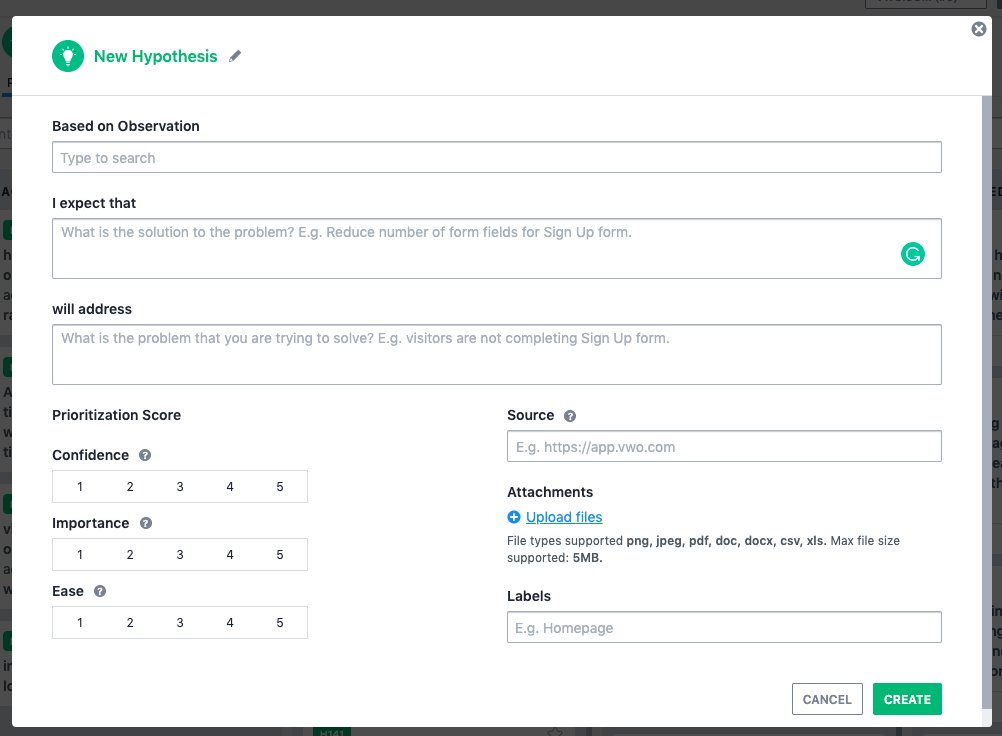

2. Define your goals and formulate a hypothesis

Successful experimentation begins by clearly defining goals. It is these goals or metrics that help prepare smart testing strategies around element selection and their variations. For example, if you’re trying to increase your average order value (AOV) or revenue per visitor (RPV) , you may select elements that directly aid these metrics and create different variations.

Once you’ve defined your goals and selected your page elements to test, it’s time to formulate a hypothesis. A hypothesis is basically a suggested solution to a problem. For instance, if after looking at the heatmap of your product page you find that your “Add to Cart” button is not prominently visible, you might form the following hypothesis: “Based on observations gathered through heatmap and other qualitative tools, I expect that if we put the ‘Add to Cart’ button in a colored box, we will see higher visitor interaction with the button and more conversions.”

Here’s a hypothesis format that we at VWO use:

If you don’t have a good heatmap tool in your arsenal, use VWO’s AI-powered free heatmap generator to know how visitors are likely to engage on your webpage. You can also invest in VWO Insights to generate actual visitor behavior data and use it to your leverage.

3. Create variations

After forming a hypothesis and deciding which page elements you want to test, the next step is to create variations. Infuse your site with the new web elements or variations such as clearer and more prominently visible call-to-action buttons, enhanced images, text that’s more factual and resonates with what visitors expect, and so on.

VWO provides an excellent and highly user-friendly platform to run a multivariate test. Using VWO’s Visual Editor, you can play with your website and its elements and create as many variations as you want without the help of a developer. Do watch this video on ‘Introduction to VWO Visual Editor’ to learn more.

4. Determine your sample size

As stated in the above sections, it’s best to run MVT on a page with high traffic volume. If you run it on a low-traffic page, your test is likely to fail or take much longer than usual to reach statistical significance. The higher the traffic volume, the more visitors each variation receives, and hence the more reliable the test results.

You can use our A/B and multivariate test duration calculator to find out how much traffic you need and how long to run an MVT, based on your website’s current traffic volume, the number of variations including control, and your desired statistical significance.

5. Review your test setup

The next step to running an effective and successful MVT is to review your test setup in your testing app. You may be confident that you’ve taken all the necessary steps and added the variations correctly in your testing app, but there’s no harm thoroughly reviewing it one or two times more.

One of the clearest advantages of conducting a review is that it gives you an opportunity to ensure every element has been added correctly and all the necessary test selections have been made. Taking the time to quality check your test is a critical step to ensure its success.

6. Start driving traffic

If you think your webpage doesn’t have enough traffic to support the MVT experiment, it’s time to look for ways to increase it. Your job is to ensure your page has as much traffic as possible so that your testing efforts don’t fail. Use paid advertising, social media promotion, and other traffic generation methods to gather enough visitors to prove or disprove the hypothesis you’re currently working with.

7. Analyze your results

Once your test has run its due course and reached statistical significance, it’s time to assess its results and see if your hypothesis was right or wrong.

Since you’ve tested multiple page elements at once, take some time to interpret your results. Examine each variation and analyze its performance. Note that the variation that won is not necessarily the best one to implement on your website permanently. Sometimes, these results can be inconclusive. Use qualitative tools such as heatmaps, clickmaps, session recordings, form analytics, etc. to examine the performance of each variation and draw a conclusion.

After all, it’s important to ensure the validity of your test and implement changes on your webpage as per the preference of your audience and deliver the experience they want.

How to run a multivariate test on a low-traffic website?

We’ve concluded time and again that MVT requires more traffic than an A/B test to show statistically significant results. But, does that mean websites with low traffic volume cannot do multivariate testing? Absolutely not!

The reasoning behind MVT’s need for high traffic is straightforward. The more variations you test, the more thinly your traffic is split between them, and hence the longer it takes to draw conclusive results. If you’re planning to run MVT on a website with low traffic volume, all you need to do is make some modifications.

1. Only test high-impact changes

Let’s say, there are 6 elements on your product page which you believe have the potential to improve the performance of your page and even increase conversions. Do all of these have equal potential? Probably not. Some may be more impactful and have more noticeable effects than others.

When you’re planning to run MVT on a low traffic website, focus your energies on testing those site elements that can have a significant impact on your page’s performance and goals rather than testing small modifications with low impact intensity.

Although it may be intimidating to test radical changes, when you do, the likelihood of them showing a dramatic difference in conversion rate is also high. No matter the outcome, the learnings and insights about your customers’ behavior and perception of your brand can help you run informed future tests and make better business decisions.

2. Use fewer variations

Needless to say, low traffic volume means testing fewer variations. We understand that it’s tempting to test different optimization ideas to solve visitor problems. But with every variation you add to your test, the time needed to achieve statistical significance also increases. Don’t take that risk. Go small and go slow. Although this may cost you some extra effort and resources, it will save you a lot of time compared to running MVT with a larger number of variations.

Use tools like heatmaps, scrollmaps, session recordings, usability testing , etc. to find high-impact page elements. Use the ICE model (impact, confidence, ease) to create a testing pipeline and follow it.
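One simple way to turn ICE ratings into a testing pipeline is sketched below. The ideas, the 1 to 10 rating scale, and the choice to average the three ratings are all assumptions made for illustration; some teams multiply the ratings instead.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # expected effect on the goal, rated 1-10
    confidence: int  # how sure you are the idea will work, rated 1-10
    ease: int        # how easy it is to build and run, rated 1-10

    @property
    def ice_score(self) -> float:
        # Assumption: simple average of the three ratings.
        return (self.impact + self.confidence + self.ease) / 3


# Hypothetical backlog of test ideas.
ideas = [
    Idea("Rewrite hero headline", impact=8, confidence=6, ease=9),
    Idea("Change footer link color", impact=2, confidence=5, ease=10),
    Idea("Shorten checkout form", impact=9, confidence=7, ease=4),
]

# Highest-scoring ideas go to the front of the pipeline.
pipeline = sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)
for idea in pipeline:
    print(f"{idea.ice_score:.1f}  {idea.name}")
```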

3. Focus on micro-conversions

Your primary goal may be to increase page sign-ups, click-through rate or overall conversions, but does it make sense to use these as your primary metrics when you know it would take you much longer to gather enough conversions and even verify the test results? Surely not.

The better thing to do is test conversions on a micro-level, a level at which conversions are plentiful and can help you optimize your page quickly. For instance, focus your efforts on increasing your page engagement rate, clicks on the add to cart button, clicks on images, etc.

Other goals could be setting up a conversion goal that fires when a visitor fills up an exit-intent pop-up form, stays on your website for more than 30 minutes, or scrolls down a certain depth/folds through your long-copy page. You can also use a quantitative tool like Google Analytics to analyze which conversions to map or use as goals to optimize your website.

4. Consider lowering your statistical significance setting

When you don’t have the leverage to run a test on a large sample size, resort to using other methods to measure the performance of your control and variation. Do not wait for your test to reach its statistically significant level. If your testing tool allows, you can also lower your statistical significance levels. So, for instance, if you set your significance level to 70%, any version that reaches this mark will become the winner. In this case, you would also require a much smaller sample size than going for 99% significance.

Optimizers across the industry recommend many ways to measure the performance of a test version, but the ones we recommend are as follows:

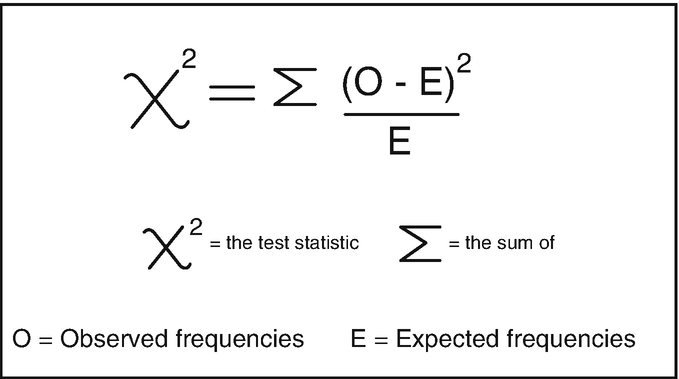

- The 2-sample t-test: Also known as the independent samples t-test, it’s a method used to examine whether the means of two independent samples are equal or not. If the two samples have unequal variances, you can use a different estimate of the standard deviation (Welch’s t-test) to check results.

- The Chi-Squared Test: The primary objective of this test is to examine the statistical significance of the observed relationship between two variants with respect to the expected outcome. In other words, it helps you check whether the difference in conversions between versions of your test is larger than chance alone would explain.

- Confidence Interval: This method gives a range within which the true value of a metric, such as a variation’s conversion rate, is likely to lie, and so measures the degree of certainty or uncertainty in the data at hand.

- Measuring sessions: This is another way to test the statistical significance of a variation. Rather than measuring your test’s performance by counting users, take into account sessions. This means that your test treats each individual as a participant in an experiment only once.
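As an illustration of the chi-squared test and the confidence interval from the list above, the sketch below compares a control and one variation using only the Python standard library. The visitor and conversion counts are hypothetical, and the calculation uses textbook approximations (an uncorrected chi-squared statistic with one degree of freedom and a Wald interval) rather than any particular tool's method.

```python
from math import sqrt
from statistics import NormalDist


def compare_conversion_rates(conv_a, visitors_a, conv_b, visitors_b, confidence=0.95):
    """Chi-squared test (1 d.f.) and confidence interval for B's rate minus A's rate."""
    p_a, p_b = conv_a / visitors_a, conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)

    # For a 2x2 table, the Pearson chi-squared statistic equals the squared pooled z-score.
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (p_b - p_a) / se_pooled
    chi2 = z ** 2
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Wald interval for the difference in conversion rates (unpooled standard error).
    se_diff = sqrt(p_a * (1 - p_a) / visitors_a + p_b * (1 - p_b) / visitors_b)
    margin = NormalDist().inv_cdf(1 - (1 - confidence) / 2) * se_diff
    return {"chi2": chi2, "p_value": p_value, "ci": (p_b - p_a - margin, p_b - p_a + margin)}


# Hypothetical numbers: control converts 100 of 2,000 visitors, variation 130 of 2,000.
result = compare_conversion_rates(100, 2000, 130, 2000)
print(f"chi-squared = {result['chi2']:.2f}, p-value = {result['p_value']:.3f}")
print(f"95% CI for the lift: {result['ci'][0]:.4f} to {result['ci'][1]:.4f}")
```

With a 70% significance setting, a variation would be declared the winner whenever the p-value falls below 0.30, which is a much easier bar to clear than the 0.05 implied by 95%.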

5. Avoid niche testing

Avoid testing those sections or elements of your site that get very few hits. Instead, target page elements that get more traction. Site-wide CTA tests, landing page tests, and the like will help you take advantage of your site’s incoming traffic. Such tests are also likely to show statistically significant results in a shorter time span.

What are the advantages and disadvantages of multivariate testing?

Instances in which multivariate testing is valuable

1. MVT helps measure the interaction between multiple page elements

Let’s come back to evaluating the performance of your homemade chocolate eCommerce website. You’re confident that two sequential A/B tests can produce the same results as an MVT. So, you first run an A/B test to compare a static banner image with a video banner, and the latter wins. Next, on the winning variation, i.e., the video banner, you run another A/B test between two possible CTAs, ‘Explore More’ and ‘Buy Now,’ and the former proves better. Don’t you think you could’ve come to the same conclusion with a single multivariate test?

Unlike an A/B test, a multivariate test gives you the leverage to test and measure how multiple page elements interact with each other. Just by testing a combination of various elements (let’s say your product page’s hero image, a CTA, and headline) you can not only figure out which variation performs better than others and helps increase conversions but also discover the best possible combinations of the tested page elements.
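The sketch below shows, with made-up conversion rates, how sequential A/B tests can miss the best combination when elements interact. The numbers are purely hypothetical and chosen to mirror the banner and CTA example; it also assumes the first A/B test ran with the ‘Buy Now’ CTA in place.

```python
# Hypothetical conversion rates (%) for each banner x CTA combination,
# as a full factorial multivariate test would measure them.
rates = {
    ("static", "Buy Now"): 2.0,
    ("video", "Buy Now"): 2.6,
    ("video", "Explore More"): 2.8,
    ("static", "Explore More"): 3.5,
}

# Sequential A/B tests: first pick a banner (with the assumed existing CTA, "Buy Now"),
# then pick a CTA on the winning banner only.
step1_banner = max(["static", "video"], key=lambda b: rates[(b, "Buy Now")])
step2_cta = max(["Buy Now", "Explore More"], key=lambda c: rates[(step1_banner, c)])
sequential_winner = (step1_banner, step2_cta)

# Multivariate test: every combination is measured, so the best one is visible directly.
mvt_winner = max(rates, key=rates.get)


def cta_effect(banner):
    """Change in conversion rate from switching 'Buy Now' to 'Explore More'."""
    return rates[(banner, "Explore More")] - rates[(banner, "Buy Now")]


# If the elements did not interact, the CTA effect would be the same on both banners.
interaction = cta_effect("video") - cta_effect("static")

print("Sequential A/B winner:", sequential_winner)
print("MVT winner:", mvt_winner)
print(f"Interaction (percentage points): {interaction:.1f}")
```

In this invented scenario the sequential tests settle on the video banner with ‘Explore More’, while the combination that was never tested (static banner with ‘Explore More’) converts best.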

2. Provides an in-depth visitor behavior analysis

MVT enables you to conduct an in-depth analysis of visitor behavior and preference patterns. It provides you with statistics on the performance of each variation and its effect on conversions. This helps you re-orient your website around how visitors actually engage with it. The better the page matches a visitor’s intent, the higher the chances of conversion.

3. Sheds light on dead or least contributing page elements.

A multivariate test doesn’t just help you find the right combination of page elements that will help increase your conversions. It also sheds light on those that are contributing least or nothing to your site’s conversions and occupying huge page space. These elements can be anything, textual content, images, banner, etc.

Relocate or replace them with elements that catch the attention of your visitors and channelize some conversions as well. It’s always better to have your page elements contribute something to your goals than absolutely nothing.

4. Guides you through page structurization

The importance of placing elements at the right location can be understood from the fact that visitors today have a short view span. They devote maximum time reading and taking in information mentioned in the first fold of your web page. So, if you’re not placing the relevant content at the top, you’re reducing the chances of getting conversions by a great margin. MVT allows you to study the placement of various page elements and locate them at their right place in order to facilitate conversions for your business and make it easy for visitors to find what they came to look for on your page.

5. Test a wide range of combinations

Unlike a regular A/B test that gives you the leverage to test one or more variations of a particular element in singularity, MVT allows you to test multiple elements at the same time by applying the concept of permutations and combinations. Such an experimentation method not only increases your testing options that you can use to tap on conversions but helps save time by avoiding running sequential A/B tests.

Instances where multivariate testing is not valuable

MVT is a highly sophisticated testing methodology. A single MVT test helps answer multiple questions at once. But, just because it’s a complicated testing technique doesn’t mean it’s better than other techniques or that the data it generates is more useful. Every coin has two sides. We listed the pros of using a multivariate test in the above section. It’s time to know the cons as well.

1. Requires more traffic to show statistically significant results

Unlike a traditional A/B test, MVT demands high traffic inflow. This means that it only works or shows statistically significant results for sites which have a ton of traffic. Even if you do run it on a site with low traffic, you’ll have to compromise on something or the other such as test fewer combinations, use other methods to calculate a winner, etc. Read the section above on ‘How to run a multivariate test on low traffic websites’ for better clarity.

2. They’re comparatively tricky to set up

One thing that makes people opt for an A/B test over MVT is that the former is comparatively very simple to set up. After all, you just need to change one or two elements and add variations while keeping the rest of the page design the same. Anyone who has an understanding of web design can easily set up an A/B test, and even complex A/B tests today rarely require more than a couple of minutes of a developer’s time. Tools like VWO enable even non-technical folks to set up an A/B test within a matter of minutes.

On the contrary, MVT requires more effort even if you’re creating a basic one, and it’s also very easy for such tests to go off the rails. A minute mistake in the design or while creating the variations can hamper the test results. MVT is a good option for optimizers who have a lot of experience in the arena of experimentation.

3. There’s a hidden opportunity cost

Time is always of the essence and a valuable commodity for any business. When you run a test on your website, you’re investing time and playing with your conversion rate. There’s a hidden cost that you put on the line. Multivariate tests are comparatively complex and slow to set up, and slower to run. All the time lost during its setup phase and course of running creates an opportunity cost.

Amidst the time MVT takes to show meaningful results, you could have run dozens of A/B tests and drawn conclusions. A/B tests are quick to set up and also provide definite answers to many specific problems.

4. The chances of failure are comparatively high

Needless to say that testing allows you to move fast and break things to optimize your website and make it more user friendly. You get the leverage to try crazy ideas and even fail spectacularly without facing any real risk or consequences. While this approach seems effective in the case of an A/B test, we can’t say the same for multivariate testing.

While each A/B test, whether it succeeds or fails, provides learning points to refer back to, the same cannot be said of a multivariate test. It’s comparatively difficult to draw meaningful learnings when you change many elements at once, and in combination. Moreover, MVT is so slow and tedious that it often doesn’t make sense to take such a risk only to fail in the end.

5. MVT is biased towards design

Another MVT con that most optimizers have realized over the years is that the testing method often provides answers to problems related to design. Some of the strongest advocates or supporters of MVT are also UI and UX professionals.

Design is obviously important, but it’s surely not everything. UI and UX elements represent only a small part of all the total variables you use to enhance the performance of your website. Copy, promotional offers, and even site functionality are essential to ensure your website is liked by your target audience. These elements are often underestimated and overlooked in the case of MVT despite the fact that they have a huge impact on the conversion rate of your site.

Machine learning and multivariate testing

Advances in technology, especially artificial intelligence, are now prominently visible in the testing arena as well. For many years, programs called neural networks have enabled computers to learn as they gather data and to take actions that can be more accurate than those of humans while using less data and time. However, neural networks could help humans solve only some specific problems.

Seeing the capabilities of these neural networks, many software companies decided to use them to develop solutions that could enhance the entire multivariate testing process. This solution is called the evolutionary neural network or a generic neural network.

The approach uses the capabilities of machine learning to select which website elements to test and creates all possible variations on its own. It reduces the need to test all combinations and enables optimizers to test only those that are likely to show the highest conversions.

The algorithms behind the solution prune the poor performing combinations to pave the way for more likely winners to participate in the test. Over time, the highest performing combination emerges as the winner and then becomes the new control.

Once that happens, these algorithms then introduce variables called mutations in the test. Variants that were previously pruned are reintroduced as new combinations to analyze whether any one of these might turn successful amidst the better-performing combinations.

This approach has proved to show better and faster results even with less traffic.

Evolutionary neural networks enable testing tools to learn which set of combinations will show positive results without testing all possible multivariate combinations. With machine learning, websites that have too little traffic to opt for MVT can also consider this option now without making compromises.
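The prune-and-mutate loop described above can be sketched in a few lines. This is a toy illustration only, not the algorithm used by any testing tool: the function names, the simulated traffic, and the `true_rate` function (a made-up stand-in for real visitor behavior) are all assumptions introduced for the example.

```python
import itertools
import random


def simulate_conversions(rng, rate, visitors):
    """Count simulated conversions for one combination (Bernoulli trials)."""
    return sum(rng.random() < rate for _ in range(visitors))


def evolutionary_search(options, true_rate, generations=6, population=6,
                        visitors=500, seed=0):
    """Toy evolutionary search over element combinations.

    options: dict mapping element name -> list of candidate values.
    true_rate: hypothetical function combo -> conversion rate, unknown to
               the optimizer and used here only to simulate traffic.
    Returns (best combo of the last round, combos tested, combos possible).
    """
    rng = random.Random(seed)
    names = list(options)
    every_combo = list(itertools.product(*(options[n] for n in names)))
    # Start from a random subset instead of testing every combination.
    active = rng.sample(every_combo, min(population, len(every_combo)))
    tested = set(active)
    for _ in range(generations):
        # Measure each active combination on an equal share of simulated traffic.
        scored = sorted(
            active,
            key=lambda c: simulate_conversions(rng, true_rate(c), visitors),
            reverse=True,
        )
        # Prune the weaker half; the top survivor acts as the new control.
        survivors = scored[: max(1, len(scored) // 2)]
        # Mutate: change one randomly chosen element of each survivor.
        children = []
        for parent in survivors:
            child = list(parent)
            i = rng.randrange(len(names))
            child[i] = rng.choice(options[names[i]])
            children.append(tuple(child))
        # Drop duplicates while keeping the control in first position.
        active = list(dict.fromkeys(survivors + children))
        tested.update(active)
    return active[0], len(tested), len(every_combo)
```

Because the search only ever measures the combinations that are currently active, the number of combinations tested can be smaller than the full set, which is the saving the approach relies on. A real tool would also accumulate data across rounds and apply proper statistics before declaring a winner.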

Best practices to follow when running a multivariate test

MVT has the ability to empower optimizers to discover effective content that helps drive KPIs and enhance the performance of a website, but only when they follow best practices.

1. Create a learning agenda

Before getting started, check whether MVT is the best testing approach for the problems you have identified or whether a simple A/B test would better suit your needs. We, at VWO, believe that it’s important to first draft a learning agenda. This will help you define what exactly you want to test and what you hope to learn from this experiment.

The agenda basically acts as a blueprint, helping you establish a hypothesis, define the page elements you want to test and for which audience segment, and prioritize learning objectives accordingly. It also comes in handy when you begin to set up your test and ensures that all adjustments have been made correctly.

2. Avoid testing all possible combinations

For most people, running a multivariate test means testing everything that comes under their radar. That should not be the case. Restrict yourself and test only those variables that you believe can have a high impact on your conversion rate. Also, the more elements you test, the more permutations there are, and the longer the test takes to run and to yield final results.

Say, for example, you want to test the performance of your display ad. You decide to test four possible images, two possible CTA button colors, and three possible headlines. This totals up to 24 variations (4 × 2 × 3) of your display ad. When you test all the variations at once, each gets 1/24th of the total incoming traffic.

With traffic split so thinly, the chance of any variation reaching statistical significance is quite low. And even if one or some of them do, the time they take will undermine the value of the test.

Furthermore, not all combinations will make sense from a design standpoint. For instance, an image with a blue background and a blue CTA button will make it hard for visitors to identify the CTA, especially on a mobile screen. Use good judgment and select only those variations which can show some results.
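The arithmetic behind the display ad example can be checked with a short script. The element names follow the example above; the daily traffic and the sample size needed per variation are hypothetical figures chosen only to keep the numbers easy to follow.

```python
import math
from itertools import product


def plan_test(elements, daily_visitors, sample_per_variation):
    """Return (number of variations, traffic share each, days to reach the sample)."""
    variations = list(product(*elements.values()))
    n = len(variations)
    visitors_per_variation_per_day = daily_visitors / n
    days = math.ceil(sample_per_variation / visitors_per_variation_per_day)
    return n, 1 / n, days


ad_elements = {
    "image": ["image_1", "image_2", "image_3", "image_4"],
    "cta_color": ["blue", "green"],
    "headline": ["headline_1", "headline_2", "headline_3"],
}

# Hypothetical traffic figures, not taken from any real campaign.
n, share, days = plan_test(ad_elements, daily_visitors=12_000,
                           sample_per_variation=5_000)
print(n, round(share, 4), days)  # 24 0.0417 10
```

With these assumed numbers, each of the 24 variations receives 500 visitors a day and needs 10 days to reach its sample, whereas a two-variation A/B test on the same traffic would get there in a single day.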

3. Generate ideas from data for greater experimental variety and relevancy

While it’s a great practice to stop yourself from testing every possible idea that pops into your head, it’s also important not to ignore possibilities that could impact your conversion rate. To know if a variation is worth sampling, generate ideas from various data sources. These could include:

- First-hand data collected on the basis of visitor behavior, segment demographics, and interests.

- Third-party data extracted from multiple data providers for additional visitor information such as purchase behavior, transactional data, or industry-specific data.

- Historical performance data extracted from previously run campaigns targeting similar traffic.

4. Start eliminating low performers once your test reaches minimum sample size

It’s not necessary to end your multivariate test the moment it achieves an adequate sample size. Rather, you should begin eliminating the non-performing variations. Shut down variations that show negligible movement compared to the control once they’ve achieved the needed representative sample size. This means that more traffic will flow towards variations that are performing well, enabling you to optimize your test for higher quality results, faster.
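One possible way to express this elimination rule in code is shown below. It is a minimal sketch under stated assumptions: "negligible movement" is interpreted here as a relative lift over the control below a chosen threshold, and the function name, the thresholds, and the sample data are all hypothetical.

```python
def prune_low_performers(results, control, min_sample, min_relative_lift):
    """Keep the control, variations still short of the minimum sample,
    and variations whose relative lift over the control meets the threshold.

    results: dict mapping variation name -> (visitors, conversions).
    """
    control_visitors, control_conversions = results[control]
    control_rate = control_conversions / control_visitors
    kept = {}
    for name, (visitors, conversions) in results.items():
        if name == control or visitors < min_sample:
            # Never drop the control, and do not judge under-sampled variations.
            kept[name] = (visitors, conversions)
            continue
        lift = (conversions / visitors - control_rate) / control_rate
        if lift >= min_relative_lift:
            kept[name] = (visitors, conversions)
    return kept


# Hypothetical results: (visitors, conversions) per variation.
results = {
    "control": (1000, 50),
    "v1": (1000, 51),   # about 2% relative lift: negligible, shut down
    "v2": (1000, 65),   # about 30% relative lift: keep
    "v3": (400, 10),    # below the minimum sample: keep collecting data
}
kept = prune_low_performers(results, "control", min_sample=1000,
                            min_relative_lift=0.05)
```

In this example only `v1` is shut down, so its share of traffic can be redistributed to the remaining variations.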

5. Use high-performing combinations for further testing

Once you’ve discovered a few potential variations, restructure your test and fine-tune the variable elements. If a certain headline on a product page is outperforming others, come up with a fresh set of variations around that headline.

You can even opt to run a discrete A/B/n test with limited experimental groups to analyze the performance of the new variations in a shorter time. Most experienced optimizers suggest that when you learn something from an experiment, you should use that knowledge to enhance the performance of other page elements. Testing is not just about increasing revenue, but about understanding visitor behavior and serving their needs.

Best multivariate testing tools

The market today is swamped with A/B testing tools. Not all have the required capabilities to help you run successful experiments without a hassle. Nor can you take the risk of developing an in-house experimentation suite. Here’s a list of the top 5 multivariate testing tools for experience optimizers who have a passion for testing:

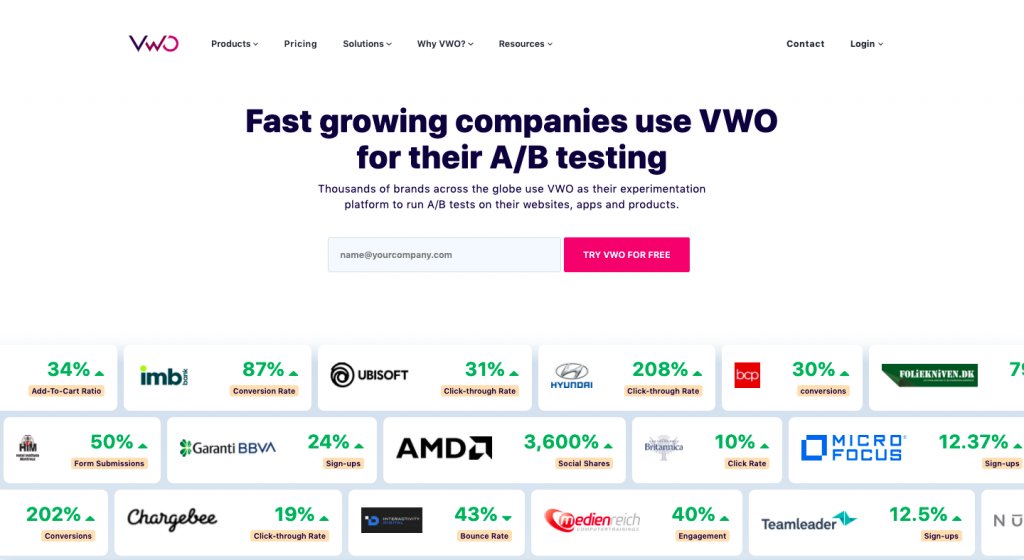

1. VWO

VWO is an all-in-one, cloud-based experimentation tool that helps optimizers run multiple tests on their website and optimize it to ensure better conversions. Besides providing an easy-to-use experimentation platform, the tool allows you to conduct qualitative and quantitative research, create test variations, and even analyze the performance of your tests via its robust dashboard.

VWO also offers the SmartStats feature that runs on Bayesian statistics. It gives you more control of your tests and helps derive conclusions sooner. Sign up today for a free trial and get into the habit of experimentation.

2. Optimizely

The Optimizely platform offers a comprehensive suite of CRO tools and generally caters to enterprise-level customers only.

Optimizely primarily provides web experimentation and personalization services. However, you can use its capabilities to run experiments on mobile apps and messaging platforms as well. You can even opt to run multiple tests on the same page and rest assured of accurate results.

3. A/B Tasty

A/B Tasty is another experimentation platform that offers a holistic range of testing features. Besides the usual A/B and Split URL testing options, it also allows you to run multivariate tests. It has an integrated platform that’s easy to use and offers a real-time view of your tests and their respective confidence levels.

4. Oracle Maxymiser

An advanced A/B testing and personalization tool, Oracle Maxymiser enables you to design and run sophisticated experiments. The platform offers many powerful features and capabilities such as multivariate testing, funnel optimization, advanced targeting and segmentation, and predictive analytics. Such features make it a perfect match for data-driven optimizers with an in-house IT support team.

5. Google Optimize 360

Google Optimize 360 is a Google product that offers a broad range of services besides experimentation. Some of these include native Google Analytics integration, URL targeting, and Geo-targeting. If you opt for Google Optimize 360’s premium version, you get to explore the tool in-depth. With Google Optimize 360 you can:

- test up to 36 combinations when running MVT

- run 100+ tests simultaneously

- make 100+ personalizations at a time

- get access to Google Analytics 360 Suite administration

Multivariate testing is an arm of A/B testing that uses the same experimentation mechanics but compares more than one variable on a website in a live environment. It departs from the traditional scientific approach of changing one variable at a time and enables you to, in a way, run multiple A/B/n tests on the same page simultaneously. At its core, it’s a highly complex process that requires more time and effort, but it provides comprehensive information about how different page elements interact with each other and which combinations work best on your site.

If you still have questions about what multivariate testing is or how it can benefit your website, request a demo today! Or, get into the habit of experimentation and start A/B testing today! Sign up for VWO’s free trial.


A Guide on Data Analysis

22 Multivariate Methods

\(y_1,...,y_p\) are possibly correlated random variables with means \(\mu_1,...,\mu_p\)

\[ \mathbf{y} = \left( \begin{array} {c} y_1 \\ \vdots \\ y_p \\ \end{array} \right) \]

\[ E(\mathbf{y}) = \left( \begin{array} {c} \mu_1 \\ \vdots \\ \mu_p \\ \end{array} \right) \]

Let \(\sigma_{ij} = cov(y_i, y_j)\) for \(i,j = 1,...,p\)

\[ \mathbf{\Sigma} = (\sigma_{ij}) = \left( \begin{array} {cccc} \sigma_{11} & \sigma_{12} & ... & \sigma_{1p} \\ \sigma_{21} & \sigma_{22} & ... & \sigma_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ \sigma_{p1} & \sigma_{p2} & ... & \sigma_{pp} \end{array} \right) \]

where \(\mathbf{\Sigma}\) (symmetric) is the variance-covariance or dispersion matrix

Let \(\mathbf{u}_{p \times 1}\) and \(\mathbf{v}_{q \times 1}\) be random vectors with means \(\mu_u\) and \(\mu_v\) . Then

\[ \mathbf{\Sigma}_{uv} = cov(\mathbf{u,v}) = E[(\mathbf{u} - \mu_u)(\mathbf{v} - \mu_v)'] \]

in which, in general, \(\mathbf{\Sigma}_{uv} \neq \mathbf{\Sigma}_{vu}\), but \(\mathbf{\Sigma}_{uv} = \mathbf{\Sigma}_{vu}'\)

Properties of Covariance Matrices

- Symmetric \(\mathbf{\Sigma}' = \mathbf{\Sigma}\)

- Non-negative definite \(\mathbf{a'\Sigma a} \ge 0\) for any \(\mathbf{a} \in R^p\) , which is equivalent to eigenvalues of \(\mathbf{\Sigma}\) , \(\lambda_1 \ge \lambda_2 \ge ... \ge \lambda_p \ge 0\)

- \(|\mathbf{\Sigma}| = \lambda_1 \lambda_2 ... \lambda_p \ge 0\) ( generalized variance ) (the bigger this number is, the more variation there is)

- \(trace(\mathbf{\Sigma}) = tr(\mathbf{\Sigma}) = \lambda_1 + ... + \lambda_p = \sigma_{11} + ... + \sigma_{pp} =\) sum of variances ( total variance )

- \(\mathbf{\Sigma}\) is typically required to be positive definite, which means all eigenvalues are positive, and \(\mathbf{\Sigma}\) has an inverse \(\mathbf{\Sigma}^{-1}\) such that \(\mathbf{\Sigma}^{-1}\mathbf{\Sigma} = \mathbf{I}_{p \times p} = \mathbf{\Sigma \Sigma}^{-1}\)
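As a quick check of these properties, consider a small \(2 \times 2\) covariance matrix. The numbers are chosen purely for illustration and do not come from the text.

\[ \mathbf{\Sigma} = \left( \begin{array} {cc} 2 & 1 \\ 1 & 2 \end{array} \right), \quad |\mathbf{\Sigma} - \lambda \mathbf{I}| = (2 - \lambda)^2 - 1 = 0 \Rightarrow \lambda_1 = 3, \; \lambda_2 = 1 \]

\[ |\mathbf{\Sigma}| = 2 \cdot 2 - 1 \cdot 1 = 3 = \lambda_1 \lambda_2, \quad tr(\mathbf{\Sigma}) = 2 + 2 = 4 = \lambda_1 + \lambda_2 \]

Both eigenvalues are positive, so this \(\mathbf{\Sigma}\) is positive definite and has the inverse

\[ \mathbf{\Sigma}^{-1} = \frac{1}{3} \left( \begin{array} {cc} 2 & -1 \\ -1 & 2 \end{array} \right) \]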

Correlation Matrices

\[ \rho_{ij} = \frac{\sigma_{ij}}{\sqrt{\sigma_{ii} \sigma_{jj}}} \]

\[ \mathbf{R} = \left( \begin{array} {cccc} \rho_{11} & \rho_{12} & ... & \rho_{1p} \\ \rho_{21} & \rho_{22} & ... & \rho_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ \rho_{p1} & \rho_{p2} & ... & \rho_{pp} \\ \end{array} \right) \]

where \(\rho_{ij}\) is the correlation, and \(\rho_{ii} = 1\) for all \(i\)

Alternatively,

\[ \mathbf{R} = [diag(\mathbf{\Sigma})]^{-1/2}\mathbf{\Sigma}[diag(\mathbf{\Sigma})]^{-1/2} \]

where \(diag(\mathbf{\Sigma})\) is the matrix which has the \(\sigma_{ii}\) ’s on the diagonal and 0’s elsewhere

and \(\mathbf{A}^{1/2}\) (the square root of a symmetric matrix) is a symmetric matrix such that \(\mathbf{A} = \mathbf{A}^{1/2}\mathbf{A}^{1/2}\)

Let \(\mathbf{x}\) and \(\mathbf{y}\) be random vectors with means \(\mu_x\) and \(\mu_y\) and variance-covariance matrices \(\mathbf{\Sigma}_x\) and \(\mathbf{\Sigma}_y\) .

Let \(\mathbf{A}\) and \(\mathbf{B}\) be matrices of constants and \(\mathbf{c}\) and \(\mathbf{d}\) be vectors of constants
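The element-wise definition \(\rho_{ij} = \sigma_{ij} / \sqrt{\sigma_{ii} \sigma_{jj}}\) can be computed directly, and it gives the same result as the matrix form, because \([diag(\mathbf{\Sigma})]^{-1/2}\) simply rescales row \(i\) and column \(j\) by \(1/\sqrt{\sigma_{ii}}\) and \(1/\sqrt{\sigma_{jj}}\). The sketch below uses plain Python lists to stay dependency-free (a numerical library would be the natural choice in practice); the function name and the example matrix are assumptions made for illustration.

```python
import math


def correlation_from_covariance(sigma):
    """Return R with entries rho_ij = sigma_ij / sqrt(sigma_ii * sigma_jj)."""
    p = len(sigma)
    return [
        [sigma[i][j] / math.sqrt(sigma[i][i] * sigma[j][j]) for j in range(p)]
        for i in range(p)
    ]


# Illustrative covariance matrix (assumed values): variances 4 and 9, covariance 2.
sigma = [[4.0, 2.0], [2.0, 9.0]]
R = correlation_from_covariance(sigma)
```

Here the diagonal of `R` is 1 and the off-diagonal entry is \(2 / (2 \cdot 3) = 1/3\).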

\(E(\mathbf{Ay + c}) = \mathbf{A} \mu_y + \mathbf{c}\)

\(var(\mathbf{Ay + c}) = \mathbf{A} var(\mathbf{y})\mathbf{A}' = \mathbf{A \Sigma_y A}'\)

\(cov(\mathbf{Ay + c, By+ d}) = \mathbf{A\Sigma_y B}'\)

\(E(\mathbf{Ay + Bx + c}) = \mathbf{A \mu_y + B \mu_x + c}\)

\(var(\mathbf{Ay + Bx + c}) = \mathbf{A \Sigma_y A' + B \Sigma_x B' + A \Sigma_{yx}B' + B\Sigma'_{yx}A'}\)
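As an illustration of the rule \(var(\mathbf{Ay + c}) = \mathbf{A \Sigma_y A}'\), take \(p = 2\), \(\mathbf{A} = (1, -1)\) and \(\mathbf{c} = 0\), so that \(\mathbf{Ay + c} = y_1 - y_2\). The covariance matrix below is assumed for the example only.

\[ \mathbf{\Sigma}_y = \left( \begin{array} {cc} 2 & 1 \\ 1 & 2 \end{array} \right), \quad var(y_1 - y_2) = \mathbf{A \Sigma_y A}' = \left( \begin{array} {cc} 1 & -1 \end{array} \right) \left( \begin{array} {cc} 2 & 1 \\ 1 & 2 \end{array} \right) \left( \begin{array} {c} 1 \\ -1 \end{array} \right) = 2 \]

This agrees with the familiar scalar formula \(\sigma_{11} + \sigma_{22} - 2\sigma_{12} = 2 + 2 - 2 = 2\).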

Multivariate Normal Distribution