Statistics Made Easy

Introduction to Hypothesis Testing

A statistical hypothesis is an assumption about a population parameter.

For example, we may assume that the mean height of a male in the U.S. is 70 inches.

The assumption about the height is the statistical hypothesis and the true mean height of a male in the U.S. is the population parameter.

A hypothesis test is a formal statistical test we use to reject or fail to reject a statistical hypothesis.

The Two Types of Statistical Hypotheses

To test whether a statistical hypothesis about a population parameter is true, we obtain a random sample from the population and perform a hypothesis test on the sample data.

There are two types of statistical hypotheses:

The null hypothesis, denoted as H0, is the hypothesis that the sample data occurs purely from chance.

The alternative hypothesis, denoted as H1 or Ha, is the hypothesis that the sample data is influenced by some non-random cause.

Hypothesis Tests

A hypothesis test consists of five steps:

1. State the hypotheses.

State the null and alternative hypotheses. These two hypotheses need to be mutually exclusive, so if one is true then the other must be false.

2. Determine a significance level to use for the hypothesis.

Decide on a significance level. Common choices are .01, .05, and .1.

3. Find the test statistic.

Find the test statistic and the corresponding p-value. Often we are analyzing a population mean or proportion and the general formula to find the test statistic is: (sample statistic – population parameter) / (standard deviation of statistic)

4. Reject or fail to reject the null hypothesis.

Using the test statistic or the p-value, determine if you can reject or fail to reject the null hypothesis based on the significance level.

The p-value tells us the strength of the evidence against the null hypothesis: the smaller the p-value, the stronger the evidence. If the p-value is less than the significance level, we reject the null hypothesis.

5. Interpret the results.

Interpret the results of the hypothesis test in the context of the question being asked.
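As a rough illustration of steps 3 and 4, the sketch below computes a test statistic with the general formula and then applies the p-value rule. The numbers (36 men, a sample mean of 69.2 inches, a known standard deviation of 3 inches) and the function name `z_statistic` are made up for the example; only the formula and the decision rule come from the steps above.

```python
from math import sqrt
from statistics import NormalDist


def z_statistic(sample_stat, hypothesized_value, std_error):
    """(sample statistic - population parameter) / (standard deviation of statistic)."""
    return (sample_stat - hypothesized_value) / std_error


# Hypothetical data, not from the text: 36 men, sample mean 69.2 inches,
# population standard deviation assumed known and equal to 3 inches.
n, sample_mean, sigma, mu0 = 36, 69.2, 3.0, 70.0
alpha = 0.05  # significance level chosen in step 2

# Step 3: test statistic and two-tailed p-value for H0: mu = 70 vs Ha: mu != 70.
z = z_statistic(sample_mean, mu0, sigma / sqrt(n))
p_value = 2 * NormalDist().cdf(-abs(z))

# Step 4: reject H0 only if the p-value is below the significance level.
decision = "reject H0" if p_value < alpha else "fail to reject H0"
print(f"z = {z:.2f}, p-value = {p_value:.4f}, decision: {decision}")
```

With these invented numbers the p-value is larger than 0.05, so the sample would not give enough evidence to reject the claim that the mean height is 70 inches.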

The Two Types of Decision Errors

There are two types of decision errors that one can make when doing a hypothesis test:

Type I error: You reject the null hypothesis when it is actually true. The probability of committing a Type I error is equal to the significance level, often called alpha, and denoted as α.

Type II error: You fail to reject the null hypothesis when it is actually false. The probability of committing a Type II error is called beta, denoted as β. The power of the test is 1 - β, the probability of correctly rejecting a false null hypothesis.
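One way to see why the Type I error probability equals the significance level is to simulate many samples from a population where the null hypothesis really is true and count how often the test rejects it. The sketch below does this for a two-tailed z-test. The population values, sample size, number of trials, and the function name `type_i_error_rate` are all assumptions chosen for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def type_i_error_rate(alpha, mu0=70.0, sigma=3.0, n=25, trials=10_000, seed=0):
    """Estimate how often a two-tailed z-test rejects H0 when H0 is true."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    rejections = 0
    for _ in range(trials):
        # Draw a sample from a population whose mean really is mu0.
        sample = [rng.gauss(mu0, sigma) for _ in range(n)]
        z = (fmean(sample) - mu0) / (sigma / sqrt(n))
        p_value = 2 * std_normal.cdf(-abs(z))
        if p_value < alpha:
            rejections += 1  # a Type I error: H0 is true but was rejected
    return rejections / trials


rate = type_i_error_rate(alpha=0.05)
print(f"Estimated Type I error rate: {rate:.3f}")
```

The estimated rate should land close to the chosen α, up to simulation noise.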

One-Tailed and Two-Tailed Tests

A statistical hypothesis can be one-tailed or two-tailed.

A one-tailed hypothesis involves making a “greater than” or “less than” statement.

For example, suppose we assume the mean height of a male in the U.S. is greater than or equal to 70 inches. The null hypothesis would be H0: µ ≥ 70 inches and the alternative hypothesis would be Ha: µ < 70 inches.

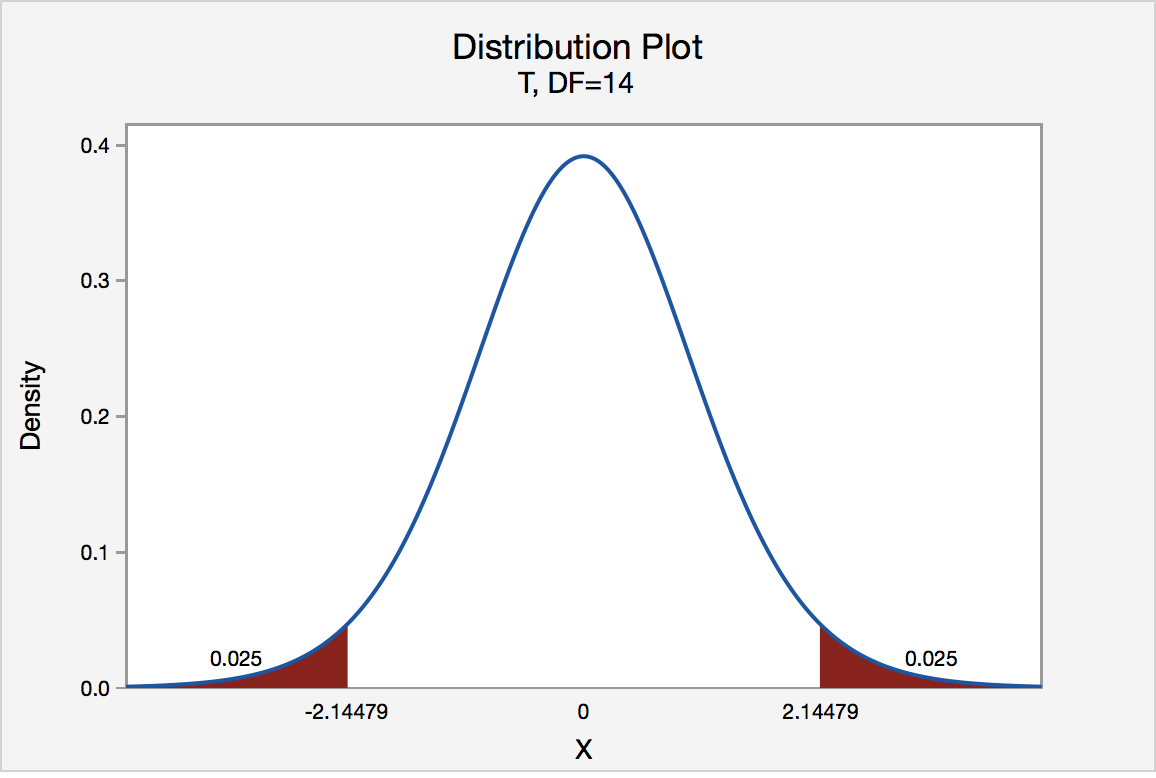

A two-tailed hypothesis involves making an “equal to” or “not equal to” statement.

For example, suppose we assume the mean height of a male in the U.S. is equal to 70 inches. The null hypothesis would be H0: µ = 70 inches and the alternative hypothesis would be Ha: µ ≠ 70 inches.

Note: The “equal” sign is always included in the null hypothesis, whether it is =, ≥, or ≤.

Related: What is a Directional Hypothesis?

Types of Hypothesis Tests

There are many different types of hypothesis tests you can perform depending on the type of data you’re working with and the goal of your analysis.

The following tutorials provide an explanation of the most common types of hypothesis tests:

Introduction to the One Sample t-test

Introduction to the Two Sample t-test

Introduction to the Paired Samples t-test

Introduction to the One Proportion Z-Test

Introduction to the Two Proportion Z-Test

Published by Zach


7.1: Basics of Hypothesis Testing


- Kathryn Kozak

- Coconino Community College

To understand the process of a hypothesis test, you first need to understand what a hypothesis is: an educated guess about a parameter. Once you have the hypothesis, you collect data and use the data to determine whether there is enough evidence to show that the hypothesis is true. However, in hypothesis testing you actually assume something else is true, and then you look at your data to see how likely it is to get an event like the one your data demonstrates under that assumption. If the event is very unusual, then you might think that your assumption is actually false. If you are able to say this assumption is false, then your hypothesis must be true. This is similar to a proof by contradiction: you assume the opposite of your hypothesis is true and show that it can’t be true.

All hypothesis tests go through the same process. Once you have the process down, the concept is much easier. It is easier to see the process by looking at an example. Concepts that are needed will be detailed in this example.

Example \(\PageIndex{1}\) basics of hypothesis testing

Suppose a manufacturer of the XJ35 battery claims the mean life of the battery is 500 days with a standard deviation of 25 days. You are the buyer of this battery and you think this claim is inflated. You would like to test your belief because without a good reason you can’t get out of your contract.

What do you do?

Well first, you should know what you are trying to measure. Define the random variable.

Let x = life of a XJ35 battery

Now you are not just trying to find different x values. You are trying to find what the true mean is. Since you are trying to find it, it must be unknown. You don’t think it is 500 days. If you did, you wouldn’t be doing any testing. The true mean, \(\mu\), is unknown. That means you should define that too.

Let \(\mu\)= mean life of a XJ35 battery

You may want to collect a sample. What kind of sample?

You could ask the manufacturers to give you batteries, but there is a chance that there could be some bias in the batteries they pick. To reduce the chance of bias, it is best to take a random sample.

How big should the sample be?

A sample of size 30 or more means that you can use the central limit theorem. Pick a sample of size 30.

Example \(\PageIndex{1}\) contains the data for the sample you collected:

Now what should you do? Looking at the data set, you see some of the times are above 500 and some are below. But looking at all of the numbers is too difficult. It might be helpful to calculate the mean for this sample.

The sample mean is \(\overline{x} = 490\) days. Looking at the sample mean, one might think that you are right. However, the standard deviation and the sample size also play a role, so maybe you are wrong.

Before going any farther, it is time to formalize a few definitions.

You have a guess that the mean life of a battery is less than 500 days. This is opposed to what the manufacturer claims. There really are two hypotheses, which are just guesses here – the one that the manufacturer claims and the one that you believe. It is helpful to have names for them.

Definition \(\PageIndex{1}\)

Null Hypothesis : historical value, claim, or product specification. The symbol used is \(H_{o}\).

Definition \(\PageIndex{2}\)

Alternative Hypothesis : what you want to prove. This is what you want to accept as true when you reject the null hypothesis. There are two symbols that are commonly used for the alternative hypothesis: \(H_{A}\) or \(H_{1}\). The symbol \(H_{A}\) will be used in this book.

In general, the hypotheses look something like this:

\(H_{o} : \mu=\mu_{o}\)

\(H_{A} : \mu<\mu_{o}\)

where \(\mu_{o}\) just represents the value that the claim says the population mean is actually equal to.

Also, \(H_{A}\) can be less than, greater than, or not equal to.

For this problem:

\(H_{o} : \mu=500\) days, since the manufacturer says the mean life of a battery is 500 days.

\(H_{A} : \mu<500\) days, since you believe that the mean life of the battery is less than 500 days.

Now back to the mean. You have a sample mean of 490 days. Is this small enough to believe that you are right and the manufacturer is wrong? How small does it have to be?

If you calculated a sample mean of 235, you would definitely believe the population mean is less than 500. But even if you had a sample mean of 435 you would probably believe that the true mean was less than 500. What about 475? Or 483? There is some point where you would stop being so sure that the population mean is less than 500. That point separates the values of where you are sure or pretty sure that the mean is less than 500 from the area where you are not so sure. How do you find that point?

Well it depends on how much error you want to make. Of course you don’t want to make any errors, but unfortunately that is unavoidable in statistics. You need to figure out how much error you made with your sample. Take the sample mean, and find the probability of getting another sample mean less than it, assuming for the moment that the manufacturer is right. The idea behind this is that you want to know what is the chance that you could have come up with your sample mean even if the population mean really is 500 days.

You want to find \(P\left(\overline{x}<490 | H_{o} \text { is true }\right)=P(\overline{x}<490 | \mu=500)\)

To compute this probability, you need to know how the sample mean is distributed. Since the sample size is at least 30, then you know the sample mean is approximately normally distributed. Remember \(\mu_{\overline{x}}=\mu\) and \(\sigma_{\overline{x}}=\dfrac{\sigma}{\sqrt{n}}\)
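The two facts just quoted, \(\mu_{\overline{x}}=\mu\) and \(\sigma_{\overline{x}}=\sigma / \sqrt{n}\), can be checked with a small simulation. The sketch below draws many samples of size 30 from a population that is deliberately not normal and compares the spread of the sample means with \(\sigma / \sqrt{n}\). The uniform population on 450 to 550 days and the number of replicates are assumptions made only for this illustration; they are not the battery data.

```python
import random
from math import sqrt
from statistics import fmean, pstdev

rng = random.Random(1)
low, high = 450.0, 550.0   # hypothetical, non-normal population: uniform on [450, 550]
n, replicates = 30, 5_000

pop_mean = (low + high) / 2
pop_sd = (high - low) / sqrt(12)   # standard deviation of a uniform distribution

# Take many samples of size n and record each sample mean.
sample_means = [
    fmean([rng.uniform(low, high) for _ in range(n)])
    for _ in range(replicates)
]

observed_mean = fmean(sample_means)
observed_sd = pstdev(sample_means)
theoretical_se = pop_sd / sqrt(n)

print(f"mean of sample means: {observed_mean:.2f} (population mean {pop_mean:.2f})")
print(f"sd of sample means:   {observed_sd:.3f} (sigma / sqrt(n) = {theoretical_se:.3f})")
```

The simulated values should agree closely with the formulas, and a histogram of `sample_means` would look roughly bell-shaped even though the population is flat.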

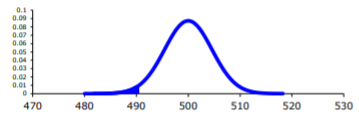

A picture is always useful.


Before calculating the probability, it is useful to see how many standard deviations away from the mean the sample mean is. Using the formula for the z-score from chapter 6, you find

\(z=\dfrac{\overline{x}-\mu_{o}}{\sigma / \sqrt{n}}=\dfrac{490-500}{25 / \sqrt{30}}=-2.19\)

This sample mean is more than two standard deviations away from the mean. That seems pretty far, but you should look at the probability too.

On TI-83/84:

\(P(\overline{x}<490 | \mu=500)=\text { normalcdf }(-1 E 99,490,500,25 \div \sqrt{30}) \approx 0.0142\)

On R:

\(P(\overline{x}<490 | \mu=500)=\text { pnorm }(490,500,25 / \operatorname{sqrt}(30)) \approx 0.0142\)
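For readers without a TI-83/84 or R, the same probability can be sketched in Python with the standard library's `statistics.NormalDist`. This is only a counterpart to the calculator commands above, using the numbers from the battery example; the variable names are arbitrary.

```python
from math import sqrt
from statistics import NormalDist

mu0, sigma, n, sample_mean = 500, 25, 30, 490   # values from the battery example

std_error = sigma / sqrt(n)                     # standard deviation of the sample mean
z = (sample_mean - mu0) / std_error             # z-score of the observed sample mean

# The same left-tail probability computed two ways:
# directly on the distribution of the sample mean, and from the z-score.
p_direct = NormalDist(mu=mu0, sigma=std_error).cdf(sample_mean)
p_from_z = NormalDist().cdf(z)

print(f"z = {z:.2f}")
print(f"P(x-bar < 490 | mu = 500) = {p_direct:.4f}")
```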

There is a 1.42% chance that you could find a sample mean less than 490 when the population mean is 500 days. This is really small, so the chances are that the assumption that the population mean is 500 days is wrong, and you can reject the manufacturer’s claim. But how do you quantify really small? Is 5% or 10% or 15% really small? How do you decide?

Before you answer that question, a couple more definitions are needed.

Definition \(\PageIndex{3}\)

Test Statistic : \(z=\dfrac{\overline{x}-\mu_{o}}{\sigma / \sqrt{n}}\) since it is calculated as part of the testing of the hypothesis.

Definition \(\PageIndex{4}\)

p-value : probability that the test statistic will take on values at least as extreme as the observed test statistic, given that the null hypothesis is true. It is the probability that was calculated above.

Now, how small is small enough? To answer that, you really want to know the types of errors you can make.

There are actually only two errors that can be made. The first error is if you say that \(H_{o}\) is false, when in fact it is true. This means you reject \(H_{o}\) when \(H_{o}\) was true. The second error is if you say that \(H_{o}\) is true, when in fact it is false. This means you fail to reject \(H_{o}\) when \(H_{o}\) is false. The following table organizes this for you:

Type of errors:

Definition \(\PageIndex{5}\)

Type I Error is rejecting \(H_{o}\) when \(H_{o}\) is true, and

Definition \(\PageIndex{6}\)

Type II Error is failing to reject \(H_{o}\) when \(H_{o}\) is false.

Since these are the errors, then one can define the probabilities attached to each error.

Definition \(\PageIndex{7}\)

\(\alpha\) = P(type I error) = P(rejecting \(H_{o}\) | \(H_{o}\) is true)

Definition \(\PageIndex{8}\)

\(\beta\) = P(type II error) = P(failing to reject \(H_{o}\) | \(H_{o}\) is false)

\(\alpha\) is also called the level of significance .

Another common concept that is used is Power = \(1-\beta \).

Now there is a relationship between \(\alpha\) and \(\beta\). They are not complements of each other. How are they related?

If \(\alpha\) increases that means the chances of making a type I error will increase. It is more likely that a type I error will occur. It makes sense that you are less likely to make type II errors, only because you will be rejecting \(H_{o}\) more often. You will be failing to reject \(H_{o}\) less, and therefore, the chance of making a type II error will decrease. Thus, as \(\alpha\) increases, \(\beta\) will decrease, and vice versa. That makes them seem like complements, but they aren’t complements. What gives? Consider one more factor – sample size.

Consider a larger sample that is representative of the population. It makes sense that you have more accuracy than with a smaller sample. Think of it this way: which would you trust more, a sample mean of 490 if you had a sample size of 35 or a sample size of 350 (assuming a representative sample)? Of course the 350, because there are more data points and so more accuracy. If you are more accurate, then there is less chance that you will make any error. By increasing the sample size of a representative sample, you can decrease both \(\alpha\) and \(\beta\).

Summary of all of this:

- For a certain sample size, n , if \(\alpha\) increases, \(\beta\) decreases.

- For a certain level of significance, \(\alpha\), if n increases, \(\beta\) decreases.
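The two summary statements can be checked numerically for the battery test. Computing \(\beta\) requires assuming a specific true mean, which the text does not give, so the sketch below takes a hypothetical true mean of 490 days. The function name `beta_left_tailed` and the second sample size of 60 are also choices made for this illustration.

```python
from math import sqrt
from statistics import NormalDist


def beta_left_tailed(alpha, n, true_mean, mu0=500.0, sigma=25.0):
    """P(fail to reject Ho | the mean is really true_mean) for a left-tailed z-test."""
    std_error = sigma / sqrt(n)
    # Ho is rejected when the sample mean falls below this cutoff.
    cutoff = mu0 + NormalDist().inv_cdf(alpha) * std_error
    # Type II error: the sample mean lands at or above the cutoff anyway.
    return 1 - NormalDist(mu=true_mean, sigma=std_error).cdf(cutoff)


assumed_true_mean = 490.0   # hypothetical value, needed to compute beta at all

for n in (30, 60):
    for alpha in (0.01, 0.05, 0.10):
        beta = beta_left_tailed(alpha, n, assumed_true_mean)
        print(f"n = {n:2d}, alpha = {alpha:.2f}: beta = {beta:.3f}, power = {1 - beta:.3f}")
```

Reading down the output for a fixed n shows \(\beta\) shrinking as \(\alpha\) grows, and comparing the two blocks for a fixed \(\alpha\) shows \(\beta\) shrinking as n grows.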

Now how do you find \(\alpha\) and \(\beta\)? Well, \(\alpha\) is actually chosen. There are only three values that are usually picked for \(\alpha\): 0.01, 0.05, and 0.10. \(\beta\) is very difficult to find, so usually it isn’t found. If you want to make sure it is small, you take as large a sample as you can afford, provided it is a representative sample. This is one use of the Power. You want \(\beta\) to be small and the Power of the test to be large. The Power word sounds good.

Which value of \(\alpha\) do you pick? Well, that depends on what you are working on. Remember in this example you are the buyer who is trying to get out of a contract to buy these batteries. If you make a type I error, you say that the batteries are bad when they aren’t, and most likely the manufacturer will sue you. You want to avoid this. You might pick \(\alpha\) to be 0.01. This way you have a small chance of making a type I error. Of course this means you have more of a chance of making a type II error. No big deal, right? What if the batteries are used in pacemakers and you tell the person that their pacemaker’s batteries are good for 500 days when they actually last less? That might be bad. If you make a type II error, you say that the batteries do last 500 days when they last less, and then you have the possibility of killing someone. You certainly do not want to do this. In this case you might want to pick \(\alpha\) as 0.10. If both errors are equally bad, then pick \(\alpha\) as 0.05.

The above discussion is why the choice of \(\alpha\) depends on what you are researching. As the researcher, you are the one who needs to decide what \(\alpha\) level to use based on your analysis of the consequences of making each error.

If a type I error is really bad, then pick \(\alpha\) = 0.01.

If a type II error is really bad, then pick \(\alpha\) = 0.10

If neither error is bad, or both are equally bad, then pick \(\alpha\) = 0.05

The main thing is to always pick the \(\alpha\) before you collect the data and start the test.

The above discussion was long, but it is really important information. If you don’t know what the errors of the test are about, then there really is no point in making conclusions with the tests. Make sure you understand what the two errors are and what the probabilities are for them.

Now it is time to go back to the example and put this all together. This is the basic structure of testing a hypothesis, usually called a hypothesis test. Since this one has a test statistic involving z, it is also called a z-test. And since there is only one sample, it is usually called a one-sample z-test.

Example \(\PageIndex{2}\) battery example revisited

1. State the random variable and the parameter in words.

2. State the null and alternative hypotheses and the level of significance.

3. State and check the assumptions for a hypothesis test.

- A random sample of size n is taken.

- The population standard deviation is known.

- The sample size is at least 30 or the population of the random variable is normally distributed.

4. Find the sample statistic, test statistic, and p-value.

5. Conclusion

6. Interpretation

Solution

1. x = life of battery

\(\mu\) = mean life of a XJ35 battery

2. \(H_{o} : \mu=500\) days

\(H_{A} : \mu<500\) days

\(\alpha = 0.10\) (from above discussion about consequences)

3. Every hypothesis test has some assumptions that must be met to make sure that the results of the test are valid. The assumptions are different for each test. This test has the following assumptions.

- A random sample of size n is taken. This occurred in this example, since it was stated that a random sample of 30 battery lives was taken.

- The population standard deviation is known. This is true, since it was given in the problem.

- The sample size is at least 30 or the population of the random variable is normally distributed. The sample size was 30, so this condition is met.

4. The test statistic depends on how many samples there are, what parameter you are testing, and assumptions that need to be checked. In this case, there is one sample and you are testing the mean. The assumptions were checked above.

Sample statistic:

\(\overline{x} = 490\)

Test statistic:

\(z=\dfrac{\overline{x}-\mu_{o}}{\sigma / \sqrt{n}}=\dfrac{490-500}{25 / \sqrt{30}}=-2.19\)

Using TI-83/84:

\(P(\overline{x}<490 | \mu=500)=\text { normalcdf }(-1 \mathrm{E} 99,490,500,25 / \sqrt{30}) \approx 0.0142\)

Using R:

\(P(\overline{x}<490 | \mu=500)=\operatorname{pnorm}(490,500,25 / \operatorname{sqrt}(30)) \approx 0.0142\)

5. Now what? Well, this p-value is 0.0142. This is a lot smaller than the amount of error you would accept in the problem, \(\alpha\) = 0.10. That means that finding a sample mean less than 490 days would be unusual if \(H_{o}\) is true. This should make you think that \(H_{o}\) is not true. You should reject \(H_{o}\).

In fact, in general:

Reject \(H_{o}\) if the p-value < \(\alpha\) and

Fail to reject \(H_{o}\) if the p-value \(\geq \alpha\).

6. Since you rejected \(H_{o}\), what does this mean in the real world? That is what goes in the interpretation. Since you rejected the claim by the manufacturer that the mean life of the batteries is 500 days, then you now can believe that your hypothesis was correct. In other words, there is enough evidence to show that the mean life of the battery is less than 500 days.

Now that you know that the batteries last less than 500 days, should you cancel the contract? Statistically, there is evidence that the batteries do not last as long as the manufacturer says they should. However, based on this sample there are only ten days less on average that the batteries last. There may not be practical significance in this case. Ten days do not seem like a large difference. In reality, if the batteries are used in pacemakers, then you would probably tell the patient to have the batteries replaced every year. You have a large buffer whether the batteries last 490 days or 500 days. It seems that it might not be worth it to break the contract over ten days. What if the 10 days was practically significant? Are there any other things you should consider? You might look at the business relationship with the manufacturer. You might also look at how much it would cost to find a new manufacturer. These are also questions to consider before making any changes. What this discussion should show you is that just because a hypothesis has statistical significance does not mean it has practical significance. The hypothesis test is just one part of a research process. There are other pieces that you need to consider.

That’s it. That is what a hypothesis test looks like. All hypothesis tests are done with the same six steps. Those general six steps are outlined below.

- State the random variable and the parameter in words. This is where you are defining what the unknowns are in this problem.

x = random variable

\(\mu\) = mean of random variable, if the parameter of interest is the mean. There are other parameters you can test, and you would use the appropriate symbol for that parameter.

- State the null and alternative hypotheses and the level of significance.

\(H_{o} : \mu=\mu_{o}\), where \(\mu_{o}\) is the known mean

\(H_{A} : \mu<\mu_{o}\)

\(H_{A} : \mu>\mu_{o}\), use the appropriate one for your problem

\(H_{A} : \mu \neq \mu_{o}\)

Also, state your \(\alpha\) level here.

- State and check the assumptions for a hypothesis test. Each hypothesis test has its own assumptions. They will be stated when the different hypothesis tests are discussed.

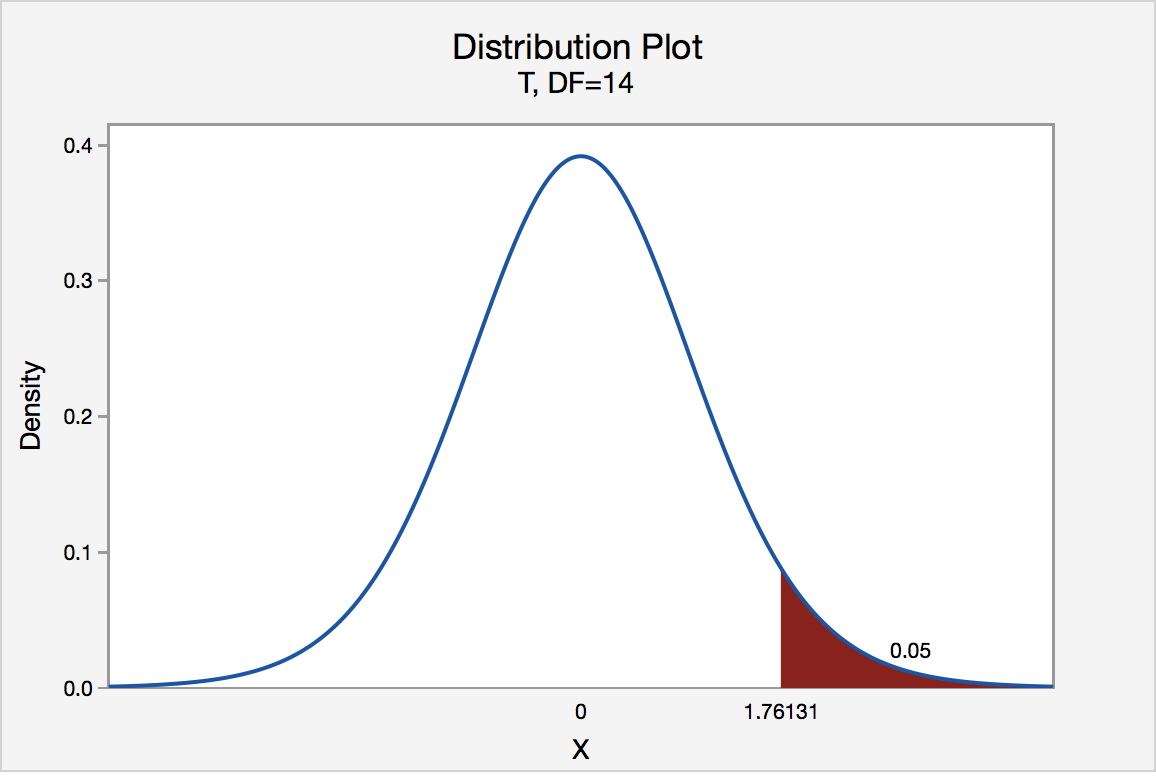

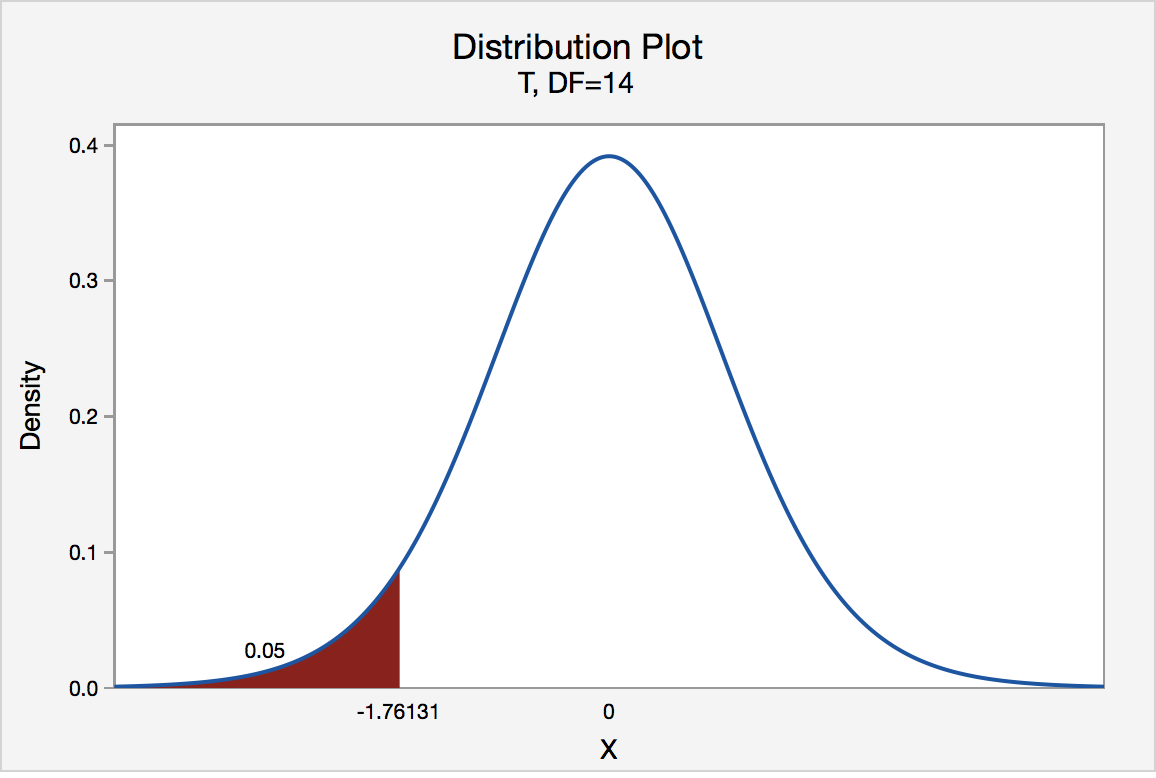

- Find the sample statistic, test statistic, and p-value. This depends on what parameter you are working with, how many samples, and the assumptions of the test. The p-value depends on your \(H_{A}\). If you are doing the \(H_{A}\) with the less than, then it is a left-tailed test, and you find the probability of being in that left tail. If you are doing the \(H_{A}\) with the greater than, then it is a right-tailed test, and you find the probability of being in the right tail. If you are doing the \(H_{A}\) with the not equal to, then you are doing a two-tail test, and you find the probability of being in both tails. Because of symmetry, you could find the probability in one tail and double this value to find the probability in both tails.

- Conclusion: This is where you write reject \(H_{o}\) or fail to reject \(H_{o}\). The rule is: if the p-value < \(\alpha\), then reject \(H_{o}\). If the p-value \(\geq \alpha\), then fail to reject \(H_{o}\).

- Interpretation: This is where you interpret in real world terms the conclusion to the test. The conclusion for a hypothesis test is that you either have enough evidence to show \(H_{A}\) is true, or you do not have enough evidence to show \(H_{A}\) is true.
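The calculation and conclusion steps can be collected into one small function. The sketch below is a minimal one-sample z-test that picks the tail of the p-value from the form of \(H_{A}\) and then applies the rejection rule. The function name `one_sample_z_test` and its `tail` argument are hypothetical; it assumes the z-test conditions (random sample, known \(\sigma\), and n at least 30 or a normal population) have already been checked by hand.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_z_test(sample_mean, mu0, sigma, n, alpha, tail):
    """One-sample z-test.

    tail: 'left'  for HA: mu < mu0,
          'right' for HA: mu > mu0,
          'two'   for HA: mu != mu0.
    """
    z = (sample_mean - mu0) / (sigma / sqrt(n))
    left_area = NormalDist().cdf(z)
    if tail == "left":
        p_value = left_area
    elif tail == "right":
        p_value = 1 - left_area
    elif tail == "two":
        # By symmetry, double the smaller tail area.
        p_value = 2 * min(left_area, 1 - left_area)
    else:
        raise ValueError("tail must be 'left', 'right', or 'two'")
    conclusion = "reject Ho" if p_value < alpha else "fail to reject Ho"
    return z, p_value, conclusion


# Battery example: Ho: mu = 500, HA: mu < 500, alpha = 0.10.
z, p_value, conclusion = one_sample_z_test(490, 500, 25, 30, alpha=0.10, tail="left")
print(f"z = {z:.2f}, p-value = {p_value:.4f}, conclusion: {conclusion}")
```

The interpretation step is left out on purpose, since putting the conclusion into real-world terms depends on the problem and cannot be automated.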

Sorry, one more concept about the conclusion and interpretation. First, the conclusion is that you reject \(H_{o}\) or you fail to reject \(H_{o}\). Why was it said like this? It is because you never accept the null hypothesis. If you wanted to accept the null hypothesis, then why do the test in the first place? In the interpretation, you either have enough evidence to show \(H_{A}\) is true, or you do not have enough evidence to show \(H_{A}\) is true. You wouldn’t want to go to all this work and then find out you wanted to accept the claim. Why go through the trouble? You always want to show that the alternative hypothesis is true. Sometimes you can do that and sometimes you can’t. It doesn’t mean you proved the null hypothesis; it just means you can’t prove the alternative hypothesis. Here is an example to demonstrate this.

Example \(\PageIndex{3}\) conclusion in hypothesis tests

In the U.S. court system a jury trial could be set up as a hypothesis test. To really help you see how this works, let’s use OJ Simpson as an example. In the court system, a person is presumed innocent until he/she is proven guilty, and this is your null hypothesis. OJ Simpson was a football player in the 1970s. In 1994 his ex-wife and her friend were killed. OJ Simpson was accused of the crime, and in 1995 the case was tried. The prosecutors wanted to prove OJ was guilty of killing his ex-wife and her friend, and that is the alternative hypothesis.

\(H_{0}\): OJ is innocent of killing his wife and her friend

\(H_{A}\): OJ is guilty of killing his wife and her friend

In this case, a verdict of not guilty was given. That does not mean that he is innocent of this crime. It means there was not enough evidence to prove he was guilty. Many people believe that OJ was guilty of this crime, but the jury did not feel that the evidence presented was enough to show there was guilt. The verdict in a jury trial is always guilty or not guilty!

The same is true in a hypothesis test. There is either enough or not enough evidence to support the alternative hypothesis. It is not that you proved the null hypothesis true.

When identifying hypotheses, it is important to state your random variable and the appropriate parameter you want to make a decision about. If you count something, then the random variable is the number of whatever you counted. The parameter is the proportion of what you counted. If the random variable is something you measured, then the parameter is the mean of what you measured. (Note: there are other parameters you can calculate, and some analysis of those will be presented in later chapters.)

Example \(\PageIndex{4}\) stating hypotheses

Identify the hypotheses necessary to test the following statements:

a. The average salary of a teacher is more than $30,000.

b. The proportion of students who like math is less than 10%.

c. The average age of students in this class differs from 21.

a. x = salary of teacher

\(\mu\) = mean salary of teacher

The guess is that \(\mu>\$ 30,000\) and that is the alternative hypothesis.

The null hypothesis has the same parameter and number with an equal sign.

\(\begin{array}{l}{H_{0} : \mu=\$ 30,000} \\ {H_{A} : \mu>\$ 30,000}\end{array}\)

b. x = number of students who like math

p = proportion of students who like math

The guess is that p < 0.10 and that is the alternative hypothesis.

\(\begin{array}{l}{H_{0} : p=0.10} \\ {H_{A} : p<0.10}\end{array}\)

c. x = age of students in this class

\(\mu\) = mean age of students in this class

The guess is that \(\mu \neq 21\) and that is the alternative hypothesis.

\(\begin{array}{c}{H_{0} : \mu=21} \\ {H_{A} : \mu \neq 21}\end{array}\)

Example \(\PageIndex{5}\) Stating Type I and II Errors and Picking Level of Significance

- The plant-breeding department at a major university developed a new hybrid raspberry plant called YumYum Berry. Based on research data, the claim is made that from the time shoots are planted 90 days on average are required to obtain the first berry with a standard deviation of 9.2 days. A corporation that is interested in marketing the product tests 60 shoots by planting them and recording the number of days before each plant produces its first berry. The sample mean is 92.3 days. The corporation wants to know if the mean number of days is more than the 90 days claimed. State the type I and type II errors in terms of this problem, consequences of each error, and state which level of significance to use.

- A concern was raised in Australia that the percentage of deaths of Aboriginal prisoners was higher than the percent of deaths of non-indigenous prisoners, which is 0.27%. State the type I and type II errors in terms of this problem, consequences of each error, and state which level of significance to use.

a. x = time to first berry for YumYum Berry plant

\(\mu\) = mean time to first berry for YumYum Berry plant

\(\begin{array}{l}{H_{0} : \mu=90} \\ {H_{A} : \mu>90}\end{array}\)

Type I error: If the corporation makes a type I error, then they will say that the plants take longer than 90 days to produce when they don’t. They probably will not want to market the plants if they think they will take longer. They will not market them even though in reality the plants do produce in 90 days. They may have loss of future earnings, but that is all.

Type II error: The corporation does not say that the plants take longer than 90 days to produce when they do take longer. Most likely they will market the plants. The plants will take longer, and so customers might get upset and then the company would get a bad reputation. This would be really bad for the company.

Level of significance: It appears that the corporation would not want to make a type II error. Pick a 10% level of significance, \(\alpha = 0.10\).

b. x = number of Aboriginal prisoners who have died

p = proportion of Aboriginal prisoners who have died

\(\begin{array}{l}{H_{o} : p=0.27 \%} \\ {H_{A} : p>0.27 \%}\end{array}\)

Type I error: Rejecting that the proportion of Aboriginal prisoners who died was 0.27%, when in fact it was 0.27%. This would mean you would say there is a problem when there isn’t one. You could anger the Aboriginal community, and spend time and energy researching something that isn’t a problem.

Type II error: Failing to reject that the proportion of Aboriginal prisoners who died was 0.27%, when in fact it is higher than 0.27%. This would mean that you wouldn’t think there was a problem with Aboriginal prisoners dying when there really is a problem. You risk causing deaths when there could be a way to avoid them.

Level of significance: It appears that both errors may be issues in this case. You wouldn’t want to anger the Aboriginal community when there isn’t an issue, and you wouldn’t want people to die when there may be a way to stop it. It may be best to pick a 5% level of significance, \(\alpha = 0.05\).

Hypothesis testing is really easy if you follow the same recipe every time. The only differences in the various problems are the assumptions of the test and the test statistic you calculate so you can find the p-value. Do the same steps, in the same order, with the same words, every time and these problems become very easy.

Exercise \(\PageIndex{1}\)

For the problems in this section, a question is being asked. This is to help you understand what the hypotheses are. You are not to run any hypothesis tests and come up with any conclusions in this section.

- Eyeglassomatic manufactures eyeglasses for different retailers. They test to see how many defective lenses they made in a given time period and found that 11% of all lenses had defects of some type. Looking at the type of defects, they found in a three-month time period that out of 34,641 defective lenses, 5865 were due to scratches. Are there more defects from scratches than from all other causes? State the random variable, population parameter, and hypotheses.

- According to the February 2008 Federal Trade Commission report on consumer fraud and identity theft, 23% of all complaints in 2007 were for identity theft. In that year, Alaska had 321 complaints of identity theft out of 1,432 consumer complaints ("Consumer fraud and," 2008). Does this data provide enough evidence to show that Alaska had a lower proportion of identity theft than 23%? State the random variable, population parameter, and hypotheses.

- The Kyoto Protocol was signed in 1997, and required countries to start reducing their carbon emissions. The protocol became enforceable in February 2005. In 2004, the mean CO2 emission was 4.87 metric tons per capita. Is there enough evidence to show that the mean CO2 emission is lower in 2010 than in 2004? State the random variable, population parameter, and hypotheses.

- The FDA regulates that fish that is consumed is allowed to contain 1.0 mg/kg of mercury. In Florida, bass fish were collected in 53 different lakes to measure the amount of mercury in the fish. The data for the average amount of mercury in each lake is in Example \(\PageIndex{5}\) ("Multi-disciplinary niser activity," 2013). Do the data provide enough evidence to show that the fish in Florida lakes has more mercury than the allowable amount? State the random variable, population parameter, and hypotheses.

- Eyeglassomatic manufactures eyeglasses for different retailers. They test to see how many defective lenses they made in a given time period and found that 11% of all lenses had defects of some type. Looking at the type of defects, they found in a three-month time period that out of 34,641 defective lenses, 5865 were due to scratches. Are there more defects from scratches than from all other causes? State the type I and type II errors in this case, consequences of each error type for this situation from the perspective of the manufacturer, and the appropriate alpha level to use. State why you picked this alpha level.

- According to the February 2008 Federal Trade Commission report on consumer fraud and identity theft, 23% of all complaints in 2007 were for identity theft. In that year, Alaska had 321 complaints of identity theft out of 1,432 consumer complaints ("Consumer fraud and," 2008). Does this data provide enough evidence to show that Alaska had a lower proportion of identity theft than 23%? State the type I and type II errors in this case, consequences of each error type for this situation from the perspective of the state of Alaska, and the appropriate alpha level to use. State why you picked this alpha level.

- The Kyoto Protocol was signed in 1997, and required countries to start reducing their carbon emissions. The protocol became enforceable in February 2005. In 2004, the mean CO2 emission was 4.87 metric tons per capita. Is there enough evidence to show that the mean CO2 emission is lower in 2010 than in 2004? State the type I and type II errors in this case, consequences of each error type for this situation from the perspective of the agency overseeing the protocol, and the appropriate alpha level to use. State why you picked this alpha level.

- The FDA regulates that fish that is consumed is allowed to contain 1.0 mg/kg of mercury. In Florida, bass fish were collected in 53 different lakes to measure the amount of mercury in the fish. The data for the average amount of mercury in each lake is in Example \(\PageIndex{5}\) ("Multi-disciplinary niser activity," 2013). Do the data provide enough evidence to show that the fish in Florida lakes has more mercury than the allowable amount? State the type I and type II errors in this case, consequences of each error type for this situation from the perspective of the FDA, and the appropriate alpha level to use. State why you picked this alpha level.

1. \(H_{o} : p=0.11, H_{A} : p>0.11\)

3. \(H_{o} : \mu=4.87 \text { metric tons per capita, } H_{A} : \mu<4.87 \text { metric tons per capita }\)

5. See solutions

7. See solutions



An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors

Priya Ranganathan

1 Department of Anesthesiology, Critical Care and Pain, Tata Memorial Hospital, Mumbai, Maharashtra, India

2 Department of Surgical Oncology, Tata Memorial Centre, Mumbai, Maharashtra, India

The second article in this series on biostatistics covers the concepts of sample, population, research hypotheses and statistical errors.

How to cite this article

Ranganathan P, Pramesh CS. An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors. Indian J Crit Care Med 2019;23(Suppl 3):S230–S231.

Two papers quoted in this issue of the Indian Journal of Critical Care Medicine report the results of studies that aim to prove that a new intervention is better than (superior to) an existing treatment. In the ABLE study, the investigators wanted to show that transfusion of fresh red blood cells would be superior to standard-issue red cells in reducing 90-day mortality in ICU patients. 1 The PROPPR study was designed to prove that transfusion of a lower ratio of plasma and platelets to red cells would be superior to a higher ratio in decreasing 24-hour and 30-day mortality in critically ill patients. 2 These studies are known as superiority studies (as opposed to noninferiority or equivalence studies, which will be discussed in a subsequent article).

SAMPLE VERSUS POPULATION

A sample represents a group of participants selected from the entire population. Since studies cannot be carried out on entire populations, researchers choose samples, which are representative of the population. This is similar to walking into a grocery store and examining a few grains of rice or wheat before purchasing an entire bag; we assume that the few grains that we select (the sample) are representative of the entire sack of grains (the population).

The results of the study are then extrapolated to generate inferences about the population. We do this using a process known as hypothesis testing. This means that the results of the study may not always be identical to the results we would expect to find in the population; i.e., there is the possibility that the study results may be erroneous.

HYPOTHESIS TESTING

A clinical trial begins with an assumption or belief, and then proceeds to either prove or disprove this assumption. In statistical terms, this belief or assumption is known as a hypothesis. Counterintuitively, what the researcher believes in (or is trying to prove) is called the “alternate” hypothesis, and the opposite is called the “null” hypothesis; every study has a null hypothesis and an alternate hypothesis. For superiority studies, the alternate hypothesis states that one treatment (usually the new or experimental treatment) is superior to the other; the null hypothesis states that there is no difference between the treatments (the treatments are equal). For example, in the ABLE study, we start by stating the null hypothesis: there is no difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. We then state the alternate hypothesis: there is a difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. It is important to note that we have stated that the groups are different, without specifying which group will be better than the other. This is known as a two-tailed hypothesis, and it allows us to test for superiority on either side (using a two-sided test). This is because, when we start a study, we are not 100% certain that the new treatment can only be better than the standard treatment; it could be worse, and if so, the study should pick that up as well. One-tailed hypotheses and one-sided statistical tests are used for non-inferiority studies, which will be discussed in a subsequent paper in this series.

STATISTICAL ERRORS

There are two possibilities to consider when interpreting the results of a superiority study. The first possibility is that there is truly no difference between the treatments but the study finds that they are different. This is called a Type-1 error or false-positive error or alpha error. This means falsely rejecting the null hypothesis.

The second possibility is that there is a difference between the treatments and the study does not pick up this difference. This is called a Type-2 error or false-negative error or beta error. This means failing to reject a null hypothesis that is actually false.

The power of the study is the ability to detect a difference between groups and is the converse of the beta error; i.e., power = 1-beta error. Alpha and beta errors are finalized when the protocol is written and form the basis for sample size calculation for the study. In an ideal world, we would not like any error in the results of our study; however, we would need to do the study in the entire population (infinite sample size) to be able to get a 0% alpha and beta error. These two errors enable us to do studies with realistic sample sizes, with the compromise that there is a small possibility that the results may not always reflect the truth. The basis for this will be discussed in a subsequent paper in this series dealing with sample size calculation.

Conventionally, type 1 or alpha error is set at 5%. This means that if there is truly no difference between groups, we accept only a 5% probability that the study will nevertheless report a difference (a false positive). Type 2 or beta error is usually set between 10% and 20%; therefore, the power of the study is 90% or 80%. This means that if there is a true difference between groups of the size assumed in the protocol, we want to be 80% (or 90%) certain that the study will detect that difference. For example, in the ABLE study, sample size was calculated with a type 1 error of 5% (two-sided) and power of 90% (type 2 error of 10%) (1).
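As a rough illustration of what alpha and power mean in practice, the sketch below simulates many small studies and counts how often a two-sided z-test at the 5% level rejects the null hypothesis. The sample size, effect size, standard deviation, and function names are assumptions chosen for illustration; they are not taken from the ABLE or PROPPR studies.

```python
import math
import random

def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))

def rejection_rate(true_mean, n=50, sigma=1.0, alpha=0.05, trials=2000, seed=0):
    """Fraction of simulated studies that reject H0: mean = 0."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        # Draw one study's sample and compute its mean.
        sample_mean = sum(rng.gauss(true_mean, sigma) for _ in range(n)) / n
        # z statistic under H0: mean = 0, with known sigma.
        z = sample_mean / (sigma / math.sqrt(n))
        if two_sided_p(z) < alpha:
            rejections += 1
    return rejections / trials

# H0 is true: every rejection is a type 1 error, so the rate should be near alpha.
type1_rate = rejection_rate(true_mean=0.0)
# H0 is false (assumed true mean of 0.5): the rejection rate estimates the power.
power = rejection_rate(true_mean=0.5)
print(f"type 1 error rate ~ {type1_rate:.3f}, power ~ {power:.3f}")
```

When the null hypothesis is true, the rejection rate should sit near the chosen alpha; when it is false, the rejection rate is the power, and one minus that rate is the type 2 error.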

Table 1 gives a summary of the two types of statistical errors with an example

Statistical errors

In the next article in this series, we will look at the meaning and interpretation of ‘ p ’ value and confidence intervals for hypothesis testing.

Source of support: Nil

Conflict of interest: None

What is Hypothesis Testing in Statistics? Types and Examples

Lesson 10 of 24 By Avijeet Biswal


In today’s data-driven world, decisions are based on data all the time. Hypothesis testing plays a crucial role in that process, whether in business decisions, the health sector, academia, or quality improvement. Without hypotheses and hypothesis tests, you risk drawing the wrong conclusions and making bad decisions. In this tutorial, you will look at hypothesis testing in statistics.

What Is Hypothesis Testing in Statistics?

Hypothesis testing is a type of statistical analysis in which you put your assumptions about a population parameter to the test. It is often used to assess the relationship between two statistical variables.

Let's discuss a few real-life examples of statistical hypotheses:

- A teacher assumes that 60% of his college's students come from lower-middle-class families.

- A doctor believes that 3D (Diet, Dose, and Discipline) is 90% effective for diabetic patients.

Now that you know about hypothesis testing, look at the two types of hypothesis testing in statistics.

Hypothesis Testing Formula

Z = ( x̅ – μ0 ) / (σ /√n)

- Here, x̅ is the sample mean,

- μ0 is the population mean stated in the null hypothesis,

- σ is the population standard deviation,

- n is the sample size.

How Hypothesis Testing Works?

An analyst performs hypothesis testing on a statistical sample to present evidence of the plausibility of the null hypothesis. Measurements and analyses are conducted on a random sample of the population to test a theory. Analysts use a random population sample to test two hypotheses: the null and alternative hypotheses.

The null hypothesis is typically an equality hypothesis between population parameters; for example, a null hypothesis may claim that the population mean return equals zero. The alternate hypothesis is essentially the inverse of the null hypothesis (e.g., the population mean return is not equal to zero). As a result, they are mutually exclusive: only one can be correct, and one of the two will always be correct.


Null Hypothesis and Alternate Hypothesis

The Null Hypothesis is the default assumption that there is no effect or no difference. It is retained unless the sample data provide enough evidence to reject it.

H0 is the symbol for it, and it is pronounced H-naught.

The Alternate Hypothesis is the logical opposite of the null hypothesis. The acceptance of the alternative hypothesis follows the rejection of the null hypothesis. H1 is the symbol for it.

Let's understand this with an example.

A sanitizer manufacturer claims that its product kills 95 percent of germs on average.

To put this company's claim to the test, create a null and alternate hypothesis.

H0 (Null Hypothesis): Average = 95%.

Alternative Hypothesis (H1): The average is less than 95%.

Another straightforward example to understand this concept is determining whether or not a coin is fair and balanced. The null hypothesis states that the probability of heads is equal to the probability of tails. In contrast, the alternate hypothesis states that the probabilities of heads and tails are different.


Hypothesis Testing Calculation With Examples

Let's consider a hypothesis test for the average height of women in the United States. Suppose our null hypothesis is that the average height is 5'4" (64 inches). We gather a sample of 100 women and determine that their average height is 5'5" (65 inches). The population standard deviation is 2 inches.

To calculate the z-score, we would use the following formula:

z = ( x̅ – μ0 ) / (σ /√n)

z = (65" - 64") / (2" / √100)

z = 1 / 0.2 = 5

We will reject the null hypothesis, as the z-score of 5 is very large, and conclude that there is evidence to suggest that the average height of women in the US is greater than 5'4".
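The calculation can be checked with a short sketch. It assumes the heights are converted to inches (5'4" = 64, 5'5" = 65), a population standard deviation of 2 inches, and a one-sided (upper-tail) test; the function names are illustrative and not from any particular library.

```python
import math

def z_statistic(sample_mean, mu0, sigma, n):
    """z = (sample mean - hypothesized mean) / (sigma / sqrt(n))."""
    return (sample_mean - mu0) / (sigma / math.sqrt(n))

def upper_tail_p(z):
    """P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))

z = z_statistic(sample_mean=65, mu0=64, sigma=2, n=100)
p = upper_tail_p(z)
print(f"z = {z:.2f}, one-sided p-value = {p:.2e}")
```

The standard error here is 2 / √100 = 0.2 inches, so a one-inch difference between the sample mean and the hypothesized mean corresponds to five standard errors, and the p-value is far below any common significance level.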

Steps of Hypothesis Testing

Step 1: specify your null and alternate hypotheses.

It is critical to rephrase your original research hypothesis (the prediction that you wish to study) as a null (Ho) and alternative (Ha) hypothesis so that you can test it quantitatively. Your first hypothesis, which predicts a link between variables, is generally your alternate hypothesis. The null hypothesis predicts no link between the variables of interest.

Step 2: Gather Data

For a statistical test to be legitimate, sampling and data collection must be done in a way that is meant to test your hypothesis. You cannot draw statistical conclusions about the population you are interested in if your data is not representative.

Step 3: Conduct a Statistical Test

Many statistical tests are available, but most of them compare within-group variance (how spread out the data are inside a category) against between-group variance (how different the categories are from one another). If the between-group variance is big enough that there is little or no overlap between groups, your statistical test will show a low p-value. This suggests that the differences between these groups are unlikely to have occurred by chance. Alternatively, if there is a large within-group variance and a low between-group variance, your statistical test will show a high p-value, and any difference you find across groups is most likely attributable to chance. The number of variables and the level of measurement of your data will influence your choice of statistical test.

Step 4: Determine Rejection Of Your Null Hypothesis

Based on the results of your statistical test, you decide whether to reject your null hypothesis. In most circumstances, you will base this decision on the p-value provided by the test. The preset level of significance for rejecting the null hypothesis is usually 0.05; that is, you reject when there is less than a 5% probability of seeing data at least this extreme if the null hypothesis were true. In other circumstances, researchers use a lower level of significance, such as 0.01 (1%). This reduces the possibility of wrongly rejecting the null hypothesis.

Step 5: Present Your Results

The findings of hypothesis testing are presented in the results and discussion sections of your research paper, dissertation, or thesis. In the results section, include a concise overview of the data and a summary of the findings of your statistical test. In the discussion section, you can talk about whether your results supported your initial hypothesis. "Rejecting" or "failing to reject" the null hypothesis is the formal wording used in hypothesis testing, and it is usually expected in statistics assignments.

Types of Hypothesis Testing

Z Test

A z-test is used to determine whether a finding or relationship is statistically significant. It usually checks whether two means are the same (the null hypothesis). A z-test is generally appropriate only when the population standard deviation is known and the sample size is 30 data points or more.

T Test

A t-test is a statistical test used to compare the means of two groups. It is frequently used in hypothesis testing to determine whether two groups differ or whether a procedure or treatment affects the population of interest.
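As a minimal sketch of a two-group comparison, the code below computes Welch's t statistic (a common t-test variant that does not assume equal variances) and its approximate degrees of freedom. The two samples are made-up values for illustration, and `welch_t` is a hypothetical helper, not a library function.

```python
import math
import statistics

def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two independent samples."""
    # Squared standard error contributed by each group.
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

treated = [5.1, 4.9, 5.6, 5.8, 5.4, 5.2]  # hypothetical measurements
control = [4.6, 4.8, 4.5, 5.0, 4.7, 4.4]  # hypothetical measurements
t, df = welch_t(treated, control)
print(f"t = {t:.2f}, df = {df:.1f}")
```

The statistic would then be compared against a t distribution with `df` degrees of freedom to obtain a p-value; that step is left out here because the Python standard library does not provide the t distribution.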

Chi-Square

You utilize a Chi-square test for hypothesis testing concerning whether your data is as predicted. To determine if the expected and observed results are well-fitted, the Chi-square test analyzes the differences between categorical variables from a random sample. The test's fundamental premise is that the observed values in your data should be compared to the predicted values that would be present if the null hypothesis were true.
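The comparison of observed and expected counts can be sketched as a goodness-of-fit calculation. The die-roll counts below are invented for illustration, and the critical value 11.070 is the standard chi-square table value for 5 degrees of freedom at the 0.05 level.

```python
def chi_square_statistic(observed, expected):
    """Sum of (observed - expected)^2 / expected over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

observed = [18, 22, 16, 25, 19, 20]  # hypothetical counts from 120 die rolls
expected = [20] * 6                  # a fair die: 120 / 6 rolls per face

chi2 = chi_square_statistic(observed, expected)
critical_value = 11.070              # df = 6 - 1 = 5, alpha = 0.05
reject_h0 = chi2 > critical_value
print(f"chi-square = {chi2:.2f}, reject H0: {reject_h0}")
```

Here the statistic is well below the critical value, so these counts would not give evidence against the null hypothesis that the die is fair.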

Hypothesis Testing and Confidence Intervals

Both confidence intervals and hypothesis tests are inferential techniques that depend on approximating the sampling distribution. Confidence intervals use data from a sample to estimate a population parameter. Hypothesis tests use data from a sample to examine a given hypothesis, so we must have a hypothesized parameter value to conduct one.

Bootstrap distributions and randomization distributions are created using comparable simulation techniques. The observed sample statistic is the focal point of a bootstrap distribution, whereas the null hypothesis value is the focal point of a randomization distribution.

A confidence interval contains a range of plausible estimates of the population parameter. In this lesson, we created just two-tailed confidence intervals. There is a direct connection between two-tailed confidence intervals and two-tailed hypothesis tests: they typically lead to the same conclusion. In other words, a hypothesis test at the 0.05 level will virtually always fail to reject the null hypothesis if the 95% confidence interval contains the hypothesized value, and will virtually always reject the null hypothesis if the 95% confidence interval does not contain it.
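The link between a 95% confidence interval and a two-tailed test at the 0.05 level can be seen in a small sketch. It assumes a z-based interval with a known population standard deviation; the sample values and the helper `ci_and_test` are illustrative assumptions.

```python
import math

Z_CRIT_95 = 1.959964  # standard normal critical value for a two-sided 5% test

def ci_and_test(sample_mean, mu0, sigma, n):
    """Return the 95% CI, the two-tailed test decision, and whether the CI excludes mu0."""
    se = sigma / math.sqrt(n)
    ci = (sample_mean - Z_CRIT_95 * se, sample_mean + Z_CRIT_95 * se)
    z = (sample_mean - mu0) / se
    reject = abs(z) > Z_CRIT_95
    ci_excludes_mu0 = not (ci[0] <= mu0 <= ci[1])
    return ci, reject, ci_excludes_mu0

results = []
for mu0 in (64.0, 64.7):  # two hypothesized means to compare
    ci, reject, excluded = ci_and_test(sample_mean=65.0, mu0=mu0, sigma=2.0, n=100)
    results.append((mu0, reject, excluded))
    print(f"mu0 = {mu0}: CI = ({ci[0]:.3f}, {ci[1]:.3f}), reject = {reject}, outside CI = {excluded}")
```

For both hypothesized values the test decision matches whether the value lies outside the interval, because the interval and the test use the same standard error and the same critical value.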

Simple and Composite Hypothesis Testing

Depending on the population distribution, you can classify the statistical hypothesis into two types.

Simple Hypothesis: A simple hypothesis specifies an exact value for the parameter.

Composite Hypothesis: A composite hypothesis specifies a range of values.

A company is claiming that their average sales for this quarter are 1000 units. This is an example of a simple hypothesis.

Suppose the company claims that the sales are in the range of 900 to 1000 units. Then this is a case of a composite hypothesis.

One-Tailed and Two-Tailed Hypothesis Testing

The one-tailed test, also called a directional test, places the critical region on one side of the sampling distribution. If the test statistic falls into this region, the null hypothesis is rejected in favor of the alternate hypothesis.

In a one-tailed test, the critical region is one-sided: it checks whether the test statistic is either greater than or less than a specific value, but not both.

In a two-tailed test, the critical region is two-sided: it checks whether the test statistic is greater than or less than a range of values.

If the test statistic falls outside this range, the null hypothesis is rejected and the alternate hypothesis is accepted.


Right Tailed Hypothesis Testing

If the greater than (>) sign appears in your alternate hypothesis statement, you are using a right-tailed test, also known as an upper-tailed test. In other words, the critical region is in the right tail of the distribution. For instance, you can compare battery life before and after a change in production. If you want to know whether the battery life is longer than the original (let's say 90 hours), your hypothesis statements can be the following:

- The null hypothesis is that battery life has not increased (H0: mean <= 90).

- The alternate hypothesis is that battery life has risen (H1: mean > 90).

The crucial point in this situation is that the alternate hypothesis (H1), not the null hypothesis, decides whether you get a right-tailed test.

Left Tailed Hypothesis Testing

Alternative hypotheses that assert the true value of a parameter is lower than the value in the null hypothesis are tested with a left-tailed test; they are indicated by the less-than sign "<".

Suppose H0: mean = 50 and H1: mean not equal to 50

According to the H1, the mean can be greater than or less than 50. This is an example of a Two-tailed test.

In a similar manner, if H0: mean >=50, then H1: mean <50

Here the alternate hypothesis states that the mean is less than 50. This is a one-tailed (left-tailed) test.
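The difference between left-tailed, right-tailed, and two-tailed tests shows up in how the p-value is computed from the same test statistic. The sketch below assumes a z statistic that follows a standard normal distribution under the null hypothesis; `p_value` and its `tail` argument are hypothetical names used only for this illustration.

```python
import math

def normal_cdf(z):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * math.erfc(-z / math.sqrt(2))

def p_value(z, tail):
    """p-value for a z statistic; tail is 'left', 'right', or 'two'."""
    if tail == "left":    # H1: parameter < hypothesized value
        return normal_cdf(z)
    if tail == "right":   # H1: parameter > hypothesized value
        return 1.0 - normal_cdf(z)
    if tail == "two":     # H1: parameter != hypothesized value
        return 2.0 * (1.0 - normal_cdf(abs(z)))
    raise ValueError("tail must be 'left', 'right', or 'two'")

z = -1.8  # an assumed test statistic
p_left, p_right, p_two = (p_value(z, t) for t in ("left", "right", "two"))
print(f"left = {p_left:.4f}, right = {p_right:.4f}, two-tailed = {p_two:.4f}")
```

With this statistic, a left-tailed test at the 0.05 level would reject the null hypothesis, while a two-tailed test would not, since the two-tailed p-value is twice the smaller one-tailed value.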

Type 1 and Type 2 Error

A hypothesis test can result in two types of errors.

Type 1 Error: A Type-I error occurs when the sample results lead you to reject the null hypothesis even though it is true.

Type 2 Error: A Type-II error occurs when the null hypothesis is not rejected even though it is false.

Suppose a teacher evaluates the examination paper to decide whether a student passes or fails.

H0: Student has passed

H1: Student has failed

Type I error will be the teacher failing the student [rejects H0] although the student scored the passing marks [H0 was true].

Type II error will be the case where the teacher passes the student [do not reject H0] although the student did not score the passing marks [H1 is true].

Level of Significance

The alpha value is a criterion for determining whether a test statistic is statistically significant. In a statistical test, Alpha represents an acceptable probability of a Type I error. Because alpha is a probability, it can be anywhere between 0 and 1. In practice, the most commonly used alpha values are 0.01, 0.05, and 0.1, which represent a 1%, 5%, and 10% chance of a Type I error, respectively (i.e. rejecting the null hypothesis when it is in fact correct).


A p-value is the probability of observing a difference at least as extreme as the one in your sample if the null hypothesis were true. As the p-value decreases, the statistical significance of the observed difference increases. If the p-value is below your chosen significance level, you reject the null hypothesis.

Consider an example in which you are trying to test whether a new advertising campaign has increased a product's sales. The null hypothesis states that there is no change in sales due to the new advertising campaign. The p-value is the probability of seeing a change in sales at least as large as the one observed if that null hypothesis were true; it is not the probability that the null hypothesis itself is true. If the p-value is 0.30, a change this large would occur about 30% of the time even if the campaign had no effect. If the p-value is 0.03, a change this large would occur only about 3% of the time if the campaign had no effect. As you can see, the lower the p-value, the stronger the evidence against the null hypothesis and in favor of the alternate hypothesis that the new advertising campaign changes sales.

Why is Hypothesis Testing Important in Research Methodology?

Hypothesis testing is crucial in research methodology for several reasons:

- Provides evidence-based conclusions: It allows researchers to make objective conclusions based on empirical data, providing evidence to support or refute their research hypotheses.

- Supports decision-making: It helps make informed decisions, such as accepting or rejecting a new treatment, implementing policy changes, or adopting new practices.

- Adds rigor and validity: It adds scientific rigor to research using statistical methods to analyze data, ensuring that conclusions are based on sound statistical evidence.

- Contributes to the advancement of knowledge: By testing hypotheses, researchers contribute to the growth of knowledge in their respective fields by confirming existing theories or discovering new patterns and relationships.

Limitations of Hypothesis Testing

Hypothesis testing has some limitations that researchers should be aware of:

- It cannot prove or establish the truth: Hypothesis testing provides evidence to support or reject a hypothesis, but it cannot confirm the absolute truth of the research question.

- Results are sample-specific: Hypothesis testing is based on analyzing a sample from a population, and the conclusions drawn are specific to that particular sample.

- Possible errors: During hypothesis testing, there is a chance of committing type I error (rejecting a true null hypothesis) or type II error (failing to reject a false null hypothesis).

- Assumptions and requirements: Different tests have specific assumptions and requirements that must be met to accurately interpret results.

After reading this tutorial, you should have a much better understanding of hypothesis testing, one of the most important concepts in the field of data science. The majority of hypotheses are based on speculation about observed behavior, natural phenomena, or established theories.


1. What is hypothesis testing in statistics with example?

Hypothesis testing is a statistical method used to determine if there is enough evidence in a sample data to draw conclusions about a population. It involves formulating two competing hypotheses, the null hypothesis (H0) and the alternative hypothesis (Ha), and then collecting data to assess the evidence. An example: testing if a new drug improves patient recovery (Ha) compared to the standard treatment (H0) based on collected patient data.

2. What is hypothesis testing and its types?

Hypothesis testing is a statistical method used to make inferences about a population based on sample data. It involves formulating two hypotheses: the null hypothesis (H0), which represents the default assumption, and the alternative hypothesis (Ha), which contradicts H0. The goal is to assess the evidence and determine whether there is enough statistical significance to reject the null hypothesis in favor of the alternative hypothesis.

Types of hypothesis testing:

- One-sample test: Used to compare a sample to a known value or a hypothesized value.

- Two-sample test: Compares two independent samples to assess if there is a significant difference between their means or distributions.

- Paired-sample test: Compares two related samples, such as pre-test and post-test data, to evaluate changes within the same subjects over time or under different conditions.

- Chi-square test: Used to analyze categorical data and determine if there is a significant association between variables.

- ANOVA (Analysis of Variance): Compares means across multiple groups to check if there is a significant difference between them.

3. What are the steps of hypothesis testing?

The steps of hypothesis testing are as follows:

- Formulate the hypotheses: State the null hypothesis (H0) and the alternative hypothesis (Ha) based on the research question.

- Set the significance level: Determine the acceptable level of error (alpha) for making a decision.

- Collect and analyze data: Gather and process the sample data.

- Compute the test statistic: Calculate the appropriate test statistic to assess the evidence.

- Make a decision: Compare the test statistic with the critical value, or the p-value with the significance level, and determine whether to reject H0 in favor of Ha or not.

- Draw conclusions: Interpret the results and communicate the findings in the context of the research question.

4. What are the 2 types of hypothesis testing?

- One-tailed (or one-sided) test: Tests for the significance of an effect in only one direction, either positive or negative.

- Two-tailed (or two-sided) test: Tests for the significance of an effect in both directions, allowing for the possibility of a positive or negative effect.

The choice between one-tailed and two-tailed tests depends on the specific research question and the directionality of the expected effect.

5. What are the 3 major types of hypothesis?

The three major types of hypotheses are:

- Null Hypothesis (H0): Represents the default assumption, stating that there is no significant effect or relationship in the data.

- Alternative Hypothesis (Ha): Contradicts the null hypothesis and proposes a specific effect or relationship that researchers want to investigate.

- Nondirectional Hypothesis: An alternative hypothesis that doesn't specify the direction of the effect, leaving it open for both positive and negative possibilities.


About the author.

Avijeet is a Senior Research Analyst at Simplilearn. Passionate about Data Analytics, Machine Learning, and Deep Learning, Avijeet is also interested in politics, cricket, and football.


Choosing the Right Statistical Test | Types & Examples

Published on January 28, 2020 by Rebecca Bevans. Revised on June 22, 2023.

Statistical tests are used in hypothesis testing. They can be used to:

- determine whether a predictor variable has a statistically significant relationship with an outcome variable.

- estimate the difference between two or more groups.

Statistical tests assume a null hypothesis of no relationship or no difference between groups. Then they determine whether the observed data fall outside of the range of values predicted by the null hypothesis.

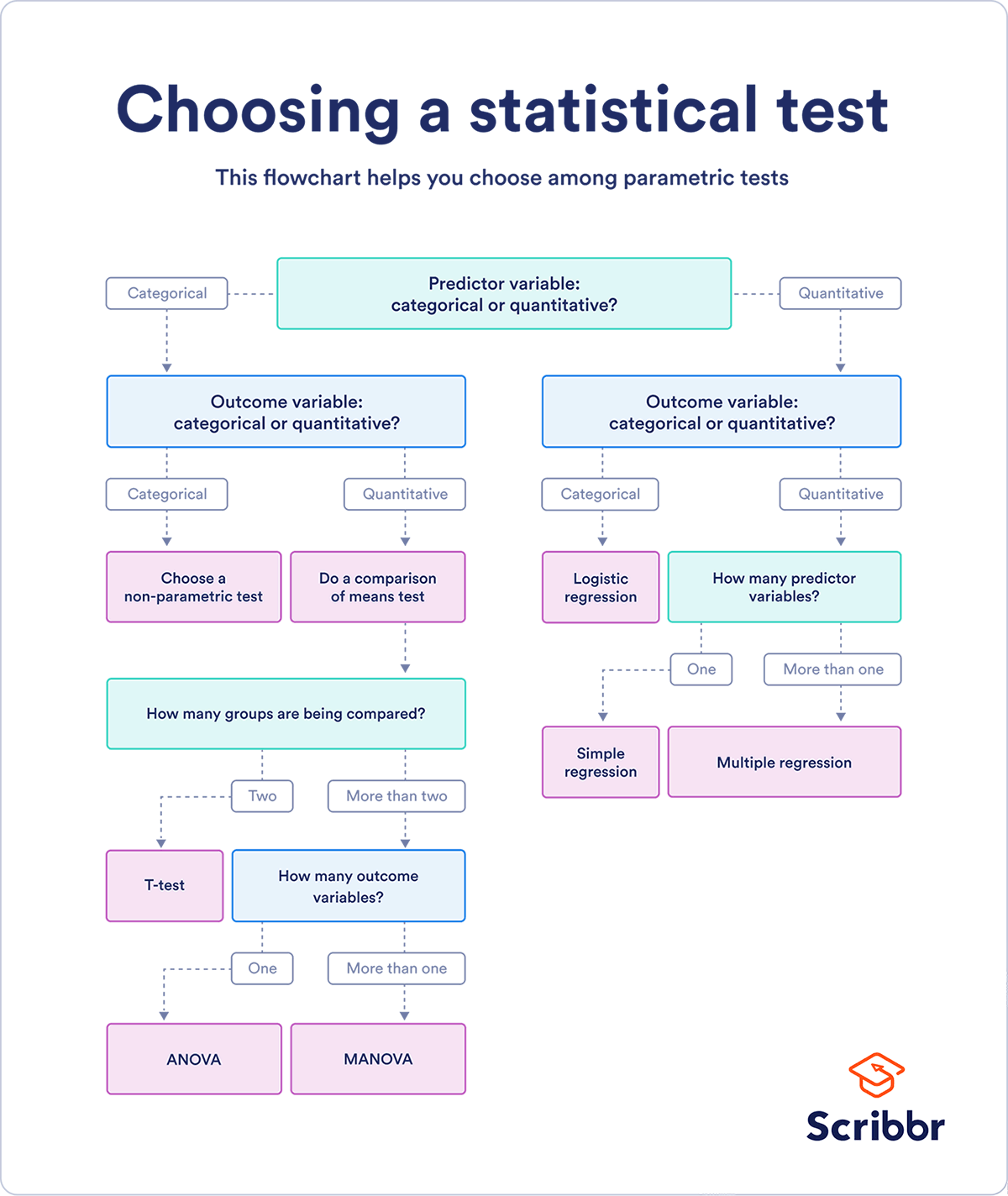

If you already know what types of variables you’re dealing with, you can use the flowchart to choose the right statistical test for your data.

Statistical tests flowchart

Statistical tests work by calculating a test statistic – a number that describes how much the relationship between variables in your test differs from the null hypothesis of no relationship.

The test then calculates a p-value (probability value). The p-value estimates how likely it is that you would see a difference at least as large as the one described by the test statistic if the null hypothesis of no relationship were true.

If the value of the test statistic is more extreme than the critical value expected under the null hypothesis at your chosen significance level, then you can infer a statistically significant relationship between the predictor and outcome variables.

If the value of the test statistic is less extreme than that critical value, then you cannot infer a statistically significant relationship between the predictor and outcome variables.
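As a rough illustration of how a test statistic and its p-value relate, the sketch below runs a one-sample z-test using only the Python standard library. The function name `one_sample_z_test`, the sample values, and the assumption that the population standard deviation is known (3.0) are all invented for this example and do not come from the article.

```python
from math import sqrt
from statistics import NormalDist, mean


def one_sample_z_test(sample, null_mean, known_sd):
    """Return (z, two-sided p-value) for H0: population mean == null_mean.

    Assumes the population standard deviation is known, which is rarely
    true in practice; it keeps the example dependency-free.
    """
    n = len(sample)
    standard_error = known_sd / sqrt(n)
    z = (mean(sample) - null_mean) / standard_error
    # Probability of a statistic at least this extreme, in either tail, under H0.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical measurements, invented for illustration only.
heights = [71.2, 69.8, 72.5, 70.9, 68.7, 73.1, 70.4, 71.8]
z, p = one_sample_z_test(heights, null_mean=70.0, known_sd=3.0)
print(f"z = {z:.3f}, p = {p:.3f}")
```

A larger absolute z gives a smaller p-value, which is the sense in which a "more extreme" test statistic counts as stronger evidence against the null hypothesis.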

You can perform statistical tests on data that have been collected in a statistically valid manner, either through an experiment or through observations made using probability sampling methods.

For a statistical test to be valid, your sample size needs to be large enough to approximate the true distribution of the population being studied.

To determine which statistical test to use, you need to know:

- whether your data meets certain assumptions.

- the types of variables that you’re dealing with.

Statistical assumptions

Statistical tests make some common assumptions about the data they are testing:

- Independence of observations (a.k.a. no autocorrelation): The observations/variables you include in your test are not related (for example, multiple measurements of a single test subject are not independent, while measurements of multiple different test subjects are independent).

- Homogeneity of variance: the variance within each group being compared is similar among all groups. If one group has much more variation than others, it will limit the test’s effectiveness.

- Normality of data: the data follows a normal distribution (a.k.a. a bell curve). This assumption applies only to quantitative data.

If your data do not meet the assumptions of normality or homogeneity of variance, you may be able to perform a nonparametric statistical test, which allows you to make comparisons with fewer assumptions about the data distribution.

If your data do not meet the assumption of independence of observations, you may be able to use a test that accounts for structure in your data (repeated-measures tests or tests that include blocking variables).

Types of variables

The types of variables you have usually determine what type of statistical test you can use.

Quantitative variables represent amounts of things (e.g. the number of trees in a forest). Types of quantitative variables include:

- Continuous (aka ratio variables): represent measures and can usually be divided into units smaller than one (e.g. 0.75 grams).

- Discrete (aka integer variables): represent counts and usually can’t be divided into units smaller than one (e.g. 1 tree).

Categorical variables represent groupings of things (e.g. the different tree species in a forest). Types of categorical variables include:

- Ordinal: represent data with an order (e.g. rankings).

- Nominal: represent group names (e.g. brands or species names).

- Binary: represent data with a yes/no or 1/0 outcome (e.g. win or lose).

Choose the test that fits the types of predictor and outcome variables you have collected (if you are doing an experiment, these are the independent and dependent variables). Consult the tables below to see which test best matches your variables.

Parametric tests usually have stricter requirements than nonparametric tests, and are able to make stronger inferences from the data. They can only be conducted with data that adheres to the common assumptions of statistical tests.

The most common types of parametric test include regression tests, comparison tests, and correlation tests.

Regression tests

Regression tests look for cause-and-effect relationships. They can be used to estimate the effect of one or more continuous variables on another variable.

Comparison tests

Comparison tests look for differences among group means. They can be used to test the effect of a categorical variable on the mean value of some other characteristic.

T-tests are used when comparing the means of exactly two groups (e.g., the average heights of men and women). ANOVA and MANOVA tests are used when comparing the means of more than two groups (e.g., the average heights of children, teenagers, and adults).
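To make the two-group comparison concrete, here is a minimal sketch of the test statistic for Welch's t-test, a t-test variant that does not require equal variances in the two groups. The choice of Welch's variant, the function name `welch_t`, and the height values are assumptions made for illustration; the article does not say which t-test variant it has in mind.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Return (t, degrees of freedom) for Welch's two-sample t-test."""
    na, nb = len(group_a), len(group_b)
    # Squared standard error contributed by each group (sample variance / n).
    se_sq_a = variance(group_a) / na
    se_sq_b = variance(group_b) / nb
    t = (mean(group_a) - mean(group_b)) / sqrt(se_sq_a + se_sq_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se_sq_a + se_sq_b) ** 2 / (
        se_sq_a ** 2 / (na - 1) + se_sq_b ** 2 / (nb - 1)
    )
    return t, df


# Hypothetical heights in cm, invented for illustration only.
men = [178, 182, 175, 180, 185]
women = [165, 170, 162, 168, 160]
t, df = welch_t(men, women)
print(f"t = {t:.3f}, df = {df:.2f}")
```

Turning `t` and `df` into a p-value requires the t-distribution, which the standard library does not provide; a statistics package would normally handle that step.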

Correlation tests

Correlation tests check whether variables are related without hypothesizing a cause-and-effect relationship.

These can be used to test whether two variables you want to use in (for example) a multiple regression test are correlated with each other.
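One common correlation measure is Pearson's r. The sketch below computes it by hand, together with the t statistic usually used to test whether r differs from zero. The function names and the data are hypothetical, and Pearson's r is only one of several correlation tests the passage could refer to.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Return Pearson's correlation coefficient for two equal-length lists."""
    mx, my = mean(x), mean(y)
    cross = sum((a - mx) * (b - my) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mx) ** 2 for a in x))
    spread_y = sqrt(sum((b - my) ** 2 for b in y))
    return cross / (spread_x * spread_y)


def correlation_t_statistic(r, n):
    """Return the t statistic (with n - 2 degrees of freedom) for H0: r == 0."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)


# Hypothetical paired observations, invented for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
t_stat = correlation_t_statistic(r, len(x))
print(f"r = {r:.4f}, t = {t_stat:.4f}")
```

A strong correlation between two candidate predictors suggests they carry overlapping information, which is worth knowing before putting both into a regression model.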

Non-parametric tests don’t make as many assumptions about the data, and are useful when one or more of the common statistical assumptions are violated. However, the inferences they make aren’t as strong as with parametric tests.

This flowchart helps you choose among parametric tests. For nonparametric alternatives, check the table above.

Statistical tests commonly assume that:

- the data are normally distributed

- the groups that are being compared have similar variance

- the data are independent

If your data do not meet these assumptions, you might still be able to use a nonparametric statistical test, which has fewer requirements but also makes weaker inferences.

A test statistic is a number calculated by a statistical test. It describes how far your observed data is from the null hypothesis of no relationship between variables or no difference among sample groups.

The test statistic tells you how different two or more groups are from the overall population mean, or how different a linear slope is from the slope predicted by a null hypothesis. Different test statistics are used in different statistical tests.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary: it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that data at least as extreme as those observed would be expected to occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, we say the result of the test is statistically significant.
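The decision rule can be written as a single comparison. The helper below is a hypothetical sketch (the name `is_significant` and the p-value of 0.03 are invented) that shows how the same result can be significant at one threshold and not at another.

```python
def is_significant(p_value, alpha=0.05):
    """Return True when the p-value falls below the chosen alpha threshold."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be strictly between 0 and 1")
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    return p_value < alpha


p_value = 0.03  # hypothetical p-value, invented for illustration
for alpha in (0.10, 0.05, 0.01):
    verdict = "significant" if is_significant(p_value, alpha) else "not significant"
    print(f"alpha = {alpha:.2f}: {verdict}")
```

Because the verdict depends on the threshold, alpha should be chosen before looking at the data.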

Quantitative variables are any variables where the data represent amounts (e.g. height, weight, or age).

Categorical variables are any variables where the data represent groups. This includes rankings (e.g. finishing places in a race), classifications (e.g. brands of cereal), and binary outcomes (e.g. coin flips).

You need to know what type of variables you are working with to choose the right statistical test for your data and interpret your results.

Discrete and continuous variables are two types of quantitative variables:

- Discrete variables represent counts (e.g. the number of objects in a collection).

- Continuous variables represent measurable amounts (e.g. water volume or weight).

Cite this Scribbr article

Bevans, R. (2023, June 22). Choosing the Right Statistical Test | Types & Examples. Scribbr. Retrieved April 8, 2024, from https://www.scribbr.com/statistics/statistical-tests/

Statistics - Hypothesis Testing

Hypothesis testing is a formal way of checking if a hypothesis about a population is true or not.

Hypothesis Testing

A hypothesis is a claim about a population parameter.

A hypothesis test is a formal procedure to check if a hypothesis is true or not.

Examples of claims that can be checked:

The average height of people in Denmark is more than 170 cm.

The share of left handed people in Australia is not 10%.

The average income of dentists is less than the average income of lawyers.

The Null and Alternative Hypothesis

Hypothesis testing is based on making two different claims about a population parameter.

The null hypothesis (\(H_{0} \)) and the alternative hypothesis (\(H_{1}\)) are the claims.

The two claims need to be mutually exclusive, meaning only one of them can be true.

The alternative hypothesis is typically what we are trying to prove.

For example, we want to check the following claim:

"The average height of people in Denmark is more than 170 cm."

In this case, the parameter is the average height of people in Denmark (\(\mu\)).

The null and alternative hypotheses would be:

Null hypothesis: The average height of people in Denmark is 170 cm.

Alternative hypothesis: The average height of people in Denmark is more than 170 cm.

The claims are often expressed with symbols like this:

\(H_{0}\): \(\mu = 170 \: cm \)

\(H_{1}\): \(\mu > 170 \: cm \)

If the data supports the alternative hypothesis, we reject the null hypothesis and accept the alternative hypothesis.

If the data does not support the alternative hypothesis, we keep the null hypothesis.

Note: The alternative hypothesis is also referred to as (\(H_{A} \)).
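As a sketch of how the Denmark claim could be checked against data, the code below computes a one-sample t statistic for \(H_{0}\): \(\mu = 170\) against \(H_{1}\): \(\mu > 170\). The sample of heights and the function name `one_sample_t` are invented for illustration and are not real measurements.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(sample, null_mean):
    """Return (t, degrees of freedom) for H0: population mean == null_mean."""
    n = len(sample)
    # Standard error of the mean, estimated from the sample itself.
    standard_error = stdev(sample) / sqrt(n)
    t = (mean(sample) - null_mean) / standard_error
    return t, n - 1


# Hypothetical heights in cm, invented for illustration only.
heights_cm = [172, 168, 175, 171, 169, 174, 173, 170]
t, df = one_sample_t(heights_cm, null_mean=170)
print(f"t = {t:.4f} with {df} degrees of freedom")
```

Since the alternative hypothesis is one-sided (more than 170 cm), only a large positive t would count as evidence against the null hypothesis.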

The Significance Level

The significance level (\(\alpha\)) is the uncertainty we accept when rejecting the null hypothesis in the hypothesis test.

The significance level is the probability, usually stated as a percentage, of rejecting the null hypothesis when it is actually true.

Typical significance levels are:

- \(\alpha = 0.1\) (10%)

- \(\alpha = 0.05\) (5%)

- \(\alpha = 0.01\) (1%)

A lower significance level means that the evidence in the data needs to be stronger to reject the null hypothesis.

There is no "correct" significance level - it only states the uncertainty of the conclusion.

Note: A 5% significance level means that when the null hypothesis is true:

We expect to wrongly reject it in about 5 out of 100 tests.
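A small simulation can make this interpretation tangible. The sketch below repeatedly draws samples from a population where the null hypothesis is true by construction, runs a two-sided z-test on each, and counts how often the null hypothesis is rejected. The function name, the sample size, the number of trials, and the seed are arbitrary choices made for this illustration.

```python
import random
from math import sqrt
from statistics import NormalDist, mean


def type_one_error_rate(alpha=0.05, trials=2000, n=30, seed=0):
    """Estimate how often a true null hypothesis (mean == 0) gets rejected."""
    rng = random.Random(seed)
    # Two-sided critical value of the standard normal distribution.
    critical = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        # Every sample comes from a population whose true mean is 0, sd is 1.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = mean(sample) / (1.0 / sqrt(n))
        if abs(z) > critical:
            rejections += 1
    return rejections / trials


rate = type_one_error_rate()
print(f"Share of true null hypotheses rejected: {rate:.3f}")
```

The estimated share should land close to the chosen \(\alpha\), though it will vary somewhat with the seed and the number of trials.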

The Test Statistic

The test statistic is used to decide the outcome of the hypothesis test.

The test statistic is a standardized value calculated from the sample.

Standardization means converting a statistic to a scale that follows a well-known probability distribution.

The type of probability distribution depends on the type of test.

Common examples are:

- Standard Normal Distribution (Z): used for Testing Population Proportions

- Student's T-Distribution (T): used for Testing Population Means

Note: You will learn how to calculate the test statistic for each type of test in the following chapters.
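As a preview of the proportion case, the sketch below standardizes a sample proportion into a z statistic, using the earlier claim about left-handed people in Australia (\(H_{0}\): \(p = 0.10\)). The counts (62 left-handed people out of 500) and the function name `proportion_z_test` are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def proportion_z_test(successes, n, null_proportion):
    """Return (z, two-sided p-value) for H0: population proportion == null_proportion."""
    p_hat = successes / n
    # Standard error of the sample proportion, computed under H0.
    standard_error = sqrt(null_proportion * (1 - null_proportion) / n)
    z = (p_hat - null_proportion) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical survey result, invented for illustration only.
z, p = proportion_z_test(successes=62, n=500, null_proportion=0.10)
print(f"z = {z:.4f}, p = {p:.4f}")
```

The two-sided p-value matches the "is not 10%" form of the claim, where deviations in either direction count against the null hypothesis.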

The Critical Value and P-Value Approach

There are two main approaches used for hypothesis tests:

- The critical value approach compares the test statistic with the critical value of the significance level.

- The p-value approach compares the p-value of the test statistic with the significance level.

The Critical Value Approach

The critical value approach checks if the test statistic is in the rejection region.

The rejection region is an area of probability in the tails of the distribution.

The size of the rejection region is decided by the significance level (\(\alpha\)).

The value that separates the rejection region from the rest is called the critical value .

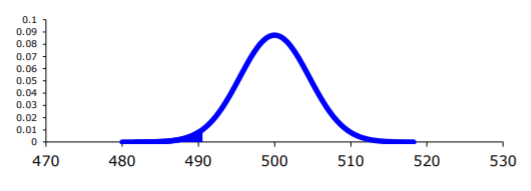

Here is a graphical illustration:

If the test statistic is inside this rejection region, the null hypothesis is rejected .
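The critical value approach can be sketched in a few lines for a right-tailed test. This example assumes the test statistic follows the standard normal distribution (a test based on the t-distribution would need a different quantile function), and the function names and example statistics are hypothetical.

```python
from statistics import NormalDist


def right_tailed_critical_value(alpha):
    """Return the z value that cuts off an upper tail of probability alpha."""
    return NormalDist().inv_cdf(1 - alpha)


def in_rejection_region(test_statistic, alpha):
    """Return True when the statistic lies beyond the critical value."""
    return test_statistic > right_tailed_critical_value(alpha)


alpha = 0.05
critical = right_tailed_critical_value(alpha)
print(f"Critical value at alpha = {alpha}: {critical:.3f}")

# Hypothetical test statistics, invented for illustration only.
for statistic in (1.20, 2.10):
    decision = "reject H0" if in_rejection_region(statistic, alpha) else "keep H0"
    print(f"test statistic {statistic:.2f}: {decision}")
```

A smaller \(\alpha\) moves the critical value further into the tail and shrinks the rejection region, so stronger evidence is needed to reject the null hypothesis.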