
Chapter 14: Drug Evaluation Monographs

Patrick M. Malone; Mark A. Malesker; Indrani Kar; Jason E. Glowczewski; Devin J. Fox


LEARNING OBJECTIVES

- Describe and perform an evaluation of a drug product for a drug formulary.

- List the sections included in a drug evaluation monograph.

- Describe the overall highlights included in a monograph summary.

- Describe the recommendations and restrictions that are made in a monograph.

- Describe the purpose and format of a drug class review.

- Describe and perform an evaluation of a therapeutic interchange.

KEY CONCEPTS

1. The establishment and maintenance of a drug formulary requires that drugs or drug classes be objectively assessed based on scientific information (e.g., efficacy, safety, uniqueness, cost), not anecdotal prescriber experience.

2. The drug evaluation monograph provides a structured method to review the major features of a drug product.

3. A definite recommendation must be made based on need, therapeutics, side effects, cost, and other items specific to the particular agent (e.g., evidence-based treatment guidelines, dosage forms, convenience, dosage interval, inclusion on the formulary of third-party payers, hospital antibiotic resistance patterns, potential for causing medication errors), usually in that order.

4. The recommendation must be supported by objective evidence.

5. The most logical decision to benefit the patient and the institution should be recommended to the pharmacy and therapeutics (P&T) committee.

6. Cost is heavily emphasized in formulary decisions and must be properly accounted for given the complexities of drug acquisition pricing and reimbursement.

7. Preparation of a drug evaluation monograph requires a great amount of time and effort, using many of the skills discussed throughout this text to obtain, evaluate, collate, and provide information. However, having all of the issues evaluated and discussed can be invaluable in providing quality care.

INTRODUCTION

The establishment and maintenance of a drug formulary requires that drugs or drug classes be objectively assessed based on scientific information (e.g., efficacy, safety, 1 uniqueness, cost), not anecdotal prescriber experience. A rational evaluation of all aspects of a drug in relation to similar agents provides the most effective and evidence-based method for deciding which drug is appropriate for formulary addition. In particular, it is necessary to consider need, effectiveness, risk, and cost (overall, including monitoring costs, discounts, rebates, and so forth), often in that order. Some other issues that are evaluated include dosage forms, packaging, requirements of accrediting or quality assurance bodies, evidence-based treatment guidelines, prescriber preferences, regulatory issues, patient/nursing convenience, advertising, possible discrimination issues, 2 and consumer expectations. 3 There is increasing emphasis on evaluating clinical outcomes from high-quality trials, continuous quality assurance information, comparative efficacies, 4 pharmacogenomics, and quality of life. 5

PURPOSE OF DRUG EVALUATION MONOGRAPH



Am J Pharm Educ. 2010 Nov 10; 74(9).

A Standardized Rubric to Evaluate Student Presentations

Michael J. Peeters

a University of Toledo College of Pharmacy

Eric G. Sahloff

Gregory E. Stone

b University of Toledo College of Education

To design, implement, and assess a rubric to evaluate student presentations in a capstone doctor of pharmacy (PharmD) course.

A 20-item rubric was designed and used to evaluate student presentations in a capstone fourth-year course in 2007-2008, and then revised and expanded to 25 items and used to evaluate student presentations for the same course in 2008-2009. Two faculty members evaluated each presentation.

The Many-Facets Rasch Model (MFRM) was used to determine the rubric's reliability, quantify the contribution of evaluator harshness/leniency in scoring, and assess grading validity by comparing the current grading method with a criterion-referenced grading scheme. In 2007-2008, rubric reliability was 0.98, with a separation of 7.1 and 4 rating scale categories. In 2008-2009, MFRM analysis suggested 2 of 98 grades be adjusted to eliminate evaluator leniency, while a further criterion-referenced MFRM analysis suggested 10 of 98 grades should be adjusted.

The evaluation rubric was reliable and evaluator leniency appeared minimal. However, a criterion-referenced re-analysis suggested a need for further revisions to the rubric and evaluation process.

INTRODUCTION

Evaluations are important in the process of teaching and learning. In health professions education, performance-based evaluations are identified as having “an emphasis on testing complex, ‘higher-order’ knowledge and skills in the real-world context in which they are actually used.” 1 Objective structured clinical examinations (OSCEs) are a common, notable example. 2 On Miller's pyramid, a framework used in medical education for measuring learner outcomes, “knows” is placed at the base of the pyramid, followed by “knows how,” then “shows how,” and finally, “does” is placed at the top. 3 Based on Miller's pyramid, evaluation formats that use multiple-choice testing focus on “knows” while an OSCE focuses on “shows how.” Just as performance evaluations remain highly valued in medical education, 4 authentic task evaluations in pharmacy education may be better indicators of future pharmacist performance. 5 Much attention in medical education has been focused on reducing the unreliability of high-stakes evaluations. 6 Regardless of educational discipline, high-stakes performance-based evaluations should meet educational standards for reliability and validity. 7

PharmD students at University of Toledo College of Pharmacy (UTCP) were required to complete a course on presentations during their final year of pharmacy school and then give a presentation that served as both a capstone experience and a performance-based evaluation for the course. Pharmacists attending the presentations were given Accreditation Council for Pharmacy Education (ACPE)-approved continuing education credits. An evaluation rubric for grading the presentations was designed to allow multiple faculty evaluators to objectively score student performances in the domains of presentation delivery and content. Given the pass/fail grading procedure used in advanced pharmacy practice experiences, passing this presentation-based course and subsequently graduating from pharmacy school were contingent upon this high-stakes evaluation. As a result, the reliability and validity of the rubric used and the evaluation process needed to be closely scrutinized.

Each year, about 100 students completed presentations and at least 40 faculty members served as evaluators. With the use of multiple evaluators, a question of evaluator leniency often arose (ie, whether evaluators used the same criteria for evaluating performances or whether some evaluators graded easier or more harshly than others). At UTCP, opinions among some faculty evaluators and many PharmD students implied that evaluator leniency in judging the students' presentations significantly affected specific students' grades and ultimately their graduation from pharmacy school. While it was plausible that evaluator leniency was occurring, the magnitude of the effect was unknown. Thus, this study was initiated partly to address this concern over grading consistency and scoring variability among evaluators.

Because both students' presentation style and content were deemed important, each item of the rubric was weighted the same across delivery and content. However, because there were more categories related to delivery than content, an additional faculty concern was that students feasibly could present poor content but have an effective presentation delivery and pass the course.

The objectives for this investigation were: (1) to describe and optimize the reliability of the evaluation rubric used in this high-stakes evaluation; (2) to identify the contribution and significance of evaluator leniency to evaluation reliability; and (3) to assess the validity of this evaluation rubric within a criterion-referenced grading paradigm focused on both presentation delivery and content.

DESIGN

The University of Toledo's Institutional Review Board approved this investigation. This study investigated performance evaluation data for an oral presentation course for final-year PharmD students from 2 consecutive academic years (2007-2008 and 2008-2009). The course was taken during the fourth year (P4) of the PharmD program and was a high-stakes, performance-based evaluation. The goal of the course was to serve as a capstone experience, enabling students to demonstrate advanced drug literature evaluation and verbal presentation skills through the development and delivery of a 1-hour presentation. These presentations were to be on a current pharmacy practice topic and of sufficient quality for ACPE-approved continuing education. This experience allowed students to demonstrate their competencies in literature searching, literature evaluation, and application of evidence-based medicine, as well as their oral presentation skills. Students worked closely with a faculty advisor to develop their presentation. Each class (2007-2008 and 2008-2009) was randomly divided, with half of the students taking the course and completing their presentation and evaluation in the fall semester and the other half in the spring semester. To accommodate such a large number of students presenting for 1 hour each, it was necessary to use multiple rooms with presentations taking place concurrently over 2.5 days for both the fall and spring sessions of the course. Two faculty members independently evaluated each student presentation using the provided evaluation rubric. The 2007-2008 presentations involved 104 PharmD students and 40 faculty evaluators, while the 2008-2009 presentations involved 98 students and 46 faculty evaluators.

After vetting through the pharmacy practice faculty, the initial rubric used in 2007-2008 focused on describing explicit, specific evaluation criteria such as amounts of eye contact, voice pitch/volume, and descriptions of study methods. The evaluation rubric used in 2008-2009 was similar to the initial rubric, but with 5 items added (Figure 1). The evaluators rated each item (eg, eye contact) based on their perception of the student's performance. The 25 rubric items had equal weight (ie, 4 points each), but each item received a rating from the evaluator of 1 to 4 points. Thus, only 4 rating categories were included, as has been recommended in the literature. 8 However, some evaluators created an additional 3 rating categories by marking lines in between the 4 ratings to signify half points (ie, 1.5, 2.5, and 3.5). For example, for the “notecards/notes” item in Figure 1, a student looked at her notes sporadically during her presentation, but not distractingly nor enough to warrant a score of 3 in the faculty evaluator's opinion, so a 3.5 was given. Thus, a 7-category rating scale (1, 1.5, 2, 2.5, 3, 3.5, and 4) was analyzed. Each independent evaluator's ratings for the 25 items were summed to form a score (0-100%). The 2 evaluators' scores then were averaged and a letter grade was assigned based on the following scale: >90% = A, 80%-89% = B, 70%-79% = C, <70% = F.
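The traditional scoring arithmetic described above can be sketched in a few lines of Python. This is only an illustration, not the course's actual grading tool: the function names are hypothetical, and treating a score of exactly 90% as an A (with cutoffs at 90, 80, and 70) is an assumption, since the published scale lists ">90%" and "80%-89%" without saying where 90% itself falls.

```python
from statistics import mean

N_ITEMS = 25        # rubric items in the 2008-2009 rubric
MAX_PER_ITEM = 4    # each item is rated from 1 to 4 points


def evaluator_percent(ratings):
    """Sum one evaluator's item ratings and express them as a percentage of 100 points."""
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} ratings, got {len(ratings)}")
    if any(not 1 <= r <= MAX_PER_ITEM for r in ratings):
        raise ValueError("each rating must be between 1 and 4")
    return 100.0 * sum(ratings) / (N_ITEMS * MAX_PER_ITEM)


def letter_grade(percent):
    """Map a percentage to a letter grade (assumed cutoffs: 90 / 80 / 70)."""
    if percent >= 90:
        return "A"
    if percent >= 80:
        return "B"
    if percent >= 70:
        return "C"
    return "F"


def presentation_grade(ratings_a, ratings_b):
    """Average the two evaluators' percentages, then assign a letter grade."""
    average = mean([evaluator_percent(ratings_a), evaluator_percent(ratings_b)])
    return average, letter_grade(average)
```

For instance, one evaluator giving all 4s and another giving all 3s yields percentages of 100 and 75, which average to 87.5 and map to a B under the assumed cutoffs.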

Figure 1. Rubric used to evaluate student presentations given in a 2008-2009 capstone PharmD course.

EVALUATION AND ASSESSMENT

Rubric reliability.

To measure rubric reliability, iterative analyses were performed on the evaluations using the Many-Facets Rasch Model (MFRM) following the 2007-2008 data collection period. While Cronbach's alpha is the most commonly reported coefficient of reliability, its single number reporting without supplementary information can provide incomplete information about reliability. 9 - 11 Due to its formula, Cronbach's alpha can be increased by simply adding more repetitive rubric items or having more rating scale categories, even when no further useful information has been added. The MFRM also reports separation, which is calculated differently than Cronbach's alpha and is another source of reliability information. Unlike Cronbach's alpha, separation does not appear to be enhanced by adding further redundant items. From a measurement perspective, a higher separation value is better than a lower one because students are being divided into meaningful groups after measurement error has been accounted for. Separation can be thought of as the number of units on a ruler where the more units the ruler has, the larger the range of performance levels that can be measured among students. For example, a separation of 4.0 suggests 4 graduations such that a grade of A is distinctly different from a grade of B, which in turn is different from a grade of C or of F. In measuring performances, a separation of 9.0 is better than 5.5, just as a separation of 7.0 is better than a 6.5; a higher separation coefficient suggests that student performance potentially could be divided into a larger number of meaningfully separate groups.
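One way to see why separation can keep discriminating when a reliability coefficient is already near its ceiling is the conversion commonly used in Rasch measurement, R = G² / (1 + G²), where G is separation and R is reliability. The article does not state this formula, so the sketch below brings it in as an assumption; the function names are hypothetical.

```python
import math


def reliability_from_separation(separation):
    """Convert a separation index G to a reliability coefficient, R = G^2 / (1 + G^2)."""
    return separation ** 2 / (1 + separation ** 2)


def separation_from_reliability(reliability):
    """Inverse conversion, G = sqrt(R / (1 - R)); undefined when R equals 1."""
    if not 0 <= reliability < 1:
        raise ValueError("reliability must be in [0, 1)")
    return math.sqrt(reliability / (1 - reliability))


# Separation values reported in this article, for illustration.
for g in (6.31, 7.10, 1.85):
    print(g, round(reliability_from_separation(g), 2))
```

Under this assumed relationship, the separations of 6.31 and 7.10 reported in this article both round to a reliability of 0.98, and the evaluator separation of 1.85 corresponds to about 0.77, which matches the paired values the article reports.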

The rating scale can have substantial effects on reliability, 8 and describing how a rating scale functions is a distinctive aspect of the MFRM. With analysis iterations of the 2007-2008 data, the rating scale categories were collapsed consecutively until improvements in reliability and/or separation were no longer found. The last iteration that improved reliability or separation was deemed the optimal rating scale for this evaluation rubric.

In the 2007-2008 analysis, iterations of the data were run through the MFRM. While only 4 rating scale categories had been included on the rubric, because some faculty members inserted 3 in-between categories, 7 categories had to be included in the analysis. This initial analysis based on a 7-category rubric provided a reliability coefficient (similar to Cronbach's alpha) of 0.98, while the separation coefficient was 6.31. The separation coefficient denoted 6 distinctly separate groups of students based on the items. Rating scale categories were collapsed, with “in-between” categories included in adjacent full-point categories. Table 1 shows the reliability and separation for the iterations as the rating scale was collapsed. As shown, the optimal evaluation rubric maintained a reliability of 0.98, but separation improved to 7.10, or 7 distinctly separate groups of students based on the items. Another distinctly separate group was added through a reduction in the rating scale while no change was seen in the reliability coefficient, even though the number of rating scale categories was reduced. Table 1 describes the stepwise, sequential pattern across the final 4 rating scale categories analyzed. Informed by the 2007-2008 results, the 2008-2009 evaluation rubric (Figure 1) used 4 rating scale categories and reliability remained high.
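The collapsing step can be pictured as a simple recoding of half-point ratings into a neighboring whole-point category. The article does not say which adjacent category each in-between rating was merged into, so the `direction` parameter below is an assumption added for illustration, and the function name is hypothetical.

```python
import math

VALID_RATINGS = {1, 1.5, 2, 2.5, 3, 3.5, 4}


def collapse_rating(rating, direction="down"):
    """Recode a 7-category rating (1, 1.5, ..., 4) into one of 4 whole-point categories.

    direction="down" merges a half point into the category below it;
    direction="up" merges it into the category above. Which direction
    the original analysis used is not stated, so both are offered.
    """
    if rating not in VALID_RATINGS:
        raise ValueError(f"unexpected rating: {rating}")
    if direction == "down":
        return math.floor(rating)
    if direction == "up":
        return math.ceil(rating)
    raise ValueError("direction must be 'down' or 'up'")


collapsed = [collapse_rating(r) for r in (4, 3.5, 3, 2.5)]
```

Whole-point ratings pass through unchanged in either direction, so only the evaluations that used half points are affected by the recoding.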

Table 1. Evaluation Rubric Reliability and Separation with Iterations While Collapsing Rating Scale Categories.

a Reliability coefficient of variance in rater response that is reproducible (ie, Cronbach's alpha).

b Separation is a coefficient of item standard deviation divided by average measurement error and is an additional reliability coefficient.

c Optimal number of rating scale categories based on the highest reliability (0.98) and separation (7.1) values.

Evaluator Leniency

Harsh raters (ie, hawks) and lenient raters (ie, doves), described by Fleming and colleagues over half a century ago, 6 have also been demonstrated as an issue in more recent studies. 12 - 14 Shortly after 2008-2009 data were collected, those evaluations by multiple faculty evaluators were collated and analyzed in the MFRM to identify possible inconsistent scoring. While traditional interrater reliability does not deal with this issue, the MFRM had been used previously to illustrate evaluator leniency on licensing examinations for medical students and medical residents in the United Kingdom. 13 Thus, accounting for evaluator leniency may prove important to grading consistency (and reliability) in a course using multiple evaluators. Along with identifying evaluator leniency, the MFRM also corrected for this variability. For comparison, course grades were calculated by summing the evaluators' actual ratings (as discussed in the Design section) and compared with the MFRM-adjusted grades to quantify the degree of evaluator leniency occurring in this evaluation.

Measures created from the data analysis in the MFRM were converted to percentages using a common linear test-equating procedure involving the mean and standard deviation of the dataset. 15 To these percentages, student letter grades were assigned using the same traditional method used in 2007-2008 (ie, 90% = A, 80% - 89% = B, 70% - 79% = C, <70% = F). Letter grades calculated using the revised rubric and the MFRM then were compared to letter grades calculated using the previous rubric and course grading method.
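A linear equating step of this kind is usually a mean-and-standard-deviation rescaling: each measure is standardized and then placed on the target scale. The sketch below assumes the target mean and standard deviation come from the raw percentage scores of the same students, which the article implies but does not spell out; the function name and the sample numbers in the usage note are hypothetical.

```python
from statistics import mean, pstdev


def linear_equate(measures, raw_percents):
    """Rescale Rasch measures (logits) onto the percentage scale.

    Each measure x becomes mu_y + sd_y * (x - mu_x) / sd_x, where
    (mu_x, sd_x) describe the measures and (mu_y, sd_y) describe the
    raw percentage scores (assumed here to be the target scale).
    """
    mu_x, sd_x = mean(measures), pstdev(measures)
    mu_y, sd_y = mean(raw_percents), pstdev(raw_percents)
    if sd_x == 0:
        raise ValueError("measures have no spread; cannot rescale")
    return [mu_y + sd_y * (x - mu_x) / sd_x for x in measures]
```

With made-up measures of -1.0, 0.0, and 1.0 logits and raw percentages of 70, 80, and 90, the rescaled values land back on approximately 70, 80, and 90, since the transformation preserves the mean and spread of the target scale.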

In the analysis of the 2008-2009 data, the interrater reliability for the letter grades when comparing the 2 independent faculty evaluations for each presentation was 0.98 by Cohen's kappa. However, using the 3-facet MFRM revealed significant variation in grading. The interaction of evaluator leniency on student ability and item difficulty was significant (chi-square, p < 0.01). As well, the MFRM showed a reliability of 0.77, with a separation of 1.85 (ie, almost 2 groups of evaluators). The MFRM student ability measures were scaled to letter grades and compared with course letter grades. As a result, 2 B's became A's and so evaluator leniency accounted for a 2% change in letter grades (ie, 2 of 98 grades).
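For readers unfamiliar with Cohen's kappa, the statistic compares the observed agreement between 2 raters with the agreement expected by chance from each rater's category frequencies. The following is a minimal sketch of that standard calculation; the function name and the sample letter grades in the usage note are hypothetical and are not the study data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one category per subject."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must rate the same non-empty set of subjects")
    # Proportion of subjects on which the raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)
```

As a made-up example, grades of A, A, B, B from one evaluator and A, A, B, C from another agree on 3 of 4 presentations; chance agreement is 0.375, giving a kappa of 0.6.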

Validity and Grading

Explicit criterion-referenced standards for grading are recommended for higher evaluation validity. 3 , 16 - 18 The course coordinator completed 3 additional evaluations of a hypothetical student presentation rating the minimal criteria expected to describe each of an A, B, or C letter grade performance. These evaluations were placed with the other 196 evaluations (2 evaluators × 98 students) from 2008-2009 into the MFRM, with the resulting analysis report giving specific cutoff percentage scores for each letter grade. Unlike the traditional scoring method of assigning all items an equal weight, the MFRM ordered evaluation items from those more difficult for students (given more weight) to those less difficult for students (given less weight). These criterion-referenced letter grades were compared with the grades generated using the traditional grading process.

When the MFRM data were rerun with the criterion-referenced evaluations added into the dataset, a 10% change was seen with letter grades (ie, 10 of 98 grades). When the 10 letter grades were lowered, 1 was below a C, the minimum standard, and suggested a failing performance. Qualitative feedback from faculty evaluators agreed with this suggested criterion-referenced performance failure.

Measurement Model

Within modern test theory, the Rasch Measurement Model maps examinee ability with evaluation item difficulty. Items are not arbitrarily given the same value (ie, 1 point) but vary based on how difficult or easy the items were for examinees. The Rasch measurement model has been used frequently in educational research, 19 by numerous high-stakes testing professional bodies such as the National Board of Medical Examiners, 20 and also by various state-level departments of education for standardized secondary education examinations. 21 The Rasch measurement model itself has rigorous construct validity and reliability. 22 A 3-facet MFRM model allows an evaluator variable to be added to the student ability and item difficulty variables that are routine in other Rasch measurement analyses. Just as multiple regression accounts for additional variables in analysis compared to a simple bivariate regression, the MFRM is a multiple variable variant of the Rasch measurement model and was applied in this study using the Facets software (Linacre, Chicago, IL). The MFRM is ideal for performance-based evaluations with the addition of independent evaluator/judges. 8 , 23 From both yearly cohorts in this investigation, evaluation rubric data were collated and placed into the MFRM for separate though subsequent analyses. Within the MFRM output report, a chi-square for a difference in evaluator leniency was reported with an alpha of 0.05.
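To make the 3-facet idea concrete, the sketch below computes rating category probabilities under the rating scale form of the many-facet Rasch model as it is usually written: the log-odds of category k over category k-1 equals B - D - C - F_k, where B is student ability, D is item difficulty, C is evaluator severity, and F_k is the threshold for step k. This is the textbook formulation, not a reproduction of the Facets configuration used in the study, and the function name and parameter values are hypothetical.

```python
import math


def mfrm_category_probs(ability, item_difficulty, rater_severity, thresholds):
    """Probabilities of each rating category under a 3-facet rating scale model.

    thresholds holds F_1..F_m for the m steps above the lowest category,
    so the result has m + 1 probabilities (lowest category first).
    """
    eta = ability - item_difficulty - rater_severity
    # Cumulative log-numerators: 0 for the lowest category, then add (eta - F_k) per step.
    log_numerators = [0.0]
    for f in thresholds:
        log_numerators.append(log_numerators[-1] + eta - f)
    peak = max(log_numerators)  # subtract the maximum for numerical stability
    weights = [math.exp(v - peak) for v in log_numerators]
    total = sum(weights)
    return [w / total for w in weights]


def expected_rating(probs, lowest=1):
    """Expected rating when categories are scored lowest, lowest + 1, ..."""
    return sum((lowest + k) * p for k, p in enumerate(probs))


lenient = mfrm_category_probs(1.0, 0.0, 0.0, [-1.0, 0.0, 1.0])
harsh = mfrm_category_probs(1.0, 0.0, 1.0, [-1.0, 0.0, 1.0])
```

In this sketch the same student on the same item receives a lower expected rating from the evaluator with severity 1.0 than from the evaluator with severity 0.0, which is the effect the evaluator facet is meant to capture and adjust for.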

The presentation rubric was reliable. Results from the 2007-2008 analysis illustrated that the number of rating scale categories impacted the reliability of this rubric and that use of only 4 rating scale categories appeared best for measurement. While a 10-point Likert-like scale may commonly be used in patient care settings, such as in quantifying pain, most people cannot process more than 7 points or categories reliably. 24 Presumably, when more than 7 categories are used, the categories beyond 7 either are not used or are collapsed by respondents into fewer than 7 categories. Five-point scales commonly are encountered, but use of an odd number of categories can be problematic to interpretation and is not recommended. 25 Responses using the middle category could denote a true perceived average or neutral response or responder indecisiveness or even confusion over the question. Therefore, removing the middle category appears advantageous and is supported by our results.

With 2008-2009 data, the MFRM identified evaluator leniency, with some evaluators grading more harshly while others were lenient. Although evaluator leniency was indeed found in the dataset, only a couple of grade changes were suggested by the MFRM correction, and leniency did not appear to play a substantial role in the evaluation of this course at this time.

Performance evaluation instruments are either holistic or analytic rubrics. 26 The evaluation instrument used in this investigation exemplified an analytic rubric, which elicits specific observations and often demonstrates high reliability. However, Norman and colleagues point out a conundrum where drastically increasing the number of evaluation rubric items (creating something similar to a checklist) could augment a reliability coefficient though it appears to dissociate from that evaluation rubric's validity. 27 Validity may be more than the sum of behaviors on evaluation rubric items. 28 Having numerous, highly specific evaluation items appears to undermine the rubric's function. With this investigation's evaluation rubric and its numerous items for both presentation style and presentation content, equal numeric weighting of items can in fact allow student presentations to receive a passing score while falling short of the course objectives, as was shown in the present investigation. As opposed to analytic rubrics, holistic rubrics often demonstrate lower yet acceptable reliability, while offering a higher degree of explicit connection to course objectives. A summative, holistic evaluation of presentations may improve validity by allowing expert evaluators to provide their “gut feeling” as experts on whether a performance is “outstanding,” “sufficient,” “borderline,” or “subpar” for dimensions of presentation delivery and content. A holistic rubric that integrates with criteria of the analytic rubric (Figure 1) for evaluators to reflect on but maintains a summary, overall evaluation for each dimension (delivery/content) of the performance, may allow for benefits of each type of rubric to be used advantageously. This finding has been demonstrated with OSCEs in medical education where checklists for completed items (ie, yes/no) at an OSCE station have been successfully replaced with a few reliable global impression rating scales. 29 - 31

Alternatively, and because the MFRM was used in the current study, an item-weighting approach could be used with the analytic rubric. That is, item weighting based on the difficulty of each rubric item could suggest how many points should be given for that rubric item (eg, some items would be worth 0.25 points, while others would be worth 0.5 points or 1 point) (Table 2). As could be expected, the more complex the rubric scoring becomes, the less feasible the rubric is to use. This was the main reason why this revision approach was not chosen by the course coordinator following this study. As well, it does not address the conundrum that the performance may be more than the summation of behavior items in the Figure 1 rubric. This current study cannot suggest which approach would be better as each would have its merits and pitfalls.

Table 2. Rubric Item Weightings Suggested in the 2008-2009 Data Many-Facet Rasch Measurement Analysis

Regardless of which approach is used, alignment of the evaluation rubric with the course objectives is imperative. Objectivity has been described as a general striving for value-free measurement (ie, free of the evaluator's interests, opinions, preferences, sentiments). 27 This is a laudable goal pursued through educational research. Strategies to reduce measurement error, termed objectification, may not necessarily lead to increased objectivity. 27 The current investigation suggested that a rubric could become too explicit if all the possible areas of an oral presentation that could be assessed (ie, objectification) were included. This appeared to dilute the effect of important items and lose validity. A holistic rubric that is more straightforward and easier to score quickly may be less likely to lose validity (ie, “lose the forest for the trees”), though operationalizing a revised rubric would need to be investigated further. Similarly, weighting items in an analytic rubric based on their importance and difficulty for students may alleviate this issue; however, adding up individual items might prove arduous. While the rubric in Figure 1, which has evolved over the years, is the subject of ongoing revisions, it appears a reliable rubric on which to build.

The major limitation of this study involves the observational method that was employed. Although the 2 cohorts were from a single institution, investigators did use a completely separate class of PharmD students to verify initial instrument revisions. Optimizing the rubric's rating scale involved collapsing data from misuse of a 4-category rating scale (expanded by evaluators to 7 categories) by a few of the evaluators into 4 independent categories without middle ratings. As a result of the study findings, no actual grading adjustments were made for students in the 2008-2009 presentation course; however, adjustments using the MFRM have been suggested by Roberts and colleagues. 13 Since 2008-2009, the course coordinator has made further small revisions to the rubric based on feedback from evaluators, but these have not yet been re-analyzed with the MFRM.

The evaluation rubric used in this study for student performance evaluations showed high reliability and the data analysis agreed with using 4 rating scale categories to optimize the rubric's reliability. While lenient and harsh faculty evaluators were found, variability in evaluator scoring affected grading in this course only minimally. Aside from reliability, issues of validity were raised using criterion-referenced grading. Future revisions to this evaluation rubric should reflect these criterion-referenced concerns. The rubric analyzed herein appears a suitable starting point for reliable evaluation of PharmD oral presentations, though it has limitations that could be addressed with further attention and revisions.

ACKNOWLEDGEMENT

Author contributions— MJP and EGS conceptualized the study, while MJP and GES designed it. MJP, EGS, and GES gave educational content foci for the rubric. As the study statistician, MJP analyzed and interpreted the study data. MJP reviewed the literature and drafted a manuscript. EGS and GES critically reviewed this manuscript and approved the final version for submission. MJP accepts overall responsibility for the accuracy of the data, its analysis, and this report.


Screening and Assessment Tools Chart

This comprehensive chart shows screening and assessment tools for alcohol and drug misuse. Tools are categorized by substance type, audience for screening, and administrator. The chart also provides other assessment tools that may be useful for providers doing substance use work.

Authoring Agency: National Institute on Drug Abuse (NIDA)

Resource URL: https://nida.nih.gov/nidamed-medical-health-professionals/screening-tools-resou…


Last Updated: 03/29/2023


Presentation Evaluation Form

What is a Presentation Evaluation Form?

A Presentation Evaluation Form is a structured tool designed for assessing and providing feedback on presentations. It systematically captures the effectiveness, content clarity, speaker’s delivery, and overall impact of a presentation. This form serves as a critical resource in educational settings, workplaces, and conferences, enabling presenters to refine their skills based on constructive feedback. Simple to understand yet comprehensive, this form bridges the gap between presenter effort and audience perception, facilitating a pathway for growth and improvement.

Presentation Evaluation Format

Title: Investment Presentation Evaluation

Section 1: Presenter Information

Section 2: Evaluation Criteria

- Clarity and Coherence:

- Depth of Content:

- Delivery and Communication:

- Engagement and Interaction:

- Use of Supporting Materials (Data, Charts, Visuals):

Section 3: Overall Rating

- Satisfactory

- Needs Improvement

Section 4: Comments for Improvement

- Open-ended section for specific feedback and suggestions.

Section 5: Evaluator Details

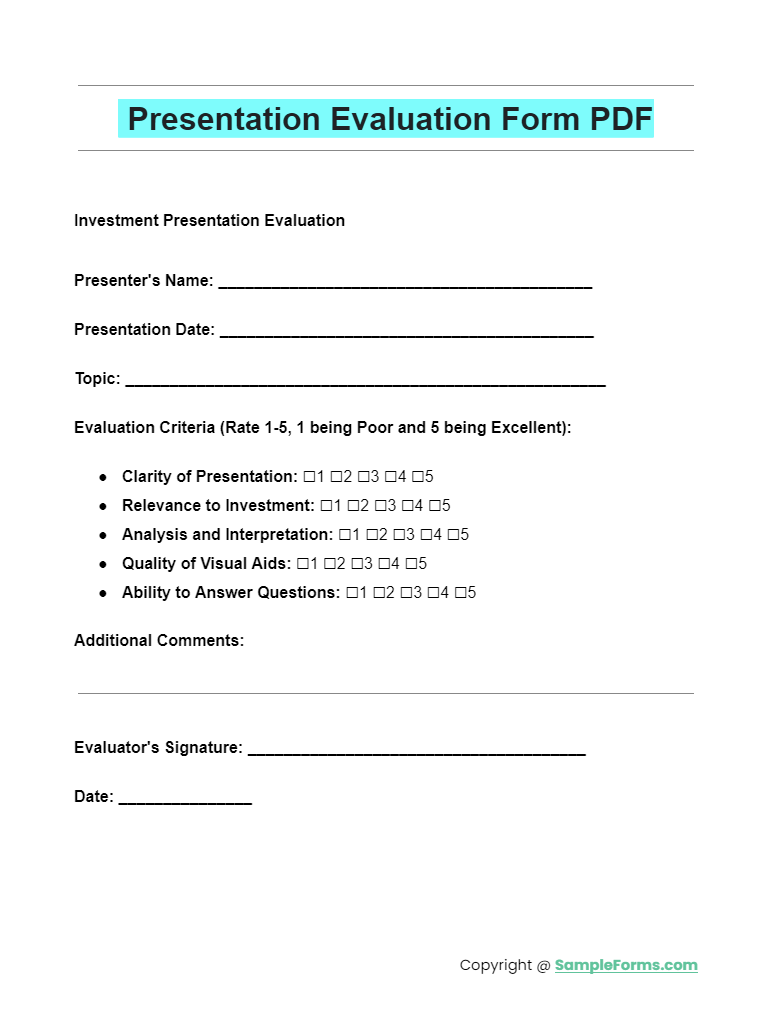

Presentation Evaluation Form PDF, Word, Google Docs

Explore the essential tool for assessing presentations with our Presentation Evaluation Form PDF. Designed for clarity and effectiveness, this form aids in pinpointing areas of strength and improvement. It seamlessly integrates with the Employee Evaluation Form, ensuring comprehensive feedback and developmental insights for professionals aiming to enhance their presentation skills. You should also take a look at our Peer Evaluation Form.

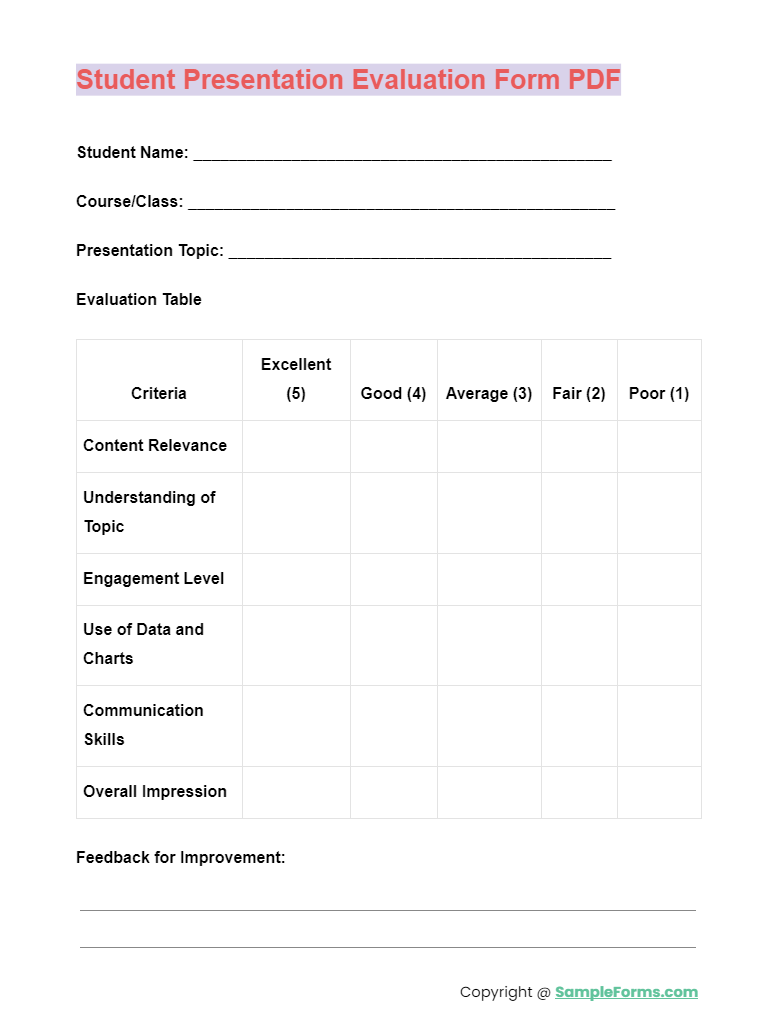

Student Presentation Evaluation Form PDF

Tailored specifically for educational settings, the Student Presentation Evaluation Form PDF facilitates constructive feedback for student presentations. It encourages growth and learning by focusing on content delivery and engagement. This form is a vital part of the Self Evaluation Form process, helping students reflect on their performance and identify self-improvement areas. You should also take a look at our Call Monitoring Evaluation Form.

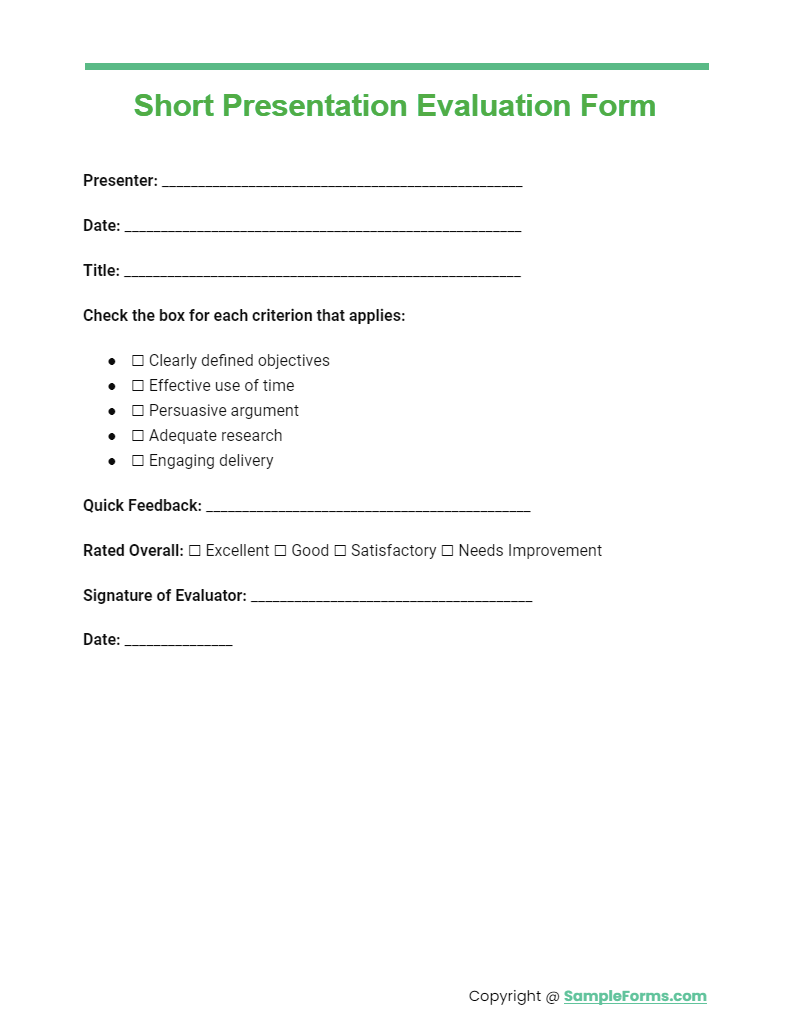

Short Presentation Evaluation Form

Our Short Presentation Evaluation Form is the perfect tool for quick and concise feedback. This streamlined version captures the essence of effective evaluation without overwhelming respondents, making it ideal for busy environments. Incorporate it into your Training Evaluation Form strategy to boost learning outcomes and presentation efficacy. You should also take a look at our Employee Performance Evaluation Form.

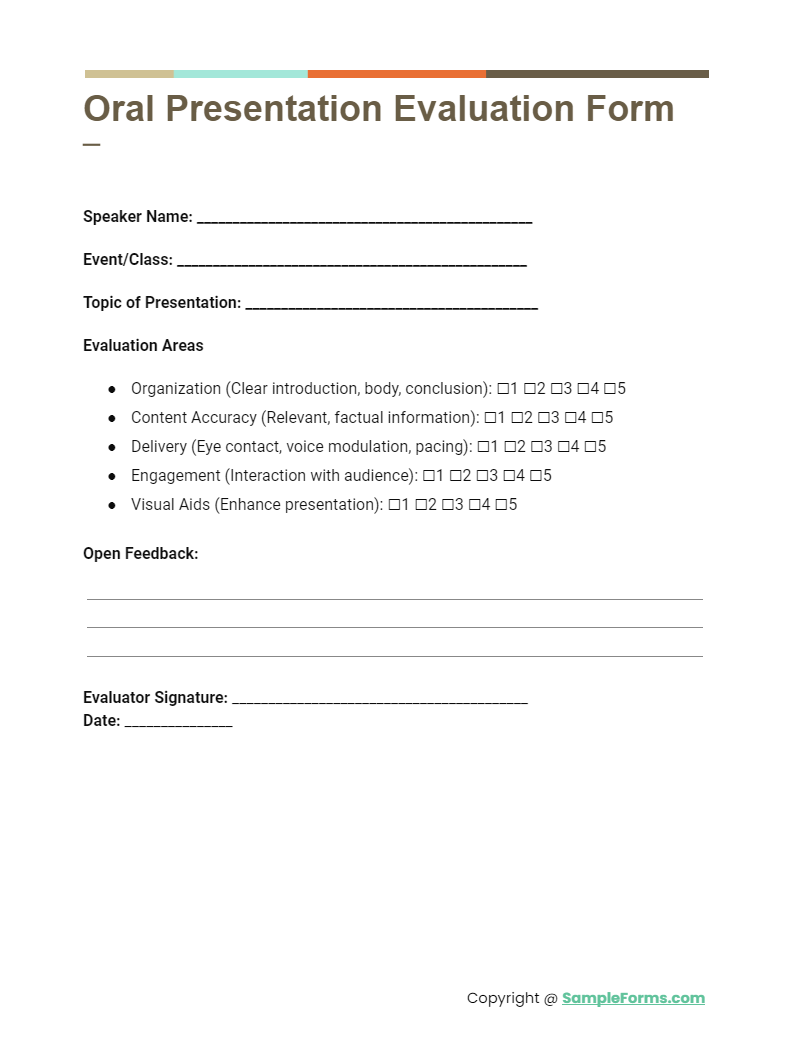

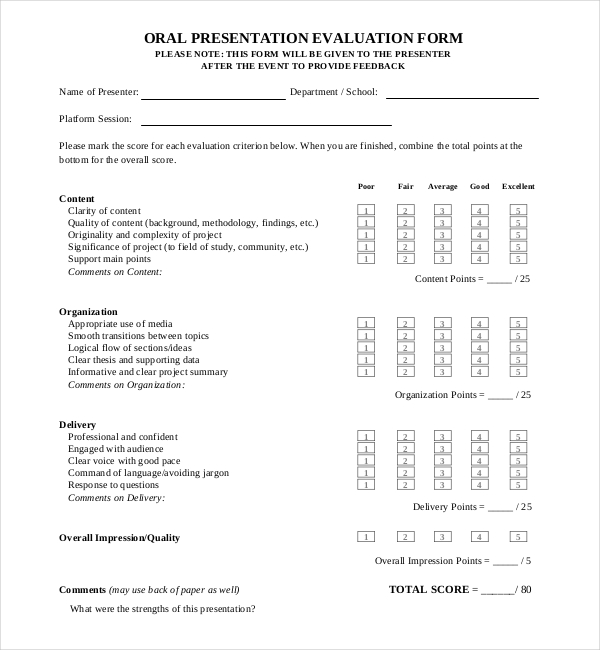

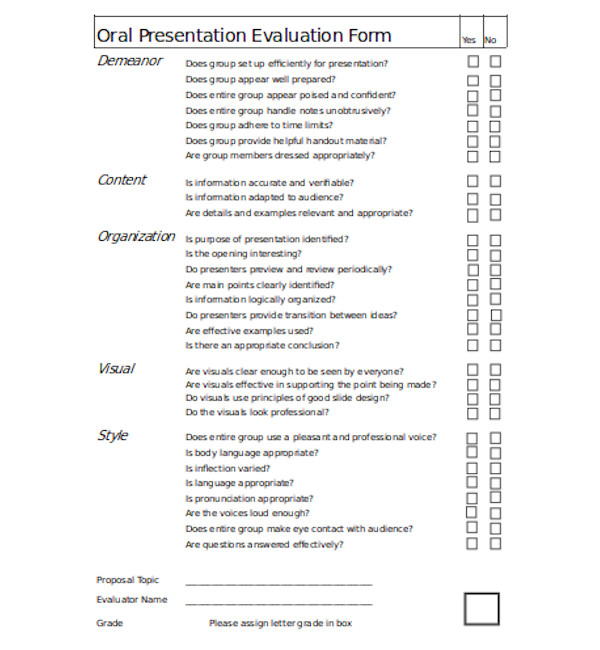

Oral Presentation Evaluation Form

The Oral Presentation Evaluation Form focuses on the delivery and content of spoken presentations. It’s designed to provide speakers with clear, actionable feedback on their verbal communication skills, engaging the audience, and conveying their message effectively. This form complements the Employee Self Evaluation Form, promoting self-awareness and improvement in public speaking skills. You should also take a look at our Interview Evaluation Form.

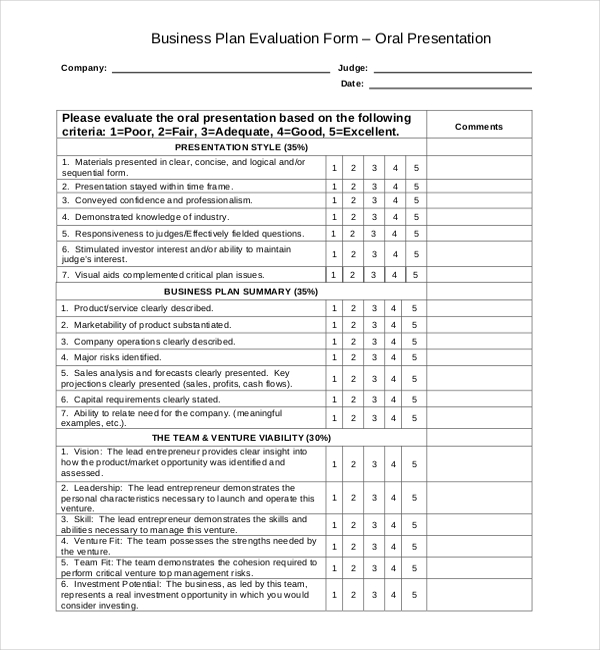

More Presentation Evaluation Form Samples

Business Plan Presentation Evaluation Form

nebusinessplancompetition.com

This form is used to evaluate the oral presentation. The audience has to explain whether the materials presented were clear, logical or sequential. The form is also used to explain whether the time frame of the presentation was appropriate. They have to evaluate whether the presentation conveyed professionalism and demonstrated knowledge of the industry.

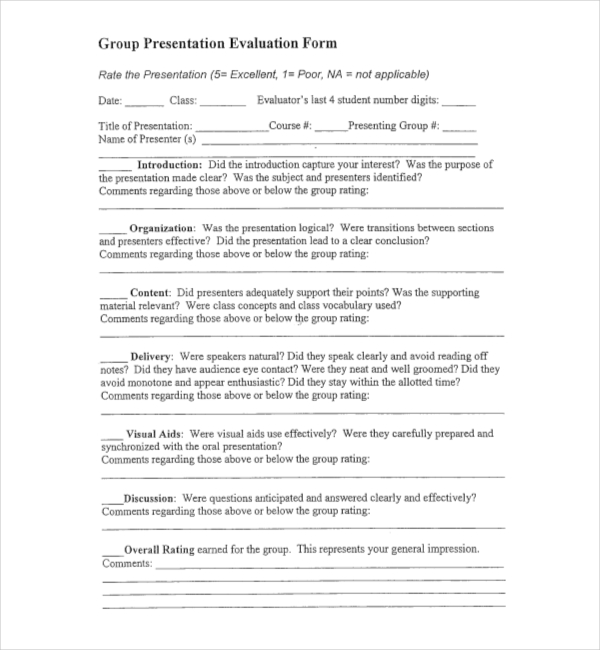

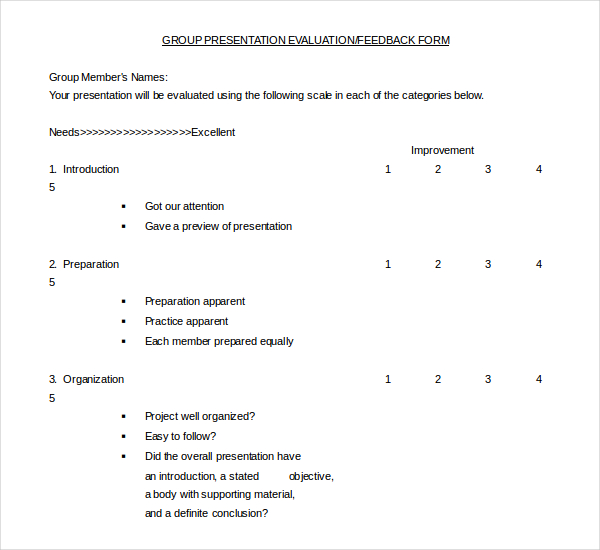

Group Presentation Evaluation Form

homepages.stmartin.edu

This form is used to explain whether the introduction captured the audience's interest. Evaluators further explain whether the purpose of the presentation was clear and logical, and whether the presentation resulted in a clear conclusion. They also explain whether the speakers were natural and clear and whether they made eye contact.

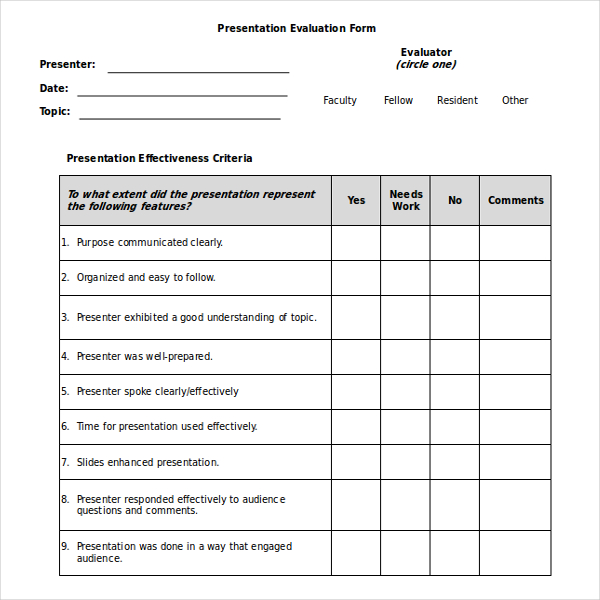

Formal Presentation Evaluation Form

This form is used by the audience of the presentation to explain whether the purpose was communicated clearly. They further explain whether the presentation was well organized and whether the presenter had an understanding of the topic. The form is also used to explain whether the presenter was well-prepared and spoke clearly.

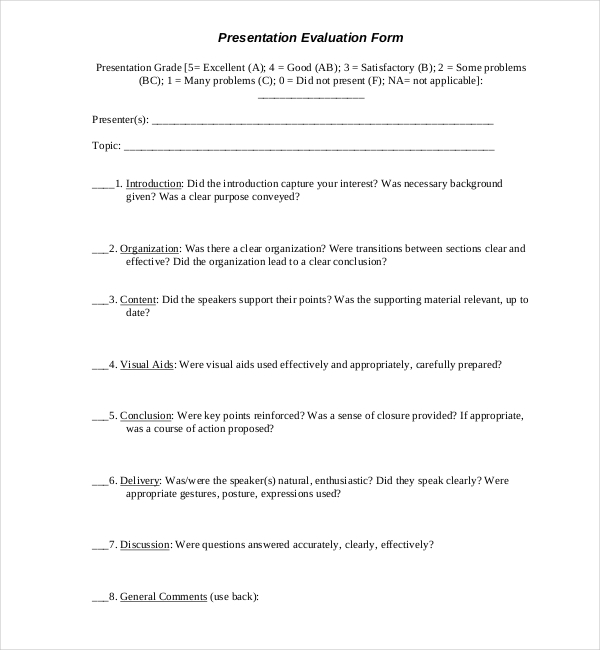

This form is used to evaluate the presentation by circling the suitable rating level. One can also use the provided space to include comments that support the ratings. The aim of evaluating the presentation is to identify strengths and find areas that require improvement.

Sample Group Presentation Evaluation Form

scc.spokane.edu

This form is used by students to evaluate other students' presentations that follow a technical format. It is a criteria-based form with points assigned to several criteria. Students also use it to grade the contributions of all other members of their group who participated in a project.

Presentation Evaluation Form Sample Download

english.wisc.edu

It is vital to evaluate a presentation before delivering it to the audience. Therefore, once the presentation is prepared, the presenter should contact the review team in the organization and have the presentation reviewed before the actual presentation day.

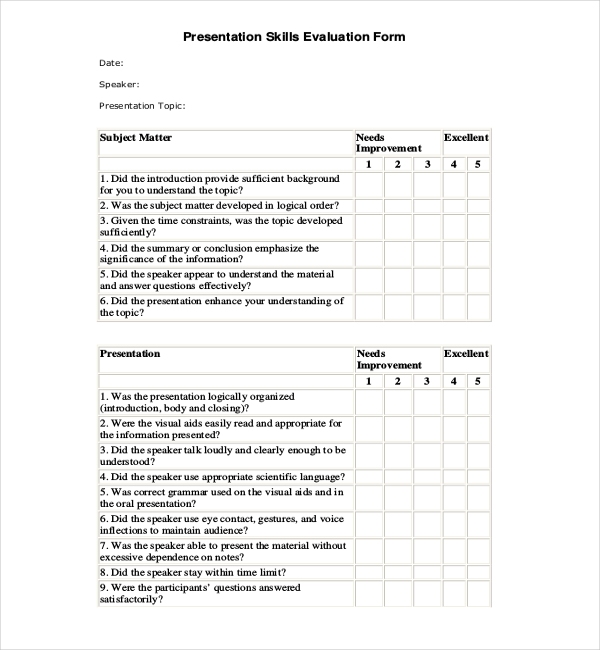

Presentation Skills Evaluation Form

samba.fsv.cuni.cz

There are sample presentation skills evaluation forms that one can use to conduct the evaluation and collect the necessary data. As opposed to creating a form from scratch, one can simply browse through the available templates. Evaluators have to explain whether the time and slides were used effectively.

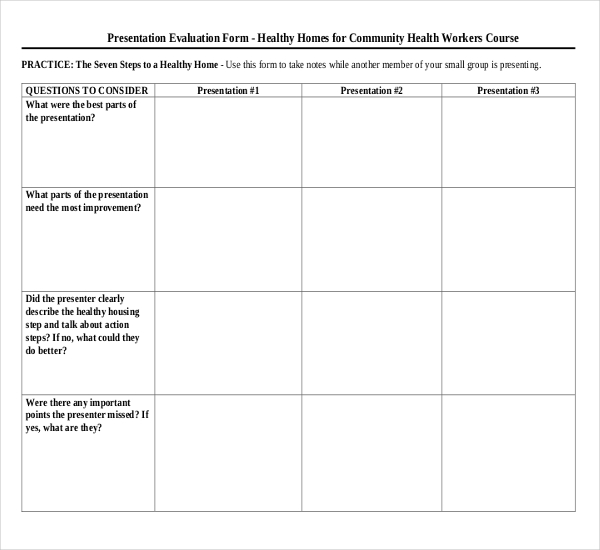

Presentation Evaluation Form Community Health Workers Course

This form is used to explain the best parts and worst parts of the presentation. The user has to explain whether the presenter described healthy housing and the action steps. They also have to explain whether the presenter missed any points and the ways the presenter can improve.

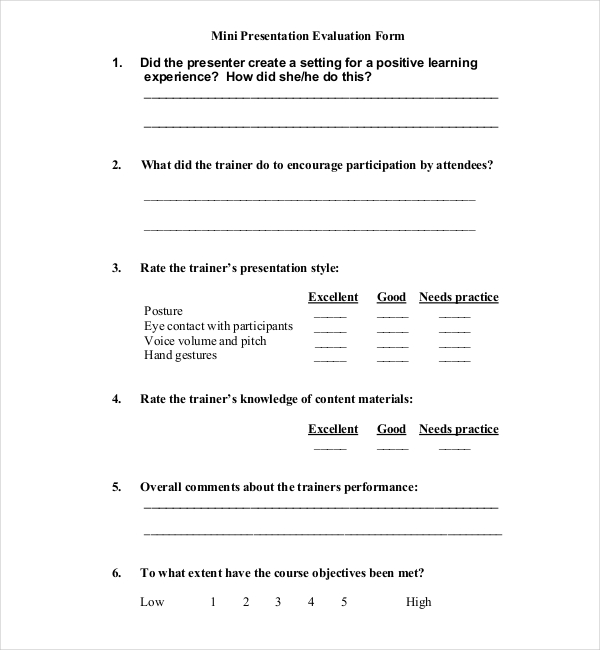

Mini Presentation Evaluation Form

This form is used to explain whether the presenter created a setting for a positive learning experience and how they did so. Evaluators further explain how the presenter encouraged participation. They have to rate the trainer’s presentation style, knowledge, eye contact, voice and hand gestures.

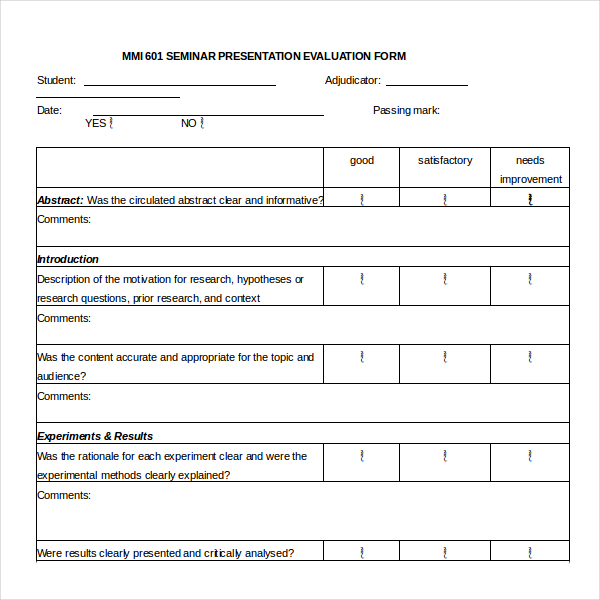

Seminar Presentation Evaluation Form

mmi.med.ualberta.ca

This form is used to give constructive feedback to the students who are presenting any of their seminars. The evaluation results will be used to enhance the effectiveness of the speaker. The speaker will discuss the evaluations with the graduate student’s adviser. This form can be used to add comments.

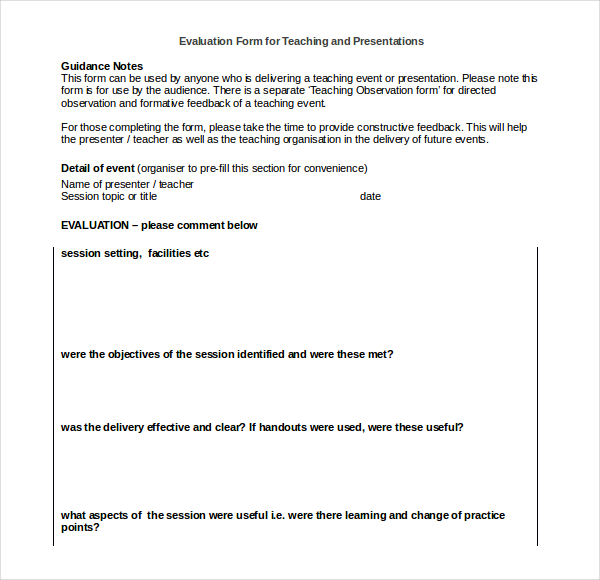

Evaluation Form for Teaching and Presentations

jrcptb.org.uk

This form is used by anyone who is providing a teaching presentation. This form is for use of the audience. There is a different Teaching Observation assessment for formative feedback and direct observation of a teaching event. They are asked to provide constructive feedback to help the presenter and the teaching organization in future events.

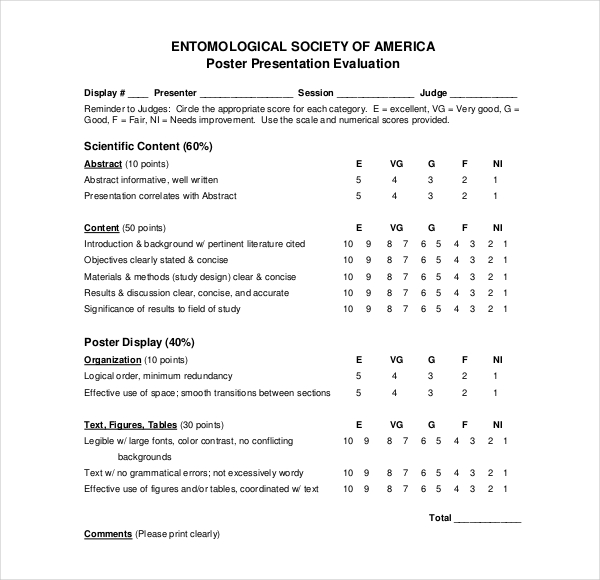

Poster Presentation Evaluation Form

This form involves inspection of the poster, with evaluation of its content and visual presentation. It is also used to discuss the plan for presenting the poster to a reviewer. The presenter needs to anticipate the questions asked in this process. Evaluators can also add comments, if necessary.

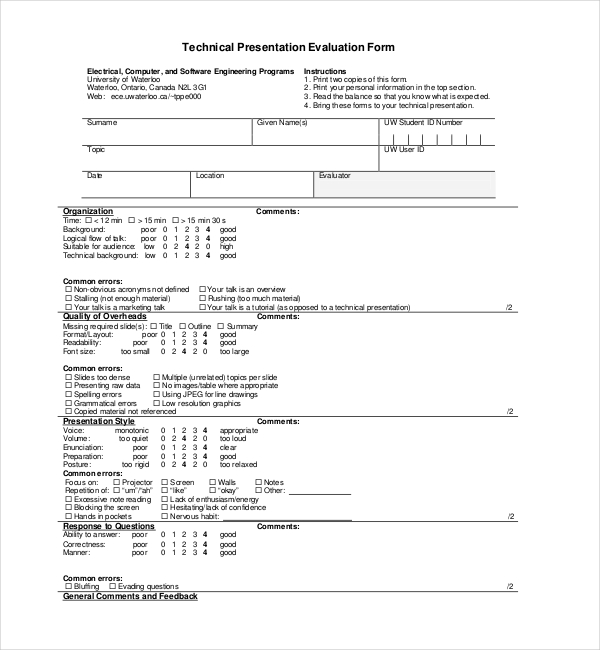

Technical Presentation Evaluation Form

uwaterloo.ca

This form is used to explain whether the introduction, preparation, content, objectives and presentation style were appropriate. It is also used to explain whether the presentation was visually appealing, whether the project was well presented, and whether the conclusion ended with a summary. One is also asked to explain whether the team members were well connected with each other. One can also add an overall rating of the project, comments, and a grade.

msatterw.public.iastate.edu

10 Uses of Presentation Evaluation Form

- Feedback Collection: Gathers constructive feedback from the audience or evaluators.

- Speaker Improvement: Identifies strengths and areas for improvement for the presenter.

- Content Assessment: Evaluates the relevance and quality of presentation content.

- Delivery Analysis: Reviews the effectiveness of the presenter’s delivery style.

- Engagement Measurement: Gauges audience engagement and interaction.

- Visual Aid Evaluation: Assesses the impact and appropriateness of visual aids used.

- Performance Benchmarking: Sets benchmarks for future presentations.

- Training Needs Identification: Identifies training and development needs for presenters.

- Peer Review: Facilitates peer feedback and collaborative improvement.

- Confidence Building: Helps presenters gain confidence through structured feedback.

How do you write a Presentation Evaluation?

Writing a presentation evaluation begins with understanding the objectives of the presentation. Incorporate elements from the Seminar Evaluation Form to assess the relevance and delivery of content. The evaluation should include:

- An Introduction that outlines the context and purpose of the presentation, setting the stage for the feedback.

- Criteria Assessment, where each aspect of the presentation, such as content clarity, audience engagement, and visual aid effectiveness, is evaluated. For instance, using a Resume Evaluation Form might inspire the assessment of organizational skills and preparedness.

- Overall Impression and Conclusion, which summarize the presentation’s strengths and areas for improvement, providing actionable suggestions for development. This mirrors the approach in a Proposal Evaluation Form, focusing on the impact and feasibility of the content presented.

How do you Evaluate Presentation Performance?

To evaluate presentation performance effectively, consider both the content and the presenter’s delivery skills. Similar to the structured feedback provided in a Speaker Evaluation Form, the evaluation should encompass:

- Content Quality, assessing the accuracy, relevance, and organization of the information presented.

- Delivery Skills, including the presenter’s ability to communicate clearly, maintain eye contact, and engage with the audience.

- The use of Visual Aids and their contribution to the presentation’s overall impact.

- Audience Response, gauging the level of engagement and feedback received, which can be compared to insights gained from an Activity Evaluation Form.

What are 3 examples of Evaluation Forms?

Various evaluation forms can be employed to cater to different assessment needs:

- A Chef Evaluation Form is essential for culinary presentations, focusing on creativity, presentation, and technique.

- The Trainee Evaluation Form offers a comprehensive review of a trainee’s performance, including their learning progress and application of skills.

- For technology-based presentations, a Website Evaluation Form can assess the design, functionality, and user experience of digital projects.

What are the Evaluation Methods for Presentation?

Combining qualitative and quantitative methods enriches the evaluation process. Direct observation allows for real-time analysis of the presentation, while feedback surveys, akin to those outlined in a Performance Evaluation Form, gather audience impressions. Self-assessment encourages presenters to reflect on their performance, utilizing insights similar to those from a Vendor Evaluation Form. Lastly, peer reviews provide an unbiased feedback loop, essential for comprehensive evaluations. Incorporating specific forms and methods, from the Program Evaluation Form to the Basketball Evaluation Form, and even niche-focused ones like the Restaurant Employee Evaluation Form, ensures a detailed and effective presentation evaluation process. This approach not only supports the presenter’s development but also enhances the overall quality of presentations across various fields and contexts. You should also take a look at our Internship Evaluation Form.

10 Tips for Presentation Evaluation Forms

- Be Clear: Define evaluation criteria clearly and concisely.

- Stay Objective: Ensure feedback is objective and based on observable facts.

- Use Rating Scales: Incorporate rating scales for quantifiable feedback.

- Encourage Specifics: Ask for specific examples to support feedback.

- Focus on Constructive Feedback: Emphasize areas for improvement and suggestions.

- Keep It Anonymous: Anonymous feedback can elicit more honest responses.

- Be Comprehensive: Cover content, delivery, visuals, and engagement.

- Follow Up: Use the feedback for discussion and development planning.

- Customize Forms: Tailor forms to the specific presentation type and audience.

- Digital Options: Consider digital forms for ease of collection and analysis.

Can you fail a Pre Employment Physical for being Overweight?

No, being overweight alone typically does not cause failure in a pre-employment physical unless it directly affects job-specific tasks. It’s essential to focus on overall health and ability, similar to assessments in a Mentee Evaluation Form . You should also take a look at our Teacher Evaluation Form

What is usually Included in an Annual Physical Exam?

An annual physical exam typically includes checking vital signs, blood tests, assessments of your organ health, lifestyle discussions, and preventative screenings, mirroring the comprehensive approach of a Sensory Evaluation Form . You should also take a look at our Oral Presentation Evaluation Form

What do you wear to Pre Employment Paperwork?

For pre-employment paperwork, wear business casual attire unless specified otherwise. It shows professionalism, akin to preparing for a Driver Evaluation Form , emphasizing readiness and respect for the process. You should also take a look at our Food Evaluation Form

What does a Pre-employment Physical Consist of?

A pre-employment physical consists of tests measuring physical fitness for the job, including hearing, vision, strength, and possibly drug screening, akin to the tailored approach of a Workshop Evaluation Form . You should also take a look at our Functional Capacity Evaluation Form

Where can I get a Pre Employment Physical Form?

Pre-employment physical forms can be obtained from the hiring organization’s HR department or downloaded from their website, much like how one might access a Sales Evaluation Form for performance review. You should also take a look at our Bid Evaluation Form .

How to get a Pre-employment Physical?

To get a pre-employment physical, contact your prospective employer for the form and details, then schedule an appointment with a healthcare provider who understands the requirements, similar to the process for a Candidate Evaluation Form . You should also take a look at our Customer Service Evaluation Form .

In conclusion, a Presentation Evaluation Form is pivotal for both personal and professional development. Through detailed samples, forms, and letters, this guide empowers users to harness the full benefits of feedback. Whether in debates, presentations, or any public speaking scenario, the Debate Evaluation Form aspect underscores its versatility and significance. Embrace this tool to unlock a new horizon of effective communication and presentation finesse.

Drug Evaluation and Classification Program, Advanced Roadside Impaired Driving Enforcement Resources

DECP, ARIDE and DRE Manuals

PDF, 88.2 MB

Sessions 1-5 Individual PowerPoints (ZIP format)

Sessions 6-8 Individual PowerPoints (ZIP format)

Sessions 9-13 Individual PowerPoints (ZIP format)

Sessions 14-16 Individual PowerPoints (ZIP format)

Sessions 17-20 Individual PowerPoints (ZIP format)

Sessions 21-30 Individual PowerPoints (ZIP format)

PDF, 38.6 MB

PDF, 37.4 MB

Sessions 1-5 individual PowerPoints (ZIP format)

Sessions 6-9 Individual PowerPoints (ZIP format)

PDF, 33.1 MB

PDF, 28.9 MB

Sessions 1-10 individual PowerPoints (ZIP format)

PDF, 64.2 MB

PDF, 63.82 MB

PDF, 37.8 MB

PDF, 37.3 MB

PDF, 157.8 MB

PDF, 152.7 MB

2017 and 2016

PDF, 20.8 MB

PDF, 19.9 MB

PDF, 13.1 MB

PDF, 12.2 MB

Get more resources from the International Drug Evaluation & Classification Program

1. American Society of Health-System Pharmacists. ASHP guidelines on medication-use evaluation. Am J Health-Syst Pharm. 2021;78:168-175.

2. ASHP Foundation Medication-Use Evaluation Resource Guide: Andexanet Alfa in the Management of Life-Threatening Bleeds in Patients on Direct Factor Xa-Inhibitors

evaluation and improvement of medication utilization.1 Various terms have been employed to describe programs intended to achieve this goal; in addition to MUE, drug use evaluation (DUE) and drug utilization review (DUR) have also been used.1-3 Although these terms are sometimes used interchangeably, MUE may be differentiated in that it em-

Student Instructions: Select a medication use evaluation based on a clinical problem relevant to your institution setting. Complete the questions provided in the module and write up the review. Set up a time with your preceptor to review the results of the completed module. Provide feedback to your preceptor on the usefulness of the points ...

The original "Template for the Evaluation of a Clinical Pharmacist," published in 1993, was based on the ACCP practice guidelines for pharmacotherapy specialists, the drug use process, and an American Society of Health-System Pharmacists (ASHP) paper on clinical practice in pharmacy.1 Feedback from users suggested that data from completed ...

An MUE can be defined as a focused effort to evaluate medication use processes or medication treatment response, with a goal of optimizing patient outcomes. 21 MUE, synonymous with "target drug" or "drug use" programs, also fits into disease state management programs that look to improve outcomes in patients with chronic illnesses. 22 ...

Presentation Content. Presents a complete history and physical Discusses pertinent laboratory data Understands pharmacology, pharmacodynamics, adverse effects, etc. of relevant drugs Exhibits understanding of patient's hospital course Demonstrates individualization of dosing regimen Identifies monitoring parameters for relevant drugs Presents ...

drug presentation evaluation form - Free download as PDF File (.pdf), Text File (.txt) or read online for free. Drug presentation evaluation form

Journal Club Presentation Evaluation Form Adapted from: Blommel ML, Abate MA. AJPE 2007;71(4) Article 63:1-6. Presenter: Reviewer: I. STUDY OVERVIEW 2 Points 1 Points 0 Points Score Introduction Authors' affiliations/study support Study objective(s) & rationale Methods - Design Case-control, cohort, controlled exp, etc.

Evaluation Principles. All evaluations—whether process or outcome, traditional or participatory—should adhere to the following four principles: utility, feasibility, propriety, and accuracy. Utility is about making sure the evaluation meets the needs of prevention stakeholders, including funders. To increase the utility of the evaluation ...

Monographs provide an overview/evaluation of drugs, therapeutic classes and disease state therapies, to include efficacy, safety, cost information and recommendations for formulary placement. Prepared by pharmacists who support P&T Committees. Sometimes reviewed by specialists prior to P&T Committees. Monographs in Formulary Decision-Making.

Patient Case Presentation Evaluation Form. Student(s): Evaluator:Date: ... (Ex: clear link between patient presentation, literature evaluation and drug therapy assessment and plan) 4. Presentation Skills. 2. Presentation was logically organized and information was clearly explained.

This study investigated performance evaluation data for an oral presentation course for final-year PharmD students from 2 consecutive academic years (2007-2008 and 2008-2009). ... enabling students to demonstrate advanced drug literature evaluation and verbal presentations skills through the development and delivery of a 1-hour presentation ...

Drug Price Competition and Patent Term Restoration Act of 1984 (Hatch-Waxman Amendments) First statutory provisions expressly pertaining to generic drugs. Created the basic scheme under which ...

introduced to information and statistics on drugs and alcohol in 6th and 7th grade health curriculum.

Procedure:

1. Students will draw a name of a drug from a bag.

2. Students that get the same drug will be partners in researching, creating their presentation, and presenting their information.

Prepare the response in the format agreed on. Meet with your preceptor to review the completed drug information request. Systematic Approach to Answering Drug Information Requests. Step 1: Obtain background information. Before you can answer a drug information request, it is imperative to clearly understand the question and the circumstances ...

UNC ESOP Office of Experiential Education Seminar Presentation Evaluation Form (5.10) Student name: Date: Presentation: Case Therapeutic discussion Other Evaluator: Total time of Presentation: Key: Needs significant ... Drug Therapy: Drug therapy is described thoroughly; depth of discussion is beyond that

Presenter exhibited a good understanding of topic. 4. Presenter was well-prepared. 5. Presenter spoke clearly/effectively. 6. Time for presentation used effectively. 7. Slides enhanced presentation.

Tips for Creating an Effective Presentation [PDF] Writing Test Questions and Answers for CE Activities (optional if requested) [PDF] Submit a Proposal for the Midyear Clinical Meeting. Call for Educational Session Proposals. Presentation Skills. Presentation Skills Checklist [PDF] Additional Resources. ACPE Standards for Educational Activities

Powerpoint Presentations: Advanced Roadside Impaired Driving Enforcement. Sessions 1-5 individual PowerPoints (ZIP format) Powerpoint Presentations: Advanced Roadside Impaired Driving Enforcement. Sessions 6-9 Individual PowerPoints (ZIP format) Drug Evaluation and Classification (Preliminary School): Instructor Guide. PDF, 33.1 MB