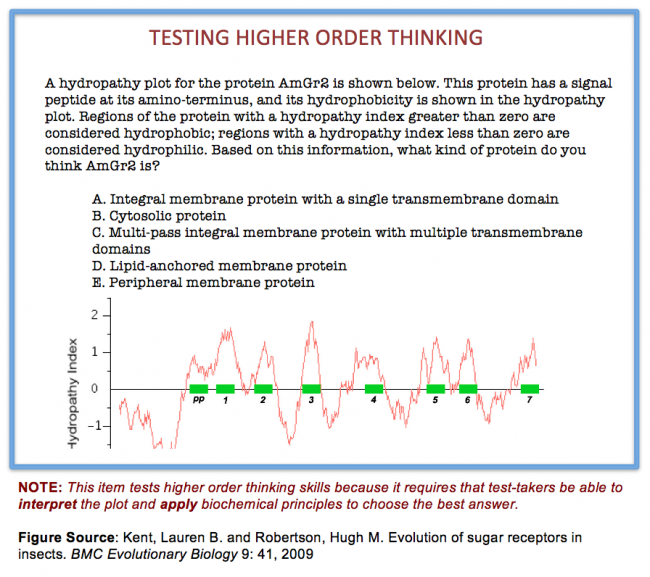

Essay-type tests are not reliable because

A. Their answers are different

B. Their results are different

C. Their checking is affected by examiner's mood

D. Their responding styles are different

Answer: Option C


Related Questions on Teaching and Research

Most important work of teacher is

A. To organize teaching work

B. To deliver lecture in class

C. To take care of children

D. To evaluate the students

A teacher should be

B. Diligent

D. Punctual

Environmental education should be taught in schools because

A. It will affect environmental pollution

B. It is important part of life

C. It will provide job to teachers

D. We cannot escape from environment

At primary level, it is better to teach in mother language because

A. It develops self-confidence in children

B. It makes learning easy

C. It is helpful in intellectual development

D. It helps children in learning in natural atmosphere


Your Article Library

Essay test: types, advantages and limitations | statistics.


After reading this article you will learn about: 1. Introduction to Essay Test, 2. Types of Essay Test, 3. Advantages, 4. Limitations, 5. Suggestions.

Introduction to Essay Test:

The essay tests are still commonly used tools of evaluation, despite the increasingly wider applicability of the short answer and objective type questions.

There are certain outcomes of learning (e.g., organising, summarising, integrating ideas and expressing in one’s own way) which cannot be satisfactorily measured through objective type tests. The importance of essay tests lies in the measurement of such instructional outcomes.

An essay test may give full freedom to the students to write any number of pages. The required response may vary in length. An essay type question requires the pupil to plan his own answer and to explain it in his own words. The pupil exercises considerable freedom to select, organise and present his ideas. Essay type tests provide a better indication of pupil’s real achievement in learning. The answers provide a clue to nature and quality of the pupil’s thought process.

That is, we can assess how the pupil presents his ideas (whether his manner of presentation is coherent, logical and systematic) and how he concludes. In other words, the answer of the pupil reveals the structure, dynamics and functioning of pupil’s mental life.

The essay questions are generally thought to be the traditional type of questions which demand lengthy answers. They are not amenable to objective scoring as they give scope for halo-effect, inter-examiner variability and intra-examiner variability in scoring.

Types of Essay Test:

There can be many types of essay tests:

Some of these are given below with examples from different subjects:

1. Selective Recall.

e.g. What was the religious policy of Akbar?

2. Evaluative Recall.

e.g. Why did the First War of Independence in 1857 fail?

3. Comparison of two things—on a single designated basis.

e.g. Compare the contributions made by Dalton and Bohr to Atomic theory.

4. Comparison of two things—in general.

e.g. Compare Early Vedic Age with the Later Vedic Age.

5. Decision—for or against.

e.g. Which type of examination do you think is more reliable? Oral or Written. Why?

6. Causes or effects.

e.g. Discuss the effects of environmental pollution on our lives.

7. Explanation of the use or exact meaning of some phrase in a passage or a sentence.

e.g., Joint Stock Company is an artificial person. Explain ‘artificial person’ bringing out the concepts of Joint Stock Company.

8. Summary of some unit of the text or of some article.

9. Analysis

e.g. What was the role played by Mahatma Gandhi in India’s freedom struggle?

10. Statement of relationship.

e.g. Why is knowledge of Botany helpful in studying agriculture?

11. Illustration or examples (your own) of principles in science, language, etc.

e.g. Illustrate the correct use of subject-verb position in an interrogative sentence.

12. Classification.

e.g. Classify the following into Physical change and Chemical change with explanation. Water changes to vapour; Sulphuric Acid and Sodium Hydroxide react to produce Sodium Sulphate and Water; Rusting of Iron; Melting of Ice.

13. Application of rules or principles in given situations.

e.g. If you sat halfway between the middle and one end of a see-saw, would a person sitting on the other end have to be heavier or lighter than you in order to make the see-saw balance in the middle? Why?

14. Discussion.

e.g. Partnership is a relationship between persons who have agreed to share the profits of a business carried on by all or any of them acting for all. Discuss the essentials of partnership on the basis of this definition.

15. Criticism—as to the adequacy, correctness, or relevance—of a printed statement or a classmate’s answer to a question on the lesson.

e.g. What is wrong with the following statement?

The Prime Minister is the sovereign Head of State in India.

16. Outline.

e.g. Outline the steps required in computing the compound interest if the principal amount, rate of interest and time period are given as P, R and T respectively.

17. Reorganization of facts.

e.g. The student is asked to interview some persons and find out their opinion on the role of UN in world peace. In the light of data thus collected he/she can reorganise what is given in the text book.

18. Formulation of questions-problems and questions raised.

e.g. After reading a lesson the pupils are asked to raise related problems- questions.

19. New methods of procedure

e.g. Can you solve this mathematical problem by using another method?

Advantages of the Essay Tests:

1. It is relatively easier to prepare and administer a six-question extended-response essay test than to prepare and administer a comparable 60-item multiple-choice test.

2. It is the only means that can assess an examinee’s ability to organise and present his ideas in a logical and coherent fashion.

3. It can be successfully employed for practically all the school subjects.

4. Some of the objectives, such as the ability to organise ideas effectively, the ability to criticise or justify a statement, the ability to interpret, etc., can be best measured by this type of test.

5. Logical thinking and critical reasoning, systematic presentation, etc. can be best developed by this type of test.

6. It helps to induce good study habits such as making outlines and summaries, organising the arguments for and against, etc.

7. The students can show their initiative, the originality of their thought and the fertility of their imagination as they are permitted freedom of response.

8. The responses of the students need not be completely right or wrong. All degrees of comprehensiveness and accuracy are possible.

9. It largely eliminates guessing.

10. They are valuable in testing the functional knowledge and power of expression of the pupil.

Limitations of Essay Tests:

1. One of the serious limitations of the essay tests is that these tests do not give scope for larger sampling of the content. You cannot sample the course content so well with six lengthy essay questions as you can with 60 multiple-choice test items.

2. Such tests encourage selective reading and emphasise cramming.

3. Moreover, scoring may be affected by spelling, good handwriting, coloured ink, neatness, grammar, length of the answer, etc.

4. The long-answer type questions are less valid and less reliable, and as such they have little predictive value.

5. It requires excessive time on the part of students to write; for the assessor, reading essays is very time-consuming and laborious.

6. It can be assessed only by a teacher or competent professionals.

7. Improper and ambiguous wording handicaps both the students and valuers.

8. Mood of the examiner affects the scoring of answer scripts.

9. There is a halo effect: judgement biased by previous impressions.

10. The scores may be affected by the examiner’s personal bias or partiality for a particular point of view, his way of understanding the question, the weightage he gives to different aspects of the answer, favouritism and nepotism, etc.

Thus, the potential disadvantages of essay type questions are :

(i) Poor predictive validity,

(ii) Limited content sampling,

(iii) Score unreliability, and

(iv) Scoring constraints.

Suggestions for Improving Essay Tests:

The teacher can sometimes, through essay tests, gain improved insight into a student’s abilities, difficulties and ways of thinking and thus have a basis for guiding his/her learning.

(A) While Framing Questions:

1. Give adequate time and thought to the preparation of essay questions, so that they can be re-examined, revised and edited before they are used. This would increase the validity of the test.

2. The item should be so written that it will elicit the type of behaviour the teacher wants to measure. If one is interested in measuring understanding, he should not ask a question that will elicit an opinion; e.g.,

“What do you think of Buddhism in comparison to Jainism?”

3. Use words which themselves give directions e.g. define, illustrate, outline, select, classify, summarise, etc., instead of discuss, comment, explain, etc.

4. Give specific directions to students to elicit the desired response.

5. Indicate clearly the value of the question and the time suggested for answering it.

6. Do not provide optional questions in an essay test because—

(i) It is difficult to construct questions of equal difficulty;

(ii) Students do not have the ability to select those questions which they will answer best;

(iii) A good student may be penalised because he is challenged by the more difficult and complex questions.

7. Prepare and use a relatively large number of questions requiring short answers rather than just a few questions involving long answers.

8. Do not start essay questions with such words as list, who, what, whether. If we begin the questions with such words, they are likely to be short-answer questions and not essay questions, as we have defined the term.

9. Adapt the length of the response and complexity of the question and answer to the maturity level of the students.

10. The wording of the questions should be clear and unambiguous.

11. It should be a power test rather than a speed test. Allow a liberal time limit so that the essay test does not become a test of speed in writing.

12. Supply the necessary training to the students in writing essay tests.

13. Questions should be graded from simple to complex so that all the testees can answer at least a few questions.

14. Essay questions should provide value points and marking schemes.

(B) While Scoring Questions:

1. Prepare a marking scheme, suggesting the best possible answer and the weightage given to the various points of this model answer. Decide in advance which factors will be considered in evaluating an essay response.

2. While assessing the essay response, one must:

a. Use appropriate methods to minimise bias;

b. Pay attention only to the significant and relevant aspects of the answer;

c. Be careful not to let personal idiosyncrasies affect assessment;

d. Apply a uniform standard to all the papers.

3. The examinee’s identity should be concealed from the scorer. In this way we can avoid the “halo effect” or bias which may affect the scoring.

4. Check your marking scheme against actual responses.

5. Once the assessment has begun, the standard should not be changed, nor should it vary from paper to paper or reader to reader. Be consistent in your assessment.

6. Grade only one question at a time for all papers. This will help you in minimising the halo effect, in becoming thoroughly familiar with just one set of scoring criteria, and in concentrating completely on them.

7. The mechanics of expression (legibility, spelling, punctuation, grammar) should be judged separately from what the student writes, i.e. the subject matter content.

8. If possible, have two independent readings of the test and use the average as the final score.
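The marking scheme in point 1 and the two independent readings in point 8 can be sketched together in a few lines of Python. This is only an illustration under stated assumptions: the value points, their weights, and the names `MARKING_SCHEME`, `score_response` and `final_score` are hypothetical and not taken from any actual examination scheme.

```python
from statistics import mean

# Hypothetical marking scheme: value point -> maximum marks (assumed weights).
MARKING_SCHEME = {
    "states the main policy": 4,
    "gives supporting examples": 3,
    "draws a conclusion": 3,
}


def score_response(awarded: dict[str, float]) -> float:
    """Total one reader's marks, capping each value point at its maximum."""
    total = 0.0
    for point, max_marks in MARKING_SCHEME.items():
        marks = awarded.get(point, 0.0)
        total += min(max(marks, 0.0), max_marks)
    return total


def final_score(reader_a: dict[str, float], reader_b: dict[str, float]) -> float:
    """Average two independent readings of the same answer."""
    return mean([score_response(reader_a), score_response(reader_b)])


# Two readers mark the same (anonymous) answer script independently.
reader_a = {"states the main policy": 4, "gives supporting examples": 2, "draws a conclusion": 2}
reader_b = {"states the main policy": 3, "gives supporting examples": 2, "draws a conclusion": 2}
print(final_score(reader_a, reader_b))  # 7.5
```

Fixing the value points and their maximum marks before any script is read is what keeps the standard from drifting from paper to paper; the averaging step simply combines the two readings.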


Creating and Scoring Essay Tests


- M.Ed., Curriculum and Instruction, University of Florida

- B.A., History, University of Florida

Essay tests are useful for teachers when they want students to select, organize, analyze, synthesize, and/or evaluate information. In other words, they rely on the upper levels of Bloom's Taxonomy. There are two types of essay questions: restricted and extended response.

- Restricted Response - These essay questions limit what the student will discuss in the essay based on the wording of the question. For example, "State the main differences between John Adams' and Thomas Jefferson's beliefs about federalism," is a restricted response. What the student is to write about has been expressed to them within the question.

- Extended Response - These allow students to select what they wish to include in order to answer the question. For example, "In Of Mice and Men, was George's killing of Lennie justified? Explain your answer." The student is given the overall topic, but they are free to use their own judgment and integrate outside information to help support their opinion.

Student Skills Required for Essay Tests

Before expecting students to perform well on either type of essay question, we must make sure that they have the required skills to excel. Following are four skills that students should have learned and practiced before taking essay exams:

- The ability to select appropriate material from the information learned in order to best answer the question.

- The ability to organize that material in an effective manner.

- The ability to show how ideas relate and interact in a specific context.

- The ability to write effectively in both sentences and paragraphs.

Constructing an Effective Essay Question

Following are a few tips to help in the construction of effective essay questions:

- Begin with the lesson objectives in mind. Make sure to know what you wish the student to show by answering the essay question.

- Decide if your goal requires a restricted or extended response. In general, if you wish to see if the student can synthesize and organize the information that they learned, then restricted response is the way to go. However, if you wish them to judge or evaluate something using the information taught during class, then you will want to use the extended response.

- If you are including more than one essay, be cognizant of time constraints. You do not want to punish students because they ran out of time on the test.

- Write the question in a novel or interesting manner to help motivate the student.

- State the number of points that the essay is worth. You can also provide them with a time guideline to help them as they work through the exam.

- If your essay item is part of a larger objective test, make sure that it is the last item on the exam.

Scoring the Essay Item

One of the drawbacks of essay tests is that they lack reliability. Even when teachers grade essays with a well-constructed rubric, subjective decisions are made. Therefore, it is important to be as consistent as possible when scoring your essay items. Here are a few tips to help improve reliability in grading:

- Determine whether you will use a holistic or analytic scoring system before you write your rubric. With the holistic grading system, you evaluate the answer as a whole, rating papers against each other. With the analytic system, you list specific pieces of information and award points for their inclusion.

- Prepare the essay rubric in advance. Determine what you are looking for and how many points you will be assigning for each aspect of the question.

- Avoid looking at names. Some teachers have students put numbers on their essays to try and help with this.

- Score one item at a time. This helps ensure that you use the same thinking and standards for all students.

- Avoid interruptions when scoring a specific question. Again, consistency will be increased if you grade the same item on all the papers in one sitting.

- If an important decision like an award or scholarship is based on the score for the essay, obtain two or more independent readers.

- Beware of negative influences that can affect essay scoring. These include handwriting and writing style bias, the length of the response, and the inclusion of irrelevant material.

- Review papers that are on the borderline a second time before assigning a final grade.
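The last tip, re-reading borderline papers, is easy to apply by hand, but a rough Python sketch shows one way to make "borderline" explicit. The grade cutoffs, the two-point margin, and the function names are all assumptions chosen for illustration; papers are keyed by number rather than by name, in line with the tip about avoiding names.

```python
# Hypothetical grade cutoffs (minimum score for each grade) and review margin.
GRADE_CUTOFFS = {"A": 90, "B": 80, "C": 70, "D": 60}
REVIEW_MARGIN = 2  # assumed: re-read papers within 2 points of a cutoff


def is_borderline(score: float) -> bool:
    """Return True if the score lies within REVIEW_MARGIN of any cutoff."""
    return any(abs(score - cutoff) <= REVIEW_MARGIN for cutoff in GRADE_CUTOFFS.values())


def papers_to_reread(scores: dict[str, float]) -> list[str]:
    """List the anonymous paper numbers whose scores are borderline."""
    return sorted(pid for pid, score in scores.items() if is_borderline(score))


# Papers are identified by number, not by student name.
scores = {"017": 79, "023": 85, "031": 61, "042": 74}
print(papers_to_reread(scores))  # ['017', '031']
```

A wider or narrower margin is a judgment call; the point is only to decide it before grading rather than paper by paper.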


Improving Your Test Questions


- Choosing between Objective and Subjective Test Items

- Multiple-Choice Test Items

- True-False Test Items

- Matching Test Items

- Completion Test Items

- Essay Test Items

- Problem Solving Test Items

- Performance Test Items

- Two Methods for Assessing Test Item Quality

- Assistance Offered by The Center for Innovation in Teaching and Learning (CITL)

- References for Further Reading

I. Choosing Between Objective and Subjective Test Items

There are two general categories of test items: (1) objective items which require students to select the correct response from several alternatives or to supply a word or short phrase to answer a question or complete a statement; and (2) subjective or essay items which permit the student to organize and present an original answer. Objective items include multiple-choice, true-false, matching and completion, while subjective items include short-answer essay, extended-response essay, problem solving and performance test items. For some instructional purposes one or the other item type may prove more efficient and appropriate. To begin our discussion of the relative merits of each type of test item, test your knowledge of these two item types by answering the following questions.

| Statement (circle the correct answer) | True | False |
|---|---|---|
| 1. Essay exams are easier to construct than objective exams. | T | F |
| 2. Essay exams require more thorough student preparation and study time than objective exams. | T | F |
| 3. Essay exams require writing skills where objective exams do not. | T | F |
| 4. Essay exams teach a person how to write. | T | F |
| 5. Essay exams are more subjective in nature than are objective exams. | T | F |
| 6. Objective exams encourage guessing more so than essay exams. | T | F |
| 7. Essay exams limit the extent of content covered. | T | F |
| 8. Essay and objective exams can be used to measure the same content or ability. | T | F |
| 9. Essay and objective exams are both good ways to evaluate a student's level of knowledge. | T | F |

Quiz Answers

| No. | Answer | Explanation |
|---|---|---|
| 1. | TRUE | Essay items are generally easier and less time consuming to construct than are most objective test items. Technically correct and content appropriate multiple-choice and true-false test items require an extensive amount of time to write and revise. For example, a professional item writer produces only 9-10 good multiple-choice items in a day's time. |
| 2. | ? | According to research findings it is still undetermined whether or not essay tests require or facilitate more thorough (or even different) student study preparation. |
| 3. | TRUE | Writing skills do affect a student's ability to communicate the correct "factual" information through an essay response. Consequently, students with good writing skills have an advantage over students who have difficulty expressing themselves through writing. |
| 4. | FALSE | Essays do not teach a student how to write but they can emphasize the importance of being able to communicate through writing. Constant use of essay tests may encourage the knowledgeable but poor writing student to improve his/her writing ability in order to improve performance. |
| 5. | TRUE | Essays are more subjective in nature due to their susceptibility to scoring influences. Different readers can rate identical responses differently, the same reader can rate the same paper differently over time, the handwriting, neatness or punctuation can unintentionally affect a paper's grade and the lack of anonymity can affect the grading process. While impossible to eliminate, scoring influences or biases can be minimized through procedures discussed later in this guide. |
| 6. | ? | Both item types encourage some form of guessing. Multiple-choice, true-false and matching items can be correctly answered through blind guessing, yet essay items can be responded to satisfactorily through well written bluffing. |
| 7. | TRUE | Due to the extent of time required by the student to respond to an essay question, only a few essay questions can be included on a classroom exam. Consequently, a larger number of objective items can be tested in the same amount of time, thus enabling the test to cover more content. |
| 8. | TRUE | Both item types can measure similar content or learning objectives. Research has shown that students respond almost identically to essay and objective test items covering the same content. Studies by Sax & Collet (1968) and Paterson (1926) conducted forty-two years apart reached the same conclusion: "...there seems to be no escape from the conclusions that the two types of exams are measuring identical things" (Paterson, 1926, p. 246). This conclusion should not be surprising; after all, a well written essay item requires that the student (1) have a store of knowledge, (2) be able to relate facts and principles, and (3) be able to organize such information into a coherent and logical written expression, whereas an objective test item requires that the student (1) have a store of knowledge, (2) be able to relate facts and principles, and (3) be able to organize such information into a coherent and logical choice among several alternatives. |
| 9. | TRUE | Both objective and essay test items are good devices for measuring student achievement. However, as seen in the previous quiz answers, there are particular measurement situations where one item type is more appropriate than the other. Following is a set of recommendations for using either objective or essay test items: (Adapted from Robert L. Ebel, Essentials of Educational Measurement, 1972, p. 144). |

Sax, G., & Collet, L. S. (1968). An empirical comparison of the effects of recall and multiple-choice tests on student achievement. Journal of Educational Measurement, 5(2), 169–173. doi:10.1111/j.1745-3984.1968.tb00622.x

Paterson, D. G. (1926). Do new and old type examinations measure different mental functions? School and Society, 24, 246–248.

When to Use Essay or Objective Tests

Essay tests are especially appropriate when:

- the group to be tested is small and the test is not to be reused.

- you wish to encourage and reward the development of student skill in writing.

- you are more interested in exploring the student's attitudes than in measuring his/her achievement.

- you are more confident of your ability as a critical and fair reader than as an imaginative writer of good objective test items.

Objective tests are especially appropriate when:

- the group to be tested is large and the test may be reused.

- highly reliable test scores must be obtained as efficiently as possible.

- impartiality of evaluation, absolute fairness, and freedom from possible test scoring influences (e.g., fatigue, lack of anonymity) are essential.

- you are more confident of your ability to express objective test items clearly than of your ability to judge essay test answers correctly.

- there is more pressure for speedy reporting of scores than for speedy test preparation.

Either essay or objective tests can be used to:

- measure almost any important educational achievement a written test can measure.

- test understanding and ability to apply principles.

- test ability to think critically.

- test ability to solve problems.

- test ability to select relevant facts and principles and to integrate them toward the solution of complex problems.

In addition to the preceding suggestions, it is important to realize that certain item types are better suited than others for measuring particular learning objectives. For example, learning objectives requiring the student to demonstrate or to show may be better measured by performance test items, whereas objectives requiring the student to explain or to describe may be better measured by essay test items. Matching the expectations of a learning objective with a suitable item type can help you select an appropriate kind of test item for your classroom exam and can also provide a higher degree of test validity (i.e., testing what is supposed to be tested). To further illustrate, several sample learning objectives and appropriate test items are provided below.

| Learning Objectives | Most Suitable Test Item |
|---|---|
| The student will be able to categorize and name the parts of the human skeletal system. | Objective Test Item (M-C, T-F, Matching) |
| The student will be able to critique and appraise another student's English composition on the basis of its organization. | Essay Test Item (Extended-Response) |
| The student will demonstrate safe laboratory skills. | Performance Test Item |
| The student will be able to cite four examples of satire that Twain uses in . | Essay Test Item (Short-Answer) |
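The pairing of objective verbs with item types in the table above can be sketched as a simple lookup. This is only an illustration: the dictionary `SUGGESTED_ITEM_TYPE` and the function `suggest_item_type` are hypothetical names, and the verb list covers only the verbs mentioned in this section, not a complete taxonomy.

```python
# Hypothetical lookup pairing the action verb of a learning objective with
# the item type this section suggests for it. Illustrative, not exhaustive.
SUGGESTED_ITEM_TYPE = {
    "categorize": "objective",
    "name": "objective",
    "critique": "essay (extended-response)",
    "appraise": "essay (extended-response)",
    "explain": "essay",
    "describe": "essay",
    "cite": "essay (short-answer)",
    "demonstrate": "performance",
    "show": "performance",
}


def suggest_item_type(objective: str) -> str:
    """Return the item type for the first known verb found in the objective."""
    for word in objective.lower().split():
        verb = word.strip(".,;:()")  # drop punctuation attached to the word
        if verb in SUGGESTED_ITEM_TYPE:
            return SUGGESTED_ITEM_TYPE[verb]
    return "no suggestion"


print(suggest_item_type("The student will demonstrate safe laboratory skills."))
```

A lookup like this is only a starting point; the instructor still has to judge whether the chosen item type really measures what the objective describes.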

After you have decided to use an objective exam, an essay exam, or a combination of both, the next step is to select the kind(s) of objective or essay item that you wish to include on the exam. To help you make such a choice, the different kinds of objective and essay items are presented in the following section. The various kinds of items are briefly described and compared to one another in terms of their advantages and limitations for use. Also presented is a set of general suggestions for the construction of each item variation.

II. Suggestions for Using and Writing Test Items

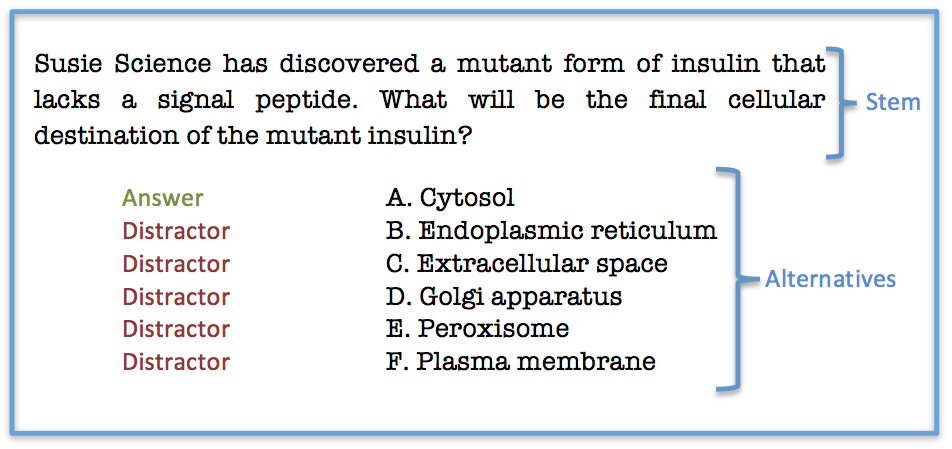

The multiple-choice item consists of two parts: (a) the stem, which identifies the question or problem and (b) the response alternatives. Students are asked to select the one alternative that best completes the statement or answers the question. For example:

Sample Multiple-Choice Item

| (a) | ||

| (b) | ||

*correct response

Advantages in Using Multiple-Choice Items

Multiple-choice items can provide...

- versatility in measuring all levels of cognitive ability.

- highly reliable test scores.

- scoring efficiency and accuracy.

- objective measurement of student achievement or ability.

- a wide sampling of content or objectives.

- a reduced guessing factor when compared to true-false items.

- different response alternatives which can provide diagnostic feedback.
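The "reduced guessing factor" in the list above can be made concrete with a little arithmetic. The sketch below assumes that a blind guesser picks every alternative with equal probability and that each item has exactly one correct alternative; the function name `expected_chance_score` and the 40-item exam are hypothetical.

```python
def expected_chance_score(num_items: int, num_alternatives: int) -> float:
    """Expected number of items answered correctly by blind guessing alone."""
    if num_alternatives < 2:
        raise ValueError("an item needs at least two alternatives")
    # Each item is answered correctly with probability 1 / num_alternatives.
    return num_items / num_alternatives


# Hypothetical 40-item exams: true-false items have 2 alternatives;
# the multiple-choice items here are assumed to have 4.
true_false = expected_chance_score(40, 2)
multiple_choice = expected_chance_score(40, 4)

print(true_false, multiple_choice)  # 20.0 10.0
```

Under these assumptions, blind guessing yields half the items on a true-false exam but only a quarter of the items on a four-alternative multiple-choice exam.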

Limitations in Using Multiple-Choice Items

Multiple-choice items...

- are difficult and time consuming to construct.

- lead an instructor to favor simple recall of facts.

- place a high degree of dependence on the student's reading ability and instructor's writing ability.

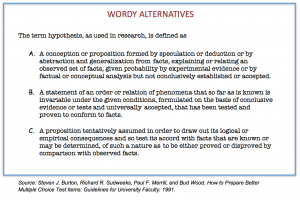

Suggestions For Writing Multiple-Choice Test Items

| 1. When possible, state the stem as a direct question rather than as an incomplete statement. | |

| Undesirable: | |

| Desirable: | |

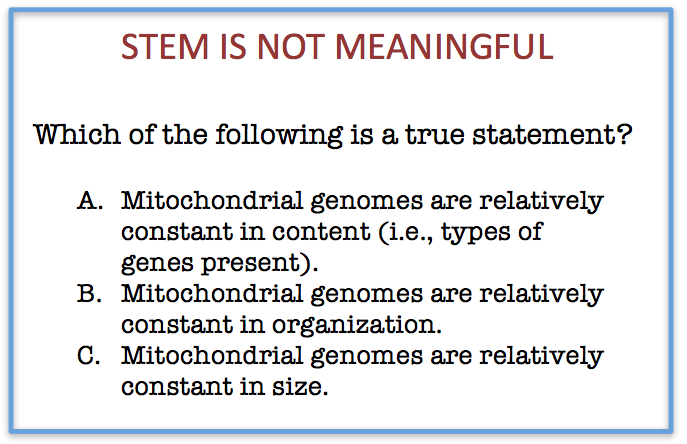

| 2. Present a definite, explicit and singular question or problem in the stem. | |

| Undesirable: | |

| Desirable: | |

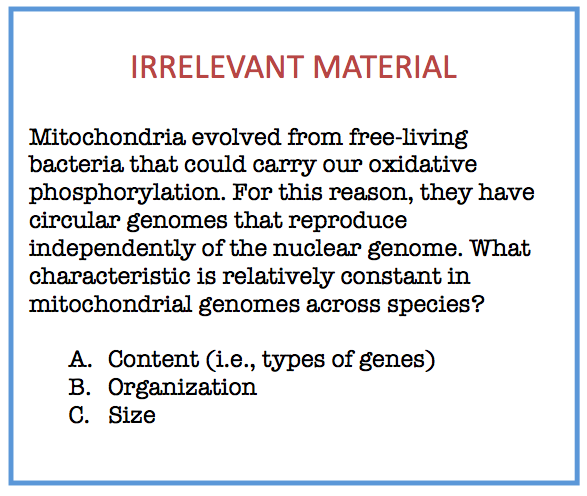

| 3. Eliminate excessive verbiage or irrelevant information from the stem. | |

| Undesirable: | |

| Desirable: | |

| 4. Include in the stem any word(s) that might otherwise be repeated in each alternative. | |

| Undesirable: | |

Item Alternatives


The key issue is the subjective nature of grading and the potential for examiner bias, not the variety in student responses. Conclusion. Essay type tests are not reliable mainly because their checking is affected by the examiner's mood, biases, and personal judgments, leading to inconsistent and unreliable scoring.

11. It should be a power test rather than a speed test. Allow a liberal time limit so that the essay test does not become a test of speed in writing. 12. Supply the necessary training to the students in writing essay tests. 13. Questions should be graded from simple to complex so that all the testees can answer at least a few questions. 14.

One of the real limitations of the essay test in actual practice is that it is not measuring what it is assumed to measure. Doty analyzed the essay test items and answers for 214 different items by teachers in fifth and sixth grades and found that only twelve items, less than 6 percent, "unquestionably measured something.

the essay test produced the essays in testing conditions for Advanced Reading and Writing class. Research Instruments The writing samples. Forty-four scripts of one essay sample written in testing conditions in order to achieve the objective: "By means of the awareness of essay types, essay writers will analyze, synthesize

A reliable and valid assessment of the writing skill has been a longstanding issue in language testing. The nature of the writing ability, the qualities of good writing and the ... Like other types of direct tests, essay exams are subject to subjective assessment and reliability problems. Differences among raters concerning which

the test—but not equally well on every edition of the test. When a classroom teacher gives the students an essay test, typically there is only one rater—the teacher. That rater usually is the only user of the scores and is not concerned about whether the ratings would be consistent with those of another rater. But when an essay test is part

Avoid looking at names. Some teachers have students put numbers on their essays to try and help with this. Score one item at a time. This helps ensure that you use the same thinking and standards for all students. Avoid interruptions when scoring a specific question.

The essay test is probably the most popular of all types of teacher-made tests. In general, a classroom essay test consists of a small number of questions to which the student is expected to demonstrate his/her ability to (a) recall factual knowledge, (b) organize this knowledge and (c) present the knowledge in a logical, integrated answer to ...

Each test consisted of an essay and multiple-choice part. Test reliabilities tended to be higher for the essay parts. The grader reliabilities of the essay parts were high, but there were ...

The present study was designed to test the reliability of traditional essay type questions and to see the effect of 'structuring' on the reliability of those questions. Sixty‐two final MBBS students were divided into two groups of 31 each. ... Group A was administered a 2‐hour test containing five traditional essay questions picked up ...

If reliable reading is to be accomplished, the essay-test questions must be so formulated that a definite, restricted type of answer is required. This statement does not mean that the questions must test simply ability to recall memorized facts (although typical questions, in essay or objective tests, do require chiefly rote memory);

A test with a reliability of .60 is probably satisfactory for group comparisons, but individual scores are of little value unless the reliability is at least .80. The reliability is, however, much higher than the reliability of an English essay examination in which the conditions are not carefully controlled.
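The two cut-offs quoted above can be written as a small check. This is a sketch only: the function name `interpret_reliability` is hypothetical, treating .60 and .80 as hard thresholds is a simplifying assumption (the passage itself says "probably satisfactory"), and the coefficient is assumed to lie between 0 and 1.

```python
def interpret_reliability(r: float) -> str:
    """Classify a reliability coefficient using the .60 and .80 rules of thumb."""
    if not 0.0 <= r <= 1.0:
        raise ValueError("reliability is assumed to lie between 0 and 1")
    if r >= 0.80:
        return "usable for individual scores"
    if r >= 0.60:
        return "probably satisfactory for group comparisons only"
    return "below both rules of thumb"


for coefficient in (0.55, 0.60, 0.80):
    print(coefficient, "->", interpret_reliability(coefficient))
```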

Do not give either the examinee or the grader too much freedom in Essay and Short-Answer Questions (continued from page one) (continued on page three) Editor's Note In the October issue of The Learning Link, the article, Helpful Tips for Creating Valid and Reliable Tests: Writing Multiple Choice Questions was erroneously listed as co-authored.

Reliability is a key concept in research that measures how consistent and trustworthy the results are. In this article, you will learn about the four types of reliability in research: test-retest, inter-rater, parallel forms, and internal consistency. You will also find definitions and examples of each type, as well as tips on how to improve reliability in your own research.

This workbook is the first in a series of three workbooks designed to improve the development and use of effective essay questions. It focuses on the writing and use of essay questions. The second booklet in the series focuses on scoring student responses to essay questions.

Reliability is about the consistency of a measure, and validity is about the accuracy of a measure. It's important to consider reliability and validity when you are creating your research design, planning your methods, and writing up your results, especially in quantitative research. Failing to do so can lead to several types of research ...

Background & Aims: Related studies have shown that students' learning is directly influenced by assessment and evaluation methods. Many researchers believe that essay tests can assess the quality of students' learning; however, scoring essay tests is a big challenge, which makes them unreliable in many cases. Unlike objective tests that measure the examinees' ability independent ...

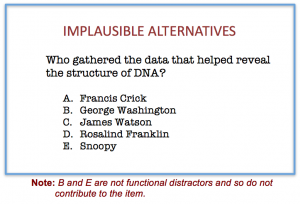

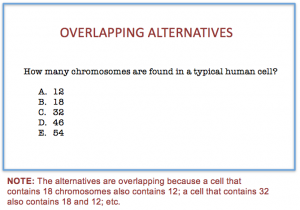

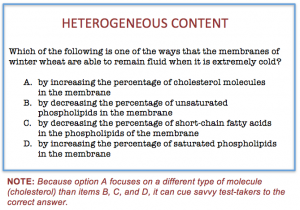

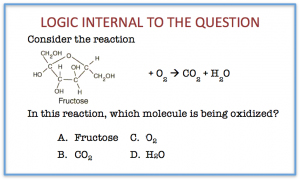

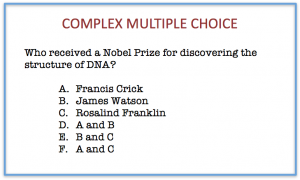

1. Avoid complex multiple choice items, in which some or all of the alternatives consist of different combinations of options. As with "all of the above" answers, a sophisticated test-taker can use partial knowledge to achieve a correct answer. 2. Keep the specific content of items independent of one another.

Short Answer & Essay Tests. Strategies, Ideas, and Recommendations from the Faculty Development Literature. General Strategies. Do not use essay questions to evaluate understanding that could be tested with multiple-choice questions. Save essay questions for testing higher levels of thought (application, synthesis, and evaluation), not recall ...

Finding Common Errors. Here are some common proofreading issues that come up for many writers. For grammatical or spelling errors, try underlining or highlighting words that often trip you up. On a sentence level, take note of which errors you make frequently. Also make note of common sentence errors you have such as run-on sentences, comma ...

about the question, and they do not want you to bring in other sources. • Consider your audience. It can be difficult to know how much background information or context to provide when you are writing a paper. Here are some useful guidelines: o If you're writing a research paper, do not assume that your reader has read