Survey Research: Definition, Examples and Methods

Survey Research is a quantitative research method used for collecting data from a set of respondents. It has been perhaps one of the most used methodologies in the industry for several years due to the multiple benefits and advantages that it has when collecting and analyzing data.

In this article, you will learn everything about survey research, such as types, methods, and examples.

Survey Research Definition

Survey Research is defined as the process of conducting research using surveys that researchers send to survey respondents. The data collected from surveys is then statistically analyzed to draw meaningful research conclusions. In the 21st century, every organization is eager to understand what its customers think about its products or services and to make better business decisions. Researchers can conduct research in multiple ways, but surveys are proven to be one of the most effective and trustworthy research methods. An online survey is a method for extracting information about a significant business matter from an individual or a group of individuals. It consists of structured survey questions that motivate the participants to respond. Credible survey research can give these businesses access to a vast information bank. Media organizations, other companies, and even governments rely on survey research to obtain accurate data.

The traditional definition of survey research is a quantitative method for collecting information from a pool of respondents by asking multiple survey questions. This research type includes the recruitment of individuals and the collection and analysis of data. It’s useful for researchers who aim to communicate new features or trends to their respondents.

Generally, it’s the primary step towards obtaining quick information about mainstream topics; more rigorous and detailed quantitative research methods like surveys/polls, or qualitative research methods like focus groups/on-call interviews, can follow. There are many situations where researchers can conduct research using a blend of both qualitative and quantitative strategies.

Survey Research Methods

Survey research methods can be derived based on two critical factors: Survey research tool and time involved in conducting research. There are three main survey research methods, divided based on the medium of conducting survey research:

- Online/Email: Online survey research is one of the most popular survey research methods today. The cost involved in online survey research is minimal, and the responses gathered are highly accurate.

- Phone: Survey research conducted over the telephone (CATI survey) can be useful in collecting data from a more extensive section of the target population. Phone surveys are likely to cost more and take more time than other mediums.

- Face-to-face: Researchers conduct face-to-face in-depth interviews in situations where there is a complicated problem to solve. The response rate for this method is the highest, but it can be costly.

Further, based on the time taken, survey research can be classified into two methods:

- Longitudinal survey research: Longitudinal survey research involves conducting survey research over a continuum of time, which can be spread across years or decades. The data collected using this survey research method from one time period to another is qualitative or quantitative. Respondent behavior, preferences, and attitudes are continuously observed over time to analyze reasons for a change in behavior or preferences. For example, suppose a researcher intends to learn about the eating habits of teenagers. In that case, they will follow a sample of teenagers over a considerable period to ensure that the collected information is reliable. Often, cross-sectional survey research follows a longitudinal study.

- Cross-sectional survey research: Researchers conduct a cross-sectional survey to collect insights from a target audience at a particular point in time. This survey research method is implemented in various sectors such as retail, education, healthcare, SME businesses, etc. Cross-sectional studies can be either descriptive or analytical. The method is quick and helps researchers collect information in a brief period. Researchers rely on the cross-sectional survey research method in situations where descriptive analysis of a subject is required.

Survey research is also divided according to the sampling methods used to form samples for research: probability and non-probability sampling. Ideally, every individual in a population should have an equal chance of being part of the survey research sample. Probability sampling is a sampling method in which the researcher chooses the elements based on probability theory. There are various probability sampling methods, such as simple random sampling, systematic sampling, cluster sampling, stratified random sampling, etc. Non-probability sampling is a sampling method where the researcher uses their knowledge and experience to form samples.

The various non-probability sampling techniques are:

- Convenience sampling

- Snowball sampling

- Consecutive sampling

- Judgemental sampling

- Quota sampling
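Three of the probability sampling methods named above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a production sampler: the function names, the `frame` list, and its `region` field are all hypothetical, and the systematic version assumes the sample size is no larger than the population.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Draw n members so that every member is equally likely to be chosen."""
    rng = random.Random(seed)
    return rng.sample(population, n)

def systematic_sample(population, n, seed=None):
    """Pick a random start, then take every k-th member (k = len // n)."""
    rng = random.Random(seed)
    k = len(population) // n          # sampling interval; assumes n <= len(population)
    start = rng.randrange(k)          # random offset inside the first interval
    return [population[start + i * k] for i in range(n)]

def stratified_sample(population, key, fraction, seed=None):
    """Sample the same fraction from each stratum defined by key(member)."""
    rng = random.Random(seed)
    strata = {}
    for member in population:
        strata.setdefault(key(member), []).append(member)
    sample = []
    for members in strata.values():
        size = max(1, round(len(members) * fraction))  # at least one per stratum
        sample.extend(rng.sample(members, size))
    return sample

# Hypothetical sampling frame: 100 respondent IDs, each tagged with a region.
frame = [{"id": i, "region": "north" if i % 4 == 0 else "south"} for i in range(100)]

random_pick = simple_random_sample(frame, 10, seed=1)
systematic_pick = systematic_sample(frame, 10, seed=1)
stratified_pick = stratified_sample(frame, lambda m: m["region"], 0.2, seed=1)
```

Stratifying keeps each region represented in proportion to its size, which a simple random draw only achieves on average.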

Process of implementing survey research methods:

- Decide survey questions: Brainstorm and put together valid survey questions that are grammatically and logically appropriate. Understanding the objective and expected outcomes of the survey helps a lot. There are many surveys where details of responses are not as important as gaining insights about what customers prefer from the provided options. In such situations, a researcher can include multiple-choice questions or closed-ended questions. If researchers need to obtain details about specific issues, they can include open-ended questions in the questionnaire. Ideally, surveys should include a smart balance of open-ended and closed-ended questions. Use survey questions like Likert Scale, Semantic Scale, Net Promoter Score question, etc., to avoid fence-sitting.

- Finalize a target audience: Send out relevant surveys as per the target audience and filter out irrelevant questions as per the requirement. Survey research is most useful when the sample is drawn from the target population. This way, results reflect the desired market and can be generalized to the entire population.

- Send out surveys via decided mediums: Distribute the surveys to the target audience and patiently wait for the feedback and comments; this is the most crucial step of the survey research. The survey needs to be scheduled with the nature of the target audience and its regions in mind. Surveys can be conducted via email, embedded in a website, shared via social media, etc., to gain maximum responses.

- Analyze survey results: Analyze the feedback in real-time and identify patterns in the responses which might lead to a much-needed breakthrough for your organization. GAP, TURF Analysis, Conjoint analysis, Cross tabulation, and many such survey feedback analysis methods can be used to spot and shed light on respondent behavior. Use a good survey analysis software. Researchers can use the results to implement corrective measures to improve customer/employee satisfaction.
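Of the analysis methods listed in the last step, cross tabulation is the simplest to illustrate: it counts how answers to one question split across answers to another. The sketch below assumes responses are stored as dictionaries; the `cross_tabulate` helper and the `age_group` and `would_recommend` fields are hypothetical names chosen for the example.

```python
from collections import Counter

def cross_tabulate(responses, row_key, col_key):
    """Count responses for every (row answer, column answer) pair."""
    counts = Counter((r[row_key], r[col_key]) for r in responses)
    rows = sorted({row for row, _ in counts})
    cols = sorted({col for _, col in counts})
    # Counter returns 0 for pairs that never occurred, so empty cells are filled in.
    return {row: {col: counts[(row, col)] for col in cols} for row in rows}

# Hypothetical survey responses.
responses = [
    {"age_group": "18-34", "would_recommend": "yes"},
    {"age_group": "18-34", "would_recommend": "no"},
    {"age_group": "18-34", "would_recommend": "yes"},
    {"age_group": "35-54", "would_recommend": "no"},
    {"age_group": "35-54", "would_recommend": "no"},
]

table = cross_tabulate(responses, "age_group", "would_recommend")
for row, cells in table.items():
    print(row, cells)
```

Reading across a row shows how one segment answered; comparing rows shows whether segments differ, which is the pattern a researcher would then investigate further.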

Reasons to conduct survey research

The most crucial and integral reason for conducting market research using surveys is that you can collect answers regarding specific, essential questions. You can ask these questions in multiple survey formats as per the target audience and the intent of the survey. Before designing a study, every organization must figure out the objective of carrying this out so that the study can be structured, planned, and executed to perfection.

Questions that need to be on your mind while designing a survey are:

- What is the primary aim of conducting the survey?

- How do you plan to utilize the collected survey data?

- What type of decisions do you plan to take based on the points mentioned above?

There are three critical reasons why an organization must conduct survey research.

- Understand respondent behavior to get solutions to your queries: If you’ve carefully curated a survey, the respondents will provide insights about what they like about your organization as well as suggestions for improvement. To motivate them to respond, you must be very clear about how secure their responses will be and how you will use the answers. This encourages them to be honest in their feedback, opinions, and comments. Online and mobile surveys have earned a reputation for privacy, and because of this, more and more respondents feel free to share their feedback through these mediums.

- Present a medium for discussion: A survey can be the perfect platform for respondents to provide criticism or applause for an organization. Important topics like product quality or quality of customer service etc., can be put on the table for discussion. A way you can do it is by including open-ended questions where the respondents can write their thoughts. This will make it easy for you to correlate your survey to what you intend to do with your product or service.

- Strategy for never-ending improvements: An organization can establish the target audience’s attributes from the pilot phase of survey research. Researchers can use the criticism and feedback received from this survey to improve the product/services. Once the company successfully makes the improvements, it can send out another survey to measure the change in feedback, using the pilot phase as the benchmark. By doing this, the organization can track what was effectively improved and what still needs improvement.
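Measuring change against a pilot benchmark requires a single number that can be computed the same way in every survey round. As one possible choice (an assumption for this example, not a requirement of the approach), the sketch below uses the Net Promoter Score: the percentage of respondents rating 9 or 10 on a 0-10 scale minus the percentage rating 0 to 6. The rating lists are made-up illustration data.

```python
def net_promoter_score(ratings):
    """Return NPS: percent promoters (9-10) minus percent detractors (0-6)."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)

# Hypothetical 0-10 "how likely are you to recommend us?" ratings.
pilot = [6, 7, 9, 4, 8, 10, 5, 6, 9, 7]        # benchmark round
follow_up = [8, 9, 9, 6, 10, 10, 7, 8, 9, 5]   # after improvements

baseline = net_promoter_score(pilot)
current = net_promoter_score(follow_up)
print(f"pilot: {baseline:+.0f}, follow-up: {current:+.0f}, change: {current - baseline:+.0f}")
```

Keeping the question wording and scale identical between rounds matters more than the choice of metric; otherwise the difference reflects the questionnaire rather than the improvement.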

Survey Research Scales

There are four main scales for the measurement of variables:

- Nominal Scale: A nominal scale associates numbers with variables for mere naming or labeling, and the numbers usually have no other relevance. It is the most basic of the four levels of measurement.

- Ordinal Scale: The ordinal scale has an innate order within the variables along with labels. It establishes the rank between the variables of a scale but not the difference value between the variables.

- Interval Scale: The interval scale is a step ahead in comparison to the other two scales. Along with establishing a rank and name of variables, the scale also makes known the difference between the two variables. The only drawback is that there is no fixed start point of the scale, i.e., the actual zero value is absent.

- Ratio Scale: The ratio scale is the most advanced measurement scale, which has variables that are labeled in order and have a calculated difference between variables. In addition to what the interval scale offers, this scale has a fixed starting point, i.e., the actual zero value is present.
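The practical consequence of the four scales is that each one permits different summary statistics: only counting and the mode make sense for nominal data, ordering adds the median, and a mean requires at least an interval scale. The sketch below encodes that widely used convention; the `summarize` function and the sample data are hypothetical.

```python
import statistics

def summarize(values, scale):
    """Return only the summary statistics that are meaningful for the scale."""
    if scale == "nominal":
        # Labels have no order, so only the most frequent value is meaningful.
        return {"mode": statistics.mode(values)}
    if scale == "ordinal":
        # Order exists but distances do not, so report a median that is
        # an actual observed category rather than an average of two.
        return {"mode": statistics.mode(values),
                "median": statistics.median_low(values)}
    if scale in ("interval", "ratio"):
        # Equal distances between values make the mean meaningful.
        return {"median": statistics.median(values),
                "mean": statistics.mean(values)}
    raise ValueError(f"unknown scale: {scale}")

brand = summarize(["A", "B", "A", "C", "A"], "nominal")   # preferred brand
rank = summarize([1, 2, 2, 4, 5], "ordinal")              # coded satisfaction ranks
temp = summarize([10.0, 20.0, 30.0], "interval")          # temperature in Celsius
spend = [20.0, 40.0]                                      # ratio scale: true zero
times_more = spend[1] / spend[0]                          # "twice as much" is valid only here
```

Ratios such as `times_more` are the one thing a ratio scale adds over an interval scale, because the zero point is real rather than arbitrary.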

Benefits of survey research

When survey research is used for the right purposes and implemented properly, marketers can benefit by gaining useful, trustworthy data that they can use to improve the ROI of the organization.

Other benefits of survey research are:

- Minimum investment: Mobile surveys and online surveys have minimal finance invested per respondent. Even with the gifts and other incentives provided to the people who participate in the study, online surveys are extremely economical compared to paper-based surveys.

- Versatile sources for response collection: You can conduct surveys via various mediums like online and mobile surveys. You can further classify them into qualitative mediums like focus groups and interviews, and quantitative mediums like customer-centric surveys. Due to the offline survey response collection option, researchers can conduct surveys in remote areas with limited internet connectivity. This can make data collection and analysis more convenient and extensive.

- Reliable for respondents: Surveys are extremely secure as the respondent details and responses are kept safeguarded. This anonymity makes respondents answer the survey questions candidly and with absolute honesty. An organization seeking to receive explicit responses for its survey research must mention that it will be confidential.

Survey research design

Researchers implement a survey research design in cases where there is a limited cost involved and there is a need to access details easily. This method is often used by small and large organizations to understand and analyze new trends, market demands, and opinions. Collecting information through tactfully designed survey research can be much more effective and productive than a casually conducted survey.

There are five stages of survey research design:

- Decide an aim of the research: There can be multiple reasons for a researcher to conduct a survey, but they need to decide a purpose for the research. This is the primary stage of survey research as it can mold the entire path of a survey, impacting its results.

- Filter the sample from target population: "Who to target?" is an essential question that a researcher should answer and keep in mind while conducting research. The precision of the results is driven by who the members of a sample are and how useful their opinions are. The quality of respondents in a sample, not the quantity, is what matters for the results. If a researcher seeks to understand whether a product feature will work well with their target market, they can conduct survey research with a group of market experts for that product or technology.

- Zero in on a survey method: Many qualitative and quantitative research methods can be discussed and decided. Focus groups, online interviews, surveys, polls, questionnaires, etc. can be carried out with a pre-decided sample of individuals.

- Design the questionnaire: What will the content of the survey be? A researcher is required to answer this question to be able to design it effectively. What will the content of the cover letter be? Or what are the survey questions of this questionnaire? Understand the target market thoroughly to create a questionnaire that targets a sample to gain insights about a survey research topic.

- Send out surveys and analyze results: Once the researcher decides on which questions to include in a study, they can send it across to the selected sample. Answers obtained from this survey can be analyzed to make product-related or marketing-related decisions.

Survey examples: 10 tips to design the perfect research survey

Picking the right survey design can be the key to gaining the information you need to make crucial decisions for all your research. It is essential to choose the right topic, choose the right question types, and pick a corresponding design. If this is your first time creating a survey, it can seem like an intimidating task. But with QuestionPro, each step of the process is made simple and easy.

Below are 10 Tips To Design The Perfect Research Survey:

- Set your SMART goals: Before conducting any market research or creating a particular plan, set your SMART Goals. What is it that you want to achieve with the survey? How will you measure it promptly, and what are the results you are expecting?

- Choose the right questions: Designing a survey can be a tricky task. Asking the right questions may help you get the answers you are looking for and ease the task of analyzing. So, always choose those specific questions – relevant to your research.

- Begin your survey with a generalized question: Preferably, start your survey with a general question to understand whether the respondent uses the product or not. That also provides an excellent base and intro for your survey.

- Enhance your survey: Choose the best, most relevant, 15-20 questions. Frame each question as a different question type based on the kind of answer you would like to gather from each. Create a survey using different types of questions such as multiple-choice, rating scale, open-ended, etc. Look at more survey examples and four measurement scales every researcher should remember.

- Prepare yes/no questions: You may also want to use yes/no questions to separate people or branch them into groups of those who “have purchased” and those who “have not yet purchased” your products or services. Once you separate them, you can ask them different questions.

- Test all electronic devices: It becomes effortless to distribute your surveys if respondents can answer them on different electronic devices like mobiles, tablets, etc. Once you have created your survey, it’s time to TEST. You can also make any corrections if needed at this stage.

- Distribute your survey: Once your survey is ready, it is time to share and distribute it to the right audience. You can give out handouts or share the survey via email, social media, and other industry-related offline/online communities.

- Collect and analyze responses: After distributing your survey, it is time to gather all responses. Make sure you store your results in a particular document or an Excel sheet with all the necessary categories mentioned so that you don’t lose your data. Remember, this is the most crucial stage. Segregate your responses based on demographics, psychographics, and behavior. This is because, as a researcher, you must know where your responses are coming from. It will help you to analyze, predict decisions, and help write the summary report.

- Prepare your summary report: Now is the time to share your analysis. At this stage, present all the responses gathered from the survey in a fixed format. The reader/customer must also get clarity about the goal you were trying to achieve with the study. Address questions such as: Has the product or service been used or preferred? Do respondents prefer one product to another? Are there any recommendations?
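The yes/no branching described in the tips above can be sketched as a small routing table: a screener answer selects which follow-up questions a respondent sees, and the same answer later serves to segment the results. This is only an illustration; the question texts, the `BRANCHES` table, and the `route` function are hypothetical and do not reflect any particular survey tool's API.

```python
# Hypothetical screener and the follow-up questions for each branch.
SCREENER = "Have you purchased our product?"
BRANCHES = {
    "yes": ["How satisfied are you with the product?",
            "Would you buy it again?"],
    "no": ["What has kept you from purchasing?",
           "Which features matter most to you?"],
}

def route(answer):
    """Return the follow-up questions for a yes/no screener answer."""
    key = answer.strip().lower()
    if key not in BRANCHES:
        raise ValueError(f"expected 'yes' or 'no', got {answer!r}")
    return BRANCHES[key]

def segment(respondents):
    """Group respondent IDs by their screener answer for later analysis."""
    groups = {"yes": [], "no": []}
    for respondent_id, answer in respondents:
        groups[answer.strip().lower()].append(respondent_id)
    return groups

groups = segment([(1, "Yes"), (2, "no"), (3, "yes")])
purchaser_questions = route("Yes")
```

Keeping the branch definitions in one table makes it easy to check that every screener answer leads somewhere before the survey is distributed.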

Having a tool that helps you carry out all the necessary steps of this type of study is a vital part of any project. At QuestionPro, we have helped more than 10,000 clients around the world carry out data collection in a simple and effective way, in addition to offering a wide range of solutions to make the most of this data.

From dashboards, advanced analysis tools, automation, and dedicated functions, in QuestionPro, you will find everything you need to execute your research projects effectively. Uncover insights that matter the most!

MORE LIKE THIS

Total Experience in Trinidad & Tobago — Tuesday CX Thoughts

Oct 29, 2024

You Can’t Please Everyone — Tuesday CX Thoughts

Oct 22, 2024

Life@QuestionPro Presents: Andrews Sekar

Oct 14, 2024

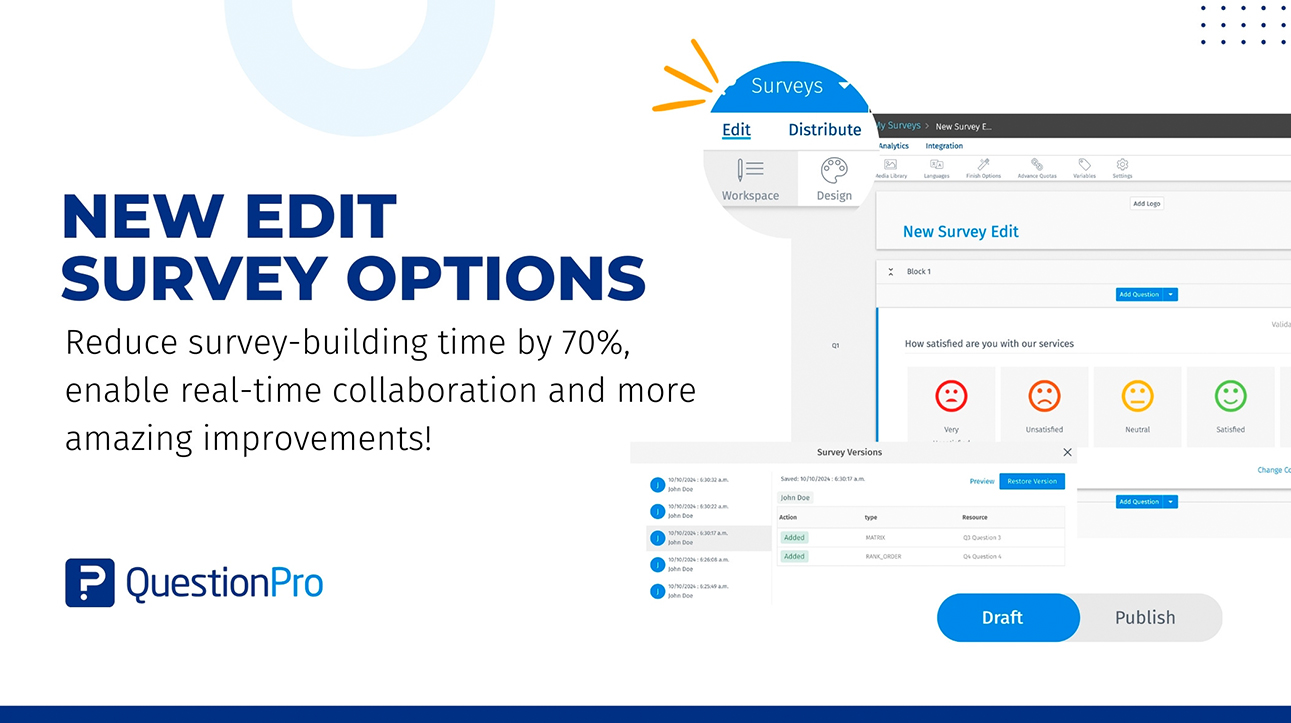

Edit survey: A new way of survey building and collaboration

Oct 10, 2024

Other categories

- Academic Research

- Artificial Intelligence

- Assessments

- Brand Awareness

- Case Studies

- Communities

- Consumer Insights

- Customer effort score

- Customer Engagement

- Customer Experience

- Customer Loyalty

- Customer Research

- Customer Satisfaction

- Employee Benefits

- Employee Engagement

- Employee Retention

- Friday Five

- General Data Protection Regulation

- Insights Hub

- Life@QuestionPro

- Market Research

- Mobile diaries

- Mobile Surveys

- New Features

- Online Communities

- Question Types

- Questionnaire

- QuestionPro Products

- Release Notes

- Research Tools and Apps

- Revenue at Risk

- Survey Templates

- Training Tips

- Tuesday CX Thoughts (TCXT)

- Uncategorized

- What’s Coming Up

- Workforce Intelligence

IMAGES

VIDEO