
10 Experimental research

Experimental research, often considered the ‘gold standard’ of research designs, is one of the most rigorous of all research designs. In this design, one or more independent variables are manipulated by the researcher (as treatments), subjects are randomly assigned to different treatment levels (random assignment), and the results of the treatments on outcomes (dependent variables) are observed. The unique strength of experimental research is its internal validity (causality), which comes from its ability to link cause and effect through treatment manipulation while controlling for the spurious effects of extraneous variables.

Experimental research is best suited for explanatory research (rather than descriptive or exploratory research), where the goal of the study is to examine cause-effect relationships. It also works well for research that involves a relatively limited and well-defined set of independent variables that can either be manipulated or controlled. Experimental research can be conducted in laboratory or field settings. Laboratory experiments, conducted in laboratory (artificial) settings, tend to be high in internal validity, but this comes at the cost of low external validity (generalisability), because the artificial (laboratory) setting in which the study is conducted may not reflect the real world. Field experiments are conducted in field settings, such as a real organisation, and are high in both internal and external validity. But such experiments are relatively rare, because of the difficulties associated with manipulating treatments and controlling for extraneous effects in a field setting.

Experimental research can be grouped into two broad categories: true experimental designs and quasi-experimental designs. Both designs require treatment manipulation, but while true experiments also require random assignment, quasi-experiments do not. Sometimes, we also refer to non-experimental research, which is not really a research design, but an all-inclusive term that includes all types of research that do not employ treatment manipulation or random assignment, such as survey research, observational research, and correlational studies.

Basic concepts

Treatment and control groups. In experimental research, some subjects are administered one or more experimental stimuli, called a treatment (the treatment group), while other subjects are not given such a stimulus (the control group). The treatment may be considered successful if subjects in the treatment group rate more favourably on outcome variables than control group subjects. Multiple levels of experimental stimulus may be administered, in which case there may be more than one treatment group. For example, in order to test the effects of a new drug intended to treat a certain medical condition like dementia, if a sample of dementia patients is randomly divided into three groups, with the first group receiving a high dosage of the drug, the second group receiving a low dosage, and the third group receiving a placebo such as a sugar pill (control group), then the first two groups are experimental groups and the third group is a control group. After administering the drug for a period of time, if the condition of the experimental group subjects improved significantly more than that of the control group subjects, we can say that the drug is effective. We can also compare the conditions of the high and low dosage experimental groups to determine if the high dose is more effective than the low dose.

Treatment manipulation. Treatments are the unique feature of experimental research that sets this design apart from all other research methods. Treatment manipulation helps control for the ‘cause’ in cause-effect relationships. Naturally, the validity of experimental research depends on how well the treatment was manipulated. Treatment manipulation must be checked using pretests and pilot tests prior to the experimental study. Any measurements conducted before the treatment is administered are called pretest measures, while those conducted after the treatment are posttest measures.

Random selection and assignment. Random selection is the process of randomly drawing a sample from a population or a sampling frame. This approach is typically employed in survey research, and ensures that each unit in the population has a positive chance of being selected into the sample. Random assignment, however, is a process of randomly assigning subjects to experimental or control groups. This is a standard practice in true experimental research to ensure that treatment groups are similar (equivalent) to each other and to the control group prior to treatment administration. Random selection is related to sampling, and is therefore more closely related to the external validity (generalisability) of findings. However, random assignment is related to design, and is therefore most related to internal validity. It is possible to have both random selection and random assignment in well-designed experimental research, but quasi-experimental research involves neither random selection nor random assignment.
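The distinction between the two steps can be made concrete with a small sketch. This is only an illustration under assumed names: the population of 200 student identifiers, the sample size of 30, and the helper functions `random_selection` and `random_assignment` are all hypothetical and do not come from the chapter.

```python
import random

def random_selection(sampling_frame, sample_size, rng):
    """Sampling step: draw a simple random sample from the sampling frame."""
    return rng.sample(sampling_frame, sample_size)

def random_assignment(subjects, group_names, rng):
    """Design step: shuffle the subjects, then deal them round-robin into groups."""
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for index, subject in enumerate(shuffled):
        groups[group_names[index % len(group_names)]].append(subject)
    return groups

rng = random.Random(42)  # fixed seed so the illustration is repeatable
population = [f"student_{i:03d}" for i in range(200)]  # hypothetical sampling frame

sample = random_selection(population, 30, rng)                      # relates to external validity
groups = random_assignment(sample, ["treatment", "control"], rng)   # relates to internal validity

print(len(groups["treatment"]), len(groups["control"]))
```

The two functions are deliberately separate: a study can use either step without the other, which is why the text treats sampling and design as distinct concerns.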

Threats to internal validity. Although experimental designs are considered more rigorous than other research methods in terms of the internal validity of their inferences (by virtue of their ability to control causes through treatment manipulation), they are not immune to internal validity threats. Some of these threats to internal validity are described below, within the context of a study of the impact of a special remedial math tutoring program for improving the math abilities of high school students.

History threat is the possibility that the observed effects (dependent variables) are caused by extraneous or historical events rather than by the experimental treatment. For instance, students’ post-remedial math score improvement may have been caused by their preparation for a math exam at their school, rather than the remedial math program.

Maturation threat refers to the possibility that observed effects are caused by natural maturation of subjects (e.g., a general improvement in their intellectual ability to understand complex concepts) rather than the experimental treatment.

Testing threat is a threat in pre-post designs where subjects’ posttest responses are conditioned by their pretest responses. For instance, if students remember their answers from the pretest evaluation, they may tend to repeat them in the posttest exam. Not conducting a pretest can help avoid this threat.

Instrumentation threat, which also occurs in pre-post designs, refers to the possibility that the difference between pretest and posttest scores is not due to the remedial math program, but due to changes in the administered test, such as the posttest having a higher or lower degree of difficulty than the pretest.

Mortality threat refers to the possibility that subjects may be dropping out of the study at differential rates between the treatment and control groups due to a systematic reason, such that the dropouts were mostly students who scored low on the pretest. If the low-performing students drop out, the results of the posttest will be artificially inflated by the preponderance of high-performing students.

Regression threat —also called a regression to the mean—refers to the statistical tendency of a group’s overall performance to regress toward the mean during a posttest rather than in the anticipated direction. For instance, if subjects scored high on a pretest, they will have a tendency to score lower on the posttest (closer to the mean) because their high scores (away from the mean) during the pretest were possibly a statistical aberration. This problem tends to be more prevalent in non-random samples and when the two measures are imperfectly correlated.
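Regression to the mean can be seen in a small simulation. The sketch below assumes a simple measurement model that is not taken from the chapter: each observed score is a stable ability plus independent random noise, and no treatment is applied at all. All numbers (means, spreads, sample size) are made up for illustration.

```python
import random
import statistics

rng = random.Random(7)  # fixed seed so the illustration is repeatable
n_students = 10_000

# Assumed model: observed score = stable ability + independent noise on each occasion.
true_ability = [rng.gauss(50, 10) for _ in range(n_students)]
pretest = [ability + rng.gauss(0, 10) for ability in true_ability]
posttest = [ability + rng.gauss(0, 10) for ability in true_ability]

# Pick the students who scored in roughly the top 10% on the pretest.
cutoff = sorted(pretest)[int(0.9 * n_students)]
top = [i for i in range(n_students) if pretest[i] >= cutoff]

top_pre_mean = statistics.mean(pretest[i] for i in top)
top_post_mean = statistics.mean(posttest[i] for i in top)
overall_mean = statistics.mean(pretest)

print(f"overall pretest mean:   {overall_mean:.1f}")
print(f"top group, pretest:     {top_pre_mean:.1f}")
print(f"top group, posttest:    {top_post_mean:.1f}")
```

Even though nothing happened between the two measurements, the high scorers land closer to the overall mean on the posttest, because part of their high pretest score was noise. The two measures here are imperfectly correlated by construction, which is the condition the text identifies.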

Two-group experimental designs

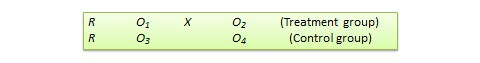

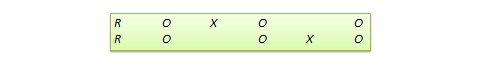

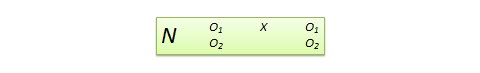

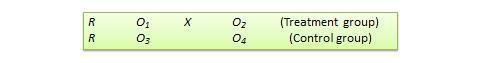

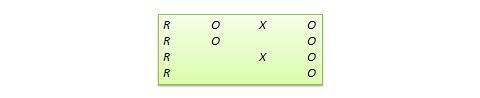

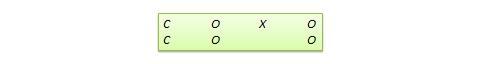

Pretest-posttest control group design. In this design, subjects are randomly assigned to treatment and control groups and subjected to an initial (pretest) measurement of the dependent variables of interest. The treatment group is then administered a treatment (representing the independent variable of interest), and the dependent variables are measured again (posttest). The notation of this design is shown in Figure 10.1.

Statistical analysis of this design involves a simple analysis of variance (ANOVA) between the treatment and control groups. The pretest-posttest design handles several threats to internal validity, such as maturation, testing, and regression, since these threats can be expected to influence both treatment and control groups in a similar (random) manner. The selection threat is controlled via random assignment. However, additional threats to internal validity may exist. For instance, mortality can be a problem if there are differential dropout rates between the two groups, and the pretest measurement may bias the posttest measurement—especially if the pretest introduces unusual topics or content.
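One common way to summarise the treatment effect in a pretest-posttest control group design is to compare the average gain in the treatment group with the average gain in the control group. The sketch below assumes that definition; the function name `gain_score_effect` and all scores are hypothetical.

```python
from statistics import mean

def gain_score_effect(pre_treat, post_treat, pre_ctrl, post_ctrl):
    """Difference between the mean gain of the treatment and control groups."""
    treat_gain = mean(post_treat) - mean(pre_treat)
    ctrl_gain = mean(post_ctrl) - mean(pre_ctrl)
    return treat_gain - ctrl_gain

# Made-up math scores for four students per group.
pre_treat, post_treat = [52, 48, 55, 50], [61, 58, 66, 59]
pre_ctrl, post_ctrl = [51, 49, 54, 50], [54, 51, 57, 54]

effect = gain_score_effect(pre_treat, post_treat, pre_ctrl, post_ctrl)
print(effect)
```

Subtracting the control group's gain removes changes, such as maturation or testing effects, that would be expected to influence both groups in a similar way.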

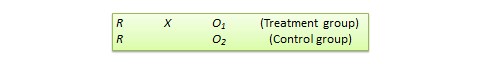

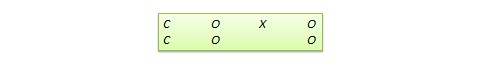

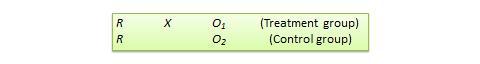

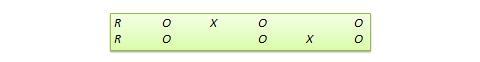

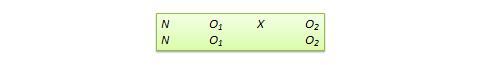

Posttest-only control group design. This design is a simpler version of the pretest-posttest design where pretest measurements are omitted. The design notation is shown in Figure 10.2.

The treatment effect is measured simply as the difference in the posttest scores between the two groups.

The appropriate statistical analysis of this design is also a two-group analysis of variance (ANOVA). The simplicity of this design makes it more attractive than the pretest-posttest design in terms of internal validity. This design controls for maturation, testing, regression, selection, and pretest-posttest interaction, though the mortality threat may continue to exist.
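As a rough illustration of the posttest-only analysis, the sketch below computes the difference in mean posttest scores and a one-way ANOVA F statistic by hand. The helper names and the scores are hypothetical, and the F statistic is shown without a p-value, which would need an F distribution from a statistics package.

```python
from statistics import mean

def posttest_difference(treatment_scores, control_scores):
    """Treatment effect as the difference in mean posttest scores."""
    return mean(treatment_scores) - mean(control_scores)

def one_way_anova_f(*groups):
    """F statistic: between-group mean square divided by within-group mean square."""
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Made-up posttest scores.
treatment = [78, 85, 82, 90, 88]
control = [72, 75, 80, 70, 78]

difference = posttest_difference(treatment, control)
f_statistic = one_way_anova_f(treatment, control)
print(difference, f_statistic)
```

With exactly two groups, this F statistic equals the square of the pooled two-sample t statistic, so either test leads to the same conclusion.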

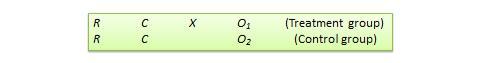

Because the pretest measure is not a measurement of the dependent variable, but rather a covariate, the treatment effect is measured as the difference in the posttest scores between the treatment and control groups.

Due to the presence of covariates, the right statistical analysis of this design is a two-group analysis of covariance (ANCOVA). This design has all the advantages of the posttest-only design, but with improved internal validity due to the controlling of covariates. Covariance designs can also be extended to the pretest-posttest control group design.
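The core idea behind a two-group ANCOVA, adjusting the posttest difference for a difference in the covariate, can be sketched as follows. This is a simplified illustration, not a full ANCOVA: it assumes a single covariate and a common (pooled) within-group slope, and it omits significance testing. The function names and data are hypothetical.

```python
from statistics import mean

def within_group_sums(covariate, outcome):
    """Return (Sxy, Sxx) computed around this group's own means."""
    x_bar, y_bar = mean(covariate), mean(outcome)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(covariate, outcome))
    s_xx = sum((x - x_bar) ** 2 for x in covariate)
    return s_xy, s_xx

def covariate_adjusted_effect(x_treat, y_treat, x_ctrl, y_ctrl):
    """Posttest difference minus the part explained by the covariate gap."""
    sxy_t, sxx_t = within_group_sums(x_treat, y_treat)
    sxy_c, sxx_c = within_group_sums(x_ctrl, y_ctrl)
    pooled_slope = (sxy_t + sxy_c) / (sxx_t + sxx_c)   # common within-group slope
    raw_difference = mean(y_treat) - mean(y_ctrl)
    covariate_gap = mean(x_treat) - mean(x_ctrl)
    return raw_difference - pooled_slope * covariate_gap

# Made-up data generated as outcome = 2 + 0.5 * covariate (+ 3 if treated).
x_treat, y_treat = [10, 12, 14, 16], [10, 11, 12, 13]
x_ctrl, y_ctrl = [8, 10, 12, 14], [6, 7, 8, 9]

adjusted = covariate_adjusted_effect(x_treat, y_treat, x_ctrl, y_ctrl)
print(adjusted)
```

In this constructed example the raw posttest difference is 4, but part of it comes from the treatment group starting with higher covariate values; the adjustment recovers the effect of 3 that was built into the data.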

Factorial designs

Two-group designs are inadequate if your research requires manipulation of two or more independent variables (treatments). In such cases, you would need designs with four or more groups. Such designs, quite popular in experimental research, are commonly called factorial designs. Each independent variable in this design is called a factor, and each subdivision of a factor is called a level. Factorial designs enable the researcher to examine not only the individual effect of each treatment on the dependent variables (called main effects), but also their joint effect (called interaction effects).

In a factorial design, a main effect is said to exist if the dependent variable shows a significant difference between multiple levels of one factor, at all levels of other factors. No change in the dependent variable across factor levels is the null case (baseline), from which main effects are evaluated. In the above example, you may see a main effect of instructional type, instructional time, or both on learning outcomes. An interaction effect exists when the effect of differences in one factor depends upon the level of a second factor. In our example, if the effect of instructional type on learning outcomes is greater for three hours/week of instructional time than for one and a half hours/week, then we can say that there is an interaction effect between instructional type and instructional time on learning outcomes. Note that the presence of interaction effects dominates and makes main effects irrelevant, and it is not meaningful to interpret main effects if interaction effects are significant.
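The arithmetic behind main and interaction effects in a 2 × 2 design can be sketched from cell means. In the sketch below, the level names for instructional type ("in-class", "online") and every cell mean are assumptions made up for illustration; only the two factors and the two time levels are mentioned in the text. The differences are descriptive, and a real analysis would test them for significance.

```python
from itertools import product
from statistics import mean

factors = {
    "instructional_type": ["in-class", "online"],  # level names are assumed
    "hours_per_week": [1.5, 3.0],
}
cells = list(product(*factors.values()))  # every combination of factor levels

# Hypothetical mean learning outcome in each cell (made-up numbers).
cell_means = {
    ("in-class", 1.5): 60.0,
    ("in-class", 3.0): 75.0,
    ("online", 1.5): 58.0,
    ("online", 3.0): 63.0,
}

def marginal_mean(factor_index, level):
    """Average outcome at one level of a factor, across the other factor's levels."""
    return mean(v for key, v in cell_means.items() if key[factor_index] == level)

type_main_effect = marginal_mean(0, "in-class") - marginal_mean(0, "online")
time_main_effect = marginal_mean(1, 3.0) - marginal_mean(1, 1.5)

# Interaction: does the effect of instructional type change with instructional time?
type_effect_at_3h = cell_means[("in-class", 3.0)] - cell_means[("online", 3.0)]
type_effect_at_1_5h = cell_means[("in-class", 1.5)] - cell_means[("online", 1.5)]
interaction = type_effect_at_3h - type_effect_at_1_5h

print(cells)
print(type_main_effect, time_main_effect, interaction)
```

A non-zero interaction value here means that the effect of instructional type is larger at three hours per week than at one and a half, which is the pattern the paragraph describes.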

Hybrid experimental designs

Hybrid designs are those that are formed by combining features of more established designs. Three such hybrid designs are the randomised block design, the Solomon four-group design, and the switched replication design.

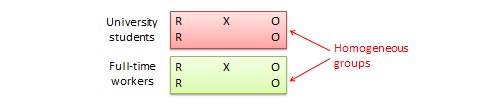

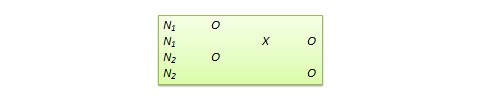

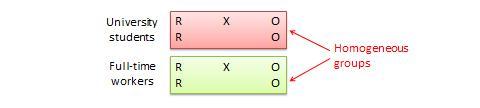

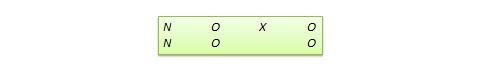

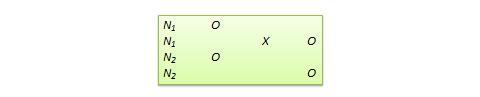

Randomised block design. This is a variation of the posttest-only or pretest-posttest control group design where the subject population can be grouped into relatively homogeneous subgroups (called blocks) within which the experiment is replicated. For instance, if you want to replicate the same posttest-only design among university students and full-time working professionals (two homogeneous blocks), subjects in both blocks are randomly split between the treatment group (receiving the same treatment) and the control group (see Figure 10.5). The purpose of this design is to reduce the ‘noise’ or variance in data that may be attributable to differences between the blocks so that the actual effect of interest can be detected more accurately.
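A minimal sketch of blocked assignment, using the two blocks from the example above, might look like this. The function name, block sizes, and subject identifiers are hypothetical.

```python
import random

def randomised_block_assignment(subjects_by_block, rng):
    """Randomly split the subjects of each block into treatment and control."""
    assignment = {}
    for block, subjects in subjects_by_block.items():
        shuffled = list(subjects)
        rng.shuffle(shuffled)            # randomise only within the block
        half = len(shuffled) // 2
        assignment[block] = {
            "treatment": shuffled[:half],
            "control": shuffled[half:],
        }
    return assignment

rng = random.Random(2024)  # fixed seed so the illustration is repeatable
subjects_by_block = {
    "university_students": [f"student_{i}" for i in range(20)],
    "working_professionals": [f"professional_{i}" for i in range(12)],
}

assignment = randomised_block_assignment(subjects_by_block, rng)
for block, groups in assignment.items():
    print(block, len(groups["treatment"]), len(groups["control"]))
```

Because the shuffle happens inside each block, both blocks are guaranteed to be represented evenly in the treatment and control groups, which is what removes between-block differences from the comparison.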

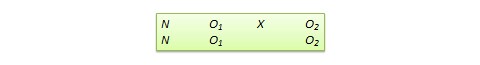

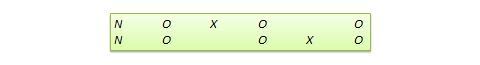

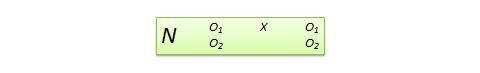

Solomon four-group design. In this design, the sample is divided into two treatment groups and two control groups. One treatment group and one control group receive the pretest, and the other two groups do not. This design represents a combination of posttest-only and pretest-posttest control group design, and is intended to test for the potential biasing effect of pretest measurement on posttest measures that tends to occur in pretest-posttest designs, but not in posttest-only designs. The design notation is shown in Figure 10.6.

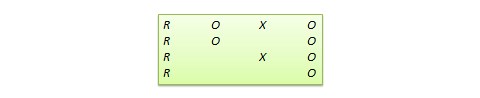

Switched replication design. This is a two-group design implemented in two phases with three waves of measurement. The treatment group in the first phase serves as the control group in the second phase, and the control group in the first phase becomes the treatment group in the second phase, as illustrated in Figure 10.7. In other words, the original design is repeated or replicated temporally with treatment/control roles switched between the two groups. By the end of the study, all participants will have received the treatment either during the first or the second phase. This design is most feasible in organisational contexts where organisational programs (e.g., employee training) are implemented in a phased manner or are repeated at regular intervals.

Quasi-experimental designs

Quasi-experimental designs are almost identical to true experimental designs, but lack one key ingredient: random assignment. For instance, one entire class section or one organisation is used as the treatment group, while another section of the same class or a different organisation in the same industry is used as the control group. This lack of random assignment potentially results in groups that are non-equivalent, such as one group possessing greater mastery of certain content than the other group, say by virtue of having a better teacher in a previous semester, which introduces the possibility of selection bias. Quasi-experimental designs are therefore inferior to true experimental designs in internal validity due to the presence of a variety of selection-related threats such as selection-maturation threat (the treatment and control groups maturing at different rates), selection-history threat (the treatment and control groups being differentially impacted by extraneous or historical events), selection-regression threat (the treatment and control groups regressing toward the mean between pretest and posttest at different rates), selection-instrumentation threat (the treatment and control groups responding differently to the measurement), selection-testing (the treatment and control groups responding differently to the pretest), and selection-mortality (the treatment and control groups demonstrating differential dropout rates). Given these selection threats, it is generally preferable to avoid quasi-experimental designs to the greatest extent possible.

In addition, there are quite a few unique non-equivalent designs without corresponding true experimental design cousins. Some of the more useful of these designs are discussed next.

Regression discontinuity (RD) design. This is a non-equivalent pretest-posttest design where subjects are assigned to the treatment or control group based on a cut-off score on a preprogram measure. For instance, patients who are severely ill may be assigned to a treatment group to test the efficacy of a new drug or treatment protocol and those who are mildly ill are assigned to the control group. In another example, students who are lagging behind on standardised test scores may be selected for a remedial curriculum program intended to improve their performance, while those who score high on such tests are not selected for the remedial program.

Because of the use of a cut-off score, it is possible that the observed results may be a function of the cut-off score rather than the treatment, which introduces a new threat to internal validity. However, using the cut-off score also ensures that limited or costly resources are distributed to people who need them the most, rather than randomly across a population, while simultaneously allowing a quasi-experimental treatment. The control group scores in the RD design do not serve as a benchmark for comparing treatment group scores, given the systematic non-equivalence between the two groups. Rather, if there is no discontinuity between pretest and posttest scores in the control group, but such a discontinuity persists in the treatment group, then this discontinuity is viewed as evidence of the treatment effect.
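One common way to look for such a discontinuity, assumed here rather than taken from the chapter, is to fit a separate straight line to the pretest-posttest relationship on each side of the cut-off and compare the two predictions at the cut-off itself. The sketch uses the remedial program example, with students below the cut-off treated; the helper names, the cut-off of 50, and the noise-free data are all hypothetical.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for one predictor."""
    x_bar, y_bar = mean(xs), mean(ys)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    slope = s_xy / s_xx
    return slope, y_bar - slope * x_bar

def discontinuity_at_cutoff(pretest, posttest, cutoff):
    """Gap between the treated and control regression lines at the cut-off."""
    treated = [(x, y) for x, y in zip(pretest, posttest) if x < cutoff]   # low scorers get the program
    control = [(x, y) for x, y in zip(pretest, posttest) if x >= cutoff]
    slope_t, intercept_t = fit_line(*zip(*treated))
    slope_c, intercept_c = fit_line(*zip(*control))
    return (slope_t * cutoff + intercept_t) - (slope_c * cutoff + intercept_c)

cutoff = 50
pretest = list(range(30, 71, 2))
# Made-up, noise-free posttest scores with a 4-point boost for treated students.
posttest = [10 + 0.5 * x + (4 if x < cutoff else 0) for x in pretest]

jump = discontinuity_at_cutoff(pretest, posttest, cutoff)
print(jump)
```

With real data the scores would be noisy, and the size of the jump would need to be judged against that noise rather than read off directly.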

Proxy pretest design. This design, shown in Figure 10.11, looks very similar to the standard non-equivalent groups design (NEGD) with pretest and posttest, with one critical difference: the pretest score is collected after the treatment is administered. A typical application of this design is when a researcher is brought in to test the efficacy of a program (e.g., an educational program) after the program has already started and pretest data is not available. Under such circumstances, the best option for the researcher is often to use a different prerecorded measure, such as students’ grade point average before the start of the program, as a proxy for pretest data. A variation of the proxy pretest design is to use subjects’ posttest recollection of pretest data, which may be subject to recall bias, but nevertheless may provide a measure of perceived gain or change in the dependent variable.

Separate pretest-posttest samples design. This design is useful if it is not possible to collect pretest and posttest data from the same subjects for some reason. As shown in Figure 10.12, there are four groups in this design, but two groups come from a single non-equivalent group, while the other two groups come from a different non-equivalent group. For instance, say you want to test customer satisfaction with a new online service that is implemented in one city but not in another. In this case, customers in the first city serve as the treatment group and those in the second city constitute the control group. If it is not possible to obtain pretest and posttest measures from the same customers, you can measure customer satisfaction at one point in time, implement the new service program, and measure customer satisfaction (with a different set of customers) after the program is implemented. Customer satisfaction is also measured in the control group at the same times as in the treatment group, but without the new program implementation. The design is not particularly strong, because you cannot examine the changes in any specific customer’s satisfaction score before and after the implementation, but you can only examine average customer satisfaction scores. Despite the lower internal validity, this design may still be a useful way of collecting quasi-experimental data when pretest and posttest data is not available from the same subjects.

An interesting variation of the non-equivalent dependent variable (NEDV) design is a pattern-matching NEDV design, which employs multiple outcome variables and a theory that explains how much each variable will be affected by the treatment. The researcher can then examine if the theoretical prediction is matched in actual observations. This pattern-matching technique, based on the degree of correspondence between theoretical and observed patterns, is a powerful way of alleviating internal validity concerns in the original NEDV design.

Perils of experimental research

Experimental research is one of the most difficult of research designs, and should not be taken lightly. This type of research is often beset with a multitude of methodological problems. First, though experimental research requires theories for framing hypotheses for testing, much of current experimental research is atheoretical. Without theories, the hypotheses being tested tend to be ad hoc, possibly illogical, and meaningless. Second, many of the measurement instruments used in experimental research are not tested for reliability and validity, and are incomparable across studies. Consequently, results generated using such instruments are also incomparable. Third, experimental research often uses inappropriate research designs, such as irrelevant dependent variables, no interaction effects, no experimental controls, and non-equivalent stimulus across treatment groups. Findings from such studies tend to lack internal validity and are highly suspect. Fourth, the treatments (tasks) used in experimental research may be diverse, incomparable, and inconsistent across studies, and sometimes inappropriate for the subject population. For instance, undergraduate student subjects are often asked to pretend that they are marketing managers and asked to perform a complex budget allocation task in which they have no experience or expertise. The use of such inappropriate tasks introduces new threats to internal validity (i.e., subject’s performance may be an artefact of the content or difficulty of the task setting), generates findings that are non-interpretable and meaningless, and makes integration of findings across studies impossible.

The design of proper experimental treatments is a very important task in experimental design, because the treatment is the raison d’etre of the experimental method, and must never be rushed or neglected. To design an adequate and appropriate task, researchers should use prevalidated tasks if available, conduct treatment manipulation checks to check for the adequacy of such tasks (by debriefing subjects after performing the assigned task), conduct pilot tests (repeatedly, if necessary), and if in doubt, use tasks that are simple and familiar for the respondent sample rather than tasks that are complex or unfamiliar.

In summary, this chapter introduced key concepts in the experimental design research method and introduced a variety of true experimental and quasi-experimental designs. Although these designs vary widely in internal validity, designs with less internal validity should not be overlooked and may sometimes be useful under specific circumstances and empirical contingencies.

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


A Quick Guide to Experimental Design | 5 Steps & Examples

Published on 11 April 2022 by Rebecca Bevans. Revised on 5 December 2022.

Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables.

Experimental design means creating a set of procedures to systematically test a hypothesis. A good experimental design requires a strong understanding of the system you are studying.

There are five key steps in designing an experiment:

- Consider your variables and how they are related

- Write a specific, testable hypothesis

- Design experimental treatments to manipulate your independent variable

- Assign subjects to groups, either between-subjects or within-subjects

- Plan how you will measure your dependent variable

For valid conclusions, you also need to select a representative sample and control any extraneous variables that might influence your results. If random assignment of participants to control and treatment groups is impossible, unethical, or highly difficult, consider an observational study instead.

Table of contents

- Step 1: Define your variables

- Step 2: Write your hypothesis

- Step 3: Design your experimental treatments

- Step 4: Assign your subjects to treatment groups

- Step 5: Measure your dependent variable

- Frequently asked questions about experimental design

You should begin with a specific research question. We will work with two research question examples, one from health sciences and one from ecology:

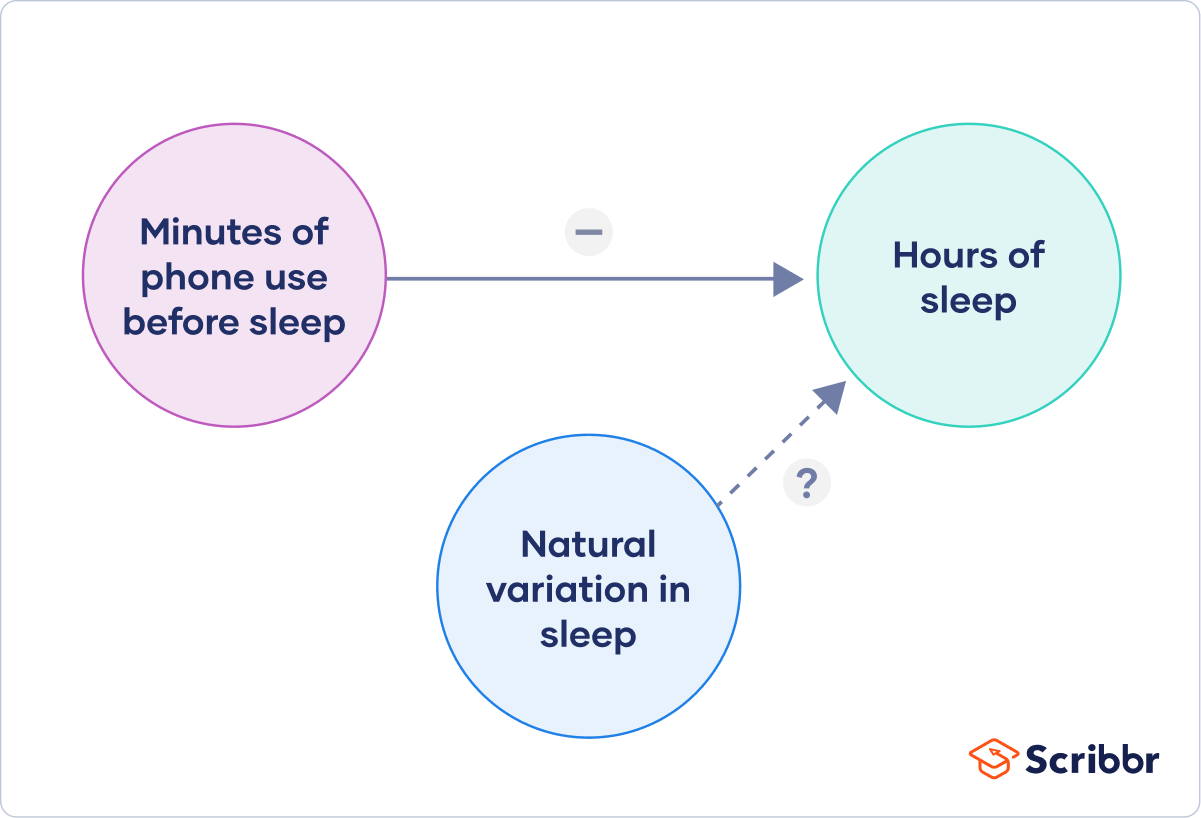

To translate your research question into an experimental hypothesis, you need to define the main variables and make predictions about how they are related.

Start by simply listing the independent and dependent variables.

Then you need to think about possible extraneous and confounding variables and consider how you might control them in your experiment.

Finally, you can put these variables together into a diagram. Use arrows to show the possible relationships between variables and include signs to show the expected direction of the relationships.

Here we predict that increasing temperature will increase soil respiration and decrease soil moisture, while decreasing soil moisture will lead to decreased soil respiration.
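The predicted relationships can also be written down as a small signed graph, which makes it easy to compare the direct and indirect paths. The sketch below is only an illustration: the variable names and the `path_sign` helper are hypothetical, and multiplying signs along a path gives the expected direction of an effect, not its size.

```python
# Signed edges of the diagram: +1 for a positive relationship, -1 for a negative one.
edges = {
    ("temperature", "soil_respiration"): +1,    # warming increases respiration
    ("temperature", "soil_moisture"): -1,       # warming decreases moisture
    ("soil_moisture", "soil_respiration"): +1,  # less moisture means less respiration
}

def path_sign(path):
    """Expected direction of an effect along a path: the product of its edge signs."""
    sign = 1
    for cause, effect in zip(path, path[1:]):
        sign *= edges[(cause, effect)]
    return sign

direct = path_sign(["temperature", "soil_respiration"])
indirect = path_sign(["temperature", "soil_moisture", "soil_respiration"])
print(direct, indirect)
```

The direct path is positive while the path through soil moisture is negative, so the two predicted effects of warming on respiration pull in opposite directions. That is one reason soil moisture would need to be measured or controlled in the experiment.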


Now that you have a strong conceptual understanding of the system you are studying, you should be able to write a specific, testable hypothesis that addresses your research question.

The next steps will describe how to design a controlled experiment. In a controlled experiment, you must be able to:

- Systematically and precisely manipulate the independent variable(s).

- Precisely measure the dependent variable(s).

- Control any potential confounding variables.

If your study system doesn’t match these criteria, there are other types of research you can use to answer your research question.

How you manipulate the independent variable can affect the experiment’s external validity – that is, the extent to which the results can be generalised and applied to the broader world.

First, you may need to decide how widely to vary your independent variable. In the soil respiration example, you could increase temperature:

- just slightly above the natural range for your study region.

- over a wider range of temperatures to mimic future warming.

- over an extreme range that is beyond any possible natural variation.

Second, you may need to choose how finely to vary your independent variable. Sometimes this choice is made for you by your experimental system, but often you will need to decide, and this will affect how much you can infer from your results. In the phone use example, you could treat phone use as:

- a categorical variable: either as binary (yes/no) or as levels of a factor (no phone use, low phone use, high phone use).

- a continuous variable (minutes of phone use measured every night).

How you apply your experimental treatments to your test subjects is crucial for obtaining valid and reliable results.

First, you need to consider the study size: how many individuals will be included in the experiment? In general, the more subjects you include, the greater your experiment’s statistical power, which determines how much confidence you can have in your results.

Then you need to randomly assign your subjects to treatment groups. Each group receives a different level of the treatment (e.g. no phone use, low phone use, high phone use).

You should also include a control group, which receives no treatment. The control group tells us what would have happened to your test subjects without any experimental intervention.

When assigning your subjects to groups, there are two main choices you need to make:

- A completely randomised design vs a randomised block design.

- A between-subjects design vs a within-subjects design.

Randomisation

An experiment can be completely randomised or randomised within blocks (aka strata):

- In a completely randomised design, every subject is assigned to a treatment group at random.

- In a randomised block design (aka stratified random design), subjects are first grouped according to a characteristic they share, and then randomly assigned to treatments within those groups.

Sometimes randomisation isn’t practical or ethical, so researchers create partially-random or even non-random designs. An experimental design where treatments aren’t randomly assigned is called a quasi-experimental design.

Between-subjects vs within-subjects

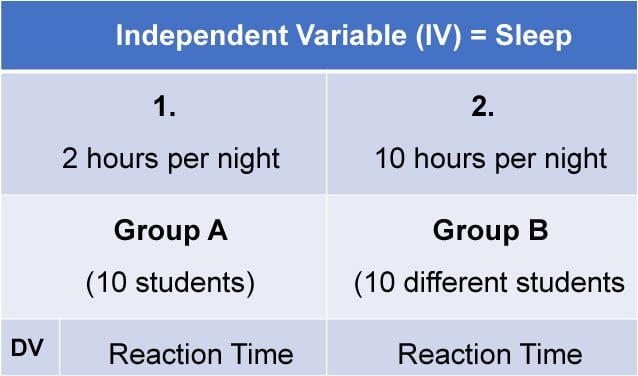

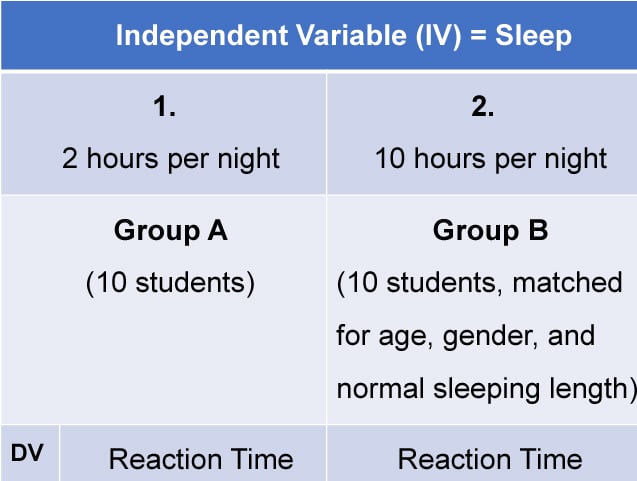

In a between-subjects design (also known as an independent measures design or classic ANOVA design), individuals receive only one of the possible levels of an experimental treatment.

In medical or social research, you might also use matched pairs within your between-subjects design to make sure that each treatment group contains the same variety of test subjects in the same proportions.

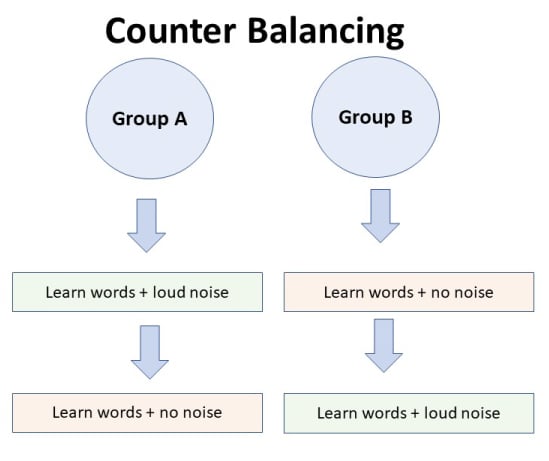

In a within-subjects design (also known as a repeated measures design), every individual receives each of the experimental treatments consecutively, and their responses to each treatment are measured.

Within-subjects or repeated measures can also refer to an experimental design where an effect emerges over time, and individual responses are measured over time in order to measure this effect as it emerges.

Counterbalancing (randomising or reversing the order of treatments among subjects) is often used in within-subjects designs to ensure that the order of treatment application doesn’t influence the results of the experiment.
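One simple form of counterbalancing, assumed here for illustration, is to cycle participants through every possible ordering of the treatments so that each order is used equally often. The treatment names follow the phone use example; the function name and the twelve participant identifiers are hypothetical.

```python
from collections import Counter
from itertools import permutations

def counterbalanced_orders(treatments, participants):
    """Give each participant one ordering, cycling through all possible orderings."""
    orders = list(permutations(treatments))
    return {p: orders[i % len(orders)] for i, p in enumerate(participants)}

treatments = ["no phone use", "low phone use", "high phone use"]
participants = [f"participant_{i}" for i in range(12)]

schedule = counterbalanced_orders(treatments, participants)
order_counts = Counter(schedule.values())

for participant, order in list(schedule.items())[:3]:
    print(participant, order)
```

With three treatments there are six possible orders, so twelve participants use each order exactly twice. With many treatments the number of orders grows quickly, and a random subset of orders or a Latin square is often used instead.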

Finally, you need to decide how you’ll collect data on your dependent variable outcomes. You should aim for reliable and valid measurements that minimise bias or error.

Some variables, like temperature, can be objectively measured with scientific instruments. Others may need to be operationalised to turn them into measurable observations. For example, to measure how much participants sleep, you could:

- Ask participants to record what time they go to sleep and get up each day.

- Ask participants to wear a sleep tracker.

How precisely you measure your dependent variable also affects the kinds of statistical analysis you can use on your data.

Experiments are always context-dependent, and a good experimental design will take into account all of the unique considerations of your study system to produce information that is both valid and relevant to your research question.

Experimental designs are a set of procedures that you plan in order to examine the relationship between variables that interest you.

To design a successful experiment, first identify:

- A testable hypothesis

- One or more independent variables that you will manipulate

- One or more dependent variables that you will measure

When designing the experiment, first decide:

- How your variable(s) will be manipulated

- How you will control for any potential confounding or lurking variables

- How many subjects you will include

- How you will assign treatments to your subjects
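The last decision in the list above, how to assign treatments to subjects, is often handled by simple random assignment. The following is a minimal sketch under that assumption; the function name `randomly_assign`, the subject labels and the group names are hypothetical.

```python
import random


def randomly_assign(subjects, treatments, seed=None):
    """Shuffle the subjects, then deal them out to treatments in turn."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    groups = {t: [] for t in treatments}
    for i, subject in enumerate(shuffled):
        # Dealing in turn keeps the group sizes as equal as possible.
        groups[treatments[i % len(treatments)]].append(subject)
    return groups


subjects = [f"S{i:02d}" for i in range(1, 21)]
groups = randomly_assign(subjects, ["control", "treatment"], seed=42)
for name, members in groups.items():
    print(name, members)
```

Fixing the seed makes the allocation reproducible for auditing; in a real study the seed or allocation list would normally be kept away from whoever enrols participants.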

The key difference between observational studies and experiments is that, done correctly, an observational study will never influence the responses or behaviours of participants. Experimental designs will have a treatment condition applied to at least a portion of participants.

A confounding variable, also called a confounder or confounding factor, is a third variable in a study examining a potential cause-and-effect relationship.

A confounding variable is related to both the supposed cause and the supposed effect of the study. It can be difficult to separate the true effect of the independent variable from the effect of the confounding variable.

In your research design, it’s important to identify potential confounding variables and plan how you will reduce their impact.

In a between-subjects design, every participant experiences only one condition, and researchers assess group differences between participants in various conditions.

In a within-subjects design, each participant experiences all conditions, and researchers test the same participants repeatedly for differences between conditions.

The word ‘between’ means that you’re comparing different conditions between groups, while the word ‘within’ means you’re comparing different conditions within the same group.


Bevans, R. (2022, December 05). A Quick Guide to Experimental Design | 5 Steps & Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/guide-to-experimental-design/


Research Methodology and Scientific Writing, pp 93–133

Experimental Research

- C. George Thomas

- First Online: 25 February 2021

Experiments are part of the scientific method and help to decide between two or more competing hypotheses or explanations of a phenomenon. The term ‘experiment’ derives from the Latin experiri, which means ‘to try’. The knowledge that accrues from experiments differs from other types of knowledge in that it is always based on observation or experience. In other words, experiments generate empirical knowledge. In fact, the emphasis on experimentation in the sixteenth and seventeenth centuries as a way of establishing causal relationships for phenomena in nature heralded the resurgence of modern science from its roots in ancient philosophy, which had been spearheaded by great Greek philosophers such as Aristotle.

The strongest arguments prove nothing so long as the conclusions are not verified by experience. Experimental science is the queen of sciences and the goal of all speculation. Roger Bacon (1214–1294)



C. George Thomas, Kerala Agricultural University, Thrissur, Kerala, India. © 2021 The Author(s).

Thomas, C.G. (2021). Experimental Research. In: Research Methodology and Scientific Writing. Springer, Cham. https://doi.org/10.1007/978-3-030-64865-7_5

Print ISBN: 978-3-030-64864-0. Online ISBN: 978-3-030-64865-7.


Experimental Research

Experimental research is commonly used in sciences such as sociology, psychology, physics, chemistry, biology and medicine.


It is a collection of research designs which use manipulation and controlled testing to understand causal processes. Generally, one or more variables are manipulated to determine their effect on a dependent variable.

The experimental method is a systematic and scientific approach to research in which the researcher manipulates one or more variables, and controls and measures any change in other variables.

Experimental Research is often used where:

- There is time priority in a causal relationship ( cause precedes effect )

- There is consistency in a causal relationship (a cause will always lead to the same effect)

- The magnitude of the correlation is great.

(Reference: en.wikipedia.org)

The term "experimental research" has a range of definitions. In the strict sense, experimental research is what we call a true experiment.

This is an experiment where the researcher manipulates one variable and controls or randomizes the rest of the variables. It has a control group, the subjects have been randomly assigned between the groups, and the researcher tests only one effect at a time. It is also important to know what variable(s) you want to test and measure.

A very wide definition of experimental research, or a quasi-experiment, is research where the scientist actively influences something to observe the consequences. Most experiments tend to fall in between the strict and the wide definition.

A rule of thumb is that physical sciences, such as physics, chemistry and geology tend to define experiments more narrowly than social sciences, such as sociology and psychology, which conduct experiments closer to the wider definition.

Aims of Experimental Research

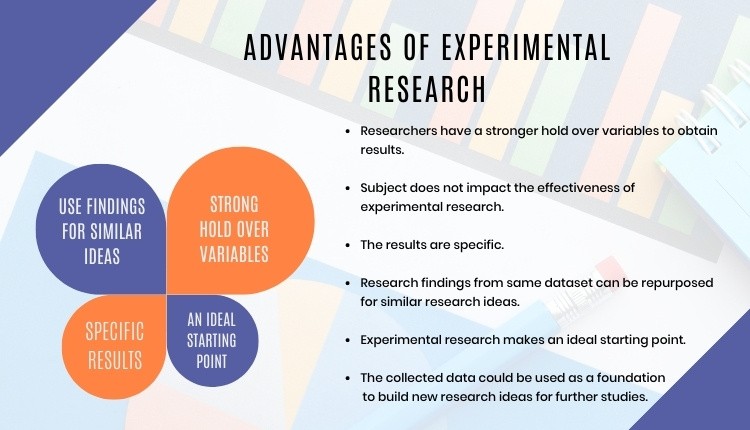

Experiments are conducted to be able to predict phenomena. Typically, an experiment is constructed to be able to explain some kind of causation. Experimental research is important to society: it helps us to improve our everyday lives.

Identifying the Research Problem

After deciding the topic of interest, the researcher tries to define the research problem. This helps the researcher to focus on a narrower research area in order to study it appropriately. Defining the research problem helps you to formulate a research hypothesis, which is tested against the null hypothesis.

The research problem is often operationalized, to define how it will be measured. The results will depend on the exact measurements that the researcher chooses, and the problem may be operationalized differently in another study to test the main conclusions of the study.

An ad hoc analysis is a hypothesis invented after testing is done, to try to explain contrary evidence. A poor ad hoc analysis may be seen as the researcher's inability to accept that his/her hypothesis is wrong, while a great ad hoc analysis may lead to more testing and possibly a significant discovery.

Constructing the Experiment

There are various aspects to remember when constructing an experiment. Planning ahead ensures that the experiment is carried out properly and that the results reflect the real world, in the best possible way.

Sampling Groups to Study

Sampling groups correctly is especially important when we have more than one condition in the experiment. One sample group often serves as a control group, whilst others are tested under the experimental conditions.

The sample groups can be decided using many different sampling techniques. The sample may be chosen by a number of methods, such as randomization, "quasi-randomization" and pairing.

Reducing sampling errors is vital for getting valid results from experiments. Researchers often adjust the sample size to minimize the chance of random errors.
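The link between sample size and random error can be made concrete with the standard error of a sample mean, which is the standard deviation divided by the square root of the sample size. The sketch below assumes a hypothetical standard deviation of 15 units; the function name and the numbers are illustrative only.

```python
import math


def standard_error(sd, n):
    """Standard error of the mean for a sample of size n."""
    return sd / math.sqrt(n)


# Quadrupling the sample size halves the standard error.
for n in (10, 40, 160):
    print(n, round(standard_error(15.0, n), 2))
```

Because the error shrinks with the square root of n, each further gain in precision costs proportionally more subjects, which is one reason sample size is weighed against time and budget.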

Here are some common sampling techniques :

- probability sampling

- non-probability sampling

- simple random sampling

- convenience sampling

- stratified sampling

- systematic sampling

- cluster sampling

- sequential sampling

- disproportional sampling

- judgmental sampling

- snowball sampling

- quota sampling
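Three of the probability techniques listed above (simple random, systematic and stratified sampling) can be sketched in a few lines. This is an illustration under assumptions, not a survey-grade implementation: the population of 100 numbered units, the "urban"/"rural" strata and all function names are hypothetical.

```python
import random


def simple_random_sample(population, k, rng):
    """Every unit has the same chance of selection."""
    return rng.sample(population, k)


def systematic_sample(population, k, rng):
    """Take every step-th unit after a random starting point."""
    step = len(population) // k
    start = rng.randrange(step)
    return [population[start + i * step] for i in range(k)]


def stratified_sample(population, stratum_of, per_stratum, rng):
    """Draw the same number of units at random from each stratum."""
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, per_stratum))
    return sample


# Hypothetical population: 60 urban units followed by 40 rural units.
population = [(i, "urban" if i < 60 else "rural") for i in range(100)]
rng = random.Random(7)
print(simple_random_sample(population, 10, rng))
print(systematic_sample(population, 10, rng))
print(stratified_sample(population, lambda unit: unit[1], 5, rng))
```

Drawing equal numbers from strata of unequal size, as done here, is a form of disproportional sampling; proportional allocation would instead draw 6 urban and 4 rural units.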

Creating the Design

The research design is chosen based on a range of factors. Important factors when choosing the design are feasibility, time, cost, ethics, measurement problems and what you would like to test. The design of the experiment is critical for the validity of the results.

Typical Designs and Features in Experimental Design

- Pretest-Posttest Design: Check whether the groups are different before the manipulation starts, and measure the effect of the manipulation. Pretests sometimes influence the effect.

- Control Group: Control groups are designed to measure research bias and measurement effects, such as the Hawthorne Effect or the Placebo Effect. A control group is a group not receiving the same manipulation as the experimental group. Experiments frequently have 2 conditions, but rarely more than 3 conditions at the same time.

- Randomized Controlled Trials: Randomized sampling, comparison between an experimental group and a control group, and strict control/randomization of all other variables.

- Solomon Four-Group Design: Uses two control groups and two experimental groups. Half the groups have a pretest and half do not. This tests both the effect itself and the effect of the pretest.

- Between Subjects Design: Grouping participants into different conditions.

- Within Subject Design: Participants take part in the different conditions. See also: Repeated Measures Design.

- Counterbalanced Measures Design: Testing the effect of the order of treatments when no control group is available/ethical.

- Matched Subjects Design: Matching participants to create similar experimental and control groups.

- Double-Blind Experiment: Neither the researcher nor the participants know which is the control group. The results can be affected if the researcher or participants know this.

- Bayesian Probability: Using Bayesian probability to "interact" with participants is a more "advanced" experimental design. It can be used for settings where there are many variables which are hard to isolate. The researcher starts with a set of initial beliefs, and tries to adjust them according to how participants have responded.
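The belief-updating idea in the final item of the list can be illustrated with the simplest textbook case: a Beta prior over a response rate, updated after each batch of participants. The article does not specify any particular model, so the Beta-Binomial choice, the uniform prior and the batch counts below are all assumptions made for illustration.

```python
def update_beta(alpha, beta, successes, failures):
    """Conjugate update of a Beta(alpha, beta) belief about a response rate."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Initial belief: Beta(1, 1), i.e. every response rate is equally plausible.
alpha, beta = 1.0, 1.0

# Hypothetical batches of (responded, did not respond).
batches = [(7, 3), (6, 4), (9, 1)]
for successes, failures in batches:
    alpha, beta = update_beta(alpha, beta, successes, failures)
    print(f"belief after batch: mean response rate = {beta_mean(alpha, beta):.3f}")
```

In an adaptive design the updated belief would then inform what the next participants are shown; that decision rule is outside the scope of this sketch.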

Pilot Study

It may be wise to first conduct a pilot-study or two before you do the real experiment. This ensures that the experiment measures what it should, and that everything is set up right.

Minor errors, which could potentially destroy the experiment, are often found during this process. With a pilot study, you can get information about errors and problems, and improve the design, before putting a lot of effort into the real experiment.

If the experiments involve humans, a common strategy is to first have a pilot study with someone involved in the research, but not too closely, and then arrange a pilot with a person who resembles the subject(s) . Those two different pilots are likely to give the researcher good information about any problems in the experiment.

Conducting the Experiment

An experiment is typically carried out by manipulating a variable, called the independent variable, affecting the experimental group. The effect that the researcher is interested in, the dependent variable(s), is measured.

Identifying and controlling non-experimental factors which the researcher does not want to influence the effects is crucial to drawing a valid conclusion. This is often done by controlling variables, if possible, or randomizing variables to minimize effects that can be traced back to third variables. Researchers only want to measure the effect of the independent variable(s) when conducting an experiment, allowing them to conclude that this was the reason for the effect.

Analysis and Conclusions

In quantitative research , the amount of data measured can be enormous. Data not prepared to be analyzed is called "raw data". The raw data is often summarized as something called "output data", which typically consists of one line per subject (or item). A cell of the output data is, for example, an average of an effect in many trials for a subject. The output data is used for statistical analysis, e.g. significance tests, to see if there really is an effect.
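The step from raw data to output data to a test statistic can be sketched as follows. The reaction times, subject labels and group names are invented for illustration, and Welch's t statistic is used here as one common choice of significance test, not as the method prescribed by the article.

```python
import math
import statistics

# Hypothetical raw data: (subject, group, reaction time in ms), three trials each.
raw = [
    ("s1", "control", 520), ("s1", "control", 540), ("s1", "control", 500),
    ("s2", "control", 480), ("s2", "control", 500), ("s2", "control", 490),
    ("s3", "control", 510), ("s3", "control", 530), ("s3", "control", 520),
    ("s4", "control", 500), ("s4", "control", 490), ("s4", "control", 510),
    ("s5", "treatment", 450), ("s5", "treatment", 470), ("s5", "treatment", 460),
    ("s6", "treatment", 440), ("s6", "treatment", 460), ("s6", "treatment", 450),
    ("s7", "treatment", 480), ("s7", "treatment", 470), ("s7", "treatment", 490),
    ("s8", "treatment", 455), ("s8", "treatment", 465), ("s8", "treatment", 460),
]


def summarise(rows):
    """Collapse the trials into one value (the mean) per subject."""
    trials = {}
    for subject, group, value in rows:
        trials.setdefault((subject, group), []).append(value)
    return {key: statistics.mean(values) for key, values in trials.items()}


def welch_t(a, b):
    """Welch's t statistic for two independent samples."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / se


output = summarise(raw)  # the "output data": one line per subject
control = [m for (_, group), m in output.items() if group == "control"]
treatment = [m for (_, group), m in output.items() if group == "treatment"]
t_stat = welch_t(control, treatment)
print("per-subject means:", output)
print("Welch t statistic:", round(t_stat, 2))
```

Turning the statistic into a p-value requires the t distribution, which the standard library does not provide; a statistics package such as SciPy (`scipy.stats.ttest_ind` with `equal_var=False`) would normally be used for that step.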

The aim of an analysis is to draw a conclusion, together with other observations. The researcher might generalize the results to a wider phenomenon, if there is no indication of confounding variables "polluting" the results.

If the researcher suspects that the effect stems from a different variable than the independent variable, further investigation is needed to gauge the validity of the results. An experiment is often conducted because the scientist wants to know if the independent variable is having any effect upon the dependent variable. Variables correlating are not proof that there is causation.

Experiments are more often quantitative than qualitative in nature, although qualitative experiments do occur.

Examples of Experiments

This website contains many examples of experiments. Some are not true experiments , but involve some kind of manipulation to investigate a phenomenon. Others fulfill most or all criteria of true experiments.

Here are some examples of scientific experiments:

Social Psychology

- Stanley Milgram Experiment - Will people obey orders, even if clearly dangerous?

- Asch Experiment - Will people conform to group behavior?

- Stanford Prison Experiment - How do people react to roles? Will you behave differently?

- Good Samaritan Experiment - Would You Help a Stranger? - Explaining Helping Behavior

- Law Of Segregation - The Mendel Pea Plant Experiment

- Transforming Principle - Griffith's Experiment about Genetics

- Ben Franklin Kite Experiment - Struck by Lightning

- J J Thomson Cathode Ray Experiment


Oskar Blakstad (Jul 10, 2008). Experimental Research. Retrieved Apr 23, 2024 from Explorable.com: https://explorable.com/experimental-research

You Are Allowed To Copy The Text

The text in this article is licensed under the Creative Commons-License Attribution 4.0 International (CC BY 4.0) .

This means you're free to copy, share and adapt any parts (or all) of the text in the article, as long as you give appropriate credit and provide a link/reference to this page.

That is it. You don't need our permission to copy the article; just include a link/reference back to this page. You can use it freely (with some kind of link), and we're also okay with people reprinting in publications like books, blogs, newsletters, course-material, papers, wikipedia and presentations (with clear attribution).


1.10: Correlational and Experimental Research


- Lumen Learning

Learning Outcomes

- Explain correlational research

- Describe the value of experimental research

Correlational Research

When scientists passively observe and measure phenomena it is called correlational research. Here, researchers do not intervene and change behavior, as they do in experiments. In correlational research, the goal is to identify patterns of relationships, but not cause and effect. Importantly, a single correlation describes the relationship between exactly two variables at a time, no more and no less.

So, what if you wanted to test whether spending money on others is related to happiness, but you don’t have $20 to give to each participant in order to have them spend it for your experiment? You could use a correlational design—which is exactly what Professor Elizabeth Dunn (2008) at the University of British Columbia did when she conducted research on spending and happiness. She asked people how much of their income they spent on others or donated to charity, and later she asked them how happy they were. Do you think these two variables were related? Yes, they were! The more money people reported spending on others, the happier they were.

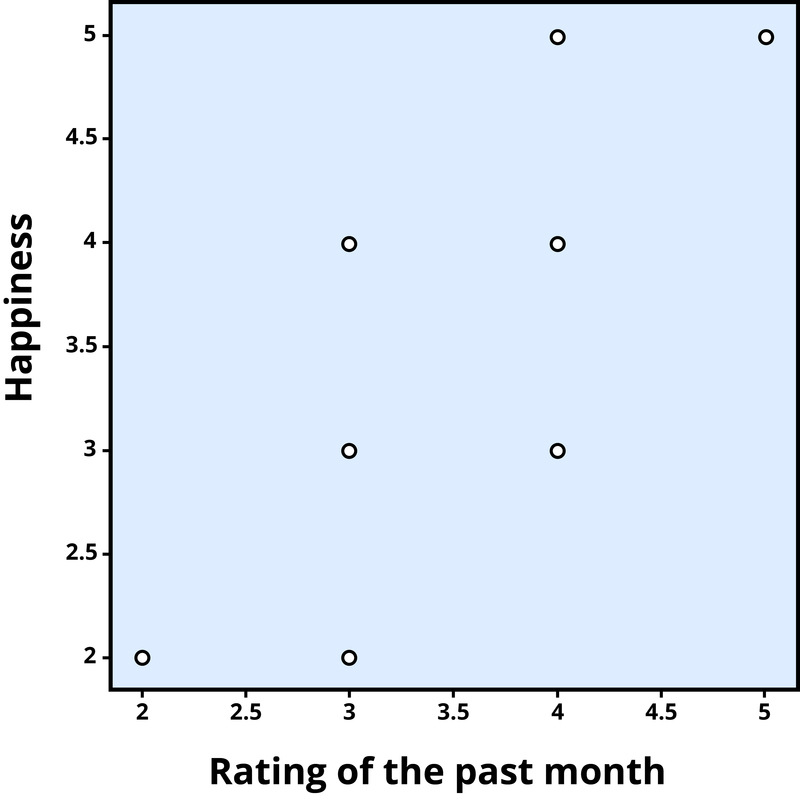

Understanding Correlation

To find out how well two variables correlate, you can plot the relationship between the two scores on what is known as a scatterplot . In the scatterplot, each dot represents a data point. (In this case it’s individuals, but it could be some other unit.) Importantly, each dot provides us with two pieces of information—in this case, information about how good the person rated the past month (x-axis) and how happy the person felt in the past month (y-axis). Which variable is plotted on which axis does not matter.

The association between two variables can be summarized statistically using the correlation coefficient (abbreviated as r). A correlation coefficient provides information about the direction and strength of the association between two variables. For the example above, the direction of the association is positive. This means that people who perceived the past month as being good reported feeling more happy, whereas people who perceived the month as being bad reported feeling less happy.

With a positive correlation , the two variables go up or down together. In a scatterplot, the dots form a pattern that extends from the bottom left to the upper right (just as they do in Figure 1). The r value for a positive correlation is indicated by a positive number (although, the positive sign is usually omitted). Here, the r value is .81.

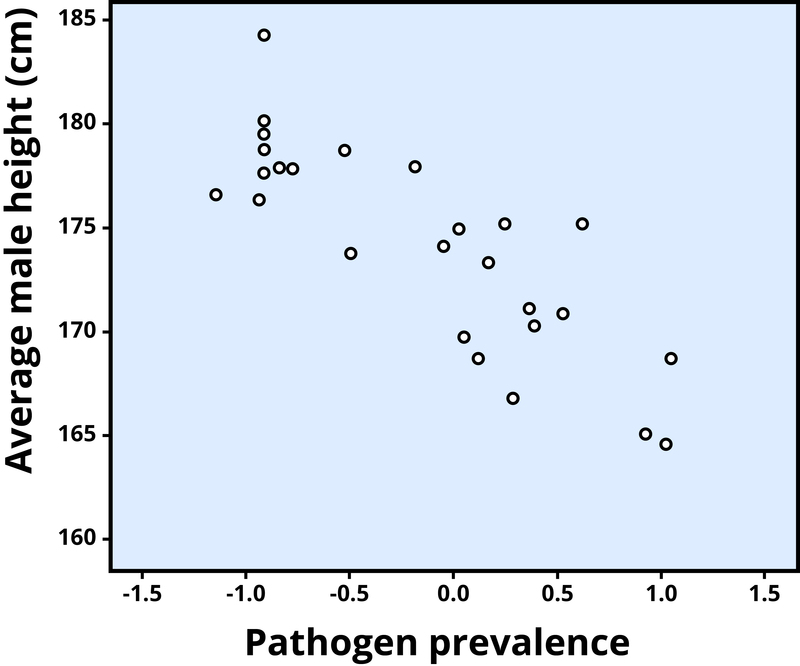

A negative correlation is one in which the two variables move in opposite directions. That is, as one variable goes up, the other goes down. Figure 2 shows the association between the average height of males in a country (y-axis) and the pathogen prevalence (or commonness of disease; x-axis) of that country. In this scatterplot, each dot represents a country. Notice how the dots extend from the top left to the bottom right. What does this mean in real-world terms? It means that people are shorter in parts of the world where there is more disease. The r value for a negative correlation is indicated by a negative number—that is, it has a minus (–) sign in front of it. Here, it is –.83.

The strength of a correlation has to do with how well the two variables align. Recall that in Professor Dunn’s correlational study, spending on others positively correlated with happiness; the more money people reported spending on others, the happier they reported to be. At this point you may be thinking to yourself, I know a very generous person who gave away lots of money to other people but is miserable! Or maybe you know of a very stingy person who is happy as can be. Yes, there might be exceptions. If an association has many exceptions, it is considered a weak correlation. If an association has few or no exceptions, it is considered a strong correlation. A strong correlation is one in which the two variables always, or almost always, go together. In the example of happiness and how good the month has been, the association is strong. The stronger a correlation is, the tighter the dots in the scatterplot will be arranged along a sloped line.

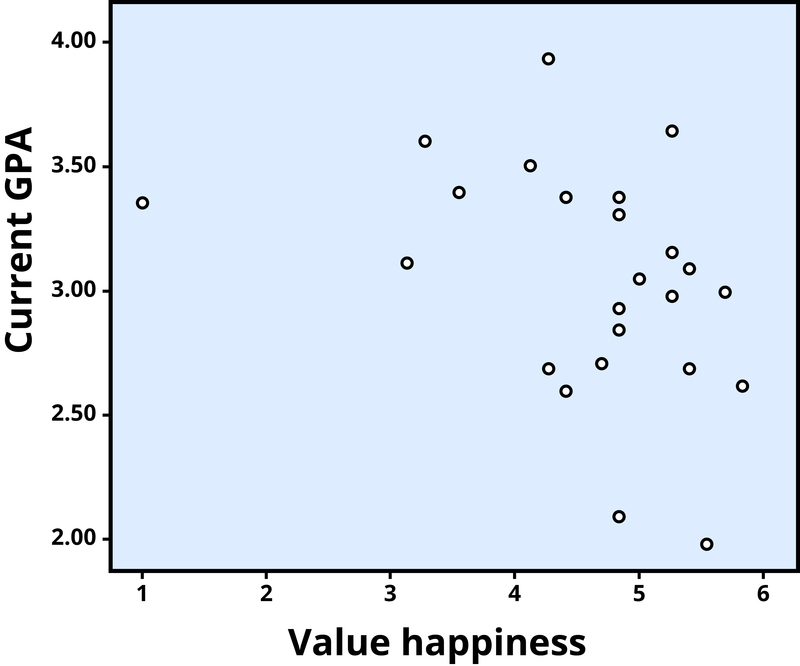

The r value of a strong correlation will have a high absolute value (a perfect correlation has an absolute value of the whole number one, or 1.00). In other words, you disregard whether there is a negative sign in front of the r value, and just consider the size of the numerical value itself. If the absolute value is large, it is a strong correlation. A weak correlation is one in which the two variables correspond some of the time, but not most of the time. Figure 3 shows the relation between valuing happiness and grade point average (GPA). People who valued happiness more tended to earn slightly lower grades, but there were lots of exceptions to this. The r value for a weak correlation will have a low absolute value. If two variables are so weakly related as to be unrelated, we say they are uncorrelated, and the r value will be zero or very close to zero. In the previous example, is the correlation between height and pathogen prevalence strong? Compared to Figure 3, the dots in Figure 2 are tighter and less dispersed. The absolute value of –.83 is large (closer to one than to zero). Therefore, it is a strong negative correlation.
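The direction and strength described above both come out of one calculation. The sketch below computes Pearson's r from its definition; the seven pairs of scores are invented for illustration and are not the data behind the figures.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical ratings: how good the month was, and how happy the person felt.
month_rating = [1, 2, 3, 4, 5, 6, 7]
happiness = [2, 1, 4, 3, 6, 5, 7]

r = pearson_r(month_rating, happiness)
r_reversed = pearson_r(month_rating, [-h for h in happiness])
print("r =", round(r, 2))                    # sign gives the direction
print("reversed r =", round(r_reversed, 2))  # same strength, opposite direction
print("strength =", round(abs(r), 2))        # absolute value gives the strength
```

Swapping the two arguments leaves r unchanged, which is why it does not matter which variable goes on which axis of the scatterplot.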

Problems with correlation

If generosity and happiness are positively correlated, should we conclude that being generous causes happiness? Similarly, if height and pathogen prevalence are negatively correlated, should we conclude that disease causes shortness? From a correlation alone, we can’t be certain. For example, in the first case, it may be that happiness causes generosity, or that generosity causes happiness. Or, a third variable might cause both happiness and generosity, creating the illusion of a direct link between the two. For example, wealth could be the third variable that causes both greater happiness and greater generosity. This is why correlation does not mean causation—an often repeated phrase among psychologists.
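The third-variable problem can be demonstrated with a small simulation. In the sketch below, wealth drives both generosity and happiness, and the two have no direct link at all; the variable names follow the example above, but the data-generating model, the sample size and the seed are assumptions chosen purely for illustration.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(ss_x * ss_y)


def residuals(ys, xs):
    """What is left of ys after removing a least-squares line on xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return [(y - mean_y) - slope * (x - mean_x) for x, y in zip(xs, ys)]


rng = random.Random(1)
wealth = [rng.gauss(0, 1) for _ in range(5000)]
# Both outcomes depend on wealth plus independent noise, not on each other.
generosity = [w + rng.gauss(0, 1) for w in wealth]
happiness = [w + rng.gauss(0, 1) for w in wealth]

raw_r = pearson_r(generosity, happiness)
partial_r = pearson_r(residuals(generosity, wealth), residuals(happiness, wealth))
print("correlation ignoring wealth:", round(raw_r, 2))
print("correlation after removing wealth:", round(partial_r, 2))
```

The first correlation is clearly positive even though neither variable affects the other; once wealth is accounted for, it drops to roughly zero. In real correlational data the third variable is often unmeasured, which is why this adjustment cannot replace an experiment.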

In this video, University of Pennsylvania psychologist and bestselling author, Angela Duckworth describes the correlational research that informed her understanding of grit.

A TED element has been excluded from this version of the text. You can view it online here: http://pb.libretexts.org/lsdm/?p=66

You can view the transcript for “Grit: The power of passion and perseverance | Angela Lee Duckworth” here (opens in new window) .


Experimental Research

Experiments are designed to test hypotheses (or specific statements about the relationship between variables ) in a controlled setting in efforts to explain how certain factors or events produce outcomes. A variable is anything that changes in value. Concepts are operationalized or transformed into variables in research which means that the researcher must specify exactly what is going to be measured in the study. For example, if we are interested in studying marital satisfaction, we have to specify what marital satisfaction really means or what we are going to use as an indicator of marital satisfaction. What is something measurable that would indicate some level of marital satisfaction? Would it be the amount of time couples spend together each day? Or eye contact during a discussion about money? Or maybe a subject’s score on a marital satisfaction scale? Each of these is measurable but these may not be equally valid or accurate indicators of marital satisfaction. What do you think? These are the kinds of considerations researchers must make when working through the design.

The experimental method is the only research method that can measure cause and effect relationships between variables. Three conditions must be met in order to establish cause and effect. Experimental designs are useful in meeting these conditions:

- The independent and dependent variables must be related. In other words, when one is altered, the other changes in response. The independent variable is something altered or introduced by the researcher; sometimes thought of as the treatment or intervention. The dependent variable is the outcome or the factor affected by the introduction of the independent variable; the dependent variable depends on the independent variable. For example, if we are looking at the impact of exercise on stress levels, the independent variable would be exercise; the dependent variable would be stress.

- The cause must come before the effect. Experiments measure subjects on the dependent variable before exposing them to the independent variable (establishing a baseline). So we would measure the subjects’ level of stress before introducing exercise and then again after the exercise to see if there has been a change in stress levels. (Observational and survey research does not always allow us to look at the timing of these events which makes understanding causality problematic with these methods.)

- The cause must be isolated. The researcher must ensure that no outside, perhaps unknown variables, are actually causing the effect we see. The experimental design helps make this possible. In an experiment, we would make sure that our subjects’ diets were held constant throughout the exercise program. Otherwise, the diet might really be creating a change in stress level rather than exercise.

A basic experimental design involves beginning with a sample (or subset of a population) and randomly assigning subjects to one of two groups: the experimental group or the control group. Ideally, to prevent bias, the participants would be blind to their condition (not aware of which group they are in) and the researchers would also be blind to each participant’s condition (referred to as “double blind”). The experimental group is the group that is going to be exposed to an independent variable or condition the researcher is introducing as a potential cause of an event. The control group is going to be used for comparison and is going to have the same experience as the experimental group but will not be exposed to the independent variable. This helps address the placebo effect, which is that a group may expect changes to happen just by participating. After exposing the experimental group to the independent variable, the two groups are measured again to see if a change has occurred. If so, we are in a better position to suggest that the independent variable caused the change in the dependent variable. In outline, the basic experimental model is: draw a sample, randomly assign subjects to the experimental or control group, measure both groups, expose only the experimental group to the independent variable, and then measure both groups again.
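The comparison at the end of this design can be sketched numerically. The stress scores below are invented for the exercise-and-stress example used earlier, and the function name is hypothetical; the point is only to show how the control group's change is subtracted from the experimental group's change.

```python
import statistics

# Hypothetical stress scores (higher = more stressed), measured before and after.
experimental = {"pre": [30, 28, 35, 32], "post": [24, 25, 28, 27]}  # exercised
control = {"pre": [31, 29, 34, 30], "post": [30, 29, 32, 29]}       # did not


def mean_change(group):
    """Average post-minus-pre change across the subjects in a group."""
    changes = [post - pre for pre, post in zip(group["pre"], group["post"])]
    return statistics.mean(changes)


exp_change = mean_change(experimental)
ctl_change = mean_change(control)
# Change seen in the control group reflects everything except the treatment,
# for example expectation effects or the passage of time.
estimated_effect = exp_change - ctl_change
print("experimental change:", exp_change)
print("control change:", ctl_change)
print("estimated effect of exercise:", estimated_effect)
```

A significance test on the two sets of change scores would normally follow before concluding that the difference is more than chance.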

The major advantage of the experimental design is that of helping to establish cause and effect relationships. A disadvantage of this design is the difficulty of translating much of what concerns us about human behavior into a laboratory setting.

Link to Learning

Have you ever wondered why people make decisions that seem to be in opposition to their longterm best interest? In Eldar Shafir’s TED Talk Living Under Scarcity , Shafir describes a series of experiments that shed light on how scarcity (real or perceived) affects our decisions.


control group: a comparison group that is equivalent to the experimental group, but is not given the independent variable

correlation: the relationship between two or more variables; when two variables are correlated, one variable changes as the other does

correlation coefficient: number from -1 to +1, indicating the strength and direction of the relationship between variables, and usually represented by r

correlational research: research design with the goal of identifying patterns of relationships, but not cause and effect

dependent variable: the outcome or variable that is supposedly affected by the independent variable

double-blind: a research design in which neither the participants nor the researchers know whether an individual is assigned to the experimental group or the control group

experimental group: the group of participants in an experiment who receive the independent variable

experiments: designed to test hypotheses in a controlled setting in efforts to explain how certain factors or events produce outcomes; the only research method that measures cause and effect relationships between variables

hypotheses: specific statements or predictions about the relationship between variables

independent variable: something that is manipulated or introduced by the researcher to the experimental group; treatment or intervention

negative correlation: two variables change in different directions, with one becoming larger as the other becomes smaller; a negative correlation is not the same thing as no correlation

operationalized: concepts transformed into variables that can be measured in research

positive correlation: two variables change in the same direction, both becoming either larger or smaller

scatterplot: a plot or mathematical diagram consisting of data points that represent two variables

variables: factors that change in value

Contributors and Attributions

- Modification, adaptation, and original content. Provided by : Lumen Learning. License : CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

- Psyc 200 Lifespan Psychology. Authored by : Laura Overstreet. Located at : http://opencourselibrary.org/econ-201/ . License : CC BY: Attribution

- Research Designs. Authored by : Christie Napa Scollon. Provided by : Singapore Management University. Project : The Noba Project. License : CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

- Vocabulary and review about correlational research. Provided by : Lumen Learning. Located at : https://courses.lumenlearning.com/waymaker-psychology/wp-admin/post.php?post=1848&action=edit . License : CC BY: Attribution

- Grit: The power of passion and perseverance. Authored by : Angela Lee Duckworth. Provided by : TED. Located at : https://www.ted.com/talks/angela_lee_duckworth_grit_the_power_of_passion_and_perseverance . License : CC BY-NC-ND: Attribution-NonCommercial-NoDerivatives

Experimental Design: Types, Examples & Methods

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Experimental design refers to how participants are allocated to different groups in an experiment. Types of design include repeated measures, independent groups, and matched pairs designs.

Probably the most common way to design an experiment in psychology is to divide the participants into two groups, the experimental group and the control group, and then introduce a change to the experimental group, not the control group.

The researcher must decide how to allocate the sample to the different experimental groups. For example, if there are 10 participants, will all 10 take part in both conditions (i.e., repeated measures), or will the participants be split in half and take part in only one condition each?

Three types of experimental designs are commonly used:

1. Independent Measures

Independent measures design, also known as between-groups, is an experimental design where different participants are used in each condition of the independent variable. This means that each condition of the experiment includes a different group of participants.

This should be done by random allocation, ensuring that each participant has an equal chance of being assigned to each group.

Independent measures involve using two separate groups of participants, one in each condition.

- Con : More people are needed than with the repeated measures design (i.e., more time-consuming).

- Pro : Avoids order effects (such as practice or fatigue) as people participate in one condition only. If a person is involved in several conditions, they may become bored, tired, and fed up by the time they come to the second condition or become wise to the requirements of the experiment!

- Con : Differences between participants in the groups may affect results, for example, variations in age, gender, or social background. These differences are known as participant variables (i.e., a type of extraneous variable).

- Control : After the participants have been recruited, they should be randomly assigned to their groups. This should ensure the groups are similar, on average (reducing participant variables).
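Random allocation for an independent measures design can be sketched as follows. This is a minimal illustration using Python's standard `random` module; the function name `randomly_allocate`, the participant labels, and the fixed seed are assumptions made for the example, not part of any standard procedure.

```python
import random


def randomly_allocate(participants, conditions=("experimental", "control"), seed=None):
    """Shuffle participants, then deal them into conditions in turn.

    Shuffling first gives every participant the same chance of ending up
    in each condition; dealing in turn keeps the group sizes equal
    (or within one of each other).
    """
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, person in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(person)
    return groups


# Hypothetical participant IDs P01..P10.
participants = [f"P{n:02d}" for n in range(1, 11)]
groups = randomly_allocate(participants, seed=42)

for condition, members in groups.items():
    print(condition, sorted(members))
```

Random allocation does not guarantee that the groups are identical on every participant variable; it only makes systematic differences between them unlikely, which is why the groups are described as similar on average.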

2. Repeated Measures Design

Repeated measures design is an experimental design where the same participants take part in each condition of the independent variable. This means that each condition of the experiment includes the same group of participants.

Repeated measures design is also known as within-groups or within-subjects design.

- Pro : As the same participants are used in each condition, participant variables (i.e., individual differences) are reduced.

- Con : There may be order effects. Order effects refer to the order of the conditions affecting the participants’ behavior. Performance in the second condition may be better because the participants know what to do (i.e., practice effect). Or their performance might be worse in the second condition because they are tired (i.e., fatigue effect). This limitation can be controlled using counterbalancing.

- Pro : Fewer people are needed as they participate in all conditions (i.e., saves time).

- Control : To combat order effects, the researcher counterbalances the order of the conditions, that is, alternates the order in which participants perform the different conditions of the experiment.

Counterbalancing

Suppose we used a repeated measures design in which all of the participants first learned words in “loud noise” and then learned them in “no noise.”

We would expect the participants to learn better in “no noise” partly because of order effects, such as practice, and not only because of the absence of noise. However, a researcher can control for order effects using counterbalancing.

The sample would be split into two groups, and the two conditions labelled A (“loud noise”) and B (“no noise”). Group 1 does ‘A’ then ‘B,’ and group 2 does ‘B’ then ‘A.’ This is to balance out order effects.

Although order effects occur for each participant, they balance each other out in the results because they occur equally in both groups.
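The counterbalancing scheme described above can be sketched in Python. This is a minimal illustration for two conditions only; the function name `counterbalance`, the participant labels, and the seed are assumptions made for the example.

```python
import random


def counterbalance(participants, conditions=("loud noise", "no noise"), seed=None):
    """Give half the participants the order A then B, and the other half B then A.

    Participants are shuffled first so that which order a person receives
    is itself decided at random.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    order_ab = tuple(conditions)
    order_ba = tuple(reversed(conditions))
    schedule = {}
    for index, person in enumerate(shuffled):
        schedule[person] = order_ab if index % 2 == 0 else order_ba
    return schedule


# Hypothetical participant IDs P01..P10.
participants = [f"P{n:02d}" for n in range(1, 11)]
schedule = counterbalance(participants, seed=7)

for person in sorted(schedule):
    print(person, "->", " then ".join(schedule[person]))
```

Every participant still completes both conditions; only the order differs, so any practice or fatigue effect falls equally on “loud noise” and “no noise” across the sample.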

3. Matched Pairs Design

A matched pairs design is an experimental design where pairs of participants are matched in terms of key variables, such as age or socioeconomic status. One member of each pair is then randomly assigned to the experimental group and the other member to the control group.

- Con : If one participant drops out, you lose two participants’ data, because the partner’s data can no longer be used.

- Pro : Reduces participant variables because the researcher has tried to pair up the participants so that each condition has people with similar abilities and characteristics.

- Con : Very time-consuming trying to find closely matched pairs.

- Pro : It avoids order effects, so counterbalancing is not necessary.

- Con : Impossible to match people exactly unless they are identical twins!

- Control : Members of each pair should be randomly assigned to conditions. However, this does not solve all these problems.
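One simple way to form matched pairs on a single variable is to rank participants on that variable, pair neighbours in the ranking, and then randomly assign the members of each pair to conditions. The sketch below illustrates this under stated assumptions: the function name `matched_pairs` and the scores are hypothetical, and matching on several variables at once would need a more elaborate procedure.

```python
import random


def matched_pairs(scores, seed=None):
    """Pair participants with adjacent scores, then randomise within each pair.

    `scores` maps each participant to a value on the matching variable.
    Returns the group assignment and a list of anyone left unpaired
    (which happens when the number of participants is odd).
    """
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get)  # lowest to highest score
    unpaired = [ranked.pop()] if len(ranked) % 2 == 1 else []
    assignment = {"experimental": [], "control": []}
    for i in range(0, len(ranked), 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # random assignment within the pair
        assignment["experimental"].append(pair[0])
        assignment["control"].append(pair[1])
    return assignment, unpaired


# Hypothetical scores on the matching variable (illustration only).
scores = {"P1": 12, "P2": 30, "P3": 14, "P4": 28, "P5": 21, "P6": 22}
assignment, unpaired = matched_pairs(scores, seed=3)

for exp, ctl in zip(assignment["experimental"], assignment["control"]):
    print(f"{exp} (score {scores[exp]}) <-> {ctl} (score {scores[ctl]})")
```

The two lists are aligned, so the participant at position i in the experimental group is the matched partner of the participant at position i in the control group.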

Experimental design refers to how participants are allocated to an experiment’s different conditions (or IV levels). There are three types:

1. Independent measures / between-groups : Different participants are used in each condition of the independent variable.

2. Repeated measures / within-groups : The same participants take part in each condition of the independent variable.

3. Matched pairs : Each condition uses different participants, but they are matched in terms of important characteristics, e.g., gender, age, intelligence, etc.

Learning Check

Read about each of the experiments below. For each experiment, identify (1) which experimental design was used; and (2) why the researcher might have used that design.

1. To compare the effectiveness of two different types of therapy for depression, depressed patients were assigned to receive either cognitive therapy or behavior therapy for a 12-week period.

The researchers attempted to ensure that the patients in the two groups had similar severity of depressed symptoms by administering a standardized test of depression to each participant, then pairing them according to the severity of their symptoms.

2. To assess the difference in reading comprehension between 7- and 9-year-olds, a researcher recruited each group from a local primary school. They were given the same passage of text to read and then asked a series of questions to assess their understanding.

3. To assess the effectiveness of two different ways of teaching reading, a group of 5-year-olds was recruited from a primary school. Their level of reading ability was assessed, and then they were taught using scheme one for 20 weeks.

At the end of this period, their reading was reassessed, and a reading improvement score was calculated. They were then taught using scheme two for a further 20 weeks, and another reading improvement score for this period was calculated. The reading improvement scores for each child were then compared.

4. To assess the effect of organization on recall, a researcher randomly assigned student volunteers to two conditions.

Condition one attempted to recall a list of words that were organized into meaningful categories; condition two attempted to recall the same words, randomly grouped on the page.

Experiment Terminology

Ecological validity

The degree to which an investigation represents real-life experiences.

Experimenter effects

These are the ways that the experimenter can accidentally influence the participant through their appearance or behavior.

Demand characteristics

The clues in an experiment that lead the participants to think they know what the researcher is looking for (e.g., the experimenter’s body language).

Independent variable (IV)

The variable the experimenter manipulates (i.e., changes), which is assumed to have a direct effect on the dependent variable.

Dependent variable (DV)

The variable the experimenter measures. This is the outcome (i.e., the result) of a study.

Extraneous variables (EV)

All variables which are not independent variables but could affect the results (DV) of the experiment. Extraneous variables should be controlled where possible.

Confounding variables

Variable(s) that have affected the results (DV), apart from the IV. A confounding variable could be an extraneous variable that has not been controlled.

Random Allocation

Randomly allocating participants to independent variable conditions means that all participants should have an equal chance of taking part in each condition.

The principle of random allocation is to avoid bias in how the experiment is carried out and limit the effects of participant variables.

Order effects

Changes in participants’ performance due to their repeating the same or similar test more than once. Examples of order effects include:

(i) practice effect: an improvement in performance on a task due to repetition, for example, because of familiarity with the task;

(ii) fatigue effect: a decrease in performance of a task due to repetition, for example, because of boredom or tiredness.

Variables in Research | Types, Definition & Examples

Introduction

Variables are fundamental components of research that allow for the measurement and analysis of data. They can be defined as characteristics or properties that can take on different values. In research design, understanding the types of variables and their roles is crucial for developing hypotheses, designing methods, and interpreting results.

This article outlines the types of variables in research, including their definitions and examples, to provide a clear understanding of their use and significance in research studies. By categorizing variables into distinct groups based on their roles in research, their types of data, and their relationships with other variables, researchers can more effectively structure their studies and achieve more accurate conclusions.